1. Background

A Large Language Model (LLM) is a model designed to understand and generate human language, trained on a large amount of text data. It is generally based on the Transformer architecture and has billions of parameters or more, for example GPT-3 (175B) and PaLM (540B).

Three big things are happening in the NLP world:

- ChatGPT: an AI chatbot released by OpenAI in November 2022, based on GPT-3.5

- LLaMA: pre-trained models released by Meta in February 2023, which redefine “big” for large models

- Alpaca: a fine-tuned model released by Stanford in March 2023, demonstrating the feasibility of instruction fine-tuning

The technology behind ChatGPT:

-

GPT models: base model, GPT-3, GPT-3.5-Turbo and GPT4, larger model capacity, requires pre-training on large amount of data.

-

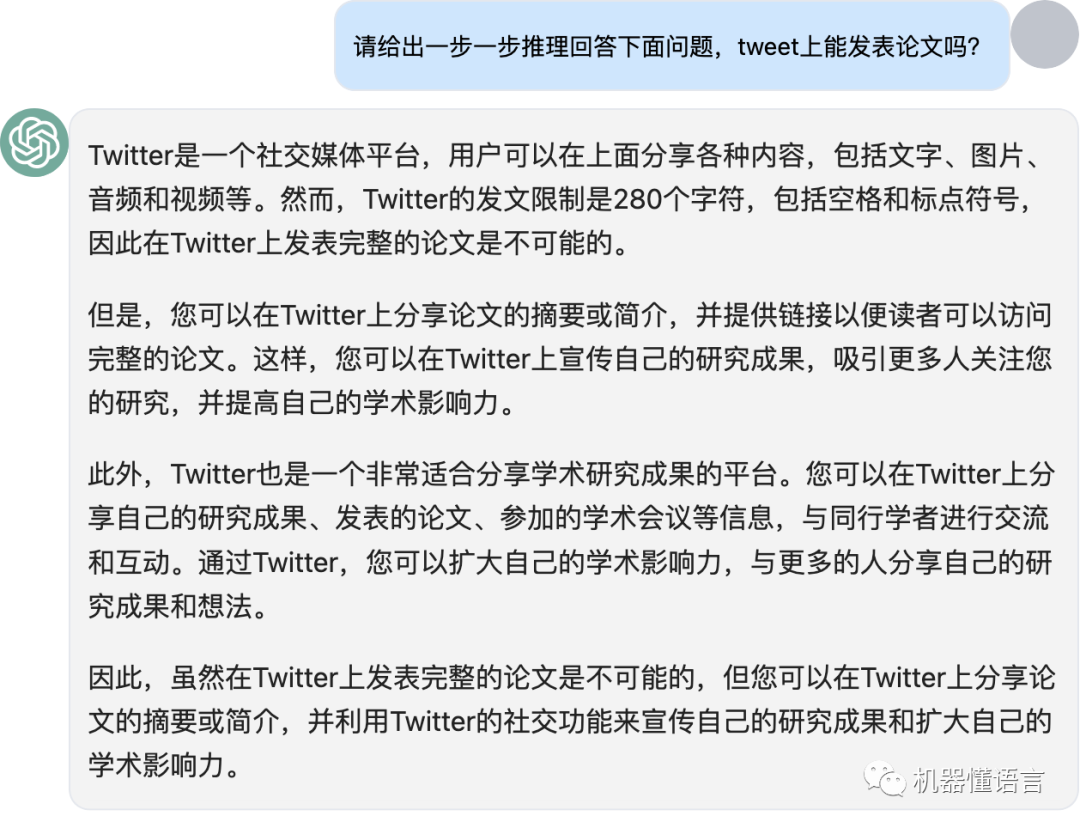

IFT (Instruction Fine-Tuning): Instruction Fine-Tuning, instruction is the purposeful input text passed in by the user, instruction fine-tuning is used to allow the model to learn to follow the user’s instructions. openAI is called SFT (Supervised Fine-Tuning), which is the same meaning.

-

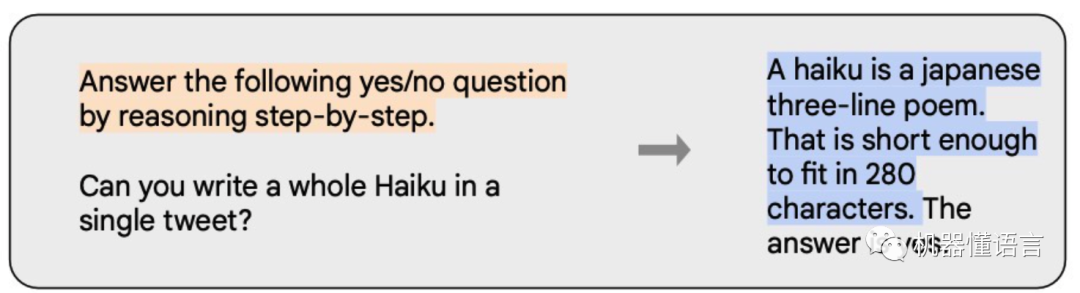

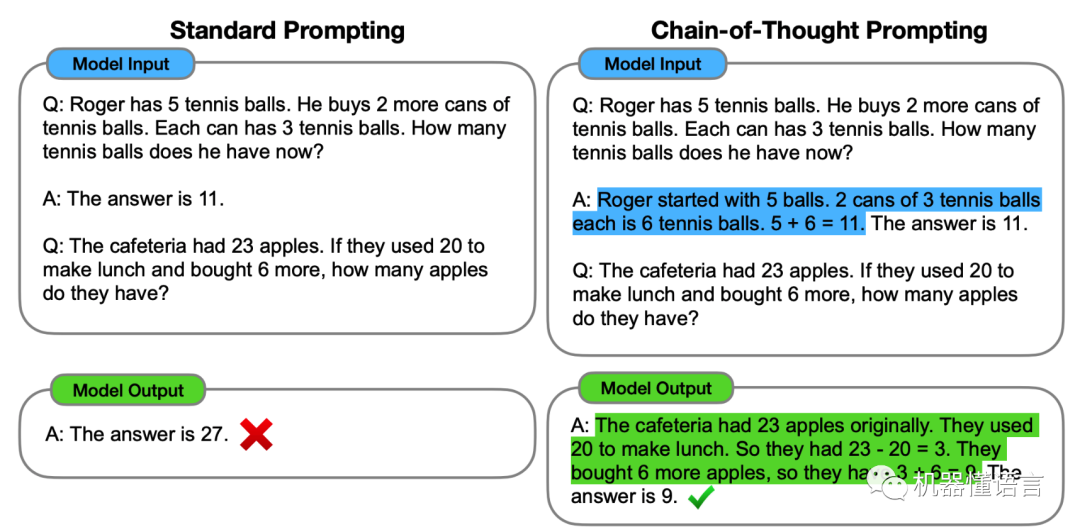

CoT (Chain-of-Thought): at the data level, denotes a special case of the instruction form that contains step-by-step reasoning processes (as shown below). At the model level, it means that the model has the REASONING capability of step-by-step reasoning.

-

RLHF (Reinforcement Learning from Human Feedback): Reinforcement learning to optimize language models based on human feedback.

2. Large model training methodology

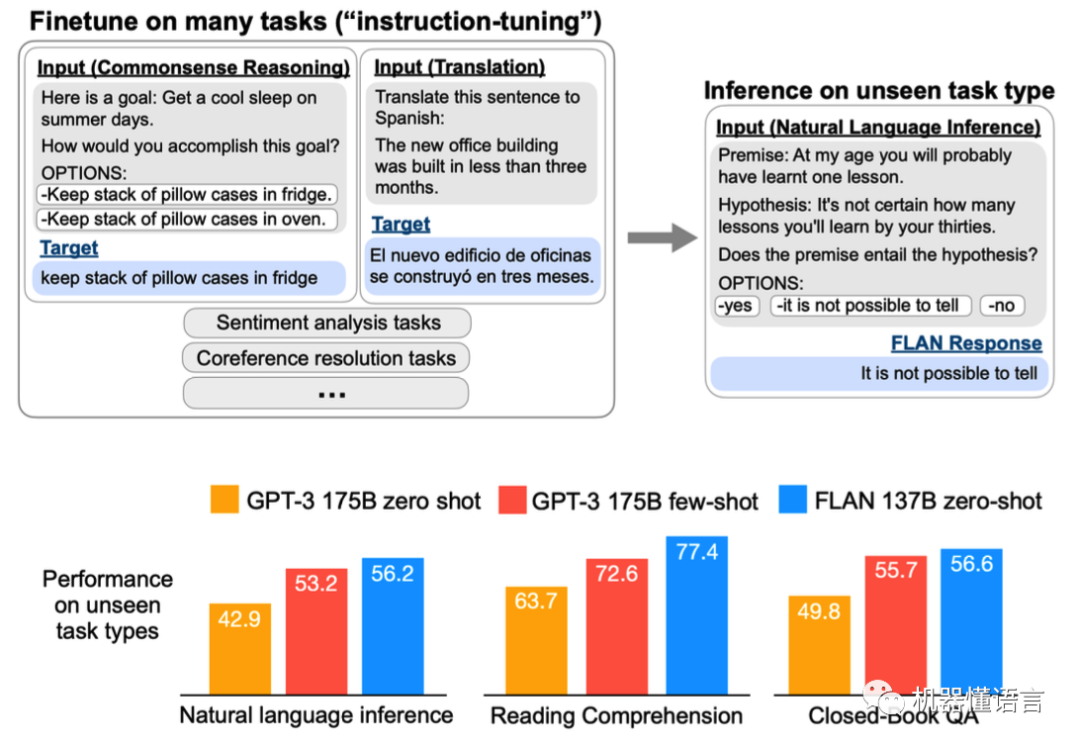

2.1 FLAN

The paper “Finetuned Language Models Are Zero-Shot Learners” (FLAN) explicitly proposes instruction fine-tuning. The essential idea is to convert NLP tasks into natural language instructions before feeding them to the model for training, which improves its performance on zero-shot tasks.

paper: https://arxiv.org/abs/2109.01652

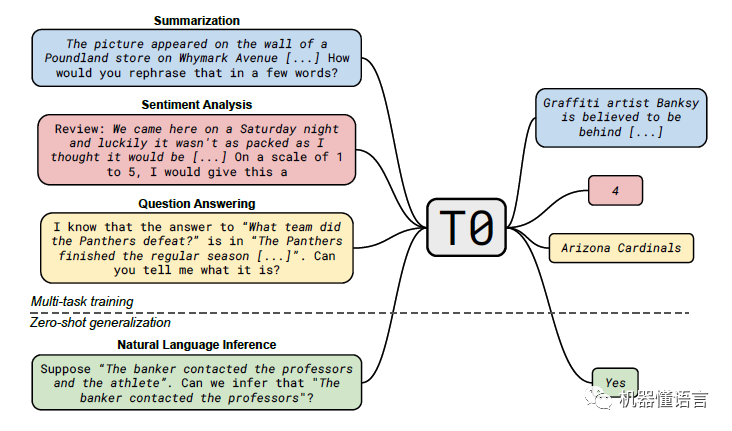
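To make "converting an NLP task into natural language instructions" concrete, here is a minimal sketch for a natural language inference example. The template wording, the `NLI_LABELS` order, and the helper `to_instruction_example` are all illustrative assumptions, not the templates used in the FLAN paper.

```python
# Hypothetical instruction templates for an NLI task (illustrative wording only).
NLI_TEMPLATES = [
    "Premise: {premise}\nHypothesis: {hypothesis}\n"
    "Does the premise entail the hypothesis?\n{options}",
    "{premise}\nBased on the text above, can we conclude that "
    "\"{hypothesis}\"?\n{options}",
]

# Assumed label order for this sketch.
NLI_LABELS = ["yes", "it is not possible to tell", "no"]


def to_instruction_example(example, template):
    """Turn one labeled NLI record into an (input, target) text pair."""
    options = "OPTIONS:\n" + "\n".join(f"- {label}" for label in NLI_LABELS)
    prompt = template.format(
        premise=example["premise"],
        hypothesis=example["hypothesis"],
        options=options,
    )
    return {"input": prompt, "target": NLI_LABELS[example["label"]]}


raw = {
    "premise": "A dog is running in the park.",
    "hypothesis": "An animal is outdoors.",
    "label": 0,
}

# One raw record becomes several instruction-style training examples.
instruction_data = [to_instruction_example(raw, t) for t in NLI_TEMPLATES]
for item in instruction_data:
    print(item["input"], "->", item["target"])
```

Using several templates per task gives the model differently worded instructions for the same underlying task.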

2.2 T0

The paper “Multitask Prompted Training Enables Zero-shot Task Generalization” (T0) explores how the zero-shot generalization ability of large models arises, and demonstrates that it can be induced through explicit multi-task prompted training.

paper: https://arxiv.org/abs/2110.08207

1. With multi-task prompted training, a model shows stronger zero-shot ability than a model of the same parameter count without it.

2. The paper compares the zero-shot performance of the T0 and GPT-3 models:

a. T0 was found to exceed GPT-3 in 9 of the 11 data sets;

b. Neither T0 nor GPT-3 is trained on natural language inference (NLI), but T0 outperforms GPT-3 on all NLI datasets.

2.3 Flan-T5

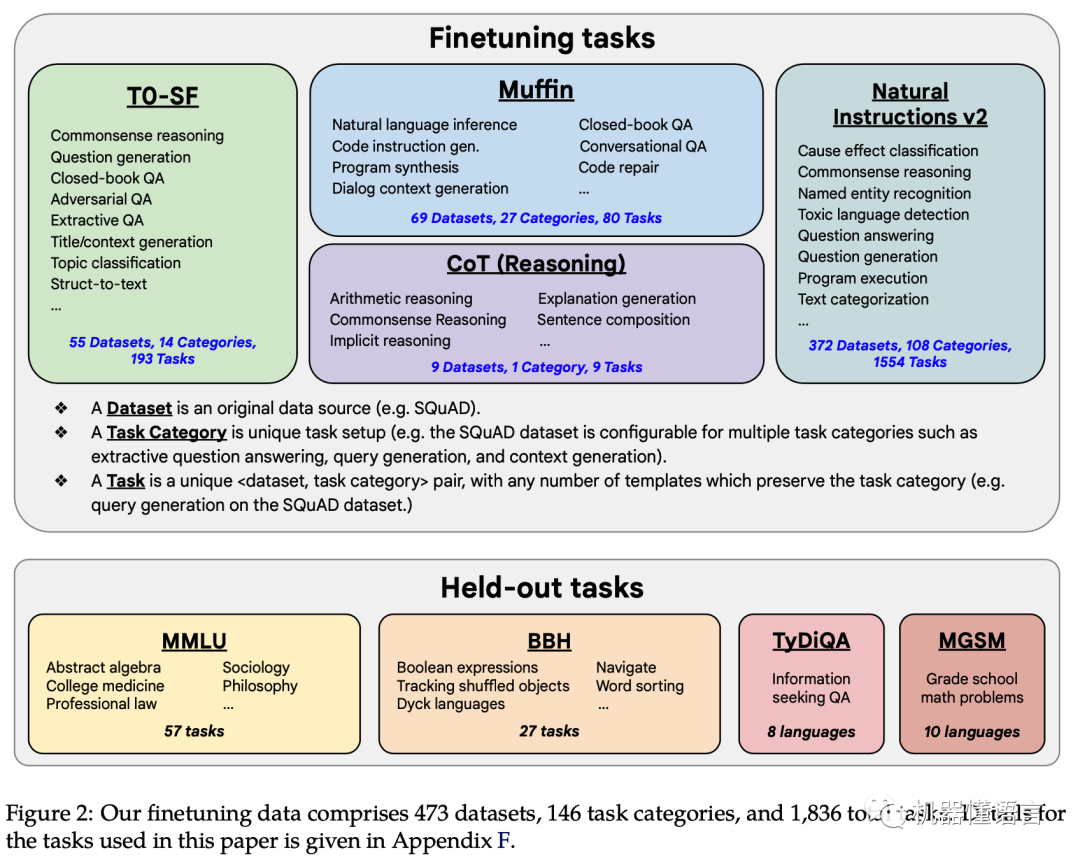

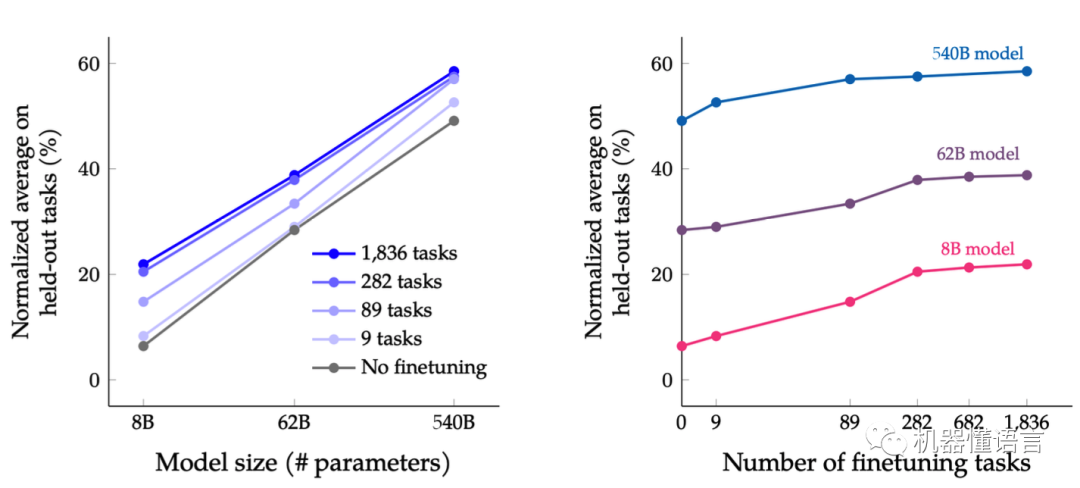

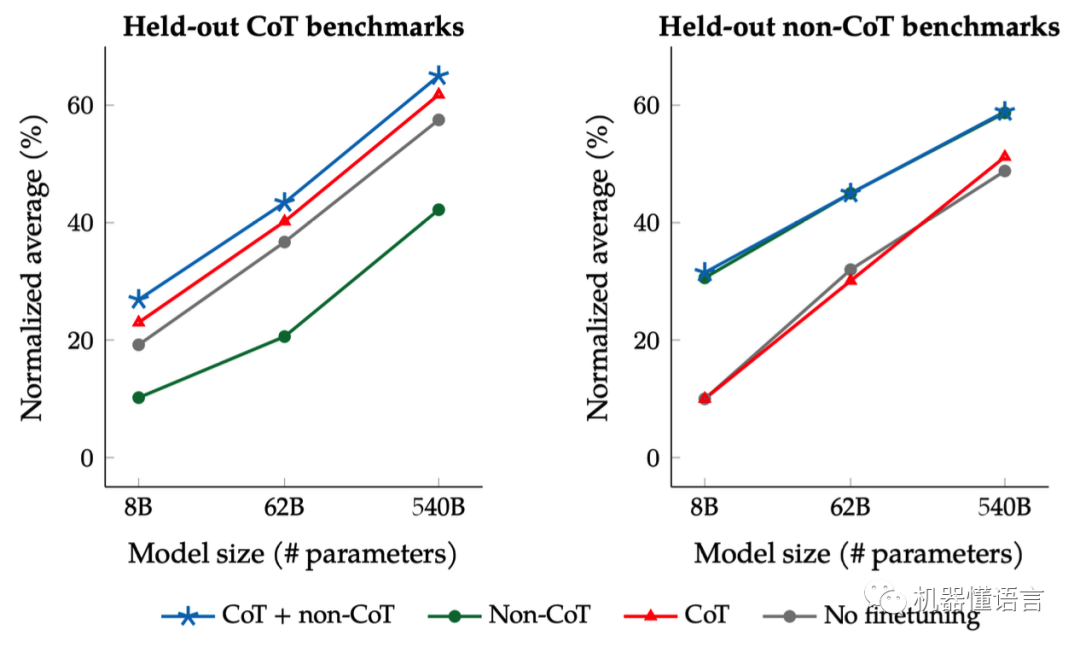

The paper “Scaling Instruction-Finetuned Language Models” (Flan-T5) proposes a multi-task fine-tuning scheme (Flan). By fine-tuning on a very large collection of tasks, it gives language models strong generalization performance, to the point where a single model performs well on more than 1,800 NLP tasks. This means the model can be used directly on almost all NLP tasks, realizing “one model for ALL tasks”, which is very appealing.

paper: https://arxiv.org/abs/2210.11416

The Flan-T5 experiments support the following conclusions:

- Scaling the number of tasks: fine-tuning works better with more tasks

- Scaling the model size: the more model parameters, the better

- Fine-tuning on chain-of-thought (CoT) data: chain-of-thought data enhances reasoning

2.4 Chain-of-Thought (CoT)

● Few-shot CoT

The paper “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models” proposes Chain-of-Thought (CoT) prompting to improve the reasoning of large models on tasks such as arithmetic, commonsense, and symbolic reasoning.

paper: https://arxiv.org/abs/2201.11903

CoT is inspired by the human reasoning process. The authors imitate that process by including a chain of thought in the prompt, which elicits the model’s ability to reason: working through several intermediate reasoning steps leads to the correct final answer.

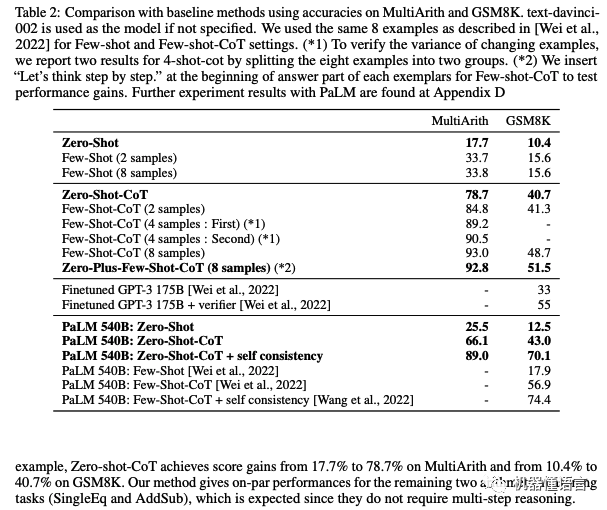
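A few-shot CoT prompt can be sketched as plain string assembly: each exemplar carries its reasoning before the answer, and the new question is left open for the model to continue. The exemplar text and the helper `build_few_shot_cot_prompt` below are made up for illustration and are not taken from the paper.

```python
def build_few_shot_cot_prompt(exemplars, question):
    """Concatenate worked examples (with reasoning) followed by the new question."""
    parts = []
    for ex in exemplars:
        parts.append(
            f"Q: {ex['question']}\n"
            f"A: {ex['rationale']} The answer is {ex['answer']}."
        )
    # The final answer slot is left empty so the model writes its own reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical exemplar with an explicit chain of thought.
exemplars = [
    {
        "question": "A shelf holds 3 boxes with 4 pens each. "
                    "2 pens are removed. How many pens remain?",
        "rationale": "3 boxes of 4 pens is 12 pens. 12 - 2 = 10.",
        "answer": "10",
    }
]

prompt = build_few_shot_cot_prompt(
    exemplars,
    "A farm has 5 pens with 6 sheep each. 4 sheep are sold. How many remain?",
)
print(prompt)
```

A standard few-shot prompt would contain only `The answer is 10.`; the only difference here is the rationale placed in front of it.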

● Zero-shot CoT

The paper “Large Language Models are Zero-Shot Reasoners” explores the reasoning ability of large models. The authors find that for GPT-3 with 175B parameters, simply adding `Let’s think step by step` to the prompt improves the model’s zero-shot ability in both arithmetic reasoning and symbolic reasoning.

paper: https://arxiv.org/abs/2205.11916

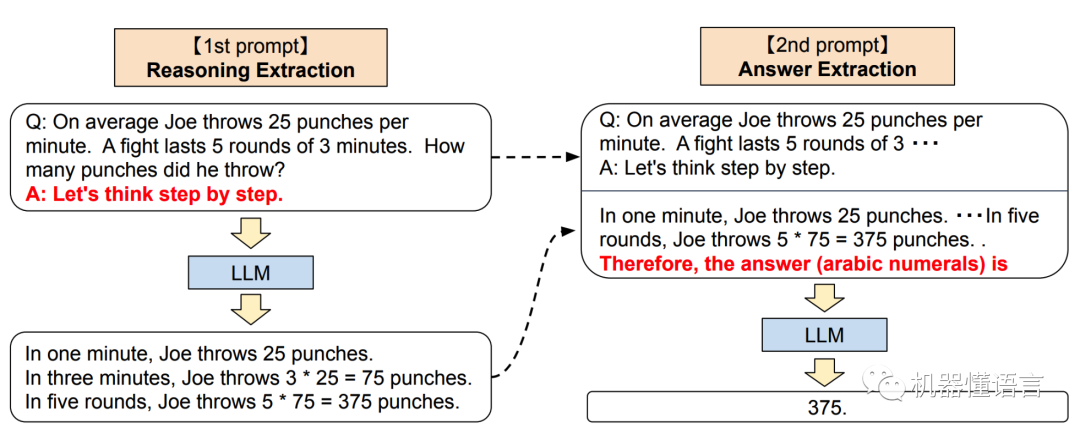

The authors propose Zero-shot-CoT, which works in two stages:

1. Append a textual hint to the original question and use the LLM to generate a reasoning process. (Left side of the figure above)

2. Append the LLM-generated reasoning process to the original question, add a hint for extracting the answer, and use the LLM to generate the final answer. (Right side of the figure above)

Effect: a little coaxing raises accuracy by about 61 percentage points. The paper reports MultiArith accuracy going from 17.7% to 78.7% with a large GPT-3 model (text-davinci-002).
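The two stages can be sketched as two calls to the same model. In this sketch `generate` is a hypothetical callable standing in for any text-completion API, and the exact wording of `ANSWER_TRIGGER` is an assumption; only the `Let's think step by step` hint comes from the text above.

```python
from typing import Callable

REASONING_TRIGGER = "Let's think step by step."
ANSWER_TRIGGER = "Therefore, the answer is"  # assumed wording for stage 2


def zero_shot_cot(question: str, generate: Callable[[str], str]) -> str:
    """Run the two-stage Zero-shot-CoT procedure with a caller-supplied LLM."""
    # Stage 1: elicit a reasoning chain.
    stage1_prompt = f"Q: {question}\nA: {REASONING_TRIGGER}"
    reasoning = generate(stage1_prompt)

    # Stage 2: feed the reasoning back and ask for the final answer only.
    stage2_prompt = f"{stage1_prompt} {reasoning}\n{ANSWER_TRIGGER}"
    return generate(stage2_prompt).strip()
```

Any function that maps a prompt string to a completion string can be passed as `generate`, which keeps the sketch independent of a particular provider.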

2.5 Reinforcement Learning from Human Feedback (RLHF)

● InstructGPT

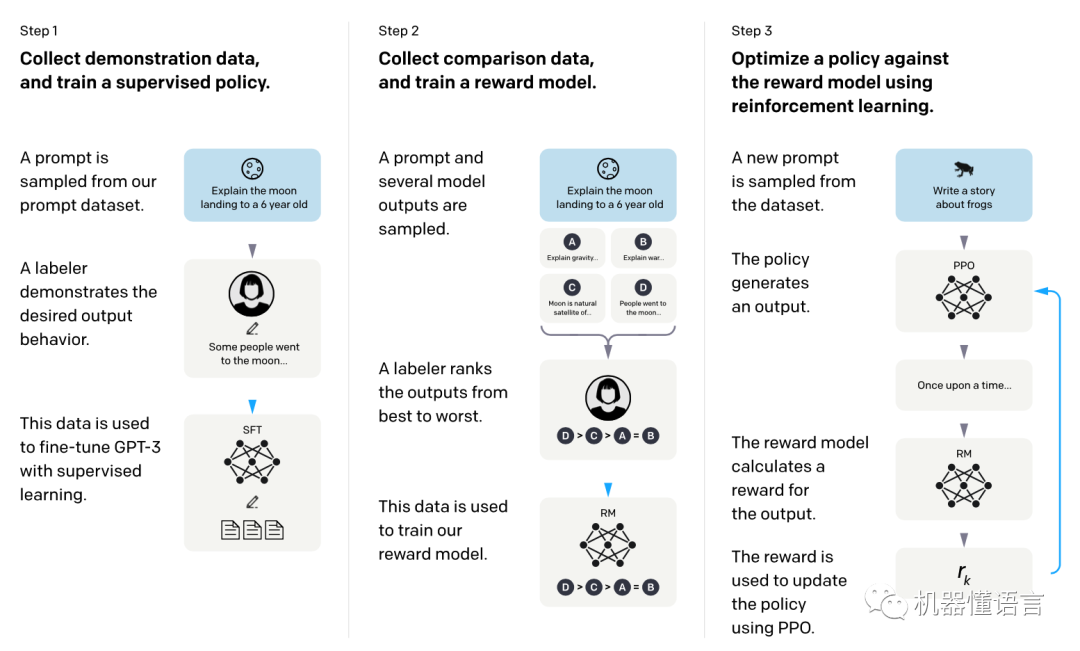

The paper “Training language models to follow instructions with human feedback” introduces InstructGPT, a precursor to ChatGPT. It explores using RLHF (Reinforcement Learning from Human Feedback) to align large models with human intent.

paper: https://arxiv.org/abs/2203.02155

A major problem with the prompt-based zero-shot paradigm for large language models such as GPT is that the pre-trained model is trained to predict the text that follows, which differs somewhat from what a specific task requires, so the generated results do not necessarily match the user’s intent. Some form of fine-tuning is therefore needed to align the two.

The methodology is a three-step process:

1. Supervised training of the policy model on human-written demonstration data

2. Training a reward model on human-ranked comparison data

3. Training the policy model through reinforcement learning, using the reward model

Steps 2 and 3 can be iterated alternately, keeping humans involved in the optimization process.
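For step 2, a common way to train a reward model from ranked comparisons is a pairwise loss that pushes the preferred response’s score above the rejected one. The sketch below assumes the reward scores are already computed as plain floats; the function names are hypothetical and this is not the authors’ code.

```python
import math
from itertools import combinations


def pairwise_loss(reward_preferred, reward_rejected):
    """-log(sigmoid(r_w - r_l)): small when the preferred response scores higher."""
    diff = reward_preferred - reward_rejected
    return math.log1p(math.exp(-diff))


def ranking_loss(rewards_best_to_worst):
    """Average pairwise loss over all pairs from one human ranking.

    The list is ordered from the response the annotator liked most to the one
    they liked least, so in every pair the first element is the preferred one.
    """
    pairs = list(combinations(rewards_best_to_worst, 2))
    return sum(pairwise_loss(w, l) for w, l in pairs) / len(pairs)


# Reward scores that agree with the human ranking give a lower loss
# than scores that contradict it.
print(ranking_loss([3.0, 2.0, 1.0]))
print(ranking_loss([1.0, 2.0, 3.0]))
```

Minimizing this loss only constrains score differences, which is enough for step 3, where the reward model is used to compare candidate outputs of the policy.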

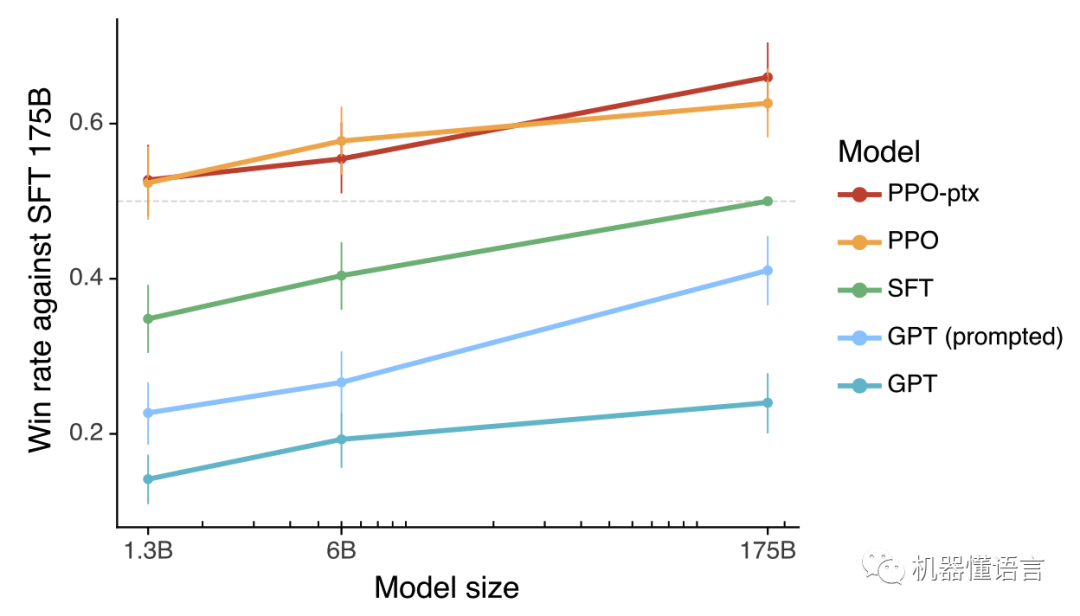

In both the left and right plots, the models trained with reinforcement learning (PPO and PPO-ptx) are far better than the GPT and supervised fine-tuning models.

Here “good” means that the output is preferred by the annotators (truthful, helpful, harmless). The evaluation data and criteria are the same as in training, and all are aligned to human preferences. This is where RLHF excels, so it naturally beats GPT-3.

In addition, the PPO-ptx model adds a pretraining regularization term to reinforcement learning to avoid losing performance on public NLP tasks (the so-called “alignment tax” problem), although here it has a slightly negative effect.

3. LLaMA

The paper “LLaMA: Open and Efficient Foundation Language Models” demonstrates that state-of-the-art models can be trained using only publicly available datasets, by training on the order of 1T tokens.

Unlike Chinchilla, PaLM, or GPT-3, LLaMA uses only publicly available data, which makes the work compatible with open-sourcing, whereas most existing models rely on non-public data.

paper: https://research.facebook.com/publications/llama-open-and-efficient-foundation-language-models/

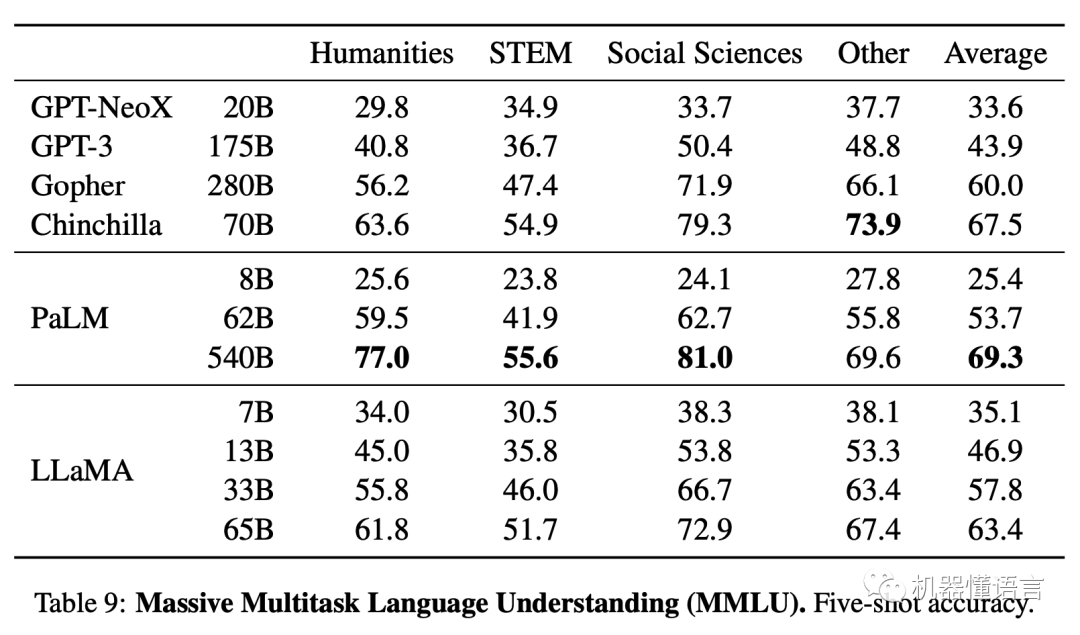

3.1 Performance

● LLaMA-13B > GPT-3 (175B): LLaMA-13B outperforms GPT-3 (175B) on most benchmarks.

● LLaMA-65B ≈ PaLM-540B: LLaMA-65B is competitive with the best models, Chinchilla-70B and PaLM-540B.

LLaMA redefines “big” for large models.

3.2 Motivation

1. Open-source models such as OPT (Zhang et al., 2022), GPT-NeoX (Black et al., 2022), BLOOM (Scao et al., 2022), and GLM (Zeng et al., 2022) are not competitive with PaLM-62B or Chinchilla, whereas LLaMA is.

2. The paper “Training Compute-Optimal Large Language Models” found that, for a given compute budget, the best performance comes not from the largest model but from a smaller model trained on more tokens. The LLaMA authors therefore argue that a smaller model trained for longer on more tokens can reach the same quality and is cheaper at inference time.

paper: https://arxiv.org/abs/2203.15556
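A rough back-of-the-envelope calculation shows why a smaller model trained on more tokens is attractive. The sketch relies on assumptions that are not from this article: the common approximations of about 6·N·D FLOPs for training and about 2·N FLOPs per generated token for inference, a figure of roughly 300B training tokens for GPT-3, and roughly 1T for LLaMA-13B. Treat the outputs as order-of-magnitude estimates only.

```python
def training_flops(n_params, n_tokens):
    """Approximate training cost: ~6 FLOPs per parameter per token (assumed rule of thumb)."""
    return 6.0 * n_params * n_tokens


def inference_flops_per_token(n_params):
    """Approximate forward-pass cost: ~2 FLOPs per parameter per token (assumed rule of thumb)."""
    return 2.0 * n_params


# (parameters, training tokens); token counts are approximate assumptions.
models = {
    "GPT-3 175B": (175e9, 300e9),
    "LLaMA-13B": (13e9, 1.0e12),
}

for name, (n_params, n_tokens) in models.items():
    print(
        f"{name}: tokens/param = {n_tokens / n_params:.1f}, "
        f"train FLOPs = {training_flops(n_params, n_tokens):.2e}, "
        f"inference FLOPs/token = {inference_flops_per_token(n_params):.2e}"
    )
```

Under these assumptions the smaller model sees far more tokens per parameter and costs roughly an order of magnitude less per generated token, which is the trade-off the motivation above describes.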

3.3 Optimizations

LLaMA also makes several improvements to the Transformer architecture:

● Pre-normalization [GPT-3]: the input of each sub-layer is normalized, using the RMSNorm normalizing function.

● SwiGLU activation function [PaLM].

● Rotary Embeddings [GPTNeo].
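As a minimal sketch of two of the components named above, the following pure-Python code implements RMSNorm and a SwiGLU feed-forward block on a single vector. The weight shapes, the `eps` value, and the function names are assumptions chosen for readability; a real implementation would use a tensor library and batched inputs.

```python
import math


def rms_norm(x, gain, eps=1e-6):
    """Rescale x so its root mean square is about 1, then apply a per-dimension gain."""
    mean_square = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_square + eps)
    return [g * v * inv_rms for v, g in zip(x, gain)]


def silu(z):
    """Swish / SiLU activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: w_down @ (silu(w_gate @ x) * (w_up @ x))."""
    gate = [silu(z) for z in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)


x = [3.0, 4.0]
normed = rms_norm(x, gain=[1.0, 1.0])  # pre-normalization: normalize the sub-layer input

# Toy weights: model width 2, hidden width 3.
w_gate = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_up = [[0.5, 0.5], [1.0, -1.0], [0.0, 1.0]]
w_down = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]
out = swiglu_ffn(normed, w_gate, w_up, w_down)
print(normed, out)
```

Unlike LayerNorm, RMSNorm does not subtract the mean, so it needs only one statistic per vector.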

3.4 Future Work

1. Continue to scale larger models

2. Do instruction tuning and RLHF

4. Fine-tuning methodology

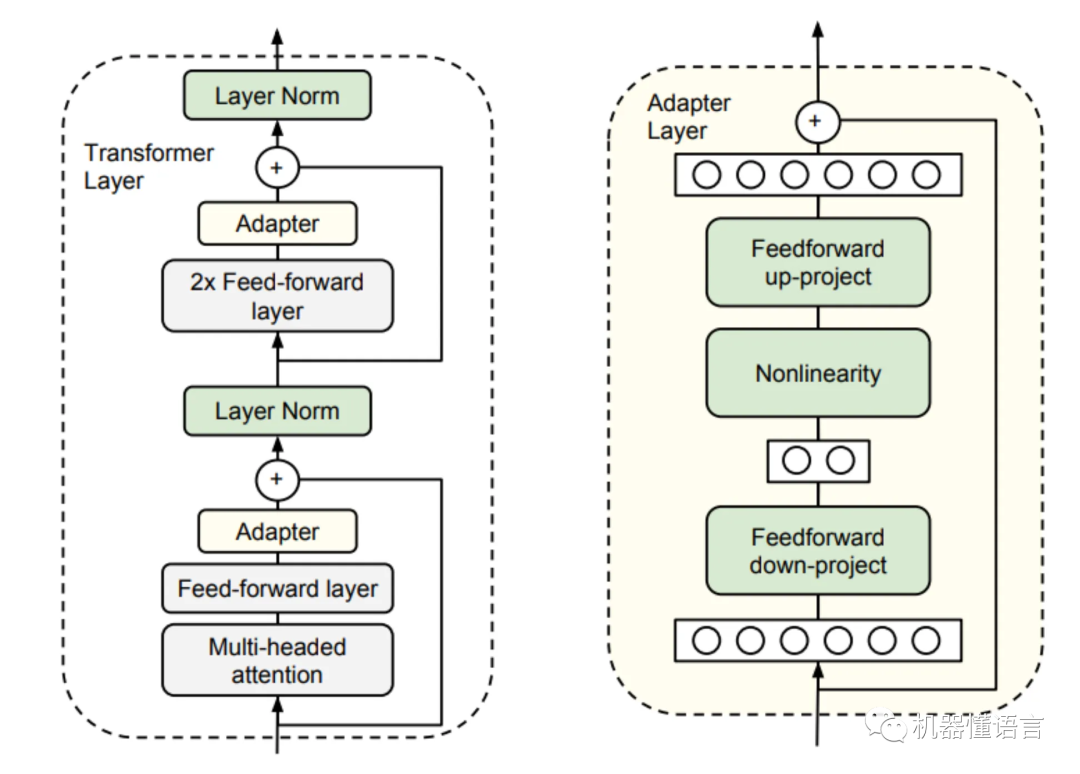

4.1 Adapter

In 2019, the paper “Parameter-Efficient Transfer Learning for NLP” proposed the Adapter scheme, which became the pioneering work in delta tuning.

The main idea is to freeze the parameters of the large pre-trained model rather than fine-tuning them, and to add a small number of trainable parameters to the original network. Specifically, a small new neural network, called an Adapter, is inserted after the attention sub-layer and after the feed-forward sub-layer in each Transformer layer.

The Adapter’s parameters are trained during fine-tuning to adapt to a specific downstream task. This significantly reduces the number of parameters that need to be fine-tuned, improving parameter efficiency and training speed.

As shown in the figure below, an Adapter is added after the attention sub-layer and after the feed-forward sub-layer in each layer, so only a small fraction of the model’s parameters is trained. This approach has been widely used in natural language processing with good results.

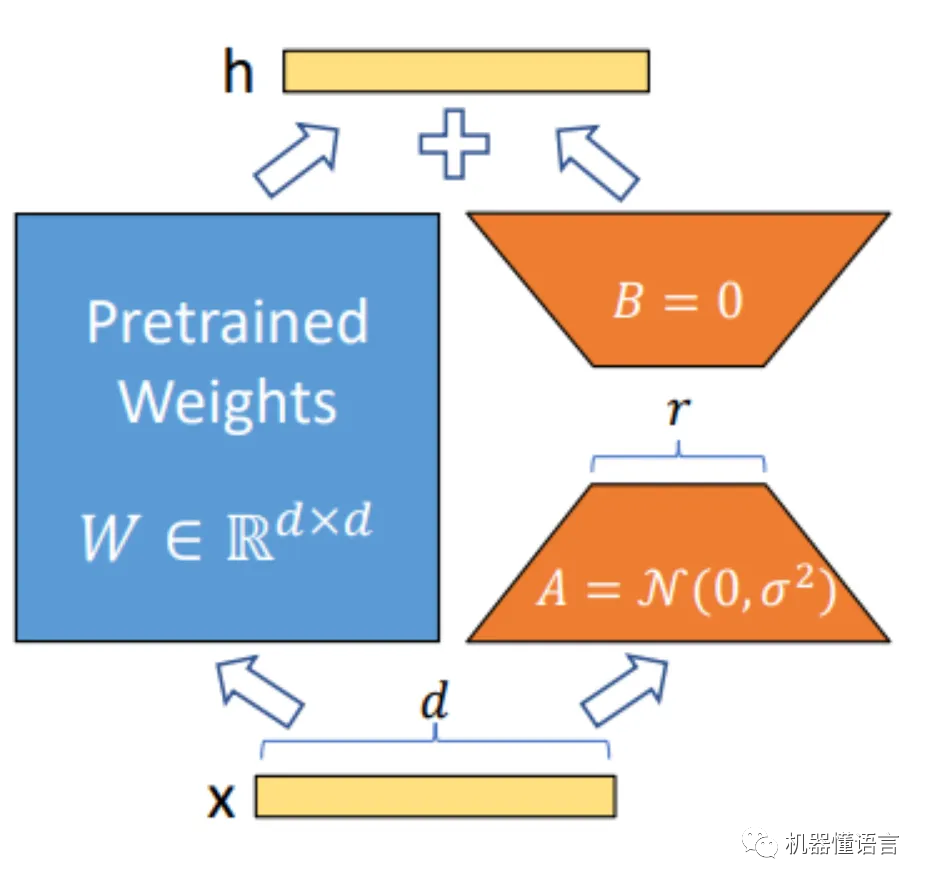
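An Adapter module can be sketched as a small bottleneck network with a residual connection. The bottleneck structure (down-projection, nonlinearity, up-projection, skip connection) follows my understanding of the Adapter paper rather than anything stated in this article, and the ReLU, the omission of biases, and the function names are simplifying assumptions.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def adapter(hidden, w_down, w_up):
    """Bottleneck adapter: hidden + w_up @ relu(w_down @ hidden)."""
    bottleneck = [max(0.0, z) for z in matvec(w_down, hidden)]  # ReLU is an assumption
    update = matvec(w_up, bottleneck)
    # The residual connection keeps the frozen sub-layer output intact.
    return [h + u for h, u in zip(hidden, update)]


def adapter_param_count(d_model, d_bottleneck):
    """Trainable weights in one adapter, ignoring biases."""
    return 2 * d_model * d_bottleneck


hidden = [1.0, -2.0, 0.5]            # output of a frozen sub-layer (toy values)
w_down = [[1.0, 0.0, 0.0]]           # 3 -> 1 down-projection
w_up_zero = [[0.0], [0.0], [0.0]]    # 1 -> 3 up-projection, zero-initialized

# With a zero up-projection the adapter starts out as the identity function.
print(adapter(hidden, w_down, w_up_zero))
print(adapter_param_count(d_model=768, d_bottleneck=64))
```

Because only `w_down` and `w_up` are trained, the number of tuned parameters scales with the bottleneck width rather than with the size of the frozen model.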

4.2 LoRA

In 2021, Microsoft proposed a scheme called LoRA in the paper “LoRA: Low-Rank Adaptation of Large Language Models”. The authors analyzed the Transformer network structure and observed that the weight matrices account for much of the computation, including the Q/K/V projection matrices in the attention layer and the MLP matrices in the feed-forward layer.

paper: https://arxiv.org/abs/2106.09685

LoRA focuses mainly on the attention projection matrices. Its core idea is to add a bypass matrix in parallel with each frozen projection matrix, and to represent that added matrix as the product of two low-rank matrices. This greatly reduces the number of trainable parameters.

Experimental results show that LoRA can significantly improve the speed and efficiency of training without hurting model performance. It has been widely used in natural language processing and has achieved excellent results on several tasks.

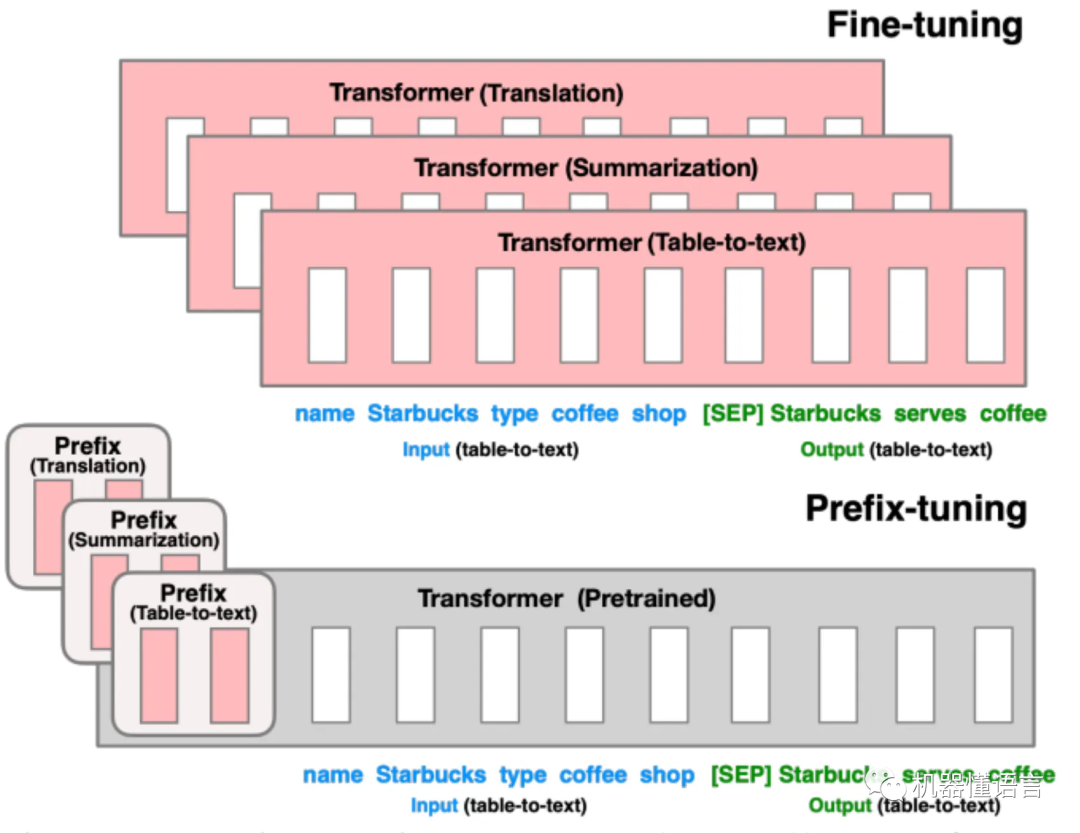
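The parallel low-rank path can be sketched on a single vector as follows. The names `lora_a`, `lora_b`, `alpha`, and `rank`, the `alpha / rank` scaling, and the zero initialization of `lora_b` follow common LoRA practice and are assumptions here; this is a toy illustration, not the reference implementation.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lora_forward(x, w_frozen, lora_a, lora_b, alpha, rank):
    """Compute w_frozen @ x + (alpha / rank) * lora_b @ (lora_a @ x)."""
    base = matvec(w_frozen, x)                     # frozen pre-trained path
    low_rank = matvec(lora_b, matvec(lora_a, x))   # trainable bypass path
    scale = alpha / rank
    return [b + scale * u for b, u in zip(base, low_rank)]


def trainable_params(d_out, d_in, rank):
    """Weights in A (rank x d_in) plus B (d_out x rank)."""
    return rank * (d_in + d_out)


x = [1.0, 1.0]
w_frozen = [[1.0, 2.0], [3.0, 4.0]]
lora_a = [[1.0, 1.0]]        # rank 1: shape (1, 2)
lora_b_zero = [[0.0], [0.0]]  # zero init, so training starts from the frozen model

print(lora_forward(x, w_frozen, lora_a, lora_b_zero, alpha=2.0, rank=1))

# Parameter comparison for one 4096 x 4096 projection at rank 8.
print(4096 * 4096, trainable_params(4096, 4096, rank=8))
```

After training, the product of the two small matrices can be added into the frozen weight, so the bypass need not add any inference latency.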

4.3 Prefix-Tuning

In 2021, researchers at Stanford proposed Prefix-Tuning in the paper “Prefix-Tuning: Optimizing Continuous Prompts for Generation”. The main idea is to leave the original network layers unchanged and instead add a prompt prefix to the input. A prompt can be discrete and concrete; for example, for an NER task we can add “Please find all the entities in the sentence” as the prompt.

Such a discrete prompt can be designed manually or found by automated search. The problem is that the final performance of a manually designed prompt is very sensitive to small changes: adding or removing a word, or changing its position, can cause relatively large changes. Automated prompt search is also relatively costly.

The paper instead uses continuous prompts: it trains a separate sequence of continuous, tunable virtual tokens for each task, which works better than discrete tokens. To increase the number of tunable parameters, the prefix is added not only at the first layer but at every layer of the Transformer. For an encoder-decoder network such as T5, the prefix must be added to both the encoder input and the decoder input.
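One way to picture a per-layer prefix is as extra trainable key/value vectors that every real token can attend to. The sketch below shows single-query attention with and without such a prefix. Treating the prefix as prepended keys and values is an assumption about how the idea is commonly realized, and all names and numbers are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


def attend_with_prefix(query, keys, values, prefix_keys, prefix_values):
    """Same attention, but trainable prefix vectors are prepended to keys and values."""
    return attend(query, prefix_keys + keys, prefix_values + values)


query = [1.0, 0.0]
keys = [[0.0, 0.0]]      # keys/values from the frozen model (toy values)
values = [[0.0, 0.0]]
prefix_keys = [[50.0, 0.0]]    # trainable, task-specific
prefix_values = [[1.0, 1.0]]   # trainable, task-specific

print(attend(query, keys, values))
print(attend_with_prefix(query, keys, values, prefix_keys, prefix_values))
```

Only the prefix vectors would receive gradients during fine-tuning; the projections that produce `query`, `keys`, and `values` stay frozen.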

To summarize, the idea of delta tuning is to freeze the parameters of the original pre-trained model and add a new set of network parameters that are fine-tuned for the downstream task. Where should the new parameters go? Anywhere, as long as it works.

5. Fine-tuning in practice

5.1 Alpaca

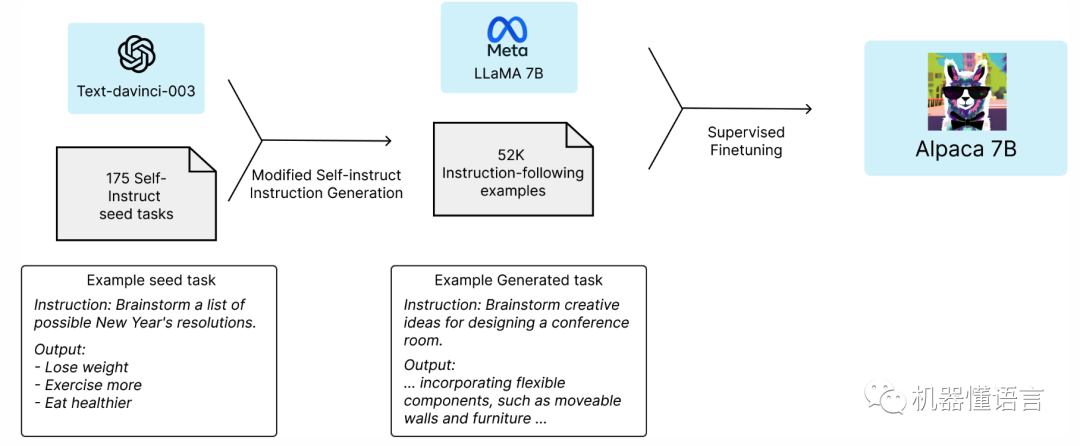

On March 15, 2023, Stanford released the Alpaca model (“Alpaca: A Strong, Replicable Instruction-Following Model”), fine-tuned from Meta’s LLaMA-7B. Using only 52K instruction examples, it performs roughly on par with GPT-3.5, at a cost of less than $600.

paper: Stanford CRFM

There are two main challenges in training such a model:

1. A strong pre-trained base model: Alpaca uses LLaMA-7B;

2. High-quality instruction data: following the automatic instruction-generation method of the SELF-INSTRUCT paper, 52K instruction examples were generated with OpenAI’s text-davinci-003.

Alpaca was followed by a large number of LLaMA-based fine-tuned models, including ChatLLama, FreedomGPT, Vicuna, and Koala, and the camelid family is running out of names.

In essence, ChatGPT is a large language model that achieves its “miracle” by burning money and compute. This brings a problem: the cost of such a model deters many small companies and leaves the field to a handful of giants. Mid-sized companies such as Xiaohongshu or Bilibili can neither afford to train their own large models nor are willing to hand over the data in their content pools, which puts them in a rather awkward position.

Alpaca and Vicuna show another possibility: replicating 90% or even 99% of the capabilities of a large language model at very low cost by means of “knowledge distillation”. This means that small companies can also train their own AI models.

In other words, ChatGPT kicked off the practical deployment of AI, and Vicuna tells us that a world with AI everywhere may be just around the corner.

5.2 Self-Instruct

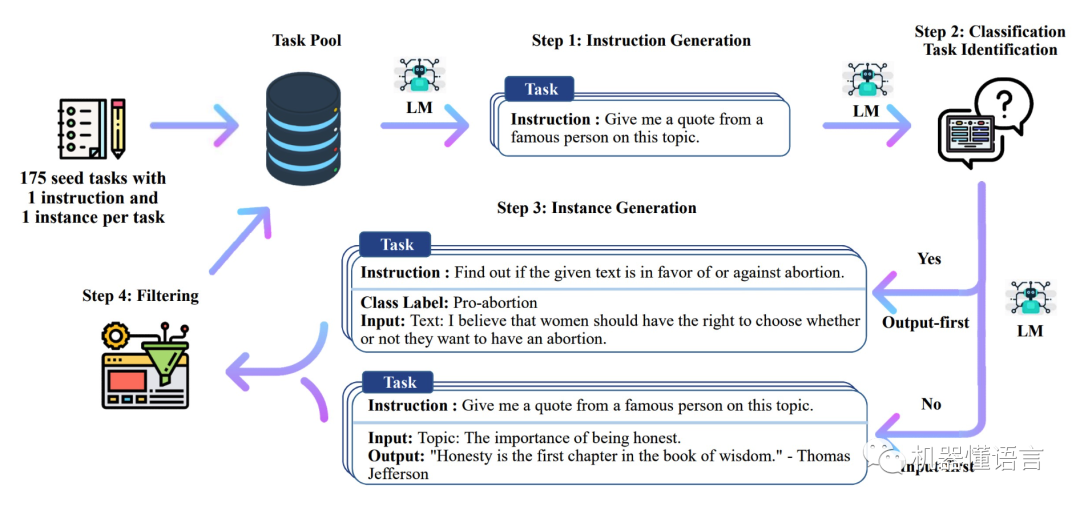

In 2022, the University of Washington proposed the Self-Instruct framework in the paper “SELF-INSTRUCT: Aligning Language Models with Self Generated Instructions”. It uses minimal manual annotation to generate a large amount of data for instruction tuning; a 52K instruction-tuning dataset obtained with this method was also released.

paper: https://arxiv.org/abs/2212.10560

Self-Instruct dataset construction method

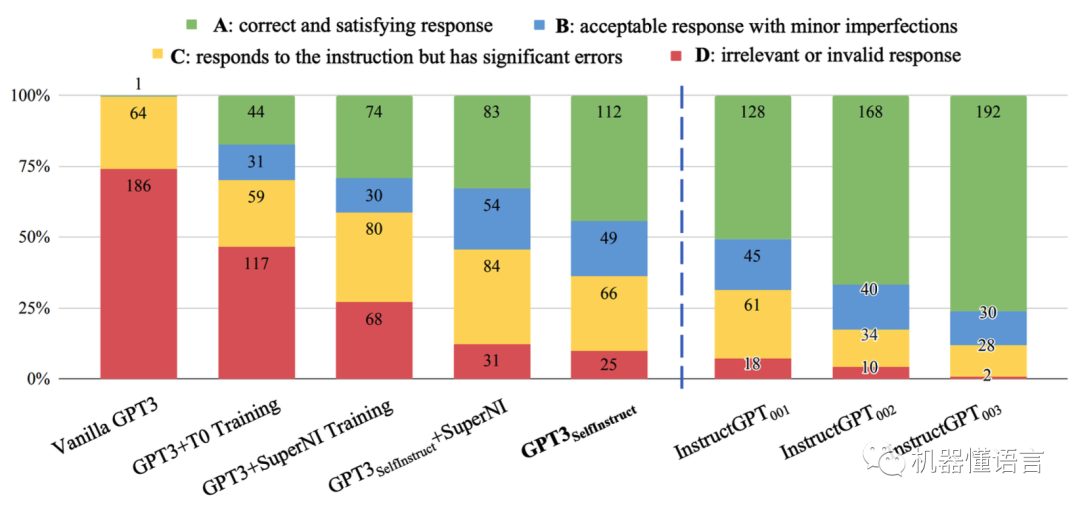

Effect comparison:

Responses are rated from A (best) to D (worst); green is best, red is worst.

It can be seen that:

- The original GPT3 barely responds to user instructions, and all instruction-tuned models improve significantly

- Even though the data generated by Self-Instruct is noisy, GPT3 Self-Instruct is significantly better than GPT3 + T0 Training and GPT3 + SuperNI Training

- GPT3 Self-Instruct is already very close to InstructGPT001

- InstructGPT003 performs best

Instruction data collection methods:

- Follow Alpaca and obtain self-instruct data from GPT-3.5;

- Follow Alpaca and obtain self-instruct data from GPT-4;

- Use ChatGPT conversations shared by users via ShareGPT.

6. Future directions

1. Further model scaling, improved model architecture and training

Improvements in model architecture or the training process may lead to high-quality models with emergent capabilities at lower computational cost.

One direction is to use sparse mixture-of-experts architectures, which are more computationally efficient while keeping the cost per input constant, to use more local learning strategies rather than backpropagation over all weights of the network, and to augment the model with external memory.

2. Scaling up data

Training on a sufficiently large dataset for a sufficiently long time has been shown to be key for language models to acquire syntactic, semantic, and world knowledge. Recently, Hoffmann et al. argued that previous work underestimated both the amount of training data needed to train an optimal model and the importance of training data. Collecting large amounts of data so that a model can be trained for longer allows a wider range of emergent capabilities at a fixed model size.

3. Better prompting

While few-shot prompting is simple and effective, general improvements to prompting will further extend the capabilities of language models.

For example, augmenting few-shot examples with intermediate steps enables the model to perform multi-step reasoning tasks that are not possible with standard prompting. In addition, a better explanation of why prompting works may help elicit emergent capabilities in smaller models. A full understanding of why models work typically lags behind the development and adoption of the technology, and prompting best practices may change as more powerful models are developed.

4. Understanding emergent capabilities

In addition to investigating how to further unlock emergent capabilities, one future research direction is how and why emergent capabilities appear in large language models. Understanding emergence is an important direction that helps us determine what emergent capabilities a model can have and how to train a stronger language model.