0. Preface

Deep learning is a subfield of machine learning, based on traditional neural networks and convolutional neural networks, that can achieve accuracy rates close to or even beyond human levels in areas such as speech recognition, text recognition and image classification.

OpenCV includes a deep learning module (dnn) among its main modules, and it can use both the CPU and the GPU to improve performance.

1. Deep learning and convolutional neural networks

Machine learning algorithms perform outstandingly on real-world problems, which opens up new possibilities for related applications. Deep learning is based on the theory of neural networks, and its rapid growth is mainly due to two factors. First, the available computing power makes it possible to deploy large-scale neural networks that can solve challenging problems: the first generation of neural networks (perceptrons) had only one layer and a few weight parameters to tune, while today's networks can have hundreds of layers and tens of millions of parameters to optimize (hence the term deep networks). Second, the huge amount of available data makes it possible to train these networks, which need thousands or even millions of labeled samples to perform well because of the very large number of parameters to be optimized.

One of the most important branches of deep networks is the convolutional neural network (CNN), which is based on the convolution operation; the parameters to be learned are the values of all the filter kernels that make up the network. These filters are organized into multiple layers: early layers extract basic shapes such as lines and corners, while later layers gradually detect more complex patterns such as eyes, mouths and hair.
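To make the idea of a filter kernel concrete, here is a minimal plain C++ sketch (no OpenCV) of the "valid" 2D convolution that a CNN layer applies. The names Grid and convolve2dValid are hypothetical, and, as in most deep learning frameworks, the kernel is not flipped (strictly speaking this is a cross-correlation). A vertical-edge kernel such as {-1, 0, 1} responds only where intensity changes from left to right, the kind of basic pattern early layers learn.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Row-major 2D grid of floats (hypothetical helper type for this sketch).
using Grid = std::vector<std::vector<float>>;

// "Valid" 2D convolution as used in CNN layers (kernel not flipped).
// Output size is (H - kH + 1) x (W - kW + 1).
Grid convolve2dValid(const Grid& input, const Grid& kernel) {
    assert(!input.empty() && !kernel.empty());
    const std::size_t H = input.size(), W = input[0].size();
    const std::size_t kH = kernel.size(), kW = kernel[0].size();
    assert(H >= kH && W >= kW);
    Grid out(H - kH + 1, std::vector<float>(W - kW + 1, 0.0f));
    for (std::size_t y = 0; y + kH <= H; ++y)
        for (std::size_t x = 0; x + kW <= W; ++x) {
            float sum = 0.0f;  // weighted sum of the window under the kernel
            for (std::size_t i = 0; i < kH; ++i)
                for (std::size_t j = 0; j < kW; ++j)
                    sum += input[y + i][x + j] * kernel[i][j];
            out[y][x] = sum;
        }
    return out;
}
```

On a 3x6 image whose rows are {0, 0, 0, 1, 1, 1}, the 3x3 kernel with rows {-1, 0, 1} yields {0, 3, 3, 0}: zero over flat regions and a strong response around the edge.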

OpenCV includes a deep neural network module, mainly used to import and run deep networks trained with other machine learning libraries (e.g. TensorFlow, Caffe or Torch).

2. Face detection using deep learning

In this section, we'll learn how to implement face detection in OpenCV with a pre-trained deep learning model. We need to download the pre-trained face detection model, import it with OpenCV, and learn how to convert the input image or video frame into the structure the deep learning model expects.

Using deep learning models in OpenCV is very easy: it only requires loading the pre-trained model files and understanding their basic configuration. We first need to download a pre-trained deep neural network model; next, taking face detection as an example, we explain how to use deep neural network models in OpenCV.

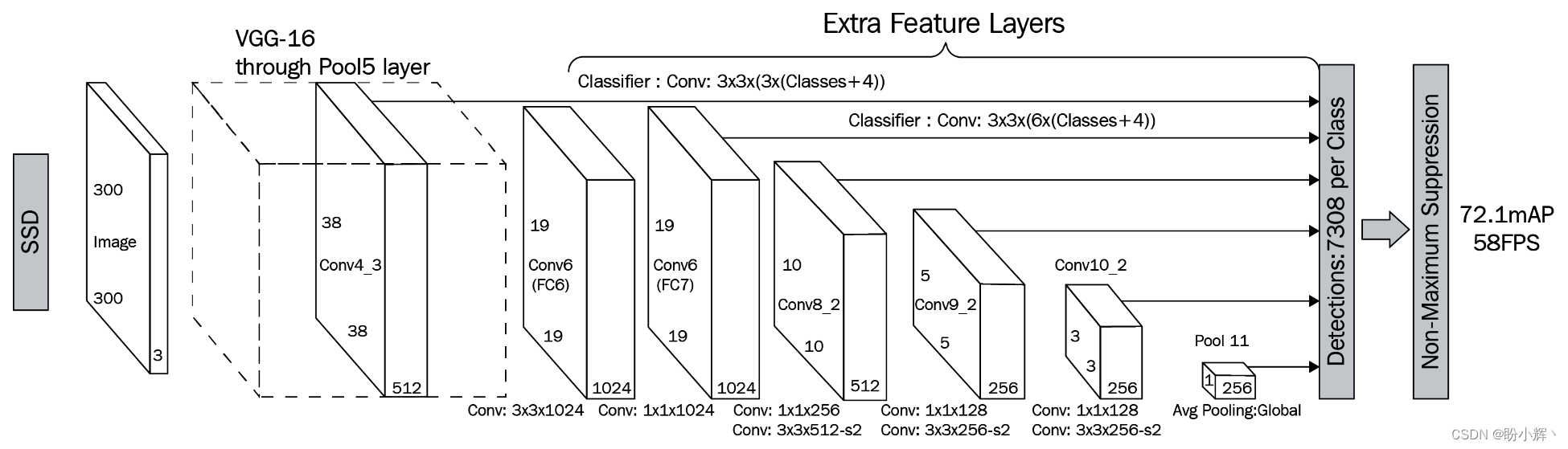

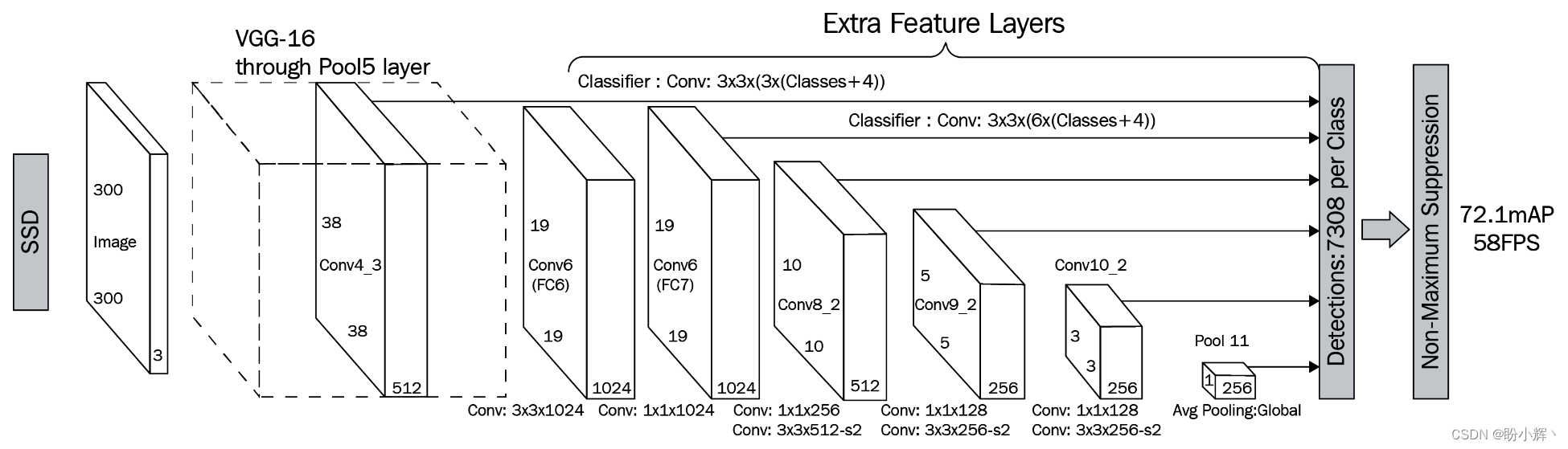

2.1 Introduction to SSD

This section uses the Single-Shot Detector (SSD) DNN algorithm to detect faces in an image.

The SSD algorithm predicts both bounding boxes and categories in a single pass over the image.

The structure of the SSD DNN is as follows:

- The input image has a size of 300x300
- The input image passes through multiple convolutional layers, producing features at different scales
- For each feature map, 3x3 convolutional filters evaluate a set of default bounding boxes
- For each default bounding box, the network predicts bounding box offsets and category probabilities
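The default-box step can be sketched in plain C++ as follows. This illustrates the scheme described in the original SSD paper, not the configuration of the OpenCV face model: the feature-map size, scale, aspect ratios and the variances 0.1/0.2 used when decoding offsets are all assumptions, and CenterBox, defaultBoxes and decodeOffsets are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Box in normalized [0, 1] image coordinates, center form.
struct CenterBox { float cx, cy, w, h; };

// Default (prior) boxes for one f x f feature map: one box per cell and aspect
// ratio, centered at ((j + 0.5) / f, (i + 0.5) / f), size s*sqrt(ar) x s/sqrt(ar).
std::vector<CenterBox> defaultBoxes(int f, float scale, const std::vector<float>& aspectRatios) {
    assert(f > 0 && scale > 0.0f);
    std::vector<CenterBox> boxes;
    for (int i = 0; i < f; ++i)          // feature-map row
        for (int j = 0; j < f; ++j)      // feature-map column
            for (float ar : aspectRatios) {
                const float r = std::sqrt(ar);
                boxes.push_back({(j + 0.5f) / f, (i + 0.5f) / f, scale * r, scale / r});
            }
    return boxes;
}

// Apply predicted offsets loc = (dcx, dcy, dw, dh) to a default box using the
// usual SSD encoding; the variance values are assumed defaults.
CenterBox decodeOffsets(const CenterBox& prior, const float loc[4],
                        float varCenter = 0.1f, float varSize = 0.2f) {
    return {prior.cx + loc[0] * varCenter * prior.w,
            prior.cy + loc[1] * varCenter * prior.h,
            prior.w * std::exp(loc[2] * varSize),
            prior.h * std::exp(loc[3] * varSize)};
}
```

With zero offsets, decodeOffsets returns the default box unchanged; the network's job is to predict the small corrections (and a class probability) for every such box.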

The model architecture is shown below:

SSD is a DNN algorithm that can classify multiple categories; with a modified network, we can use it for face detection. In OpenCV, the most important functions for defining and using a DNN model are blobFromImage, readNetFrom[type], setInput and forward.

The blobFromImage function converts the input image into a blob. It is called as follows:

```cpp
blobFromImage(image, scalefactor, size, mean, swapRB, crop);
```

The meaning of each parameter of blobFromImage is as follows:

- image: input image
- scalefactor: multiplier applied to the image values (after the mean has been subtracted)
- size: spatial size of the output image
- mean: scalar with the mean values subtracted from each channel (mean subtraction); when the image is in BGR order and swapRB = true, the values should be given in (mean-R, mean-G, mean-B) order
- swapRB: flag indicating whether the first and last channels of a 3-channel image should be swapped
- crop: flag indicating whether the image should be cropped after resizing
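As a rough model of what these parameters do, the following plain C++ sketch converts an interleaved 8-bit BGR image that is already at the target size into a single-image NCHW float blob: each value becomes (pixel - mean) * scalefactor, and swapRB exchanges the first and last channels, with the mean values given in the channel order of the output blob. It deliberately skips resizing and cropping, and toBlobNCHW is a hypothetical name, not an OpenCV function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Simplified model of blobFromImage for an image ALREADY at the target size
// (no resize or crop): HWC uint8 BGR -> NCHW float with N = 1.
std::vector<float> toBlobNCHW(const std::vector<std::uint8_t>& hwc, int height, int width,
                              const float mean[3], float scaleFactor, bool swapRB) {
    const int channels = 3;
    assert(hwc.size() == static_cast<std::size_t>(height) * width * channels);
    std::vector<float> blob(hwc.size());
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            for (int c = 0; c < channels; ++c) {
                // Output channel c reads source channel 2 - c when swapping (0 <-> 2)
                const int src = swapRB ? (channels - 1 - c) : c;
                const float v = hwc[(static_cast<std::size_t>(y) * width + x) * channels + src];
                // NCHW offset with N = 1: (c * H + y) * W + x
                blob[(static_cast<std::size_t>(c) * height + y) * width + x] =
                    (v - mean[c]) * scaleFactor;  // subtract the mean, then scale
            }
    return blob;
}
```

For a 1x2 image with pixels (1, 2, 3) and (4, 5, 6), zero mean and scale 1, the blob is {1, 4, 2, 5, 3, 6}: all blue values first, then green, then red, which is the planar layout the network consumes.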

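To load the model, we can use the readNetFrom[type] importers, which import models trained with the following machine learning libraries:

- Caffe (readNetFromCaffe)
- TensorFlow (readNetFromTensorflow)
- Torch (readNetFromTorch)
- ONNX (readNetFromONNX); models from frameworks such as PyTorch and Keras are usually exported to ONNX first and then loaded this way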
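OpenCV also offers a generic readNet() function that picks the importer from the file extensions. The hypothetical helper below sketches that kind of dispatch in plain C++; the extension-to-importer mapping covers common conventions (it also includes Darknet) and is an assumption, not a copy of OpenCV's own logic.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical helper: choose the OpenCV importer name from a model file's extension.
std::string importerFor(const std::string& modelPath) {
    assert(!modelPath.empty());
    const std::size_t dot = modelPath.rfind('.');
    if (dot == std::string::npos) return "unknown";
    const std::string ext = modelPath.substr(dot);  // includes the leading '.'
    if (ext == ".caffemodel" || ext == ".prototxt") return "readNetFromCaffe";
    if (ext == ".pb" || ext == ".pbtxt") return "readNetFromTensorflow";
    if (ext == ".t7" || ext == ".net") return "readNetFromTorch";
    if (ext == ".weights" || ext == ".cfg") return "readNetFromDarknet";
    if (ext == ".onnx") return "readNetFromONNX";
    return "unknown";
}
```

For the model used in this article, importerFor("res10_300x300_ssd_iter_140000.caffemodel") returns "readNetFromCaffe", which is the importer the code below calls directly.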

After importing the deep learning model and creating the input blob, we can use the setInput function of the Net class to feed the blob into the neural network. Its first parameter is the input blob and its second parameter is the name of the input layer (the name must be specified if the network has more than one input layer). Finally, we call forward to run a forward pass on the input blob; it returns the prediction results in cv::Mat format.

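In the face detection algorithm, the returned cv::Mat is a 4-dimensional blob of shape [1, 1, N, 7] with the following structure:

- detection.size[2] is the number of detected objects (N).
- detection.size[3] is the number of values stored for each detection (7), i.e. the bounding box data and the confidence.

The 7 values of each detection are laid out as follows:

- Column 0: index of the image in the input batch
- Column 1: class label of the detected object
- Column 2: confidence of the detected face
- Column 3: x coordinate of the top-left corner of the bounding box
- Column 4: y coordinate of the top-left corner of the bounding box
- Column 5: x coordinate of the bottom-right corner of the bounding box
- Column 6: y coordinate of the bottom-right corner of the bounding box

The code below names these corners xLeftBottom/yLeftBottom and xRightTop/yRightTop, but since image y coordinates grow downward, they are the top-left and bottom-right corners of the box.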

The bounding box coordinates are normalized to the range [0, 1] relative to the image size, so to draw the bounding box rectangle in the image we need to multiply them by the image width and height.
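The decoding described above can be sketched in plain C++ on the flat float buffer that detection.ptr<float>() points to. The PixelRect struct and decodeDetections function are hypothetical, and clamping the normalized coordinates to [0, 1] is an added safeguard that the code later in this article does not perform.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Pixel-space rectangle (x, y = top-left corner) plus its confidence.
struct PixelRect { int x, y, width, height; float confidence; };

// Decode a flat SSD output with 7 floats per detection:
// [image_id, class_id, confidence, x_min, y_min, x_max, y_max], coordinates in [0, 1].
std::vector<PixelRect> decodeDetections(const std::vector<float>& flat, int frameWidth,
                                        int frameHeight, float threshold) {
    const std::size_t stride = 7;
    assert(flat.size() % stride == 0);
    auto clamp01 = [](float v) { return v < 0.0f ? 0.0f : (v > 1.0f ? 1.0f : v); };
    std::vector<PixelRect> faces;
    for (std::size_t i = 0; i + stride <= flat.size(); i += stride) {
        const float conf = flat[i + 2];
        if (conf <= threshold) continue;  // keep only confidence > threshold
        // Scale normalized coordinates by the frame size
        const int x1 = static_cast<int>(clamp01(flat[i + 3]) * frameWidth);
        const int y1 = static_cast<int>(clamp01(flat[i + 4]) * frameHeight);
        const int x2 = static_cast<int>(clamp01(flat[i + 5]) * frameWidth);
        const int y2 = static_cast<int>(clamp01(flat[i + 6]) * frameHeight);
        faces.push_back({x1, y1, x2 - x1, y2 - y1, conf});
    }
    return faces;
}
```

For a 640x480 frame, a detection with confidence 0.9 and box (0.25, 0.5, 0.75, 1.0) becomes the pixel rectangle x = 160, y = 240, width = 320, height = 240, while a detection with confidence 0.2 is dropped at a threshold of 0.5.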

2.2 Performing Face Detection Using SSD

(1) Download the face detector model and save it in the data folder. Two files are needed: the network structure file deploy.prototxt, which defines the network architecture, and the weights file res10_300x300_ssd_iter_140000.caffemodel, which contains the trained network weights.

(2) To use the pre-trained deep neural network (DNN), create the face_detection.cpp file and include the required headers:

```cpp
#include <opencv2/dnn.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <iostream>
#include <sstream>   // stringstream, used for the confidence labels

using namespace cv;
using namespace std;
using namespace cv::dnn;
```

(3) Declare the global variables used by the DNN algorithm, which define the network input, the preprocessing parameters and the names of the files to load:

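```cpp
float confidenceThreshold = 0.5;                                   // minimum confidence to keep a detection
String modelConfiguration = "deploy.prototxt";                     // network structure
String modelBinary = "res10_300x300_ssd_iter_140000.caffemodel";  // trained weights
const size_t inWidth = 300;                                        // network input width
const size_t inHeight = 300;                                       // network input height
const double inScaleFactor = 1.0;                                  // no extra scaling of pixel values
const Scalar meanVal(104.0, 177.0, 123.0);                         // per-channel (B, G, R) means to subtract
```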

(4) Create the main function and use the OpenCV dnn::Net class to load the model:

```cpp
int main(int argc, char **argv) {
    dnn::Net net = readNetFromCaffe(modelConfiguration, modelBinary);
```

(5) Call the empty() function to check whether the DNN has been loaded correctly:

```cpp
    if (net.empty()) {
        cerr << "Can't load network by using the following files: " << endl;
        cerr << "prototxt: " << modelConfiguration << endl;
        cerr << "caffemodel: " << modelBinary << endl;
        cerr << "Models are available here:" << endl;
        cerr << "<OPENCV_SRC_DIR>/samples/dnn/face_detector" << endl;
        cerr << "or here:" << endl;
        cerr << "https://github.com/opencv/opencv/tree/master/samples/dnn/face_detector" << endl;
        exit(-1);
    }
```

(6) If the DNN is loaded correctly, we can start capturing image frames. Check the number of command-line arguments to decide whether to open the default camera or the video file to be processed:

```cpp
    VideoCapture cap;
    if (argc == 1) {
        cap = VideoCapture(0);
        if (!cap.isOpened()) {
            cout << "Couldn't find default camera" << endl;
            return -1;
        }
    } else {
        cap.open(argv[1]);
        if (!cap.isOpened()) {
            cout << "Couldn't open image or video: " << argv[1] << endl;
            return -1;
        }
    }
```

(7) If the video capture object is opened correctly, you can start the main loop to acquire each video frame:

```cpp
    for (;;)
    {
        Mat frame;
        cap >> frame; // get new frame
        if (frame.empty()) {
            waitKey();
            break;
        }
```

(8) Process the image with the DNN algorithm. To prepare an image as DNN input, use the blobFromImage function to convert the OpenCV Mat structure into a DNN framework blob; in OpenCV, the blob is stored in a cv::Mat object:

```cpp
        //! [Prepare blob]
        Mat inputBlob = blobFromImage(frame, inScaleFactor,
                                      Size(inWidth, inHeight), meanVal, false, false); //Convert Mat to batch of images
```

(9) After converting the video frame into a blob, feed it to the DNN and run detection with the forward propagation function forward:

```cpp
        //! [Set input blob]
        net.setInput(inputBlob, "data"); // set network inputs
        //! [Make forward pass]
        Mat detection = net.forward("detection_out"); // Calculate the output
        // View the [1, 1, N, 7] output as an N x 7 matrix that shares the same data
        Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());
```
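The Mat header built over detection.ptr<float>() works because the output blob is stored contiguously in NCHW order. The hypothetical helper below computes the flat offset of element (n, c, h, w) in a blob of shape [N, C, H, W]; for the [1, 1, N, 7] detection blob, element (0, 0, i, j) lands at i * 7 + j, the same offset as row i, column j of an N x 7 row-major matrix.

```cpp
#include <cassert>
#include <cstddef>

// Flat offset of element (n, c, h, w) in a contiguous NCHW blob of shape [N, C, H, W].
// The batch size N is not needed to compute the offset.
std::size_t nchwOffset(std::size_t n, std::size_t c, std::size_t h, std::size_t w,
                       std::size_t C, std::size_t H, std::size_t W) {
    assert(c < C && h < H && w < W);
    return ((n * C + c) * H + h) * W + w;
}
```

For example, the confidence of detection 3 (column 2) sits at nchwOffset(0, 0, 3, 2, 1, N, 7) == 3 * 7 + 2, which is exactly where detectionMat.at<float>(3, 2) reads.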

(10) Draw a rectangle around each detected face in the image and display its confidence:

```cpp
        for (int i = 0; i < detectionMat.rows; i++)
        {
            float confidence = detectionMat.at<float>(i, 2);
            if (confidence > confidenceThreshold)
            {
                // Normalized corners -> pixel coordinates (top-left and bottom-right)
                int xLeftBottom = static_cast<int>(detectionMat.at<float>(i, 3) * frame.cols);
                int yLeftBottom = static_cast<int>(detectionMat.at<float>(i, 4) * frame.rows);
                int xRightTop = static_cast<int>(detectionMat.at<float>(i, 5) * frame.cols);
                int yRightTop = static_cast<int>(detectionMat.at<float>(i, 6) * frame.rows);

                Rect object((int)xLeftBottom, (int)yLeftBottom,
                            (int)(xRightTop - xLeftBottom),
                            (int)(yRightTop - yLeftBottom));
                rectangle(frame, object, Scalar(0, 255, 0));

                // Build the "Face: <confidence>" label
                stringstream ss;
                ss.str("");
                ss << confidence;
                String conf(ss.str());
                String label = "Face: " + conf;

                // White background just above the box, then black text on top of it
                int baseLine = 0;
                Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);
                rectangle(frame, Rect(Point(xLeftBottom, yLeftBottom - labelSize.height),
                                      Size(labelSize.width, labelSize.height + baseLine)),
                          Scalar(255, 255, 255), FILLED);
                putText(frame, label, Point(xLeftBottom, yLeftBottom),
                        FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 0));
            }
        }
```
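The label drawing step boils down to a little arithmetic, sketched below in plain C++ without OpenCV. faceLabel and labelBackground are hypothetical helpers; printing a fixed number of decimals and clamping the label so it stays inside the frame when a face touches the top edge are both additions beyond the code above. The textY field gives the matching putText origin.

```cpp
#include <cassert>
#include <iomanip>
#include <sstream>
#include <string>

// Format the confidence with a fixed number of decimals (2 is an arbitrary choice).
std::string faceLabel(float confidence, int decimals = 2) {
    assert(decimals >= 0);
    std::ostringstream ss;
    ss << "Face: " << std::fixed << std::setprecision(decimals) << confidence;
    return ss.str();
}

// Background box for the label plus the y coordinate of the text origin.
struct LabelBox { int x, y, width, height, textY; };

// Place the label directly above the face box, as in the code above
// (top = boxTop - textHeight, height = textHeight + baseline), but clamp
// the top to 0 so labels for faces at the top edge stay visible.
LabelBox labelBackground(int boxLeft, int boxTop, int textWidth, int textHeight, int baseline) {
    int top = boxTop - textHeight;
    if (top < 0) top = 0;
    return {boxLeft, top, textWidth, textHeight + baseline, top + textHeight};
}
```

When no clamping is needed, textY equals the top of the face box, which matches the Point(xLeftBottom, yLeftBottom) passed to putText above.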

Running the above code produces the test results shown below:

3. Complete code

The complete code of face_detection.cpp is shown below:

```cpp
#include <opencv2/dnn.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <iostream>
#include <sstream>   // stringstream, used for the confidence labels

using namespace cv;
using namespace std;
using namespace cv::dnn;

float confidenceThreshold = 0.5;
String modelConfiguration = "deploy.prototxt";
String modelBinary = "res10_300x300_ssd_iter_140000.caffemodel";
const size_t inWidth = 300;
const size_t inHeight = 300;
const double inScaleFactor = 1.0;
const Scalar meanVal(104.0, 177.0, 123.0);

int main(int argc, char **argv) {
    // Load the Caffe model (network structure + trained weights)
    dnn::Net net = readNetFromCaffe(modelConfiguration, modelBinary);
    if (net.empty()) {
        cerr << "Can't load network by using the following files: " << endl;
        cerr << "prototxt: " << modelConfiguration << endl;
        cerr << "caffemodel: " << modelBinary << endl;
        cerr << "Models are available here:" << endl;
        cerr << "<OPENCV_SRC_DIR>/samples/dnn/face_detector" << endl;
        cerr << "or here:" << endl;
        cerr << "https://github.com/opencv/opencv/tree/master/samples/dnn/face_detector" << endl;
        exit(-1);
    }

    // Open the default camera, or the video file given on the command line
    VideoCapture cap;
    if (argc == 1) {
        cap = VideoCapture(0);
        if (!cap.isOpened()) {
            cout << "Couldn't find default camera" << endl;
            return -1;
        }
    } else {
        cap.open(argv[1]);
        if (!cap.isOpened()) {
            cout << "Couldn't open image or video: " << argv[1] << endl;
            return -1;
        }
    }

    for (;;)
    {
        Mat frame;
        cap >> frame; // get new frame
        if (frame.empty()) {
            waitKey();
            break;
        }

        //! [Prepare blob]
        Mat inputBlob = blobFromImage(frame, inScaleFactor,
                                      Size(inWidth, inHeight), meanVal, false, false); //Convert Mat to batch of images
        //! [Set input blob]
        net.setInput(inputBlob, "data"); // set network inputs
        //! [Make forward pass]
        Mat detection = net.forward("detection_out"); // Calculate the output
        Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());

        for (int i = 0; i < detectionMat.rows; i++)
        {
            float confidence = detectionMat.at<float>(i, 2);
            if (confidence > confidenceThreshold)
            {
                int xLeftBottom = static_cast<int>(detectionMat.at<float>(i, 3) * frame.cols);
                int yLeftBottom = static_cast<int>(detectionMat.at<float>(i, 4) * frame.rows);
                int xRightTop = static_cast<int>(detectionMat.at<float>(i, 5) * frame.cols);
                int yRightTop = static_cast<int>(detectionMat.at<float>(i, 6) * frame.rows);

                Rect object((int)xLeftBottom, (int)yLeftBottom,
                            (int)(xRightTop - xLeftBottom),
                            (int)(yRightTop - yLeftBottom));
                rectangle(frame, object, Scalar(0, 255, 0));

                stringstream ss;
                ss.str("");
                ss << confidence;
                String conf(ss.str());
                String label = "Face: " + conf;

                int baseLine = 0;
                Size labelSize = getTextSize(label, FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);
                rectangle(frame, Rect(Point(xLeftBottom, yLeftBottom - labelSize.height),
                                      Size(labelSize.width, labelSize.height + baseLine)),
                          Scalar(255, 255, 255), FILLED);
                putText(frame, label, Point(xLeftBottom, yLeftBottom),
                        FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 0));
            }
        }

        imshow("detections", frame);
        if (waitKey(1) >= 0) break;
    }

    return 0;
}
```

Summary

In this article, we first learned how to construct network input blobs in OpenCV with the cv::dnn::blobFromImage() and cv::dnn::blobFromImages() functions, and then applied a popular deep learning model architecture to an object detection task, building an OpenCV computer vision project through hands-on practice.