Table of Contents

- Blogger’s Boutique Column Navigation

- Note: The source code below runs as-is, and the functions used in each project are analyzed in detail.

- 1. Image project practice

- (i) Bank card number identification — sort_contours(), resize()

- (ii) Document scanning OCR recognition — cv2.getPerspectiveTransform() + cv2.warpPerspective(), np.argmin(), np.argmax(), np.diff()

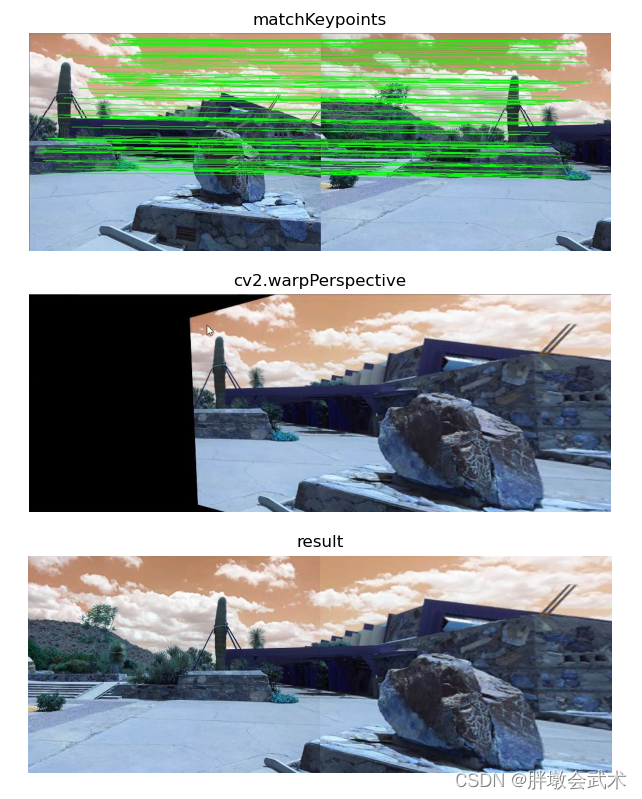

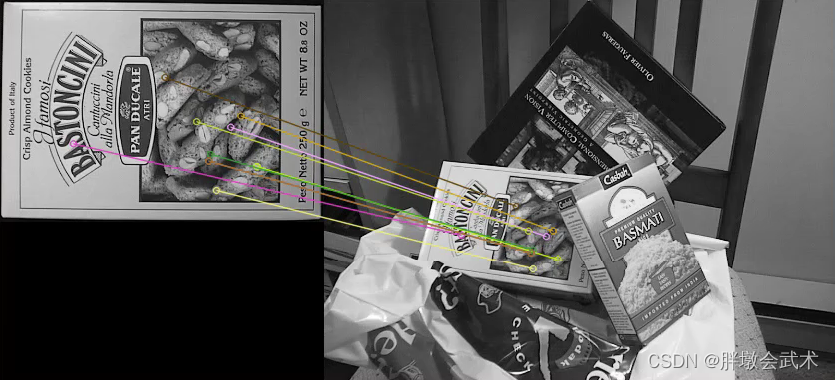

- (iii) Panoramic image stitching – detectAndDescribe(), matchKeypoints(), cv2.findHomography(), cv2.warpPerspective(), drawMatches()

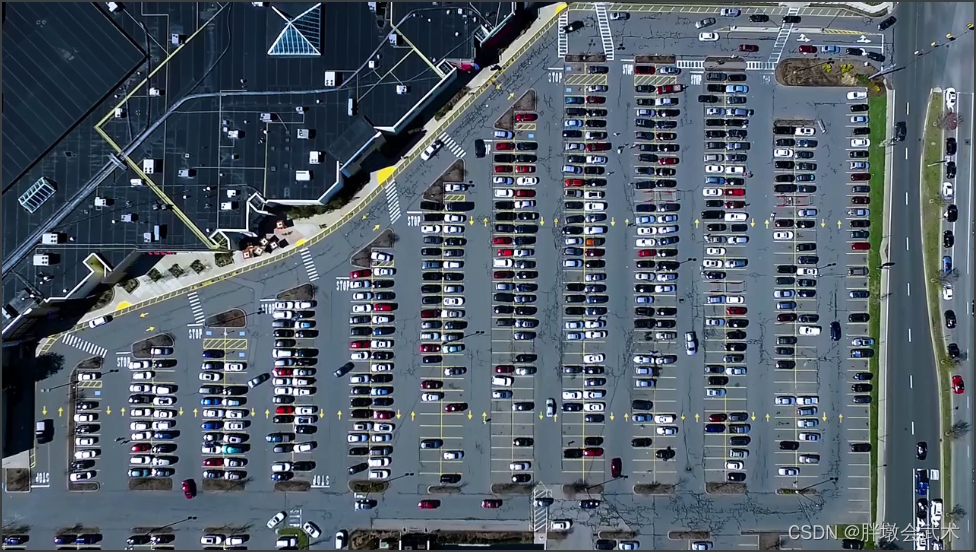

- (iv) Parking lot space detection (Keras-based CNN classification) — pickle.dump(), pickle.load(), cv2.fillPoly(), cv2.bitwise_and(), cv2.circle(), cv2.HoughLinesP(), cv2.line()

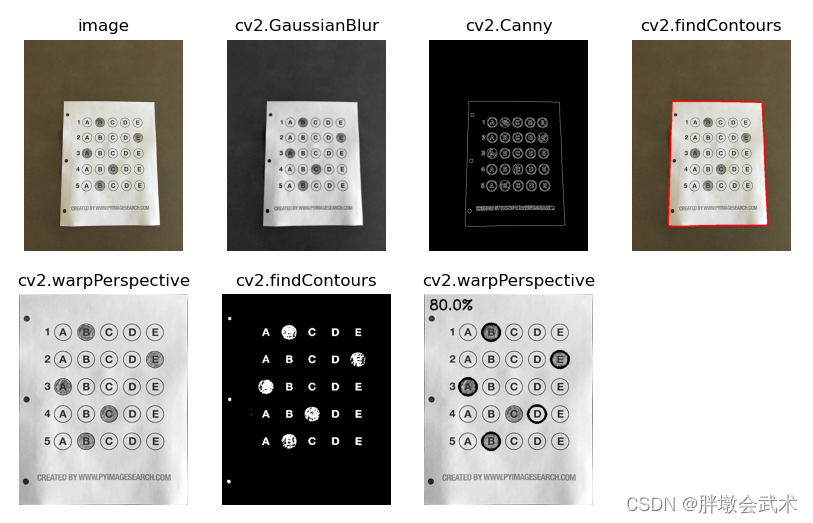

- (v) Answer sheet recognition and grading — cv2.putText(), cv2.countNonZero()

- (vi) Background modeling (dynamic target recognition) — cv2.getStructuringElement(), cv2.createBackgroundSubtractorMOG2()

- (vii) Optical flow estimation (feature point tracking) – cv2.goodFeaturesToTrack(), cv2.calcOpticalFlowPyrLK()

- (viii) Image classification with the DNN module — cv2.dnn.blobFromImage()

- (ix) Rectangular Doodle Drawing Board — cv.namedWindow(), cv.setMouseCallback()

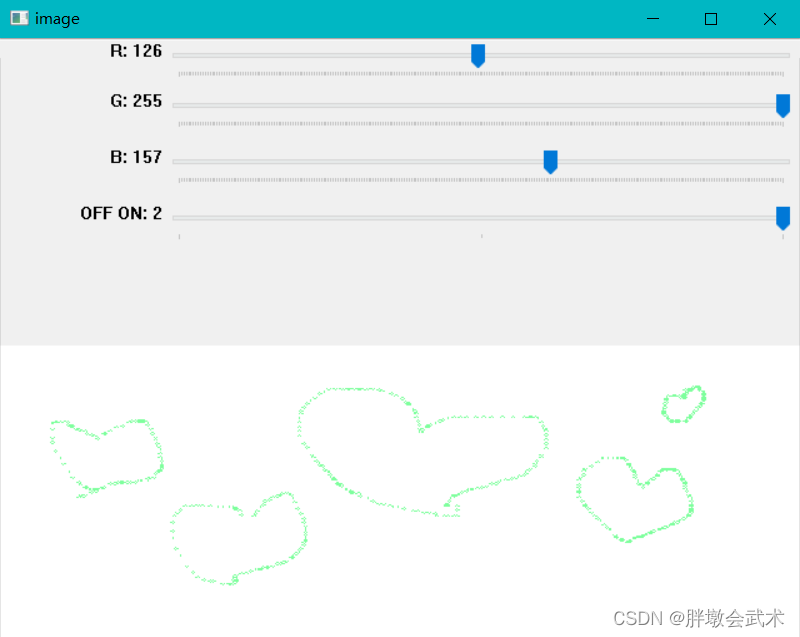

- (x) Creating a trackbar — cv2.createTrackbar(), cv2.getTrackbarPos()

- (xi) Portrait matting and background replacement with a binary mask — np.where(), np.uint8()

- 2. Basic image operations

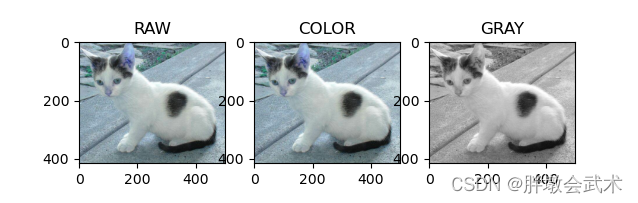

- (i) Image reading, saving and displaying — cv2.imread(), cv2.imwrite(), cv2.imshow()

- (ii) Video reading and processing — cv2.VideoCapture()

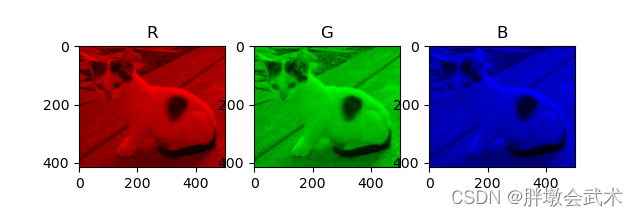

- (iii) Color channels of an image — cv2.split() + cv2.merge()

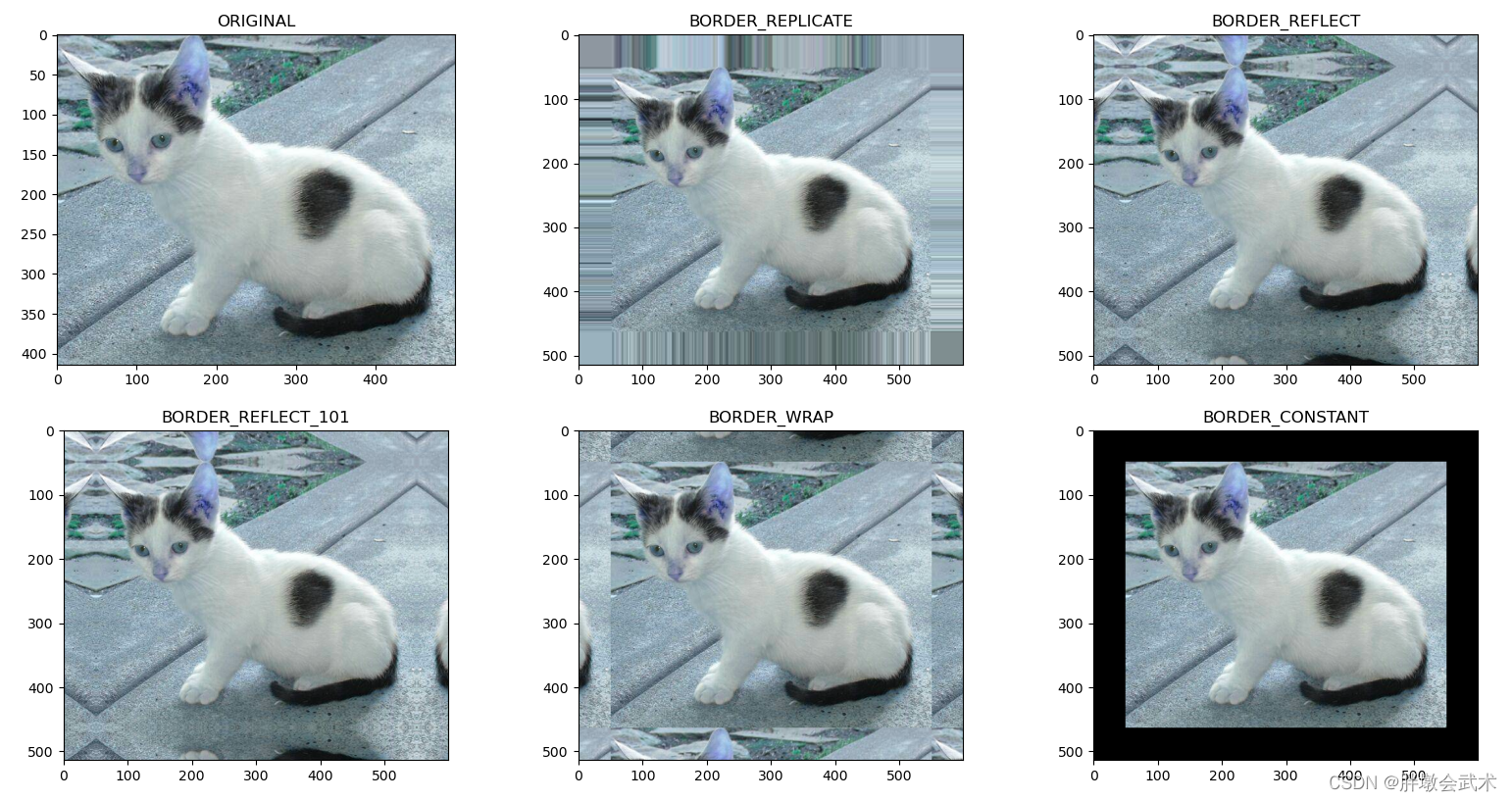

- (iv) Border padding of an image — cv2.copyMakeBorder()

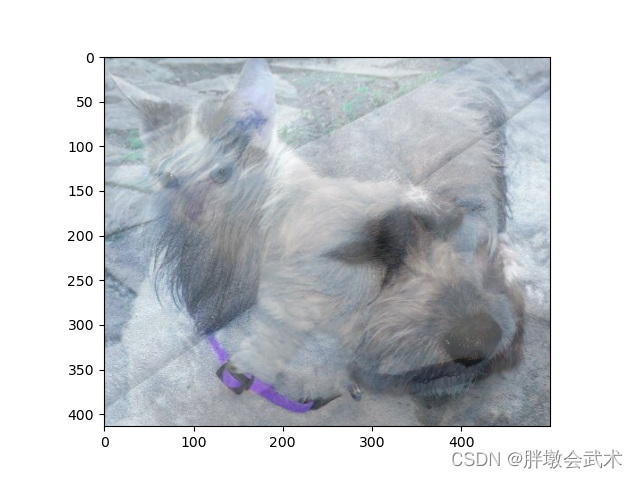

- (v) Image blending — cv2.addWeighted()

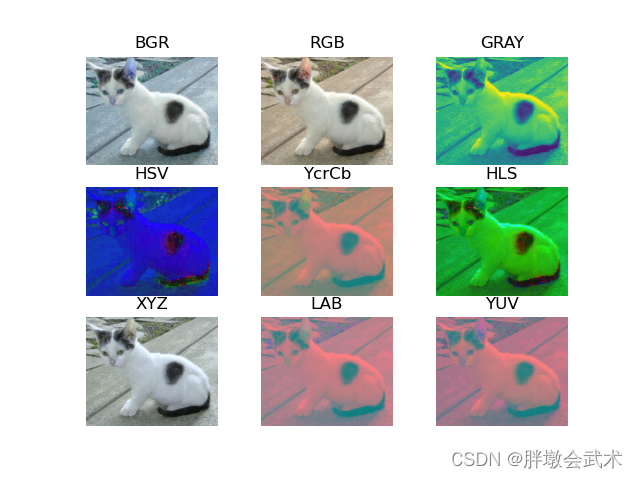

- (vi) Color space conversion — cv2.cvtColor()

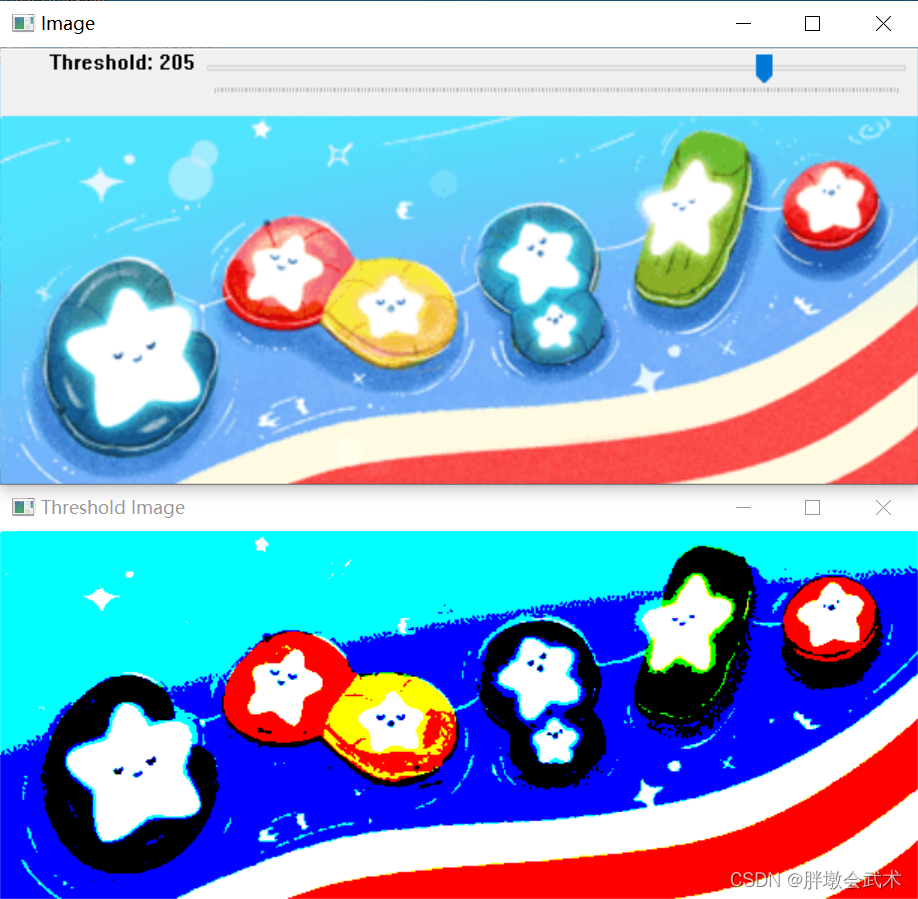

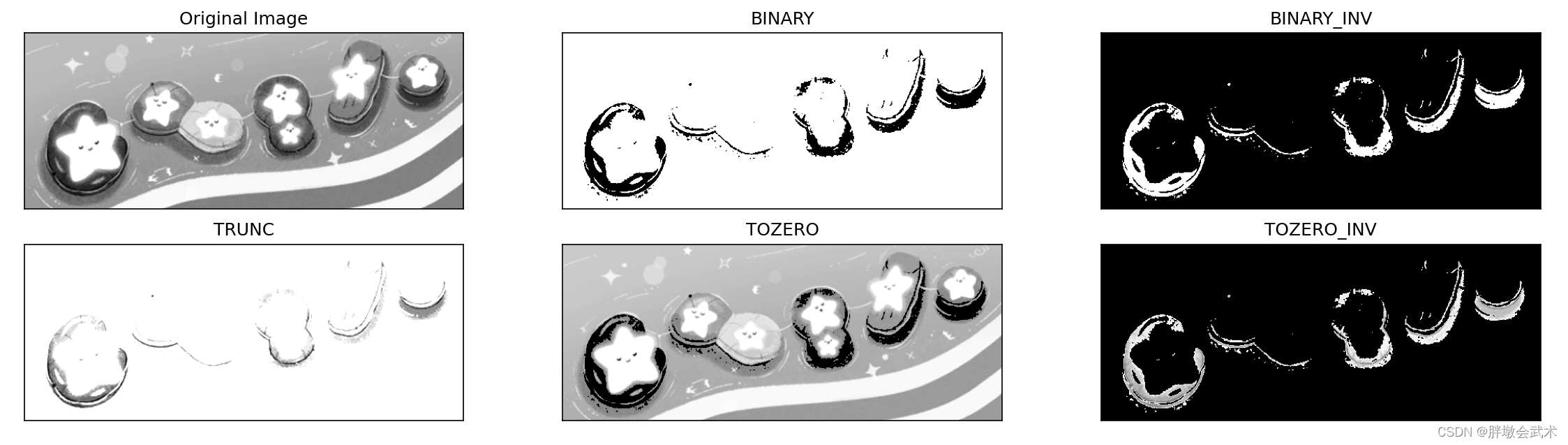

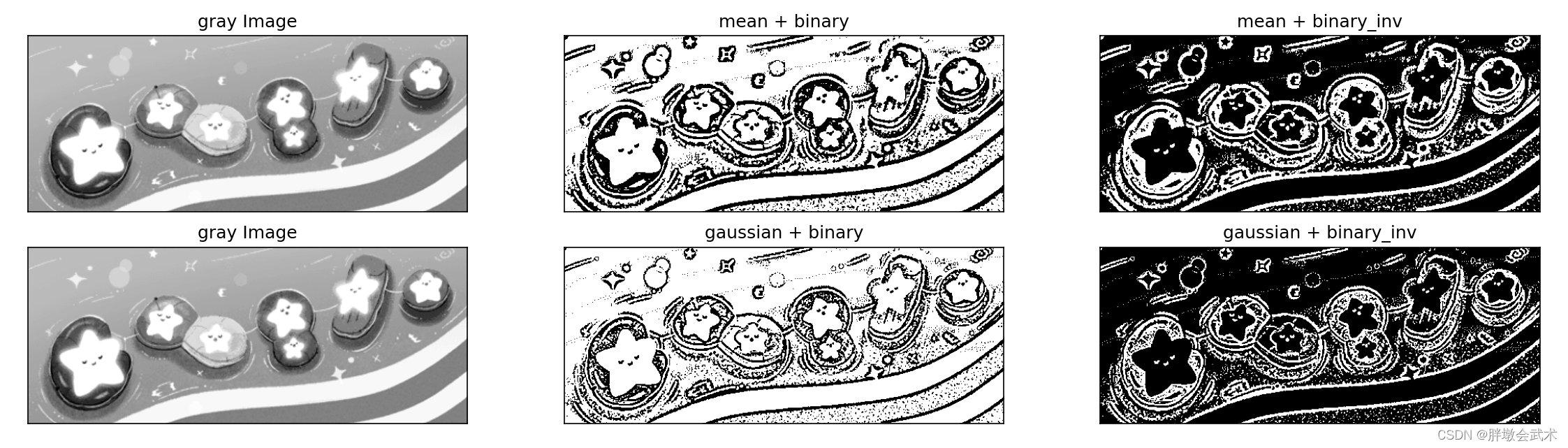

- (vii) Thresholding — cv2.threshold() + cv2.adaptiveThreshold()

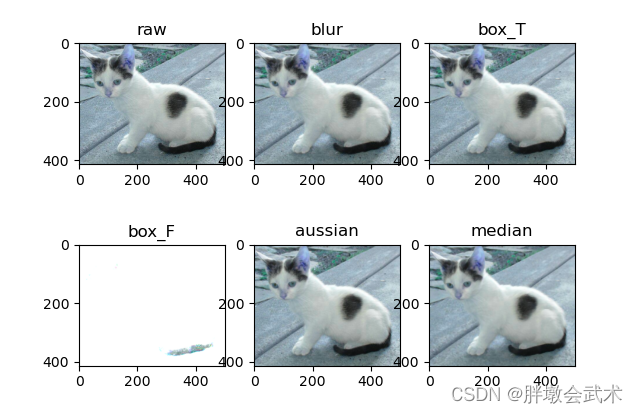

- (viii) Mean/Gaussian/box/median filter — cv2.blur() + cv2.boxFilter() + cv2.GaussianBlur() + cv2.medianBlur()

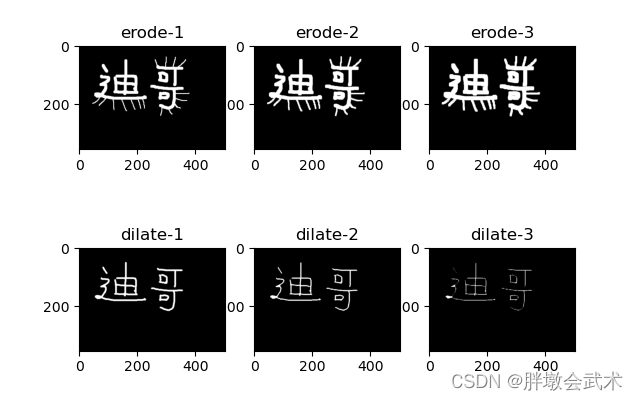

- (ix) Erosion and dilation — cv2.erode() vs. cv2.dilate() + np.zeros() vs. np.ones()

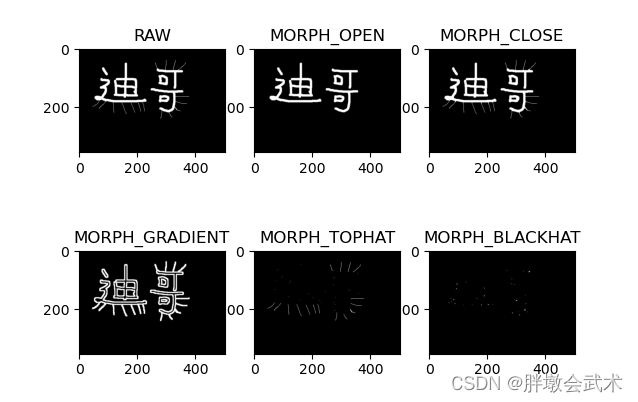

- (x) Morphological transformations — cv2.morphologyEx()

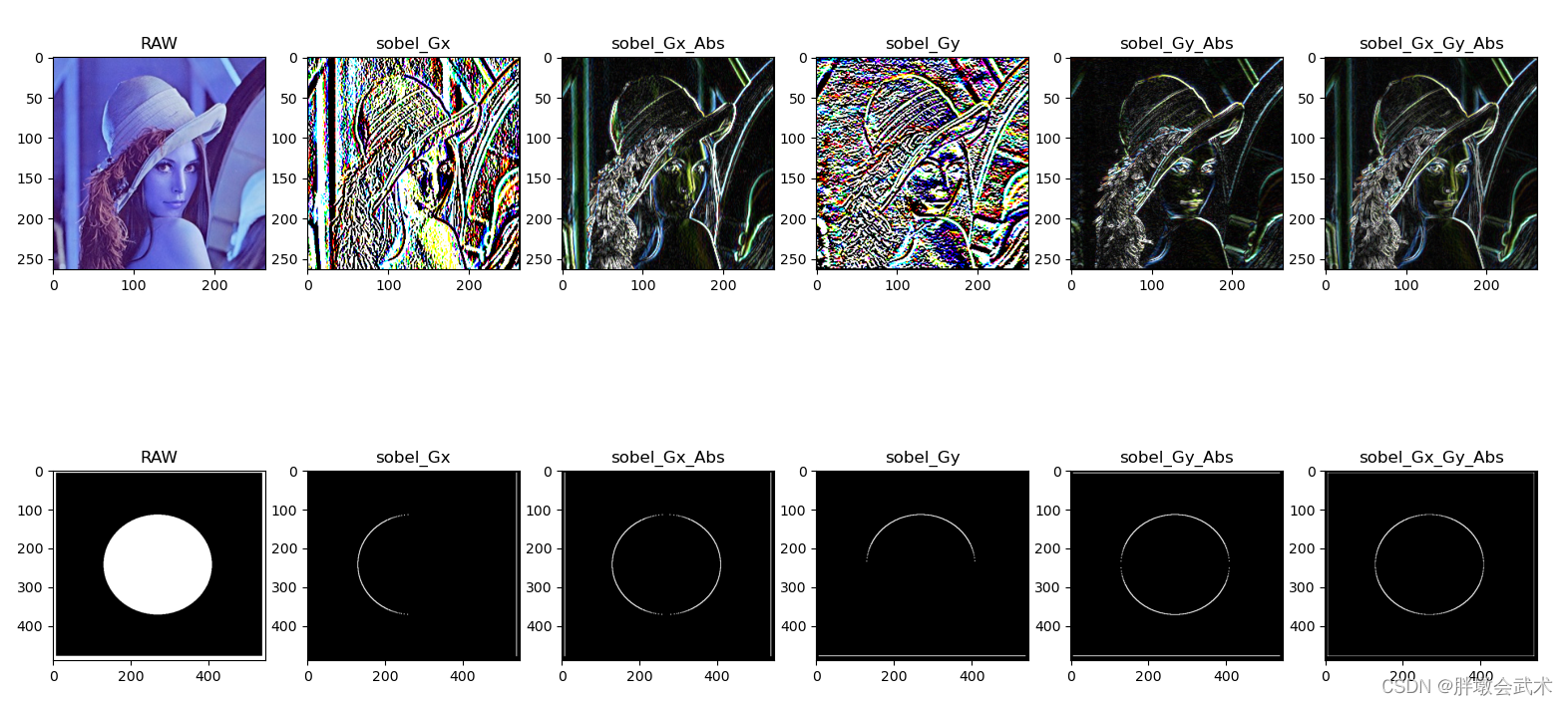

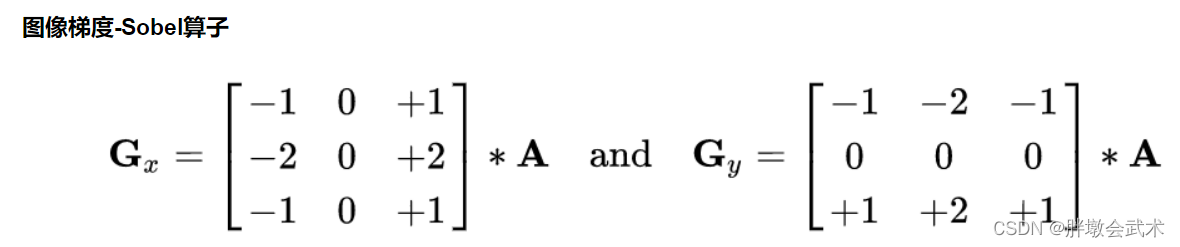

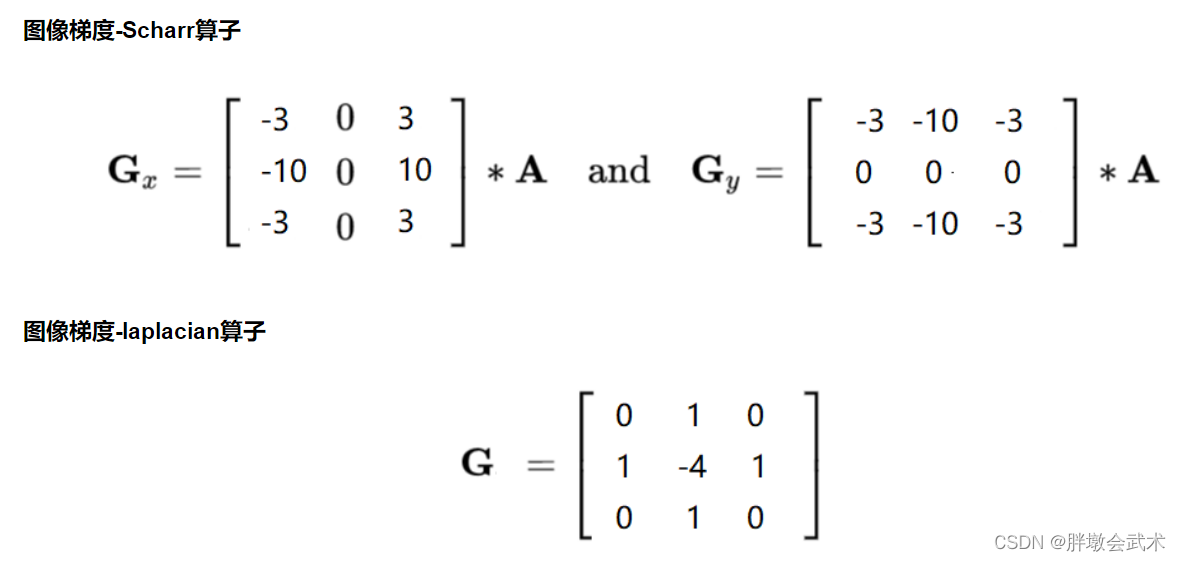

- (xi) Edge detection operators — cv2.Sobel(), cv2.Scharr(), cv2.Laplacian(), cv2.Canny()

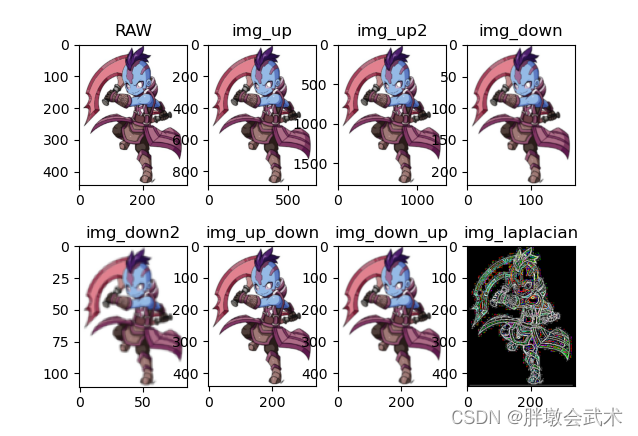

- (xii) Image pyramid — cv2.pyrUp(), cv2.pyrDown()

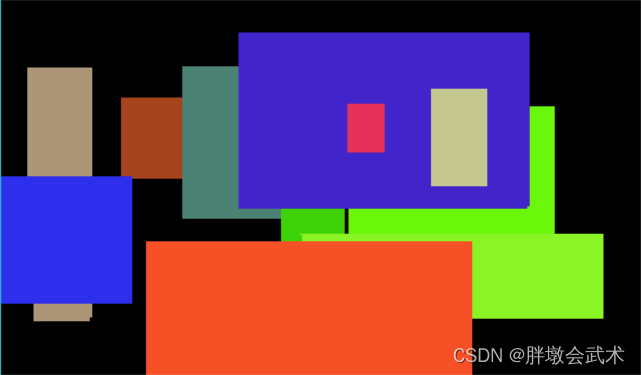

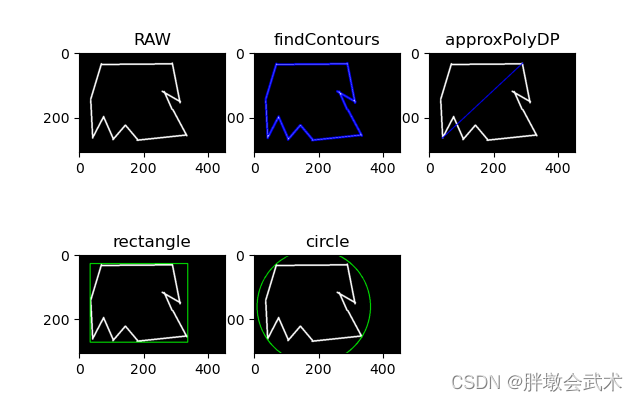

- (xiii) Image contour detection — cv2.findContours(), cv2.drawContours(), cv2.arcLength(), cv2.approxPolyDP(), cv2.rectangle()

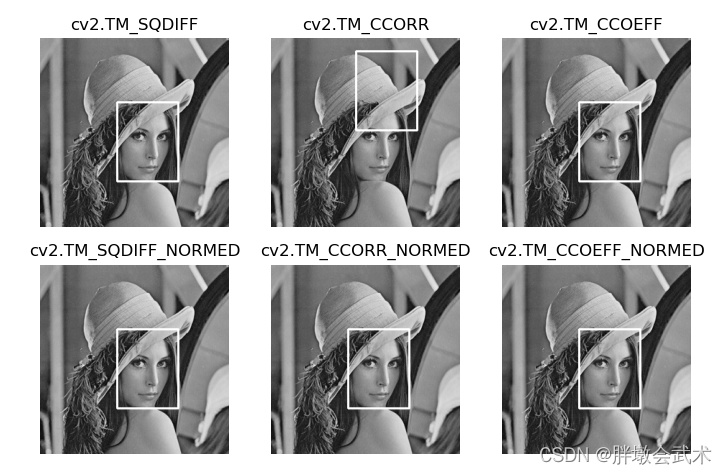

- (xiv) Template matching — cv2.matchTemplate(), cv2.minMaxLoc()

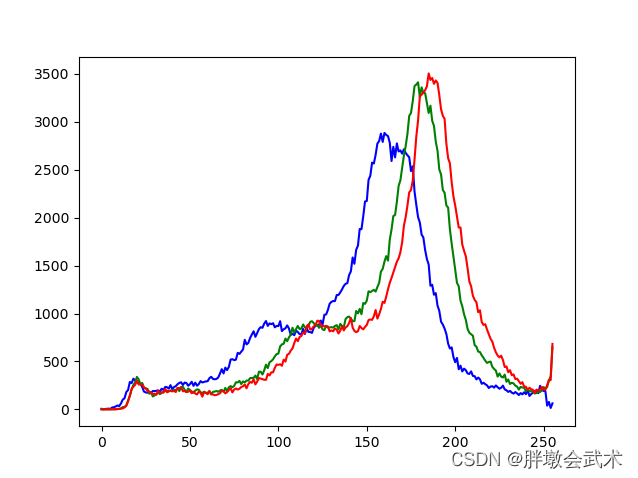

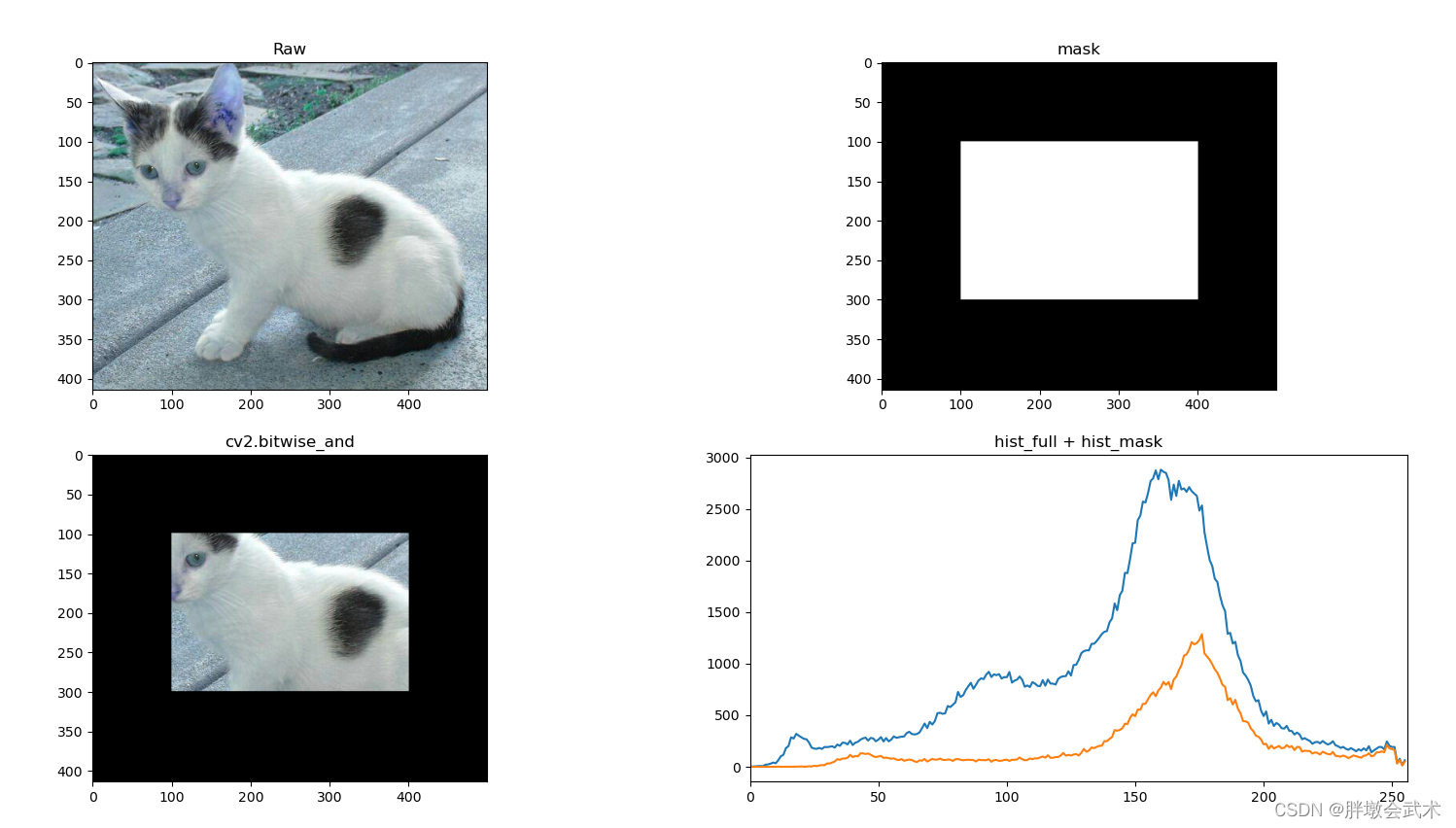

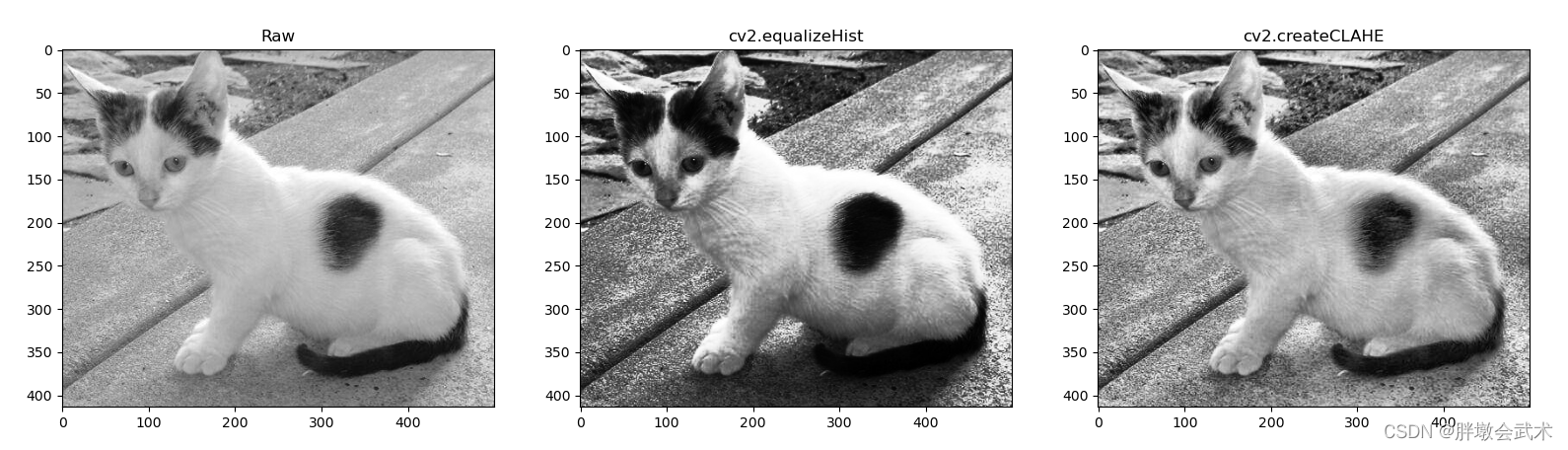

- (xv) Histogram (equalize) — cv2.calcHist(), img.ravel(), cv2.bitwise_and(), cv2.equalizeHist(), cv2.createCLAHE()

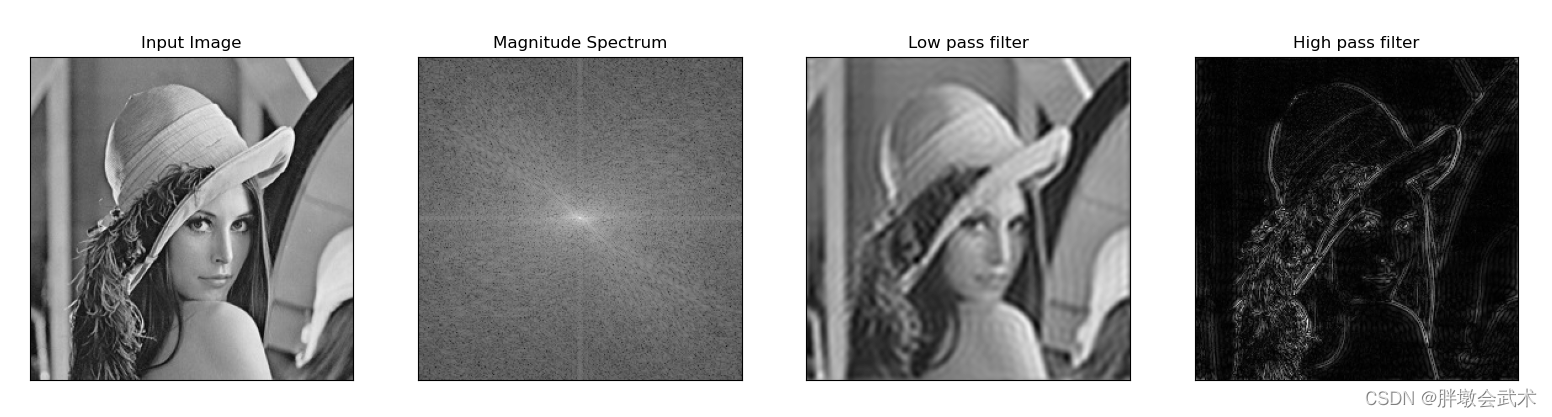

- (xvi) Fourier transform + lowpass/highpass filtering — cv2.dft(), cv2.idft(), np.fft.fftshift(), np.fft.ifftshift(), cv2.magnitude()

- (xvii) Harris corner detection — cv2.cornerHarris(), np.float32()

- (xviii) SIFT scale-invariant feature detection — cv2.xfeatures2d.SIFT_create(), sift.detectAndCompute(), sift.detect(), sift.compute(), cv2.drawKeypoints()

- (xix) Brute-force feature matching — cv2.BFMatcher_create(), bf.match(), bf_knn.knnMatch(), cv2.drawMatches()

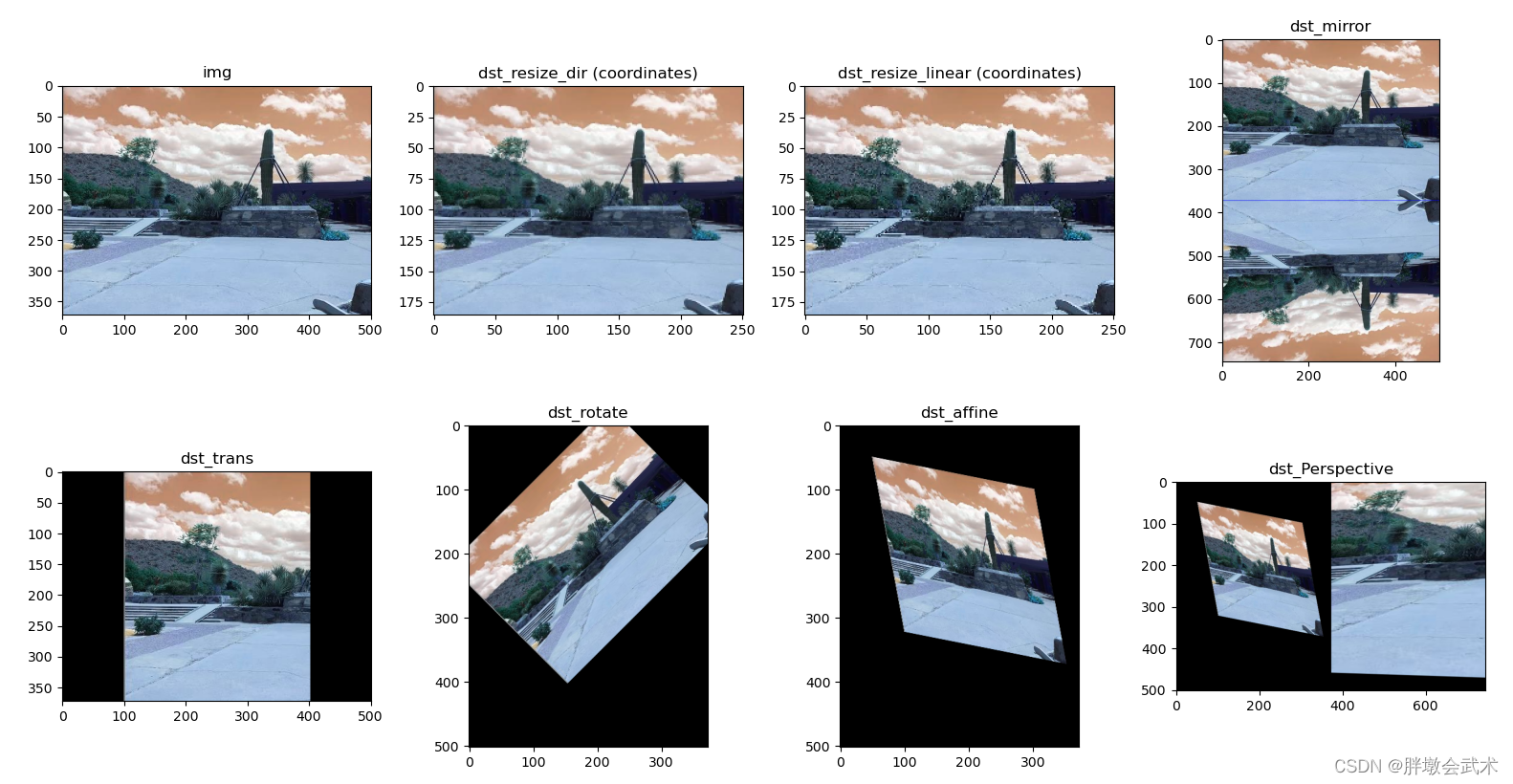

- (xx) Image scaling + mirroring + translation + rotation + affine transformation + perspective transformation — cv2.resize(), cv2.getRotationMatrix2D(), cv2.getAffineTransform(), cv2.getPerspectiveTransform(), cv2.warpPerspective(), cv2.warpAffine()

Blogger’s Boutique Column Navigation

- 🍕 Column:Pytorch project in practice

- 🌭 Opencv Image Processing (full)

- 🍱 Opencv C++ Image Processing (full)

- 🍳 Pillow Image Processing (PIL.Image)

- 🍝 Pytorch Basics (full)

- 🥙 Python common built-in functions (all)

- 🍰 Classical models of convolutional neural networks CNN

- 🍟 Hands-on knowledge of Convolutional Neural Networks CNNs

- 🥘 Thirty thousand words of hardcore detail: yolov1, yolov2, yolov3, yolov4, yolov5, yolov7

Note: The source code below runs as-is, and the functions used in each project are analyzed in detail.

1. Image project practice

(i) Bank card number identification — sort_contours(), resize()

[Credit card number recognition process, step by step]

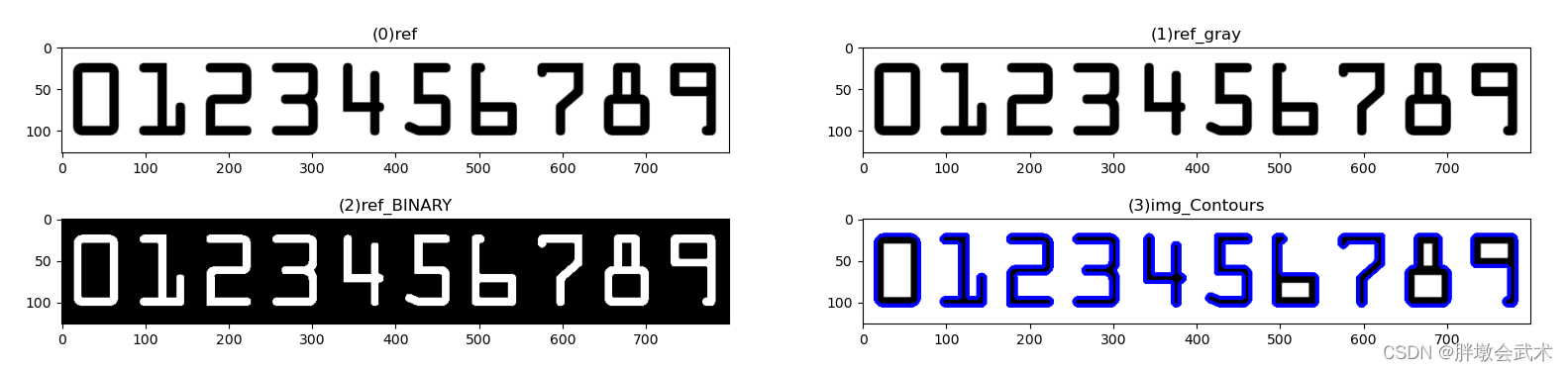

11. Extract each number of the template

1111, read template image, convert to grayscale map, convert to binary map

1122, contour detection, drawing of contours, sorting of all contours obtained (numbering)

1133, Extract all contours of the template – every number

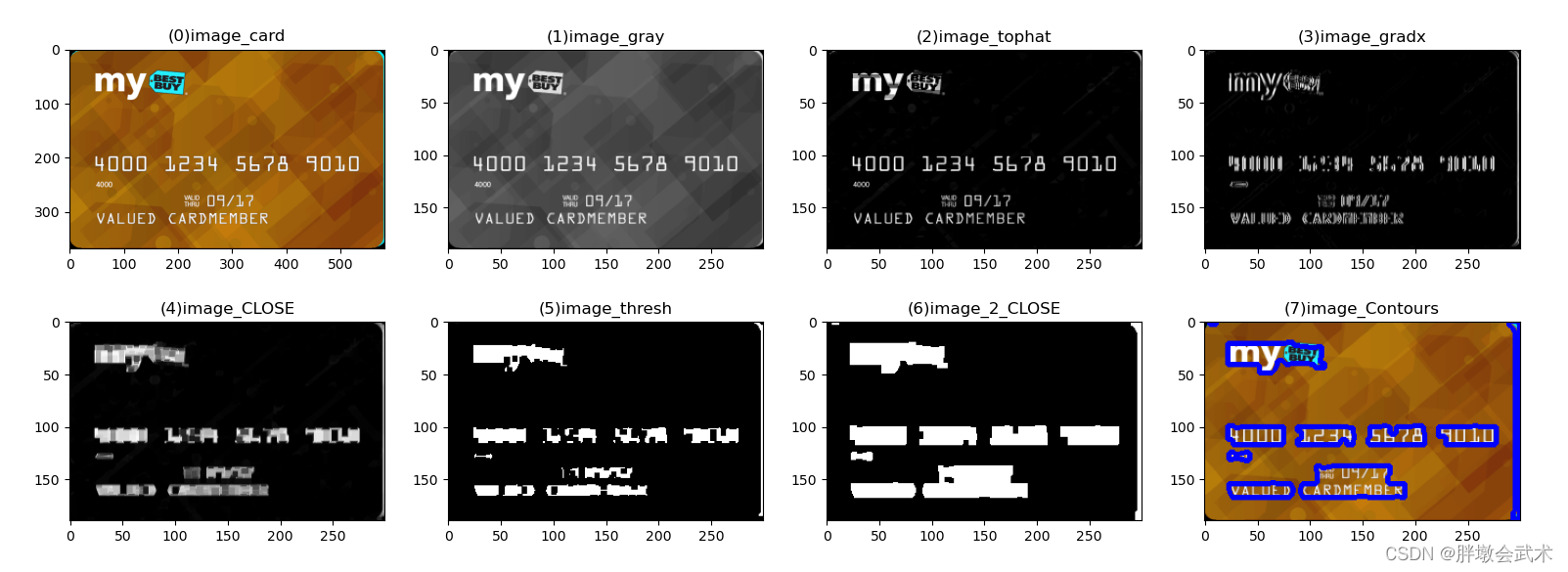

22. Extract all the outlines of the credit card

2211, read image to be detected, convert to grayscale, top hat operation, sobel operator operation, closure operation, binarization, quadratic expansion + erosion

2222, Contour detection, Contour drawing

33, extract the bank card “a group of four numbers” outline, and then each outline with the template of each number to match, get the maximum matching results

3311. Among all the contours, identify the contours of A Set of Four Numbers (there are four in total), and perform thresholding, contour detection, and contour ranking

3322, In A Set of Four Numbers, extract the outline as well as the coordinates of each number and perform template matching to get the maximum matching result

44. On the original image, use a rectangle to draw a “group of four numbers” and display all the matching results on the original image
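To make step 3.2 concrete, here is a minimal NumPy sketch of the score that cv2.matchTemplate(..., cv2.TM_CCOEFF) produces when the ROI and the template have the same size (57x88 in this project), in which case the result matrix is 1x1: the sum of products of the mean-centered pixels. The random "templates" and the helper names `ccoeff_score` and `classify_digit` are hypothetical stand-ins for illustration, not part of the original project; only NumPy is assumed.

```python
import numpy as np


def ccoeff_score(roi, template):
    """Same-size TM_CCOEFF score: sum of products of mean-centered pixels."""
    roi = roi.astype(np.float64)
    template = template.astype(np.float64)
    return float(np.sum((roi - roi.mean()) * (template - template.mean())))


def classify_digit(roi, templates):
    """Return the key of the template with the highest score (argmax, as in step 3.2)."""
    keys = list(templates.keys())
    scores = [ccoeff_score(roi, templates[k]) for k in keys]
    return keys[int(np.argmax(scores))]


rng = np.random.default_rng(0)
# Hypothetical 88x57 binary "templates": random patterns stand in for the digits 0-9
templates = {d: (rng.random((88, 57)) > 0.5).astype(np.uint8) * 255 for d in range(10)}
# A noisy copy of template 7 plays the role of one digit cropped from the card
noisy = templates[7].astype(np.int32) + rng.integers(-40, 40, size=(88, 57))
roi = np.clip(noisy, 0, 255).astype(np.uint8)
print(classify_digit(roi, templates))
```

Because the score is mean-centered, a uniform brightness offset between the card digit and the template barely changes the ranking, which is one reason TM_CCOEFF works reasonably here.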

```python
import cv2  # OpenCV reads images in BGR order
import matplotlib.pyplot as plt  # Matplotlib displays RGB
import numpy as np


def sort_contours(cnt_s, method="left-to-right"):
    reverse = False
    ii_myutils = 0  # 0: sort by x, 1: sort by y
    if method == "right-to-left" or method == "bottom-to-top":
        reverse = True
    if method == "top-to-bottom" or method == "bottom-to-top":
        ii_myutils = 1
    # Wrap each contour in its minimal upright bounding rectangle (x, y, w, h)
    bounding_boxes = [cv2.boundingRect(cc_myutils) for cc_myutils in cnt_s]
    # Sort contours and boxes together by the x or y coordinate of each box
    (cnt_s, bounding_boxes) = zip(*sorted(zip(cnt_s, bounding_boxes),
                                          key=lambda b: b[1][ii_myutils], reverse=reverse))
    return cnt_s, bounding_boxes


def resize(image, width=None, height=None, inter=cv2.INTER_AREA):
    # Resize to the given width or height while keeping the aspect ratio
    dim_myutils = None
    (h_myutils, w_myutils) = image.shape[:2]
    if width is None and height is None:
        return image
    if width is None:
        r_myutils = height / float(h_myutils)
        dim_myutils = (int(w_myutils * r_myutils), height)
    else:
        r_myutils = width / float(w_myutils)
        dim_myutils = (width, int(h_myutils * r_myutils))
    resized = cv2.resize(image, dim_myutils, interpolation=inter)
    return resized
```
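The `zip(*sorted(zip(...)))` line in sort_contours() is easy to misread. This pure-Python sketch isolates the same idea on hypothetical bounding boxes (no OpenCV needed): pair each item with its box, sort the pairs by box index 0 (x) or 1 (y), then unzip. `sort_by_box` and the sample data are illustrative only.

```python
def sort_by_box(items, boxes, method="left-to-right"):
    # Same rules as sort_contours(): choose the coordinate and the direction
    reverse = method in ("right-to-left", "bottom-to-top")
    axis = 1 if method in ("top-to-bottom", "bottom-to-top") else 0
    pairs = sorted(zip(items, boxes), key=lambda p: p[1][axis], reverse=reverse)
    items_sorted, boxes_sorted = zip(*pairs)  # unzip back into two sequences
    return list(items_sorted), list(boxes_sorted)


# Hypothetical bounding boxes (x, y, w, h) for four contours found in arbitrary order
names = ["c0", "c1", "c2", "c3"]
boxes = [(120, 5, 20, 30), (10, 40, 20, 30), (65, 20, 20, 30), (180, 0, 20, 30)]
print(sort_by_box(names, boxes)[0])                          # ['c1', 'c2', 'c0', 'c3']
print(sort_by_box(names, boxes, method="top-to-bottom")[0])  # ['c3', 'c0', 'c2', 'c1']
```

Like sort_contours(), this raises ValueError on an empty list (there is nothing to unzip), so it is worth checking for "no contours found" before calling it.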

```python
######################################################################
# 1. Extract each digit from the template
######################################################################
# Read the template image (digit templates 0-9 used on bank cards)
img = cv2.imread(r'ocr_a_reference.png')
ref_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)  # convert to grayscale
ref_BINARY = cv2.threshold(ref_gray, 10, 255, cv2.THRESH_BINARY_INV)[1]  # binary image: white digits on black

"""#######################################
# contours, hierarchy = cv2.findContours(img, mode, method)
# Input parameters  mode: contour retrieval mode
#   (1) RETR_EXTERNAL: retrieves only the outermost contours;
#   (2) RETR_LIST: retrieves all contours without any hierarchical relationships (a flat list);
#   (3) RETR_CCOMP: retrieves all contours and organizes them into a two-level hierarchy: the top level holds the
#       outer boundaries of the components, the second level holds the boundaries of the holes;
#   (4) RETR_TREE: retrieves all contours and reconstructs the full hierarchy of nested contours (most commonly used);
#                   method: contour approximation method
#   (1) CHAIN_APPROX_NONE: stores every contour point, so neighboring points differ by at most 1 pixel.
#       Example: all points along the four edges of a rectangle (most commonly used);
#   (2) CHAIN_APPROX_SIMPLE: compresses horizontal, vertical, and diagonal segments, keeping only their end points.
#       Example: a rectangle is stored as 4 points.
# Output parameters  contours: all contours
#                    hierarchy: hierarchy information for each contour
# Note 0: A contour is a curve joining all continuous boundary points that share the same color or intensity.
#         Contours are useful for shape analysis and object detection and recognition.
# Note 1: The input should be a binary (black-and-white) image, not grayscale, so convert the loaded image
#         to grayscale first and then binarize it.
# Note 2: In OpenCV 2.x and 4.x the function returns two values: contours, hierarchy.
# Note 3: In OpenCV 3.x the function returns three values: image, contours, hierarchy.
#######################################"""
refCnts, hierarchy = cv2.findContours(ref_BINARY.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

"""#######################################
# Draw contours: cv2.drawContours(image, contours, contourIdx, color, thickness) draws contours onto an image
# Input parameters  image: target image to draw on; note that it is modified in place.
#                   contours: contour points, i.e. the first return value of cv2.findContours()
#                   contourIdx: index of the contour to draw; -1 draws all contours
#                   color: contour color (BGR order)
#                   thickness: (optional) line width; -1 fills the contour
# Note: Draw on a copy() of the image; plain assignment does not copy the data, so the original would change too.
#######################################"""
img_Contours = img.copy()
cv2.drawContours(img_Contours, refCnts, -1, (0, 0, 255), 3)
# print(np.array(refCnts).shape)

# Plot the processing steps and the detected contours
# (img and img_Contours are BGR, so Matplotlib shows the red contours as blue)
plt.subplot(221), plt.imshow(img, 'gray'), plt.title('(0)ref')
plt.subplot(222), plt.imshow(ref_gray, 'gray'), plt.title('(1)ref_gray')
plt.subplot(223), plt.imshow(ref_BINARY, 'gray'), plt.title('(2)ref_BINARY')
plt.subplot(224), plt.imshow(img_Contours, 'gray'), plt.title('(3)img_Contours')
plt.show()

#######################################
# Sort (number) all detected contours from left to right
refCnts = sort_contours(refCnts, method="left-to-right")[0]

#######################################
# Extract every template contour, i.e. every digit
digits = {}  # digit index -> template ROI (dict initialization)
for (i, c) in enumerate(refCnts):
    (x, y, w, h) = cv2.boundingRect(c)  # top-left (x, y), width and height of the digit's bounding rectangle
    roi = ref_BINARY[y:y + h, x:x + w]  # crop the bounding rectangle
    roi = cv2.resize(roi, (57, 88))  # resize every digit ROI to the same size
    digits[i] = roi  # save each template digit
```
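Step 1.3 relies on `(x, y, w, h) = cv2.boundingRect(c)` followed by the slice `[y:y + h, x:x + w]`. The NumPy sketch below mirrors what boundingRect returns for an integer point set (width and height count pixels inclusively, hence the +1) and shows that NumPy indexes rows (y) before columns (x). `bounding_rect` and the tiny synthetic image are hypothetical; compare against cv2.boundingRect on your own OpenCV build if exact agreement matters.

```python
import numpy as np


def bounding_rect(contour):
    pts = np.asarray(contour).reshape(-1, 2)  # findContours gives (N, 1, 2); columns are x, y
    x_min, y_min = pts.min(axis=0)
    x_max, y_max = pts.max(axis=0)
    # Inclusive pixel count on each axis
    return int(x_min), int(y_min), int(x_max - x_min + 1), int(y_max - y_min + 1)


binary = np.zeros((20, 30), dtype=np.uint8)
binary[4:12, 6:11] = 255  # a white "digit" blob covering rows 4..11 and columns 6..10
contour = np.array([[[6, 4]], [[10, 4]], [[10, 11]], [[6, 11]]], dtype=np.int32)
x, y, w, h = bounding_rect(contour)
roi = binary[y:y + h, x:x + w]  # same slicing as roi = ref_BINARY[y:y + h, x:x + w]
print((x, y, w, h), roi.shape)
```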

```python
######################################################################
# 2. Extract all contours of the credit card
"""######################################################################
# Initialize a structuring element (kernel): cv2.getStructuringElement(shape, ksize)
# Input parameters  shape:
#   (1) MORPH_RECT: rectangle
#   (2) MORPH_CROSS: cross
#   (3) MORPH_ELLIPSE: ellipse
#                   ksize: kernel size (width, height); e.g. (3, 3) is a 3x3 kernel
######################################"""
rect_Kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (9, 3))
square_Kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (5, 5))

######################################
# Read the input image (credit card to be recognized) and preprocess it
image_card = cv2.imread(r'images\credit_card_01.png')
image_resize = resize(image_card, width=300)
image_gray = cv2.cvtColor(image_resize, cv2.COLOR_BGR2GRAY)

"""#######################################
# Morphological transformation: cv2.morphologyEx(src, op, kernel)
# Parameters: src is the input image, op is the operation, kernel is the structuring element
# op can be one of five operations:
#   Opening: cv2.MORPH_OPEN erodes, then dilates. Removes small bright specks.
#   Closing: cv2.MORPH_CLOSE dilates, then erodes. Fills small dark holes and gaps, joining nearby bright regions.
#   Morphological gradient: cv2.MORPH_GRADIENT is the dilated image minus the eroded image. Outlines blobs.
#   Top-hat: cv2.MORPH_TOPHAT is the original image minus its opening. Highlights small regions brighter than their surroundings.
#   Black-hat: cv2.MORPH_BLACKHAT is the closing minus the original image. Highlights small regions darker than their surroundings.
#######################################"""
image_tophat = cv2.morphologyEx(image_gray, cv2.MORPH_TOPHAT, rect_Kernel)  # top-hat to highlight the bright digits

"""#######################################
# The Sobel operator is a common edge detector. It smooths noise and gives fairly accurate edge-direction
# information, but its edge localization is less precise.
# An edge is where the gray value changes rapidly, e.g. a black-to-white border.
# The image is 2-D, so Sobel derivatives are taken in x and y with two kernels (Gx, Gy), where Gy is the
# transpose of Gx. They capture the intensity change of each pixel horizontally and vertically, respectively.
########################################
# dst = cv2.Sobel(src, ddepth, dx, dy, ksize)
# Input parameters  src: input image
#                   ddepth: output depth; -1 keeps the input depth. The output depth must be >= the input depth.
#                   dx, dy: derivative order in each direction; 0 means no derivative (typically 0, 1, or 2)
#                   ksize: kernel size, typically 3 or 5
# Computing dx=1 and dy=1 in a single call loses information (not recommended);
# compute x and y separately and then combine them (works well).
########################################
# (1) Why cv2.CV_16S
#   (1) Sobel derivatives can be negative or larger than 255.
#   (2) The input is uint8 (8-bit unsigned), which cannot hold these values, so they would be truncated.
#   (3) Therefore use a 16-bit signed type, cv2.CV_16S.
# (2) cv2.convertScaleAbs(): takes the absolute value of every pixel and converts the result back to uint8.
#   Without it the result is not displayed correctly (just a gray window).
########################################"""
# Sobel in x; ksize=-1 selects the 3x3 Scharr kernel instead of the standard Sobel kernel
image_gradx = cv2.Sobel(image_tophat, ddepth=cv2.CV_32F, dx=1, dy=0, ksize=-1)  # x-gradient of the top-hat image
image_gradx = np.absolute(image_gradx)  # absolute value of every element
(minVal, maxVal) = (np.min(image_gradx), np.max(image_gradx))  # smallest and largest gradient magnitude
image_gradx = (255 * ((image_gradx - minVal) / (maxVal - minVal)))  # min-max normalization to 0-255
image_gradx = image_gradx.astype("uint8")  # back to uint8, the usual pixel type

"""
# Alternative: compute the x and y gradients separately and blend them
sobel_Gx1 = cv2.Sobel(image_tophat, ddepth=cv2.CV_32F, dx=1, dy=0, ksize=3)  # 3x3 kernel
sobel_Gx_Abs1 = cv2.convertScaleAbs(sobel_Gx1)  # Gx is right minus left: black-to-white is positive, white-to-black negative; abs keeps both
sobel_Gy1 = cv2.Sobel(image_tophat, cv2.CV_64F, 0, 1, ksize=3)
sobel_Gy_Abs1 = cv2.convertScaleAbs(sobel_Gy1)
sobel_Gx_Gy_Abs1 = cv2.addWeighted(sobel_Gx_Abs1, 0.5, sobel_Gy_Abs1, 0.5, 0)  # 0.5 * x + 0.5 * y + offset 0
"""

########################################
# Closing (dilation, then erosion) merges the four digits of each group, splitting the number into four blobs
image_CLOSE = cv2.morphologyEx(image_gradx, cv2.MORPH_CLOSE, square_Kernel)

"""########################################
# Thresholding: ret, dst = cv2.threshold(src, thresh, max_val, type)
# Output parameters  ret: threshold actually used; dst: output image
# Input parameters   src: single-channel input image, usually grayscale
#                    thresh: threshold
#                    max_val: value assigned to pixels above the threshold (or below it, depending on type)
#                    type: one of the following five:
#   (1) cv2.THRESH_BINARY: pixels above thresh become max_val, all others 0
#   (2) cv2.THRESH_BINARY_INV: inverse of THRESH_BINARY
#   (3) cv2.THRESH_TRUNC: pixels above thresh are set to thresh, others unchanged
#   (4) cv2.THRESH_TOZERO: pixels above thresh are unchanged, others set to 0
#   (5) cv2.THRESH_TOZERO_INV: inverse of THRESH_TOZERO
########################################"""
# THRESH_OTSU picks the threshold automatically (best for bimodal histograms); pass 0 as thresh
image_thresh = cv2.threshold(image_CLOSE, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)[1]

# Second closing (dilate twice, erode once) fills each group of four digits into one region
image_2_dilate = cv2.dilate(image_thresh, square_Kernel, iterations=2)  # dilate, 2 iterations
image_1_erode = cv2.erode(image_2_dilate, square_Kernel, iterations=1)  # erode, 1 iteration
image_2_CLOSE = image_1_erode

# Find contours
threshCnts, hierarchy = cv2.findContours(image_2_CLOSE.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
# cnts = threshCnts  # point sets representing the contours
image_Contours = image_resize.copy()
cv2.drawContours(image_Contours, threshCnts, -1, (0, 0, 255), 3)

plt.subplot(241), plt.imshow(image_card, 'gray'), plt.title('(0)image_card')
plt.subplot(242), plt.imshow(image_gray, 'gray'), plt.title('(1)image_gray')
plt.subplot(243), plt.imshow(image_tophat, 'gray'), plt.title('(2)image_tophat')
plt.subplot(244), plt.imshow(image_gradx, 'gray'), plt.title('(3)image_gradx')
plt.subplot(245), plt.imshow(image_CLOSE, 'gray'), plt.title('(4)image_CLOSE')
plt.subplot(246), plt.imshow(image_thresh, 'gray'), plt.title('(5)image_thresh')
plt.subplot(247), plt.imshow(image_2_CLOSE, 'gray'), plt.title('(6)image_2_CLOSE')
plt.subplot(248), plt.imshow(image_Contours, 'gray'), plt.title('(7)image_Contours')
plt.show()
```
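Step 2 relies on `cv2.THRESH_OTSU` to "automatically find a suitable threshold". As a sketch of the idea, Otsu's method tries every threshold t and keeps the one that maximizes the between-class variance of the two groups (pixels <= t versus pixels > t). This NumPy version, `otsu_threshold`, is a hypothetical helper run on a synthetic bimodal image; when the histogram has an empty gap it returns the lowest threshold in that gap, so the exact value may differ from what cv2.threshold reports while producing the same split.

```python
import numpy as np


def otsu_threshold(gray):
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()
    omega = np.cumsum(prob)                # probability of the class "<= t" for each t
    mu = np.cumsum(prob * np.arange(256))  # cumulative mean up to t
    mu_total = mu[-1]
    # Between-class variance; undefined (0/0 or x/0) where one class is empty
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b = np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)
    return int(np.argmax(sigma_b))


rng = np.random.default_rng(0)
dark = rng.normal(50, 10, size=2000)     # background-like pixels
bright = rng.normal(190, 10, size=2000)  # digit-like pixels
img = np.clip(np.concatenate([dark, bright]), 0, 255).astype(np.uint8).reshape(40, 100)
t = otsu_threshold(img)
binary = np.where(img > t, 255, 0).astype(np.uint8)  # THRESH_BINARY semantics: > t becomes 255
print(t, int((binary == 255).sum()))
```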

```python
######################################################################
# 3. Extract the contour of each "group of four digits" on the card, then match each digit
#    against every template digit and keep the best match
######################################################################
# 3.1 Identify the contours of the groups of four digits (four in theory)
########################################
locs = []  # bounding boxes of the four-digit groups (list initialization)
for (i, c) in enumerate(threshCnts):  # iterate over the contours
    (x, y, w, h) = cv2.boundingRect(c)  # bounding rectangle
    ar = w / float(h)  # aspect ratio of the candidate group
    # Keep contours whose aspect ratio and size fit a group of four digits (tuned to the 300-px-wide image)
    if 2.0 < ar < 4.0:
        if (35 < w < 60) and (10 < h < 20):
            locs.append((x, y, w, h))  # keep matching contours
# Sort the kept contours from left to right
locs = sorted(locs, key=lambda x: x[0])

########################################
# 3.2 Within each group, extract the contour of each digit and run template matching
########################################
output = []  # recognized digits of the whole card number (list initialization)
for (ii, (gX, gY, gW, gH)) in enumerate(locs):  # there should be four groups
    groupOutput = []  # best match for each digit in this group
    # Crop the group, padding the box by 5 px on each side to avoid losing information
    group_digit = image_gray[gY - 5:gY + gH + 5, gX - 5:gX + gW + 5]
    group_digit_th = cv2.threshold(group_digit, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)[1]  # binarization
    digitCnts, hierarchy = cv2.findContours(group_digit_th.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    digitCnts = sort_contours(digitCnts, method="left-to-right")[0]  # order the digits left to right
    for jj in digitCnts:  # there should be four digits
        (x, y, w, h) = cv2.boundingRect(jj)  # bounding box of the current digit
        roi = group_digit_th[y:y + h, x:x + w]  # crop from the binary group image, matching the binary templates
        roi = cv2.resize(roi, (57, 88))  # same size as the template digits
        cv2.imshow("Image", roi)
        cv2.waitKey(200)  # wait 200 ms
        """########################################
        # Template matching: result = cv2.matchTemplate(image, template, method)
        # Input parameters  image: image to search;  template: template image;  method: matching method
        #   (1) cv2.TM_SQDIFF: squared difference; the closer to 0, the better the match
        #   (2) cv2.TM_CCORR: cross-correlation; the larger, the better
        #   (3) cv2.TM_CCOEFF: correlation coefficient; the larger, the better
        #   (4) cv2.TM_SQDIFF_NORMED: normalized squared difference; the closer to 0, the better
        #   (5) cv2.TM_CCORR_NORMED: normalized cross-correlation; the closer to 1, the better
        #   (6) cv2.TM_CCOEFF_NORMED: normalized correlation coefficient; the closer to 1, the better
        #   (The normalized methods usually give better results.)
        ########################################
        # Best match: min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(result)
        #   result: matrix returned by cv2.matchTemplate
        #   min_val, max_val, min_loc, max_loc: minimum, maximum, and their (x, y) positions
        #   For the (normalized) squared-difference methods use min_loc; for all others use max_loc.
        ########################################"""
        scores = []  # scores of the card digit (roi) against each template digit (digitROI)
        for (kk, digitROI) in digits.items():  # kk runs over the ten template digits 0-9
            result = cv2.matchTemplate(roi, digitROI, cv2.TM_CCOEFF)
            (_, max_score, _, _) = cv2.minMaxLoc(result)  # max_score is the maximum value
            scores.append(max_score)
        groupOutput.append(str(np.argmax(scores)))  # keep the digit with the highest score
    """########################################
    # Draw text: cv2.putText(img, text, org, fontFace, fontScale, color, thickness)
    # Parameters in order: image, text, bottom-left corner of the text, font, font scale, color, line thickness
    ########################################"""
    # On the original image, draw a rectangle around each group (four rectangles in total)
    cv2.rectangle(image_resize, (gX - 5, gY - 5), (gX + gW + 5, gY + gH + 5), (0, 0, 255), 1)
    cv2.putText(image_resize, "".join(groupOutput), (gX, gY - 15), cv2.FONT_HERSHEY_SIMPLEX, 0.65, (0, 0, 255), 2)
    output.extend(groupOutput)  # append this group's digits to the full card number

# Show the result (all matches drawn on the original image)
cv2.imshow("Image", image_resize)
cv2.waitKey(0)
cv2.destroyAllWindows()
```
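The matchTemplate notes in step 3.2 say that squared-difference methods use min_loc while the others use max_loc. This NumPy sketch slides a template over a larger image, computes the TM_SQDIFF score at every position, and locates the minimum. `match_sqdiff` is a hypothetical, deliberately slow reference loop for illustration only; OpenCV's implementation is far faster. Note that NumPy indexes (row, col), i.e. (y, x), while cv2.minMaxLoc reports locations as (x, y).

```python
import numpy as np


def match_sqdiff(image, template):
    ih, iw = image.shape
    th, tw = template.shape
    img = image.astype(np.float64)
    tpl = template.astype(np.float64)
    # One score per valid top-left position: (H - h + 1) x (W - w + 1)
    result = np.empty((ih - th + 1, iw - tw + 1))
    for y in range(result.shape[0]):
        for x in range(result.shape[1]):
            result[y, x] = np.sum((img[y:y + th, x:x + tw] - tpl) ** 2)
    return result


rng = np.random.default_rng(1)
image = rng.integers(0, 256, size=(40, 60)).astype(np.uint8)
template = image[12:22, 30:45].copy()  # a 10x15 patch cut out at x=30, y=12
result = match_sqdiff(image, template)
y, x = np.unravel_index(np.argmin(result), result.shape)  # best match = smallest squared difference
print((int(x), int(y)), result.shape)
```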

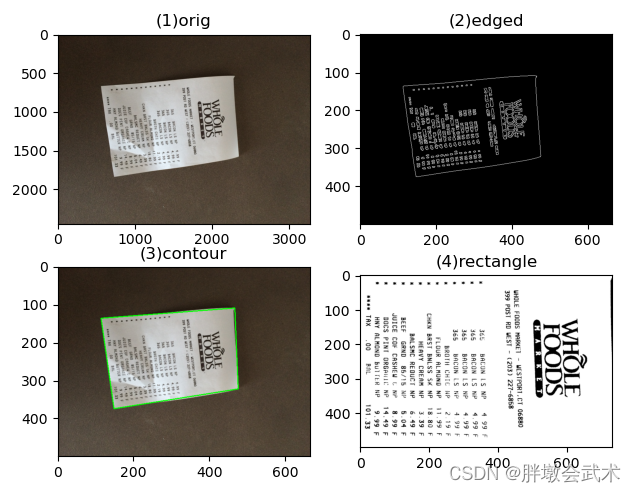

(ii) Document scanning OCR recognition — cv2.getPerspectiveTransform() + cv2.warpPerspective(), np.argmin(), np.argmax(), np.diff()

Compute the length (perimeter) of a contour: cv2.arcLength(curve, closed)

Approximate a contour with a polygon: cv2.approxPolyDP(curve, epsilon, closed)

Find the index of the minimum value: np.argmin()

Find the index of the maximum value: np.argmax()

Compute the differences between adjacent columns within each row: np.diff(a, axis=1)
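The three NumPy calls above are what order_points() in the script relies on: x + y is smallest at the top-left corner and largest at the bottom-right, while y - x (np.diff along axis 1) is smallest at the top-right and largest at the bottom-left. Below is a standalone version run on made-up corner coordinates; it assumes a roughly upright sheet and can mis-order corners when two points tie, for example a sheet rotated by about 45 degrees.

```python
import numpy as np


def order_points(pts):
    pts = np.asarray(pts, dtype="float32").reshape(4, 2)
    rect = np.zeros((4, 2), dtype="float32")
    s = pts.sum(axis=1)               # x + y for each point
    rect[0] = pts[np.argmin(s)]       # top-left: smallest x + y
    rect[2] = pts[np.argmax(s)]       # bottom-right: largest x + y
    d = np.diff(pts, axis=1).ravel()  # y - x for each point
    rect[1] = pts[np.argmin(d)]       # top-right: smallest y - x
    rect[3] = pts[np.argmax(d)]       # bottom-left: largest y - x
    return rect


corners = [[410, 380], [20, 30], [35, 400], [390, 15]]  # shuffled corners of a tilted sheet
print(order_points(corners).tolist())
# [[20.0, 30.0], [390.0, 15.0], [410.0, 380.0], [35.0, 400.0]]
```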

```python
import numpy as np
import cv2
import matplotlib.pyplot as plt  # Matplotlib displays RGB

"""######################################################################
# Compute the 3x3 (homogeneous) perspective transformation matrix: M = cv2.getPerspectiveTransform(rect, dst)
# Input parameters  rect: four points (corners) in the input image
#                   dst: the four corresponding points in the output image (corners of the rectified image)
######################################################################
# Perspective transform: cv2.warpPerspective(src, M, dsize, dst=None, flags=None, borderMode=None, borderValue=None)
# Affine transform:      cv2.warpAffine(src, M, dsize, dst=None, flags=None, borderMode=None, borderValue=None)
#   src: input image; dst: output image
#   M: transformation matrix (3x3 for warpPerspective, 2x3 for warpAffine)
#   dsize: size of the output image
#   flags: interpolation method
#   borderMode: border extrapolation mode
#   borderValue: value used for a constant border (0 by default)
#
# (Affine transformation) can rotate, translate, and scale; parallel lines remain parallel after the transformation.
# (Perspective transformation) maps views of the same object from different viewpoints in the pixel coordinate
# system; straight lines stay straight, but parallel lines may no longer be parallel.
#
# Note: cv2.warpPerspective is used with cv2.getPerspectiveTransform;
#       cv2.warpAffine is used with cv2.getAffineTransform (or cv2.getRotationMatrix2D).
######################################################################"""
```
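The notes above describe getPerspectiveTransform only by its inputs. As a sketch of what it computes, this NumPy code solves the standard 8-equation linear system for a 3x3 matrix with M[2, 2] fixed to 1, then applies the matrix in homogeneous coordinates (multiply, then divide by the third component). `perspective_matrix` and `apply_perspective` are hypothetical helper names; for four points with no three collinear, the result should agree with cv2.getPerspectiveTransform up to floating-point error, but treat that as an assumption to verify.

```python
import numpy as np


def perspective_matrix(src, dst):
    # Each pair (x, y) -> (u, v) gives two linear equations in h11..h32:
    #   u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), and likewise for v
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=np.float64), np.array(b, dtype=np.float64))
    return np.append(h, 1.0).reshape(3, 3)


def apply_perspective(M, pts):
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ M.T  # rows are (x', y', w')
    return homog[:, :2] / homog[:, 2:3]                      # divide by w'


src = [[20, 30], [390, 15], [410, 380], [35, 400]]  # tl, tr, br, bl of a tilted sheet (made-up)
w, h = 400, 380
dst = [[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]]
M = perspective_matrix(src, dst)
print(np.round(apply_perspective(M, src), 3))
```

Straight lines survive the mapping (the midpoint of the top edge still lands on the line y = 0), but the midpoint of an edge generally does not map to the midpoint of the output edge, which is why parallelism is not preserved.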

```python
def order_points(pts):
    rect = np.zeros((4, 2), dtype="float32")  # 4 corner points in total
    # Order the corners as 0, 1, 2, 3: top-left, top-right, bottom-right, bottom-left
    # Top-left has the smallest x + y, bottom-right the largest
    s = pts.sum(axis=1)
    rect[0] = pts[np.argmin(s)]  # np.argmin(): index of the minimum value
    rect[2] = pts[np.argmax(s)]  # np.argmax(): index of the maximum value
    # Top-right has the smallest y - x, bottom-left the largest
    diff = np.diff(pts, axis=1)  # np.diff(axis=1): y - x for each point (difference between the columns of a row)
    rect[1] = pts[np.argmin(diff)]
    rect[3] = pts[np.argmax(diff)]
    return rect


def four_point_transform(image, pts):
    rect = order_points(pts)  # ordered input corner points
    (tl, tr, br, bl) = rect  # the four corners of the quadrilateral, each an (x, y) pair
    # Compute the output width and height
    widthA = np.sqrt(((br[0] - bl[0]) ** 2) + ((br[1] - bl[1]) ** 2))
    widthB = np.sqrt(((tr[0] - tl[0]) ** 2) + ((tr[1] - tl[1]) ** 2))
    maxWidth = max(int(widthA), int(widthB))  # the longer of the top and bottom edges
    heightA = np.sqrt(((tr[0] - br[0]) ** 2) + ((tr[1] - br[1]) ** 2))
    heightB = np.sqrt(((tl[0] - bl[0]) ** 2) + ((tl[1] - bl[1]) ** 2))
    maxHeight = max(int(heightA), int(heightB))  # the longer of the left and right edges
    # Target corner positions after the transform
    dst = np.array([[0, 0], [maxWidth - 1, 0], [maxWidth - 1, maxHeight - 1], [0, maxHeight - 1]], dtype="float32")
    """###############################################################################
    # Compute the perspective transformation matrix: cv2.getPerspectiveTransform(rect, dst)
    ###############################################################################"""
    M = cv2.getPerspectiveTransform(rect, dst)
    """###############################################################################
    # Perspective warp: map the input quadrilateral through M onto the output rectangle
    ###############################################################################"""
    warped = cv2.warpPerspective(image, M, (maxWidth, maxHeight))
    return warped


def resize(image, width=None, height=None, inter=cv2.INTER_AREA):
    # Resize to the given width or height while keeping the aspect ratio
    dim = None
    (h, w) = image.shape[:2]
    if width is None and height is None:
        return image
    if width is None:
        r = height / float(h)
        dim = (int(w * r), height)
    else:
        r = width / float(w)
        dim = (width, int(h * r))
    resized = cv2.resize(image, dim, interpolation=inter)
    return resized
```

##############################################

image = cv2.imread(r'images\receipt.jpg')

ratio = image.shape[0] / 500.0 # After resize, the coordinates will change the same, so record the ratio of the image.

orig = image.copy()

image = resize(orig, height=500)

##############################################

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY) # Convert to gray scale image

gray = cv2.GaussianBlur(gray, (5, 5), 0) # Gaussian filtering operation

edged = cv2.Canny(gray, 75, 200) # Canny algorithm (edge detection)

##############################################

print("STEP 1: Edge Detection")

cv2.imshow("Image", image)

cv2.imshow("Edged", edged)

cv2.waitKey(0)

cv2.destroyAllWindows()

##############################################

# Profile Detection

cnts, hierarchy = cv2.findContours(edged.copy(), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE) # Profile Detection

cnts = sorted(cnts, key=cv2.contourArea, reverse=True)[:5] # Select the top five contours of all contours and sort them

for c in cnts:

peri = cv2.arcLength(c, True) # Calculate contour approximation

approx = cv2.approxPolyDP(c, 0.02 * peri, True) # find the polygonal fit curve of the contour

if len(approx) == 4: # If the current contour is four points (rectangles), then the current contour is the desired target.

screenCnt = approx

break

##############################################

print("STEP 2: Get Outline")

cv2.drawContours(image, [screenCnt], -1, (0, 255, 0), 2) # draw the detected contours on the original image

cv2.imshow("Outline", image)

cv2.waitKey(0)

cv2.destroyAllWindows()

##############################################

# Perspective transformations

warped = four_point_transform(orig, screenCnt.reshape(4, 2) * ratio) # get the outline to multiply by the scaled size of the image

warped = cv2.cvtColor(warped, cv2.COLOR_BGR2GRAY) # convert to grayscale

ref = cv2.threshold(warped, 100, 255, cv2.THRESH_BINARY)[1] # binary processing

ref = resize(ref, height=500)

##############################################

print("STEP 3: Chiral Transformation")

cv2.imshow("Scanned", ref)

cv2.waitKey(0)

cv2.destroyAllWindows()

##############################################

# OpenCV stores images in BGR order, whereas Matplotlib expects RGB; without conversion, red and blue are swapped when plotting (e.g. (0, 0, 255) is red in BGR but would be displayed as blue)

orig = cv2.cvtColor(orig, cv2.COLOR_BGR2RGB) # BGR to RGB conversion

edged = cv2.cvtColor(edged, cv2.COLOR_GRAY2RGB) # edged is single-channel, so convert from GRAY rather than BGR

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

ref = cv2.cvtColor(ref, cv2.COLOR_GRAY2RGB) # ref is a single-channel binary image

plt.subplot(2, 2, 1), plt.imshow(orig), plt.title('orig')

plt.subplot(2, 2, 2), plt.imshow(edged), plt.title('edged')

plt.subplot(2, 2, 3), plt.imshow(image), plt.title('contour')

plt.subplot(2, 2, 4), plt.imshow(ref), plt.title('rectangle')

plt.show()
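The contour search above relies on two OpenCV calls: `cv2.arcLength()` for the perimeter and `cv2.approxPolyDP()`, which the OpenCV documentation describes as an implementation of the Douglas-Peucker algorithm. The pure-Python sketch below shows why a slightly noisy document outline collapses to exactly four vertices when `epsilon = 0.02 * peri`. The helper names `perimeter`, `rdp` and `approx_closed` are hypothetical. Splitting the closed contour at the point farthest from its first point is an assumption of this sketch and may not match OpenCV's internal handling exactly.

```python
import math

def perimeter(points, closed=True):
    """Sum of segment lengths, analogous to cv2.arcLength(curve, closed)."""
    total = sum(math.dist(points[i], points[i + 1]) for i in range(len(points) - 1))
    if closed and len(points) > 1:
        total += math.dist(points[-1], points[0])  # closing segment
    return total

def _point_line_distance(p, a, b):
    """Perpendicular distance from point p to the line through a and b."""
    if a == b:
        return math.dist(p, a)
    (x0, y0), (x1, y1), (x2, y2) = p, a, b
    num = abs((x2 - x1) * (y1 - y0) - (x1 - x0) * (y2 - y1))
    return num / math.dist(a, b)

def rdp(points, epsilon):
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    # Find the interior point farthest from the chord a-b
    idx, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _point_line_distance(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    if dmax <= epsilon:
        return [a, b]  # every point lies close enough to the chord
    left = rdp(points[: idx + 1], epsilon)
    right = rdp(points[idx:], epsilon)
    return left[:-1] + right  # drop the duplicated split point

def approx_closed(points, epsilon):
    """Closed-contour variant: split at the point farthest from points[0]."""
    far = max(range(len(points)), key=lambda i: math.dist(points[0], points[i]))
    first = rdp(points[: far + 1], epsilon)
    second = rdp(points[far:] + [points[0]], epsilon)
    return first[:-1] + second[:-1]  # drop the shared endpoints

# A rectangle outline with small deviations, like a detected document edge
contour = [(0, 0), (50, 1), (100, 0), (101, 30), (100, 60), (50, 59), (0, 60), (1, 30)]
peri = perimeter(contour)
approx = approx_closed(contour, 0.02 * peri)
print(approx)  # [(0, 0), (100, 0), (100, 60), (0, 60)]
```

With a tolerance of 2% of the perimeter (about 6.4 pixels here), the one-pixel bumps are absorbed and only the four corners survive. A much smaller epsilon would keep the noisy midpoints, and the `len(approx) == 4` test would then fail.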

"""######################################################################

# Calculate the length of the curve: retval = cv2.arcLength(curve, closed)

# Input parameter: curve Contour (curve).

# closed If true, the outline is closed; if false, it is open. (Boolean type)

# Output parameters: retval The length (perimeter) of the contour.

######################################################################

# Find the polygonal fit curve of the contour: approxCurve = approxPolyDP(contourMat, 10, true)

# Input parameters: contourMat: contour point matrix (set)

# epsilon: (double type) the specified precision, i.e. the maximum distance between the original curve and the approximated curve.

# closed: (bool type) If true, the approximation curve is closed; conversely, if false, it is disconnected.

# Output parameters: approxCurve: contour point matrix (set); the current point set is the one that minimally accommodates the specified point set. The drawing is a polygon;

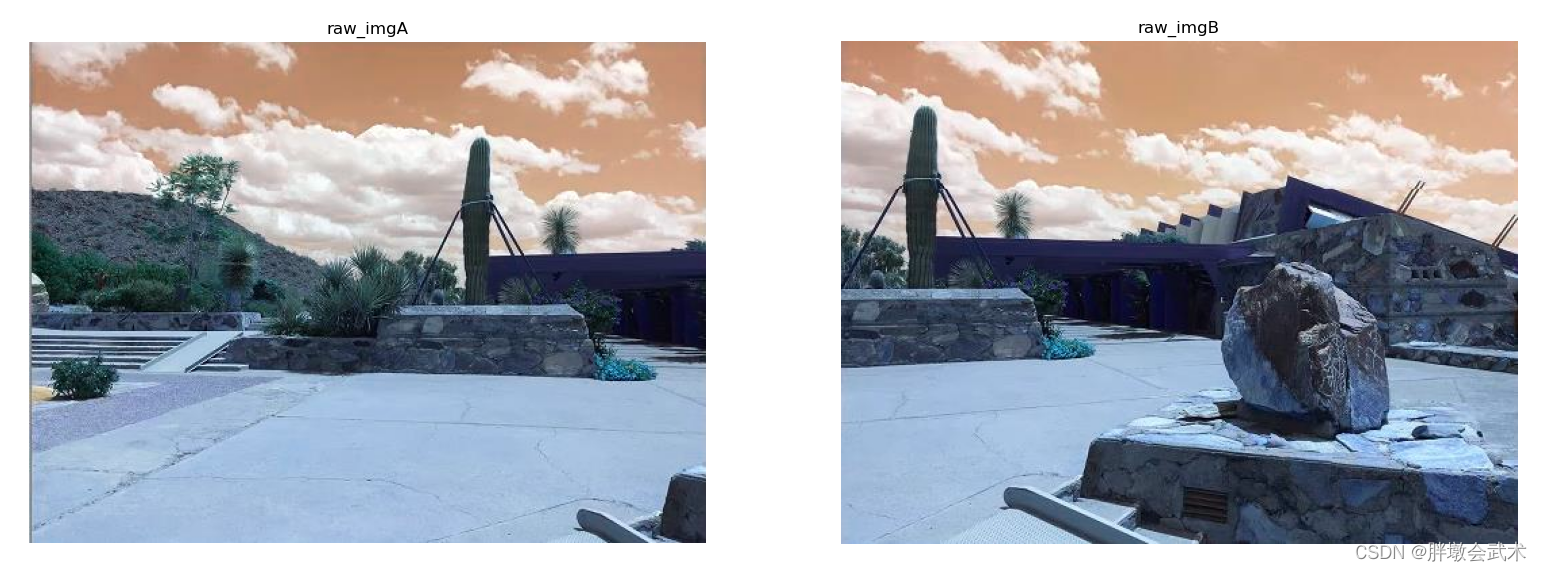

######################################################################"""detectAndDescribe(), matchKeypoints(), cv2.findHomography(), cv2.warpPerspective(), drawMatches()

Purpose: use the SIFT algorithm to implement panoramic stitching, merging two given images into one.

1: Detect keypoints and extract local invariant (SIFT) features from the two input images.

2: Match the features between the two images (Lowe's ratio test: compare the nearest-neighbor distance with the second-nearest-neighbor distance).

3: Estimate the homography matrix from the matched feature points using the RANSAC (Random Sample Consensus) algorithm.

4: Apply a perspective warp using the homography obtained in step 3.
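Step 3 works because a homography has eight degrees of freedom. The NumPy sketch below fixes h33 = 1 and solves the resulting 8x8 linear system from exactly four point correspondences. The helper names `homography_from_4_points` and `apply_homography` are hypothetical and not OpenCV API. Roughly speaking, `cv2.findHomography(..., cv2.RANSAC, ...)` repeats minimal fits like this on random subsets of the matches. It keeps the hypothesis with the most inliers, meaning matches whose reprojection error is below `reprojThresh`.

```python
import numpy as np

def homography_from_4_points(src, dst):
    """Solve for H (with H[2, 2] = 1) mapping 4 src points onto 4 dst points."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h11 x + h12 y + h13) / (h31 x + h32 y + 1), linearized
        A.append([x, y, 1, 0, 0, 0, -x * u, -y * u])
        b.append(u)
        # v = (h21 x + h22 y + h23) / (h31 x + h32 y + 1), linearized
        A.append([0, 0, 0, x, y, 1, -x * v, -y * v])
        b.append(v)
    h = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map (x, y) points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # rows of (x, y, 1)
    mapped = homog @ H.T
    return mapped[:, :2] / mapped[:, 2:3]  # divide by the third coordinate

# Recover a known (made-up) homography from the four corners of a 200x150 image
H_true = np.array([[1.2, 0.1, 30.0], [0.05, 0.9, -10.0], [0.001, 0.0005, 1.0]])
src = [(0, 0), (200, 0), (200, 150), (0, 150)]
dst = apply_homography(H_true, src)
H = homography_from_4_points(src, dst)
print(np.round(H, 4))
```

The four source points must be in general position, with no three collinear. Otherwise the 8x8 system is singular, which is one reason RANSAC discards degenerate samples.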

import cv2

import numpy as np

"""#########################################################

# Overview of the framework

# Define a Stitcher class: stitch(), detectAndDescribe(), matchKeypoints(), drawMatches()

# stitch() stitching function

# detectAndDescribe() detects the SIFT keypoints of an image and computes their feature descriptors

# matchKeypoints() matches the feature points of the two images

# cv2.findHomography() computes the homography (perspective transformation) matrix

# cv2.warpPerspective() applies the perspective transform (role: warp one image so the two can be stitched)

# drawMatches() visualizes the keypoint matches by connecting each matched pair with a straight line

#

# Note: cv2.warpPerspective() needs to be used with cv2.findHomography().

#########################################################"""

class Stitcher:

##################################################################################

def stitch(self, images, ratio=0.75, reprojThresh=4.0, showMatches=False):

(imageB, imageA) = images # unpack the inputs: the first (left) image is B, the second (right) image is A

(kpsA, featuresA) = self.detectAndDescribe(imageA) # detect the SIFT keypoints of images A and B and compute their descriptors

(kpsB, featuresB) = self.detectAndDescribe(imageB)

M = self.matchKeypoints(kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh) # match the feature points of the two images

if M is None: # None means feature matching failed: exit the algorithm

return None

# Otherwise, unpack the match result

(matches, H, status) = M # H is the 3x3 homography (perspective transformation) matrix

result = cv2.warpPerspective(imageA, H, (imageA.shape[1] + imageB.shape[1], imageA.shape[0])) # warp image A with H onto a canvas wide enough for both images

result[0:imageB.shape[0], 0:imageB.shape[1]] = imageB # place imageB at the left end of the result image

if showMatches: # Detect if you need to show image matches

vis = self.drawMatches(imageA, imageB, kpsA, kpsB, matches, status) # Generate matching images

return (result, vis)

return result

##################################################################################

def detectAndDescribe(self, image):

# gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY) # Convert a color image to grayscale

descriptor = cv2.xfeatures2d.SIFT_create() # create the SIFT detector/descriptor

"""#####################################################

# In OpenCV 3.x (with the contrib modules), cv2.xfeatures2d.SIFT_create() performs DoG keypoint detection and SIFT feature extraction; since OpenCV 4.4.0, SIFT is in the main module and is created with cv2.SIFT_create().

# In OpenCV 2.4, use cv2.FeatureDetector_create() for (DoG) keypoint detection.

#####################################################"""

(kps, features) = descriptor.detectAndCompute(image, None) # detect SIFT feature points and compute descriptors

kps = np.float32([kp.pt for kp in kps]) # convert the KeyPoint objects to a NumPy array of (x, y) coordinates

return (kps, features) # return the set of feature points, and the corresponding descriptive features

##################################################################################

def matchKeypoints(self, kpsA, kpsB, featuresA, featuresB, ratio, reprojThresh):

matcher = cv2.BFMatcher() # build the brute force matcher

rawMatches = matcher.knnMatch(featuresA, featuresB, 2) # for each descriptor in A, find its two nearest neighbors in B using KNN (k=2)

matches = []

for m in rawMatches:

if len(m) == 2 and m[0].distance < m[1].distance * ratio: # Lowe's ratio test: keep the match if the nearest distance is less than ratio times the second-nearest distance

matches.append((m[0].trainIdx, m[0].queryIdx)) # store (index in B, index in A), i.e. (trainIdx, queryIdx)

if len(matches) > 4: # compute the homography only when more than 4 matched pairs survive the filtering

# The homography is a 3x3 matrix with 9 entries; normalizing the last entry to 1 leaves 8 unknowns. Each matched pair (x, y) contributes 2 equations, so at least 4 pairs are required.

ptsA = np.float32([kpsA[i] for (_, i) in matches]) # coordinates of the matched points in A (queryIdx)

ptsB = np.float32([kpsB[i] for (i, _) in matches]) # coordinates of the matched points in B (trainIdx)

(H, status) = cv2.findHomography(ptsA, ptsB, cv2.RANSAC, reprojThresh) # estimate the homography from the matched points using RANSAC

return (matches, H, status)

return None # 4 or fewer matches: return None

##################################################################################

def drawMatches(self, imageA, imageB, kpsA, kpsB, matches, status):

(hA, wA) = imageA.shape[:2]

(hB, wB) = imageB.shape[:2]

vis = np.zeros((max(hA, hB), wA + wB, 3), dtype="uint8")

vis[0:hA, 0:wA] = imageA # place images A and B side by side (A on the left, B on the right)

vis[0:hB, wA:] = imageB

for ((trainIdx, queryIdx), s) in zip(matches, status):

if s == 1: # draw to visualization when point pair matching is successful

ptA = (int(kpsA[queryIdx][0]), int(kpsA[queryIdx][1]))

ptB = (int(kpsB[trainIdx][0]) + wA, int(kpsB[trainIdx][1]))

cv2.line(vis, ptA, ptB, (0, 255, 0), 1)

return vis # Return visualization results

##################################################################################

if __name__ == '__main__':

# Read the images to be stitched

imageA = cv2.imread("left_01.png")

imageB = cv2.imread("right_01.png")

# Stitching images into panoramas

stitcher = Stitcher() # create the stitcher

(result, vis) = stitcher.stitch([imageA, imageB], showMatches=True)

# Show all images

cv2.imshow("Image A", imageA)

cv2.imshow("Image B", imageB)

cv2.imshow("Keypoint Matches", vis)

cv2.imshow("Result", result)

cv2.waitKey(0)

cv2.destroyAllWindows()

OpenCV based panoramic stitching (Python) SIFT/SURF
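The filtering inside `matchKeypoints()` is Lowe's ratio test. The brute-force NumPy sketch below mirrors what `cv2.BFMatcher().knnMatch(featuresA, featuresB, 2)` followed by the ratio check does. Distances are Euclidean, matching BFMatcher's default `NORM_L2`. The function name `ratio_test_matches` is hypothetical, and the sample descriptors are made up 2-D points rather than real 128-D SIFT vectors.

```python
import numpy as np

def ratio_test_matches(featuresA, featuresB, ratio=0.75):
    """Return (indexB, indexA) pairs, the same order that matchKeypoints() stores."""
    A = np.asarray(featuresA, dtype=np.float64)
    B = np.asarray(featuresB, dtype=np.float64)
    if B.shape[0] < 2:
        return []  # the ratio test needs two neighbors
    # Pairwise Euclidean distances: dists[i, j] = ||A[i] - B[j]||
    dists = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]  # two nearest neighbors in B
        # Keep the match only if it is clearly better than the runner-up
        if row[nearest] < ratio * row[second]:
            matches.append((int(nearest), i))
    return matches

featuresA = [[0, 0], [5, 5], [10, 0]]
featuresB = [[0.1, 0], [10, 0.2], [5, 4], [5, 6]]
print(ratio_test_matches(featuresA, featuresB))  # [(0, 0), (1, 2)]
```

The second descriptor in A is equally close to two descriptors in B, so the test rejects it as ambiguous. This is exactly the kind of match that would otherwise feed outliers into the homography estimate.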

(iv) Parking lot space detection (Keras-based CNN classification) — pickle.dump(), pickle.load(), cv2.fillPoly(), cv2.bitwise_and(), cv2.circle(), cv2.HoughLinesP(), cv2.line()

The project is divided into three .py files: Parking.py (defines all the helper functions), train.py (trains the neural network) and park_test.py (detects the state of each parking space).
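The first processing step in Parking.py, `select_rgb_white_yellow()`, combines two calls. `cv2.inRange()` yields 255 where every channel lies within [lower, upper] and 0 elsewhere. `cv2.bitwise_and(image, image, mask=...)` then keeps only the masked pixels. As an illustration, the NumPy sketch below reproduces that logic on a tiny 2x2 image. NumPy stands in for OpenCV here, and the function names `in_range` and `apply_mask` are hypothetical.

```python
import numpy as np

def in_range(image, lower, upper):
    """255 where all channels are within [lower, upper] (inclusive), else 0."""
    inside = np.all((image >= lower) & (image <= upper), axis=-1)
    return np.where(inside, 255, 0).astype(np.uint8)

def apply_mask(image, mask):
    """Keep pixels where mask != 0 and set the rest to 0."""
    return np.where(mask[..., None] != 0, image, 0).astype(image.dtype)

# Tiny 2x2 BGR image: two bright pixels and two darker ones
image = np.array([[[200, 200, 200], [50, 60, 70]],
                  [[255, 130, 120], [119, 200, 200]]], dtype=np.uint8)
lower = np.uint8([120, 120, 120])  # same bounds as select_rgb_white_yellow()
upper = np.uint8([255, 255, 255])

mask = in_range(image, lower, upper)
masked = apply_mask(image, mask)
print(mask)  # [[255   0] [255   0]]
```

The pixel (119, 200, 200) is rejected because a single channel falls below the lower bound. The test is per-channel and all channels must pass, which is why the bounds act as a "bright in every channel" (white-ish) filter.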

(1)Parking.py

#####################################################

# Parking.py

#####################################################

import matplotlib.pyplot as plt

import cv2

import os

import glob

import numpy as np

class Parking:

def show_images(self, images, cmap=None):

cols = 2

rows = (len(images)+1)//cols

plt.figure(figsize=(15, 12))

for i, image in enumerate(images):

plt.subplot(rows, cols, i+1)

cmap = 'gray' if len(image.shape) == 2 else cmap

plt.imshow(image, cmap=cmap)

plt.xticks([])

plt.yticks([])

plt.tight_layout(pad=0, h_pad=0, w_pad=0)

plt.show()

def cv_show(self, name, img):

cv2.imshow(name, img)

cv2.waitKey(0)

cv2.destroyAllWindows()

def select_rgb_white_yellow(self, image):

# Filter out background information: keep only pixels whose color lies within the specified range

lower = np.uint8([120, 120, 120])

upper = np.uint8([255, 255, 255])

# (1) Pixels with any channel below lower or above upper become 0

# (2) Pixels with all channels between lower and upper become 255

white_mask = cv2.inRange(image, lower, upper)

self.cv_show('white_mask', white_mask)

masked = cv2.bitwise_and(image, image, mask=white_mask)

self.cv_show('masked', masked)

return masked

def convert_gray_scale(self, image):

return cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

def detect_edges(self, image, low_threshold=50, high_threshold=200):

return cv2.Canny(image, low_threshold, high_threshold)

def filter_region(self, image, vertices):

# Purpose: mask out unwanted areas and keep only the polygon containing all parking spaces (the mask polygon is filled with white)

#####################################################

# np.zeros_like(a, dtype=None, order='K')

# Function: returns an array of zeros with the same shape and type as the input array.

# Input parameters (1) a: input array

# (2) dtype: overrides the data type of the result (optional; defaults to the dtype of a)

# (3) order: memory layout, 'C' row-major or 'F' column-major (optional; the default 'K' keeps the layout of a)

#####################################################

mask = np.zeros_like(image) # new all-black mask (0 everywhere) of the same size as the image

if len(mask.shape) == 2:

#####################################################

# Fill arbitrary polygons: cv2.fillPoly(img, pts, color)

# Input Parameters (1) img: the image to draw on.

# (2) pts: vertex sets of the polygons

# (3) color: fill color; on a single-channel mask, 255 is white (use (255, 255, 255) for a 3-channel image)

#####################################################

cv2.fillPoly(mask, vertices, 255) # fill polygon (255: pure white)

self.cv_show('mask', mask)

#####################################################

# cv2.bitwise_and() # bitwise and

# cv2.bitwise_or() # or

# cv2.bitwise_not() # not

# cv2.bitwise_xor() # exclusive or

###################################

# dst = cv2.bitwise_and(src1, src2, mask=mask)

# src1/src2 are images of the same type and size

# mask=mask indicates the area to be extracted (optional parameter)

# (1&1=1,1&0=0,0&1=0,0&0=0)

#####################################################

return cv2.bitwise_and(image, mask) # bitwise and operation

def select_region(self, image):

# Purpose: manually select the region of interest, i.e. enclose all parking spaces of the original image in a polygon.

# The polygon vertices are set by hand (as fractions of the image width and height), as follows.

rows, cols = image.shape[:2] # image height (rows) and width (cols)

pt_1 = [cols*0.05, rows*0.90] # position point 1

pt_2 = [cols*0.05, rows*0.70] # position point 2

pt_3 = [cols*0.30, rows*0.55] # position point 3

pt_4 = [cols*0.6, rows*0.15] # position point 4

pt_5 = [cols*0.90, rows*0.15] # position point 5

pt_6 = [cols*0.90, rows*0.90] # position point 6

vertices = np.array([[pt_1, pt_2, pt_3, pt_4, pt_5, pt_6]], dtype=np.int32) # convert the data to a numpy array

point_img = image.copy()

point_img = cv2.cvtColor(point_img, cv2.COLOR_GRAY2RGB) # convert the single-channel edge image to 3 channels so the circles can be drawn in color

for point in vertices[0]: # Iterate over all position points

"""#####################################################

# Draw circle shape: cv2.circle(image, center, radius, color, thickness)

# Input Parameters (1) Image: Plot on this image.

# (2) Circle center: The coordinates of the center of the circle. The coordinates are represented as a tuple of two values, i.e. (X coordinate value, Y coordinate value).

# (3) Radius: the radius of a circle.

# (4) Color: The color of the circle's boundary line. For BGR, we pass a tuple. For example: (255, 0, 0) for blue color.

# (5) Thickness: A positive number indicates the thickness of the line. Where: -1 indicates a solid circle.

#####################################################"""

cv2.circle(point_img, (int(point[0]), int(point[1])), 10, (0, 0, 255), 4) # draw a hollow circle at each position point (center as plain Python ints)

self.cv_show('point_img', point_img)

return self.filter_region(image, vertices)

def hough_lines(self, image):

"""#####################################################

# Detect line segments in the image: cv2.HoughLinesP(image, rho=0.1, theta=np.pi / 10, threshold=15, minLineLength=9, maxLineGap=4)

# image: the input image, which should be the result of edge detection

# minLineLength: minimum line length; shorter segments are discarded

# maxLineGap: maximum allowed gap between points on the same line for them to be joined into one segment

# rho: distance resolution of the accumulator, in pixels

# theta: angle resolution of the accumulator, in radians

# threshold: minimum number of accumulator votes for a segment to be detected

#####################################################"""

return cv2.HoughLinesP(image, rho=0.1, theta=np.pi/10, threshold=15, minLineLength=9, maxLineGap=4)

def draw_lines(self, image, lines, color=[255, 0, 0], thickness=2, make_copy=True):

# Purpose: draw on the original image all lines that satisfy the conditions

if make_copy:

image = np.copy(image)

cleaned = []

for line in lines:

for x1, y1, x2, y2 in line: # A line consists of two points, each with coordinates (x, y)

# abs(y2-y1) <= 1 ---- keep only (nearly) horizontal lines, i.e. slope close to 0

# abs(x2-x1) >= 25 and abs(x2-x1) <= 55 ---- custom filter on segment length (tune as appropriate)

if abs(y2-y1) <= 1 and abs(x2-x1) >= 25 and abs(x2-x1) <= 55:

cleaned.append((x1, y1, x2, y2)) # save all lines that satisfy the condition

cv2.line(image, (x1, y1), (x2, y2), color, thickness) # on the original image, draw all lines that satisfy the condition

print("No. of lines detected: ", len(cleaned))

return image

def identify_blocks(self, image, lines, make_copy=True):

#####################################################

# Function: Recognizes all parking spaces

# Step 1: Filter some of the straight lines and extract the valid ones (i.e. the ones corresponding to the parking spaces)

# Step 2: Sorting Straight Lines

# Step 3: Find multiple columns, each corresponding to a row of cars

# Step 4: Get the coordinates of each column of rectangles

# Step 5: Draw the column rectangles out

#####################################################

if make_copy:

new_image = np.copy(image)

# Step 1: Filter some of the straight lines and extract the valid ones (i.e. the ones corresponding to the parking spaces)

cleaned = []

for line in lines:

for x1, y1, x2, y2 in line:

if abs(y2-y1) <=1 and abs(x2-x1) >=25 and abs(x2-x1) <= 55:

cleaned.append((x1, y1, x2, y2)) # save all lines that satisfy the condition

# Step 2: Sorting Straight Lines

import operator

list1 = sorted(cleaned, key=operator.itemgetter(0, 1)) # sort lines by x1, then y1 (left to right, then top to bottom)

# Step 3: Find multiple columns, each corresponding to a row of cars

clusters = {} # groups of lines belonging to the same column

dIndex = 0

clus_dist = 10 # maximum x-distance between adjacent lines in the same column (tune as appropriate)

for i in range(len(list1) - 1):

distance = abs(list1[i+1][0] - list1[i][0])

if distance <= clus_dist:

if dIndex not in clusters: clusters[dIndex] = []

clusters[dIndex].append(list1[i])

clusters[dIndex].append(list1[i + 1])

else:

dIndex += 1 # the gap is too large: start a new column

# Step 4: Get the coordinates of each column of rectangles

rects = {}

i = 0

for key in clusters: # iterate over the candidate columns (12 are kept in this example)

all_list = clusters[key]

cleaned = list(set(all_list))

if len(cleaned) > 5: # If the number is greater than 5, define it as a column

cleaned = sorted(cleaned, key=lambda tup: tup[1])

avg_y1 = cleaned[0][1] # y of the first (top) line of the column

avg_y2 = cleaned[-1][1] # y of the last (bottom) line of the column

avg_x1 = 0 # average the x coordinates, since the endpoints of different lines do not line up exactly

avg_x2 = 0

for tup in cleaned:

avg_x1 += tup[0]

avg_x2 += tup[2]

avg_x1 = avg_x1/len(cleaned) # x1 is the start of the rectangle

avg_x2 = avg_x2/len(cleaned) # x2 is the termination point of the rectangle

rects[i] = (avg_x1, avg_y1, avg_x2, avg_y2) # get the four point coordinates of each column of rectangles

i += 1

print("Num Parking Lanes: ", len(rects)) # 12 columns (rectangles) in this example

# Step 5: Draw the column rectangles out

buff = 7

for key in rects: # key is the column index

tup_topLeft = (int(rects[key][0] - buff), int(rects[key][1]))

tup_botRight = (int(rects[key][2] + buff), int(rects[key][3]))

cv2.rectangle(new_image, tup_topLeft, tup_botRight, (0, 255, 0), 3)

return new_image, rects

def draw_parking(self, image, rects, make_copy=True, color=[255, 0, 0], thickness=2, save=True):

if make_copy:

new_image = np.copy(image)

gap = 15.5 # fixed height (along the y-axis) of each parking space, in pixels

spot_dict = {} # dictionary: maps each parking space's coordinates to its ID

tot_spots = 0

# Manual fine-tuning: the detected rectangles are somewhat inaccurate, so hand-tuned per-column offsets are applied

adj_y1 = {0: 20, 1: -10, 2: 0, 3: -11, 4: 28, 5: 5, 6: -15, 7: -15, 8: -10, 9: -30, 10: 9, 11: -32}

adj_y2 = {0: 30, 1: 50, 2: 15, 3: 10, 4: -15, 5: 15, 6: 15, 7: -20, 8: 15, 9: 15, 10: 0, 11: 30}

adj_x1 = {0: -8, 1: -15, 2: -15, 3: -15, 4: -15, 5: -15, 6: -15, 7: -15, 8: -10, 9: -10, 10: -10, 11: 0}

adj_x2 = {0: 0, 1: 15, 2: 15, 3: 15, 4: 15, 5: 15, 6: 15, 7: 15, 8: 10, 9: 10, 10: 10, 11: 0}

for key in rects: # key is the column index

tup = rects[key]

x1 = int(tup[0] + adj_x1[key])

x2 = int(tup[2] + adj_x2[key])

y1 = int(tup[1] + adj_y1[key])

y2 = int(tup[3] + adj_y2[key])

cv2.rectangle(new_image, (x1, y1), (x2, y2), (0, 255, 0), 2) # draw the fine-tuned rectangle on the image

num_splits = int(abs(y2-y1)//gap) # estimate how many spaces fit in the column by dividing its height by the fixed gap (individual spaces cannot be detected exactly)

for i in range(0, num_splits+1): # draw num_splits + 1 horizontal dividing lines

y = int(y1 + i*gap)

#####################################################

# Draw a straight line: cv2.line(img, pt1, pt2, color, thickness)

# Input parameters img: the image to draw on

# pt1: start point of the line

# pt2: end point of the line

# color: color of the line

# thickness: line thickness (default 1)

#####################################################

# Draw the horizontal lines of all parking spaces

cv2.line(new_image, (x1, y), (x2, y), color, thickness)

if 0 < key < len(rects) - 1: # The first and last columns are single rows, the rest are double rows (depending on the situation).

# Draw the vertical line separating a double row of parking spaces

x = int((x1 + x2)/2) # the vertical line sits at the midpoint of x1 and x2

cv2.line(new_image, (x, y1), (x, y2), color, thickness)

# Count the parking spaces

if key == 0 or key == (len(rects) - 1): # single-row column: num_splits + 1 spaces

tot_spots += num_splits + 1

else: # double-row column: num_splits + 1 spaces on each side

tot_spots += 2*(num_splits + 1)

# Record every parking space in the dictionary: key = its coordinates, value = its ID

if key == 0 or key == (len(rects) - 1): # First or last column (single row parking)

for i in range(0, num_splits+1):

cur_len = len(spot_dict)

y = int(y1 + i*gap)

spot_dict[(x1, y, x2, y+gap)] = cur_len + 1 # coordinates of a space in the first or last (single-row) column

else: # double rows of parking spaces

for i in range(0, num_splits+1):

cur_len = len(spot_dict)

y = int(y1 + i*gap)

x = int((x1 + x2)/2) # Midpoint position corresponding to double row of parking spaces

spot_dict[(x1, y, x, y+gap)] = cur_len + 1 # Coordinates of the left row of a double row of parking spaces

spot_dict[(x, y, x2, y+gap)] = cur_len + 2 # Coordinates of the right row of a double row of parking spaces

print("total parking spaces: ", tot_spots, cur_len)

if save:

filename = 'with_parking.jpg'

cv2.imwrite(filename, new_image)

return new_image, spot_dict

def assign_spots_map(self, image, spot_dict, make_copy=True, color=[255, 0, 0], thickness=2):

if make_copy:

new_image = np.copy(image)

for spot in spot_dict.keys():

(x1, y1, x2, y2) = spot

cv2.rectangle(new_image, (int(x1), int(y1)), (int(x2), int(y2)), color, thickness)

return new_image

def save_images_for_cnn(self, image, spot_dict, folder_name='cnn_data'):

# Purpose: crop every parking space and save the crops to the specified folder as CNN training data. (Before training, the crops must be sorted by hand into two classes: occupied and empty.)

for spot in spot_dict.keys(): # iterate over all parking spaces; the dictionary keys are their coordinates

(x1, y1, x2, y2) = spot

(x1, y1, x2, y2) = (int(x1), int(y1), int(x2), int(y2)) # convert the coordinates to integers

spot_img = image[y1:y2, x1:x2] # crop the parking space

spot_img = cv2.resize(spot_img, (0, 0), fx=2.0, fy=2.0) # upscale by 2x (the original crops are too small)

spot_id = spot_dict[spot] # ID of the parking space, i.e. the dictionary value for this key

filename = 'spot' + str(spot_id) + '.jpg' # name each file after its space ID so it can be indexed later

print(spot_img.shape, filename, (x1, x2, y1, y2))

cv2.imwrite(os.path.join(folder_name, filename), spot_img) # save the parking spot image to the specified folder (cnn_data) path

def make_prediction(self, image, model, class_dictionary):

# Pre-processing

img = image/255.

# Add a batch dimension, giving a 4D tensor of shape (1, height, width, channels)

image = np.expand_dims(img, axis=0)

# Predict with the trained model

class_predicted = model.predict(image)

inID = np.argmax(class_predicted[0])

label = class_dictionary[inID]

return label

def predict_on_image(self, image, spot_dict, model, class_dictionary, make_copy=True, color=[0, 255, 0], alpha=0.5):

if make_copy:

new_image = np.copy(image)

overlay = np.copy(image)

self.cv_show('new_image', new_image)

cnt_empty = 0

all_spots = 0

for spot in spot_dict.keys():

all_spots += 1

(x1, y1, x2, y2) = spot

(x1, y1, x2, y2) = (int(x1), int(y1), int(x2), int(y2))

spot_img = image[y1:y2, x1:x2]

spot_img = cv2.resize(spot_img, (48, 48))

label = self.make_prediction(spot_img,model,class_dictionary) # Predict whether the image (parking space) is occupied or not

if label == 'empty':

cv2.rectangle(overlay, (int(x1), int(y1)), (int(x2), int(y2)), color, -1)

cnt_empty += 1

cv2.addWeighted(overlay, alpha, new_image, 1-alpha, 0, new_image) # blend the overlay with the image so empty spaces appear semi-transparent green

cv2.putText(new_image, "Available: %d spots" % cnt_empty, (30, 95), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

cv2.putText(new_image, "Total: %d spots" % all_spots, (30, 125), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

save = False

if save:

filename = 'with_marking.jpg'

cv2.imwrite(filename, new_image)

self.cv_show('new_image', new_image)

return new_image

def predict_on_video(self, video_name, final_spot_dict, model, class_dictionary, ret=True):

cap = cv2.VideoCapture(video_name)

count = 0

while ret:

ret, image = cap.read()

if not ret: # stop when the video ends (image is None)

break

count += 1

if count == 5: # process every fifth frame

count = 0

new_image = np.copy(image)

overlay = np.copy(image)

cnt_empty = 0

all_spots = 0

color = [0, 255, 0]

alpha = 0.5

for spot in final_spot_dict.keys():

all_spots += 1

(x1, y1, x2, y2) = spot

(x1, y1, x2, y2) = (int(x1), int(y1), int(x2), int(y2))

spot_img = image[y1:y2, x1:x2]

spot_img = cv2.resize(spot_img, (48,48))

label = self.make_prediction(spot_img, model, class_dictionary) # Predict whether the frame image (parking space) is occupied or not

if label == 'empty':

cv2.rectangle(overlay, (int(x1), int(y1)), (int(x2), int(y2)), color, -1)

cnt_empty += 1

cv2.addWeighted(overlay, alpha, new_image, 1 - alpha, 0, new_image)

cv2.putText(new_image, "Available: %d spots" % cnt_empty, (30, 95), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

cv2.putText(new_image, "Total: %d spots" % all_spots, (30, 125), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (255, 255, 255), 2)

cv2.imshow('frame', new_image)

if cv2.waitKey(10) & 0xFF == ord('q'):

break

cv2.destroyAllWindows()

cap.release()

(2)train.py

#####################################################

# train.py

#####################################################

import numpy

import os

from keras import applications

from keras.preprocessing.image import ImageDataGenerator

from keras import optimizers

from keras.models import Sequential, Model

from keras.layers import Dropout, Flatten, Dense, GlobalAveragePooling2D

from keras import backend as k

from keras.callbacks import ModelCheckpoint, LearningRateScheduler, TensorBoard, EarlyStopping

from keras.models import Sequential

from keras.layers.normalization import BatchNormalization

from keras.layers.convolutional import Conv2D

from keras.layers.convolutional import MaxPooling2D

from keras.initializers import TruncatedNormal

from keras.layers.core import Activation

from keras.layers.core import Flatten

from keras.layers.core import Dropout

from keras.layers.core import Dense

files_train = 0

files_validation = 0

########################################

cwd = os.getcwd() # Get the current working path

folder = 'train_data/train' # Training data

for sub_folder in os.listdir(folder):

path, dirs, files = next(os.walk(os.path.join(folder, sub_folder))) # Read data

files_train += len(files)

########################################

folder = 'train_data/test' # Test data

for sub_folder in os.listdir(folder):

path, dirs, files = next(os.walk(os.path.join(folder, sub_folder))) # Read data

files_validation += len(files)

########################################

print(files_train, files_validation)

########################################

# CNN training parameters

img_width, img_height = 48, 48

train_data_dir = "train_data/train"

validation_data_dir = "train_data/test"

nb_train_samples = files_train

nb_validation_samples = files_validation

batch_size = 32

epochs = 15

num_classes = 2

# Load VGG16 from keras.applications, which provides several pretrained network models (VGG16, VGG19, ResNet50, MobileNet, etc.)

# weights='imagenet' loads weights pretrained on ImageNet (transfer learning, since the current dataset is too small to train from scratch)

model = applications.VGG16(weights='imagenet', include_top=False, input_shape=(img_width, img_height, 3))

########################################

# Freeze the first 10 layers of the network

for layer in model.layers[:10]:

layer.trainable = False

x = model.output

x = Flatten()(x)

predictions = Dense(num_classes, activation="softmax")(x)

model_final = Model(inputs=model.input, outputs=predictions)

model_final.compile(loss="categorical_crossentropy", optimizer = optimizers.SGD(lr=0.0001, momentum=0.9), metrics=["accuracy"])

########################################

# Data augmentation

train_datagen = ImageDataGenerator(rescale=1./255, horizontal_flip=True, fill_mode="nearest",

zoom_range=0.1, width_shift_range=0.1, height_shift_range=0.1, rotation_range=5)

test_datagen = ImageDataGenerator(rescale=1./255, horizontal_flip=True, fill_mode="nearest",

zoom_range=0.1, width_shift_range=0.1, height_shift_range=0.1, rotation_range=5)

train_generator = train_datagen.flow_from_directory(train_data_dir, target_size=(img_height, img_width), batch_size=batch_size, class_mode="categorical")

validation_generator = test_datagen.flow_from_directory(validation_data_dir, target_size=(img_height, img_width), class_mode="categorical")

########################################

checkpoint = ModelCheckpoint("car1.h5", monitor='val_acc', verbose=1, save_best_only=True, save_weights_only=False, mode='auto', period=1)

early = EarlyStopping(monitor='val_acc', min_delta=0, patience=10, verbose=1, mode='auto')

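# In Keras 2, steps_per_epoch and validation_steps count batches, not samples

history_object = model_final.fit_generator(train_generator, steps_per_epoch=max(1, nb_train_samples // batch_size), epochs=epochs,

validation_data=validation_generator, validation_steps=max(1, nb_validation_samples // batch_size), callbacks=[checkpoint, early])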

# Files are automatically generated after training: car1.h5

# Note: the final accuracy is only about 90%. There is still room for improvement in (1) data preprocessing and (2) the network model.

(3)park_test.py
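park_test.py saves the parking-space dictionary, which maps coordinate tuples to space IDs, with `pickle` so that it can be reloaded later with `pickle.load()`. The round trip below uses `io.BytesIO` as a stand-in for the `spot_dict.pickle` file, so it runs without touching the disk. The sample coordinates are made up.

```python
import io
import pickle

# Hypothetical sample: keys are (x1, y1, x2, y2) spot coordinates, values are spot IDs
spot_dict = {(10, 20, 40, 35.5): 1, (40, 20, 70, 35.5): 2}

buffer = io.BytesIO()  # stands in for open('spot_dict.pickle', 'wb')
pickle.dump(spot_dict, buffer, protocol=pickle.HIGHEST_PROTOCOL)

buffer.seek(0)  # rewind before reading, like reopening the file with 'rb'
restored = pickle.load(buffer)
print(restored == spot_dict)  # True
```

Tuple keys and float coordinates come back unchanged, so the restored dictionary can be passed straight to `predict_on_image()` or `predict_on_video()`.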

#####################################################

# park_test.py

#####################################################

from __future__ import division

import matplotlib.pyplot as plt

import cv2

import os

import glob

import numpy as np

from keras.applications.imagenet_utils import preprocess_input

from keras.models import load_model

from keras.preprocessing import image

from PIL import Image

# Pillow (PIL) is a more basic image processing library for Python, mainly used for simple operations such as cropping, resizing and color processing.

# Compared with Pillow, OpenCV and scikit-image are more feature-rich and therefore more complex to use; they are mainly used for machine vision and image analysis, such as the well-known face recognition applications.

import pickle

from Parking import Parking # Import a custom library

cwd = os.getcwd()

def img_process(test_images, park): # park: instantiated class

white_yellow_images = list(map(park.select_rgb_white_yellow, test_images)) # select_rgb_white_yellow(): filters image background information

park.show_images(white_yellow_images)

########################################################################################################

gray_images = list(map(park.convert_gray_scale, white_yellow_images)) # convert_gray_scale(): Convert to grayscale

park.show_images(gray_images)

########################################################################################################

edge_images = list(map(lambda image: park.detect_edges(image), gray_images)) # detect_edges(): Use the cv2.Canny algorithm for edge detection

park.show_images(edge_images)

########################################################################################################

roi_images = list(map(park.select_region, edge_images)) # select_region(): filter out polygons (parking lot locations) and remove redundant regions from the image

park.show_images(roi_images)

########################################################################################################

list_of_lines = list(map(park.hough_lines, roi_images)) # hough_lines(): detect all lines in the image

########################################################################################################

line_images = []

for image, lines in zip(test_images, list_of_lines):

line_images.append(park.draw_lines(image, lines)) # draw_lines(): draw all lines in the image that satisfy the condition (i.e. parking lines)

park.show_images(line_images)

########################################################################################################

rect_images = [] # images with the column rectangles drawn (new_image)

rect_coords = [] # coordinates of the four corners of each rectangle (rects)

for image, lines in zip(test_images, list_of_lines):

new_image, rects = park.identify_blocks(image, lines) # identify_blocks(): Draw rectangles for each column

rect_images.append(new_image)

rect_coords.append(rects)

park.show_images(rect_images) # draw (rectangular) images

########################################################################################################

delineated = [] # Images with the rectangles and every individual parking space drawn

spot_pos = [] # One dictionary per image holding the coordinates of each parking space

for image, rects in zip(test_images, rect_coords):

new_image, spot_dict = park.draw_parking(image, rects) # draw_parking: Draw the parking space

delineated.append(new_image)

spot_pos.append(spot_dict)

park.show_images(delineated) # draw (rectangle + parking space) images

final_spot_dict = spot_pos[1] # spot_pos holds one parking-space dictionary per test image; [1] selects the one from the second image

print(len(final_spot_dict)) # Number of detected parking spaces

########################################################################################################

with open('spot_dict.pickle', 'wb') as handle: # 'wb': write in binary mode; the with statement closes the file automatically

"""#####################################################

# Python's pickle module implements object serialization and deserialization: a serialized object can be saved to disk and read back when needed. Most objects can be pickled (lists, dicts, strings, numbers, instances of module-level classes), but some, such as open file objects or lambdas, cannot.

#####################################################

# (1) Serialize (save to a file): pickle.dump(obj, file, protocol)

# obj: the object to store, e.g. a list, a string or a dictionary

# file: the destination file object, opened in binary write mode ("wb")

# protocol: optional; 0 is the original ASCII protocol, 1 the old binary format, 2 and above are newer binary formats. Python 3 defaults to pickle.DEFAULT_PROTOCOL, and pickle.HIGHEST_PROTOCOL selects the newest one available

# fw = open("pickleFileName.txt", "wb")

# pickle.dump("try", fw)

#####################################################

# (2) Deserialize (read from a file opened in "rb" mode): pickle.load(file)

# fr = open("pickleFileName.txt", "rb")

# result = pickle.load(fr)

#####################################################"""

pickle.dump(final_spot_dict, handle, protocol=pickle.HIGHEST_PROTOCOL) # Save the current preprocessing result and call it directly later.

########################################################################################################

park.save_images_for_cnn(test_images[0], final_spot_dict) # save_images_for_cnn(): custom Parking method; judging by its name, it crops each parking space and saves it as an image for the CNN (empty vs. occupied)

return final_spot_dict
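The Parking class itself is not shown in this article, so the internals of select_rgb_white_yellow() are not visible here. As a hedged sketch only, assuming the method works like cv2.inRange() followed by cv2.bitwise_and(), the NumPy code below keeps the white and yellow pixels of an RGB image (plt.imread returns RGB) and blacks out everything else. The helper names and the threshold values are hypothetical and would need tuning for real footage.

```python
import numpy as np


def in_range(image, lower, upper):
    """NumPy stand-in for cv2.inRange: 255 where every channel lies in [lower, upper], else 0."""
    lower = np.asarray(lower, dtype=image.dtype)
    upper = np.asarray(upper, dtype=image.dtype)
    inside = np.all((image >= lower) & (image <= upper), axis=-1)
    return np.where(inside, 255, 0).astype(np.uint8)


def select_white_yellow(image):
    """Keep white-ish and yellow-ish pixels of an RGB uint8 image; everything else becomes black.

    The thresholds are illustrative assumptions, not values taken from the Parking class.
    """
    white_mask = in_range(image, (200, 200, 200), (255, 255, 255))
    yellow_mask = in_range(image, (190, 190, 0), (255, 255, 255))
    mask = np.maximum(white_mask, yellow_mask)  # same as cv2.bitwise_or on 0/255 masks
    # Same as cv2.bitwise_and(image, image, mask=mask): multiply each pixel by 0 or 1
    return image * (mask[..., None] // 255)
```

Multiplying by a 0/1 mask keeps the uint8 dtype and cannot overflow, which is why it can replace the bitwise AND in this sketch.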

########################################################################################################
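select_region() is also part of the custom Parking class. The idea in its comment (keep a polygon around the parking lot, mask out the rest) is what cv2.fillPoly() on a blank mask followed by cv2.bitwise_and() does. The sketch below reproduces that in plain NumPy with the even-odd (ray casting) rule, so it can run without OpenCV; polygon_mask() and select_region() here are hypothetical stand-ins, and pixels lying exactly on the border may be classified slightly differently from cv2.fillPoly().

```python
import numpy as np


def polygon_mask(shape, vertices):
    """uint8 mask (255 inside, 0 outside) of a polygon, evaluated at pixel centres (even-odd rule)."""
    h, w = shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    px, py = xs + 0.5, ys + 0.5  # pixel centres
    inside = np.zeros((h, w), dtype=bool)
    pts = np.asarray(vertices, dtype=float)
    for (x1, y1), (x2, y2) in zip(pts, np.roll(pts, -1, axis=0)):
        crosses = (y1 > py) != (y2 > py)  # the edge spans this pixel row's y
        with np.errstate(divide="ignore", invalid="ignore"):
            x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)  # where the edge meets the row
        inside ^= crosses & (px < x_at)  # each edge to the right toggles inside/outside
    return np.where(inside, 255, 0).astype(np.uint8)


def select_region(image, vertices):
    """Zero out everything outside the polygon (like cv2.bitwise_and with a fillPoly mask)."""
    mask = polygon_mask(image.shape, vertices)
    if image.ndim == 3:
        mask = mask[..., None]  # broadcast over the color channels
    return np.where(mask == 255, image, 0).astype(image.dtype)
```

In practice the polygon vertices would be chosen as fractions of the image width and height so the same region works for every frame of the parking-lot camera.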

def keras_model(weights_path):

# from keras.models import load_model

model = load_model(weights_path) # load_model() car1.h5 (model generated after neural network training)

return model

def img_test(test_images, final_spot_dict, model, class_dictionary):

for i in range(len(test_images)):

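# predict_on_image(): custom Parking method that classifies each parking space as empty or occupied (uses the global park instance)

predicted_images = park.predict_on_image(test_images[i], final_spot_dict, model, class_dictionary)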

def video_test(video_name, final_spot_dict, model, class_dictionary):

name = video_name


park.predict_on_video(name, final_spot_dict, model, class_dictionary, ret=True)

if __name__ == '__main__':

# Folder name: test_images

test_images = [plt.imread(path) for path in glob.glob('test_images/*.jpg')]

weights_path = 'car1.h5'

video_name = 'parking_video.mp4'

class_dictionary = {0: 'empty', 1: 'occupied'} # Parking-space status predicted by the CNN: 0 = empty, 1 = occupied

park = Parking() # Instantiation of class

park.show_images(test_images)

final_spot_dict = img_process(test_images, park) # Image preprocessing

model = keras_model(weights_path) # load neural network trained model

img_test(test_images, final_spot_dict, model, class_dictionary)

video_test(video_name, final_spot_dict, model, class_dictionary)
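hough_lines() and draw_lines() belong to the custom Parking class, whose filtering rule is not shown. As a hedged sketch, assuming "lines that satisfy the condition" means near-horizontal segments of a plausible parking-line length, the function below filters output in the layout returned by cv2.HoughLinesP() (shape (N, 1, 4), each row x1, y1, x2, y2). The thresholds are illustrative assumptions.

```python
import numpy as np


def filter_parking_lines(lines, max_dy=1, min_dx=25, max_dx=55):
    """Keep near-horizontal segments whose horizontal extent lies in [min_dx, max_dx].

    `lines` uses the cv2.HoughLinesP layout: shape (N, 1, 4) with x1, y1, x2, y2.
    The default thresholds are assumptions for illustration only.
    """
    if lines is None:  # cv2.HoughLinesP returns None when no segment is found
        return []
    kept = []
    for x1, y1, x2, y2 in np.asarray(lines).reshape(-1, 4):
        if abs(y2 - y1) <= max_dy and min_dx <= abs(x2 - x1) <= max_dx:
            kept.append((int(x1), int(y1), int(x2), int(y2)))
    return kept
```

Each kept tuple can then be drawn with cv2.line(image, (x1, y1), (x2, y2), color, thickness).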

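The script above pickles final_spot_dict once so later runs can reuse it without repeating the preprocessing. A minimal round trip with the standard pickle module looks like this; the dictionary contents (corner coordinates mapped to a spot index) and the helper names are hypothetical.

```python
import os
import pickle
import tempfile


def save_spots(spot_dict, path):
    """Serialize a dictionary to `path` using the newest available pickle protocol."""
    with open(path, "wb") as handle:  # pickle needs a file opened in binary mode
        pickle.dump(spot_dict, handle, protocol=pickle.HIGHEST_PROTOCOL)


def load_spots(path):
    """Read back an object written by save_spots()."""
    with open(path, "rb") as handle:
        return pickle.load(handle)


# Hypothetical spot dictionary: (x1, y1, x2, y2) of a parking space -> its index
spots = {(10, 20, 40, 60): 0, (40, 20, 70, 60): 1}
demo_path = os.path.join(tempfile.mkdtemp(), "spot_dict.pickle")
save_spots(spots, demo_path)
print(load_spots(demo_path) == spots)  # True
```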

(v) Answer key recognition and marking — cv2.putText(), cv2.countNonZero()

import cv2 # opencv reads in BGR format

import numpy as np

import matplotlib.pyplot as plt # Matplotlib is RGB

def order_points(pts):

# There are four coordinate points

rect = np.zeros((4, 2), dtype="float32")

# Order the points as 0: top-left, 1: top-right, 2: bottom-right, 3: bottom-left

# Top-left has the smallest x + y, bottom-right the largest

s = pts.sum(axis=1)

rect[0] = pts[np.argmin(s)]

rect[2] = pts[np.argmax(s)]

# Top-right has the smallest y - x, bottom-left the largest (np.diff along axis 1 gives y - x)

diff = np.diff(pts, axis=1)

rect[1] = pts[np.argmin(diff)]

rect[3] = pts[np.argmax(diff)]

return rect
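To see why the sum and difference rules in order_points() work, here is a worked example on four corners given out of order (the coordinates are made up). x + y is smallest at the top-left and largest at the bottom-right; y - x is smallest at the top-right and largest at the bottom-left.

```python
import numpy as np

# Four corners of a slightly rotated sheet, deliberately out of order, as (x, y)
pts = np.array([[410, 380], [30, 20], [20, 390], [400, 10]], dtype="float32")

s = pts.sum(axis=1)               # x + y -> [790, 50, 410, 410]
d = np.diff(pts, axis=1).ravel()  # y - x -> [-30, -10, 370, -390]

top_left = pts[np.argmin(s)]
bottom_right = pts[np.argmax(s)]
top_right = pts[np.argmin(d)]
bottom_left = pts[np.argmax(d)]
ordered = np.array([top_left, top_right, bottom_right, bottom_left])
print(ordered.tolist())  # [[30.0, 20.0], [400.0, 10.0], [410.0, 380.0], [20.0, 390.0]]
```

One caveat: if the sheet is rotated by about 45 degrees, two corners can share the same x + y, and the rule becomes ambiguous.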

def four_point_transform(image, pts):

# Get input coordinate points

rect = order_points(pts)

(tl, tr, br, bl) = rect

# Output width and height: the longer of each pair of opposite edges

widthA = np.sqrt(((br[0] - bl[0]) ** 2) + ((br[1] - bl[1]) ** 2))

widthB = np.sqrt(((tr[0] - tl[0]) ** 2) + ((tr[1] - tl[1]) ** 2))

maxWidth = max(int(widthA), int(widthB))

heightA = np.sqrt(((tr[0] - br[0]) ** 2) + ((tr[1] - br[1]) ** 2))

heightB = np.sqrt(((tl[0] - bl[0]) ** 2) + ((tl[1] - bl[1]) ** 2))

maxHeight = max(int(heightA), int(heightB))

# Transformed coordinate positions

dst = np.array([[0, 0], [maxWidth - 1, 0], [maxWidth - 1, maxHeight - 1], [0, maxHeight - 1]], dtype="float32")

# Calculate the transformation matrix

M = cv2.getPerspectiveTransform(rect, dst) # Compute the 3x3 perspective (homography) matrix that maps rect onto dst

warped = cv2.warpPerspective(image, M, (maxWidth, maxHeight)) # Apply the perspective transform: the quadrilateral rect becomes an upright maxWidth x maxHeight image

return warped
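cv2.getPerspectiveTransform() finds the 3x3 matrix M (normalized so that M[2, 2] = 1) that maps the four source corners exactly onto the four destination corners. Each point pair contributes two linear equations, so four pairs give an 8 x 8 system. The NumPy sketch below solves that system directly. It illustrates the math and is not OpenCV's actual implementation; the function names are hypothetical.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Solve for the 3x3 homography M (M[2, 2] = 1) mapping 4 src points onto 4 dst points.

    For each pair (x, y) -> (u, v):
        u = (m00 x + m01 y + m02) / (m20 x + m21 y + 1)
        v = (m10 x + m11 y + m12) / (m20 x + m21 y + 1)
    Multiplying out the denominators gives two equations that are linear in the 8 unknowns.
    """
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    m = np.linalg.solve(np.array(A, dtype=float), np.array(b, dtype=float))
    return np.append(m, 1.0).reshape(3, 3)


def apply_homography(M, pts):
    """Map (x, y) points through M, dividing by the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ M.T
    return homog[:, :2] / homog[:, 2:3]
```

cv2.warpPerspective() then fills each output pixel by sending its coordinates through the inverse of M and sampling the input image there.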

def sort_contours(cnts, method="left-to-right"):

reverse = False

i = 0

if method == "right-to-left" or method == "bottom-to-top":

reverse = True

if method == "top-to-bottom" or method == "bottom-to-top":

i = 1

boundingBoxes = [cv2.boundingRect(c) for c in cnts]

(cnts, boundingBoxes) = zip(*sorted(zip(cnts, boundingBoxes), key=lambda b: b[1][i], reverse=reverse))

return cnts, boundingBoxes
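sort_contours() zips each contour with its bounding box and sorts the pairs by either the box's x (index 0) or y (index 1). The same pattern can be tried without OpenCV using made-up labels and boxes in place of real contours and cv2.boundingRect() results:

```python
# Hypothetical labels and (x, y, w, h) boxes standing in for contours and cv2.boundingRect() output
labels = ["C", "A", "B"]
boxes = [(200, 10, 30, 30), (20, 12, 30, 30), (110, 8, 30, 30)]

# method="left-to-right": i = 0, so the pairs are ordered by x
by_x, boxes_by_x = zip(*sorted(zip(labels, boxes), key=lambda b: b[1][0]))
print(by_x)  # ('A', 'B', 'C')

# method="top-to-bottom": i = 1, so the pairs are ordered by y
by_y, _ = zip(*sorted(zip(labels, boxes), key=lambda b: b[1][1]))
print(by_y)  # ('B', 'C', 'A')
```

The grading code uses both orders: all bubbles top-to-bottom first, then each group of five left-to-right.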

"""#############################################################

# if __name__ == '__main__':

# (1) "__name__" is a built-in variable that holds the name of the current module.

# (2) When a module is run directly, its "__name__" is "__main__".

# (3) When it is imported by another module, its "__name__" is the module's own name (the file name without .py).

#############################################################"""

# Answer key: keys are question (row) indices, values are the index of the correct option in that row (0 = first option)

ANSWER_KEY = {0: 1, 1: 4, 2: 0, 3: 3, 4: 1}

# Image preprocessing

image = cv2.imread(r"images/test_01.png")

contours_img = image.copy()

gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY) # Convert to gray scale image

blurred = cv2.GaussianBlur(gray, (5, 5), 0) # Gaussian filter - removes noise

edged = cv2.Canny(blurred, 75, 200) # Canny operator edge detection

cnts, hierarchy = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) # Contour detection (OpenCV 4.x returns two values)

cv2.drawContours(contours_img, cnts, -1, (0, 0, 255), 3) # Draw all contours (the answer sheet outline) in red

###################################################################

# Extracting the answer key and making perspective changes

docCnt = None

if len(cnts) > 0:

cnts = sorted(cnts, key=cv2.contourArea, reverse=True) # Sort contours by area, largest first

for c in cnts: # iterate over each contour

peri = cv2.arcLength(c, True) # Perimeter of the closed contour

approx = cv2.approxPolyDP(c, 0.02*peri, True) # Approximate the contour by a polygon (max. deviation: 2% of the perimeter)

if len(approx) == 4: # the found outline is a quadrilateral (corresponding to four vertices)

docCnt = approx

break

warped = four_point_transform(gray, docCnt.reshape(4, 2)) # Perspective transform to a top-down view of the answer sheet

warped1 = warped.copy()

thresh = cv2.threshold(warped, 0, 255, cv2.THRESH_BINARY_INV | cv2.THRESH_OTSU)[1] # With THRESH_OTSU the threshold argument (0) is ignored and Otsu's method chooses it automatically; [1] is the binary image
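THRESH_OTSU picks the global threshold that best separates the histogram into two classes, i.e. the one that maximizes the between-class variance. The NumPy sketch below implements that criterion for a uint8 image so the idea can be checked by hand. It should land close to the value cv2.threshold() returns as its first output, though tie-breaking may differ; otsu_threshold() is a hypothetical helper, not an OpenCV function.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu threshold t for a uint8 image: class 0 is {0..t}, class 1 is {t+1..255}.

    t maximizes w0 * w1 * (mu0 - mu1) ** 2, computed here with raw pixel counts
    (dividing by the total pixel count would not change the argmax).
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.int64)
    levels = np.arange(256, dtype=np.int64)
    w0 = np.cumsum(hist)             # number of pixels <= t
    w1 = w0[-1] - w0                 # number of pixels > t
    sum0 = np.cumsum(hist * levels)  # intensity sum of class 0
    sum1 = sum0[-1] - sum0           # intensity sum of class 1
    with np.errstate(divide="ignore", invalid="ignore"):
        mu0 = sum0 / w0
        mu1 = sum1 / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
    return int(np.argmax(np.nan_to_num(between)))  # empty classes (0/0) count as 0
```

THRESH_BINARY_INV then maps pixels above t to 0 and the rest to 255, which is np.where(gray > t, 0, 255): the dark pencil marks become white foreground.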

###############################

thresh_Contours = thresh.copy()

cnts, hierarchy = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) # find each circle outline

cv2.drawContours(thresh_Contours, cnts, -1, (0, 0, 255), 3) # Draw all contours

###################################################################

# Extract all valid choices in the answer key (circle)

questionCnts = [] # Extract the outline of each option

for c in cnts:

(x, y, w, h) = cv2.boundingRect(c) # Bounding box of the contour

ar = w / float(h) # Aspect ratio

if w >= 20 and h >= 20 and 0.9 <= ar <= 1.1: # Keep roughly circular bubbles at least 20 x 20 px (tune for your images)

questionCnts.append(c)

questionCnts = sort_contours(questionCnts, method="top-to-bottom")[0] # Sort all options from top to bottom

###############################

correct = 0

for (q, i) in enumerate(np.arange(0, len(questionCnts), 5)): # There are 5 options per row

cnts = sort_contours(questionCnts[i:i + 5])[0] # Sort the five bubbles of this row from left to right

bubbled = None

for (j, c) in enumerate(cnts): # iterate over the five results corresponding to each row

mask = np.zeros(thresh.shape, dtype="uint8") # Blank (all-zero) mask that will contain only the current bubble

cv2.drawContours(mask, [c], -1, 255, -1) # Draw the bubble in white; thickness -1 fills the contour

# cv_show('mask', mask) # Show each option

mask = cv2.bitwise_and(thresh, thresh, mask=mask) # Keep only the thresholded pixels inside the current bubble

total = cv2.countNonZero(mask) # Number of filled pixels; the bubble with the most is taken as the marked answer

if bubbled is None or total > bubbled[0]: # Record the maximum number

bubbled = (total, j)

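color = (0, 0, 255) # Red (BGR) by default: the marked answer is wrong

k = ANSWER_KEY[q] # Index of the correct option for question q

# The marked bubble matches the answer key: switch to green and count it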

if k == bubbled[1]:

color = (0, 255, 0)

correct += 1

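cv2.drawContours(warped, [cnts[k]], -1, color, 3) # Outline the correct option (green if answered correctly, red otherwise); warped is grayscale here, so the colors only appear when drawing on a BGR copy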

###################################################################
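Once each bubble's filled-pixel count is known, grading reduces to taking the maximum per row and comparing it with the key. The counts below are made up for illustration (they stand in for cv2.countNonZero() results), and answer_key mirrors ANSWER_KEY from the script. np.argmax returns the first index on ties, just like the `total > bubbled[0]` test in the loop.

```python
import numpy as np

# Hypothetical filled-pixel counts per bubble: rows = questions, columns = options
counts = np.array([
    [120, 830, 110, 115, 118],
    [100, 105, 98, 112, 790],
    [812, 101, 99, 103, 97],
    [95, 99, 805, 101, 100],
    [102, 798, 104, 97, 99],
])
answer_key = {0: 1, 1: 4, 2: 0, 3: 3, 4: 1}  # same values as ANSWER_KEY above

marked = counts.argmax(axis=1)  # the most heavily filled bubble in each row
correct = sum(int(marked[q] == k) for q, k in answer_key.items())
score = correct / len(answer_key) * 100
print(marked.tolist(), correct, "{:.2f}%".format(score))  # [1, 4, 0, 2, 1] 4 80.00%
```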

# Show results

score = (correct / 5.0) * 100 # Calculate total score

print("[INFO] score: {:.2f}%".format(score))

"""###################################################################

# Add text to an image: cv2.putText(img, str(i), (123, 456), cv2.FONT_HERSHEY_PLAIN, 2, (0, 255, 0), 3)

# The parameters are, in order: image, text, bottom-left corner of the text, font face, font scale, color, line thickness