This article looks at how to implement a license plate recognition system in Python. It introduces the basic principles of license plate recognition technology and the common algorithms and methods, then explains in detail how to use Python to implement a complete license plate recognition system.

Introduction

Application Scenarios of License Plate Recognition Technology

License plate recognition technology has a wide range of application scenarios, and it plays an important role in traffic management, security monitoring and smart city construction.

- Traffic Management: License plate recognition technology plays a vital role in traffic management. Through real-time automatic recognition of vehicle license plate numbers, traffic management departments can accurately record the information of each vehicle, realize the functions of violation monitoring and electronic toll collection, etc., and improve the efficiency and safety of traffic processes.

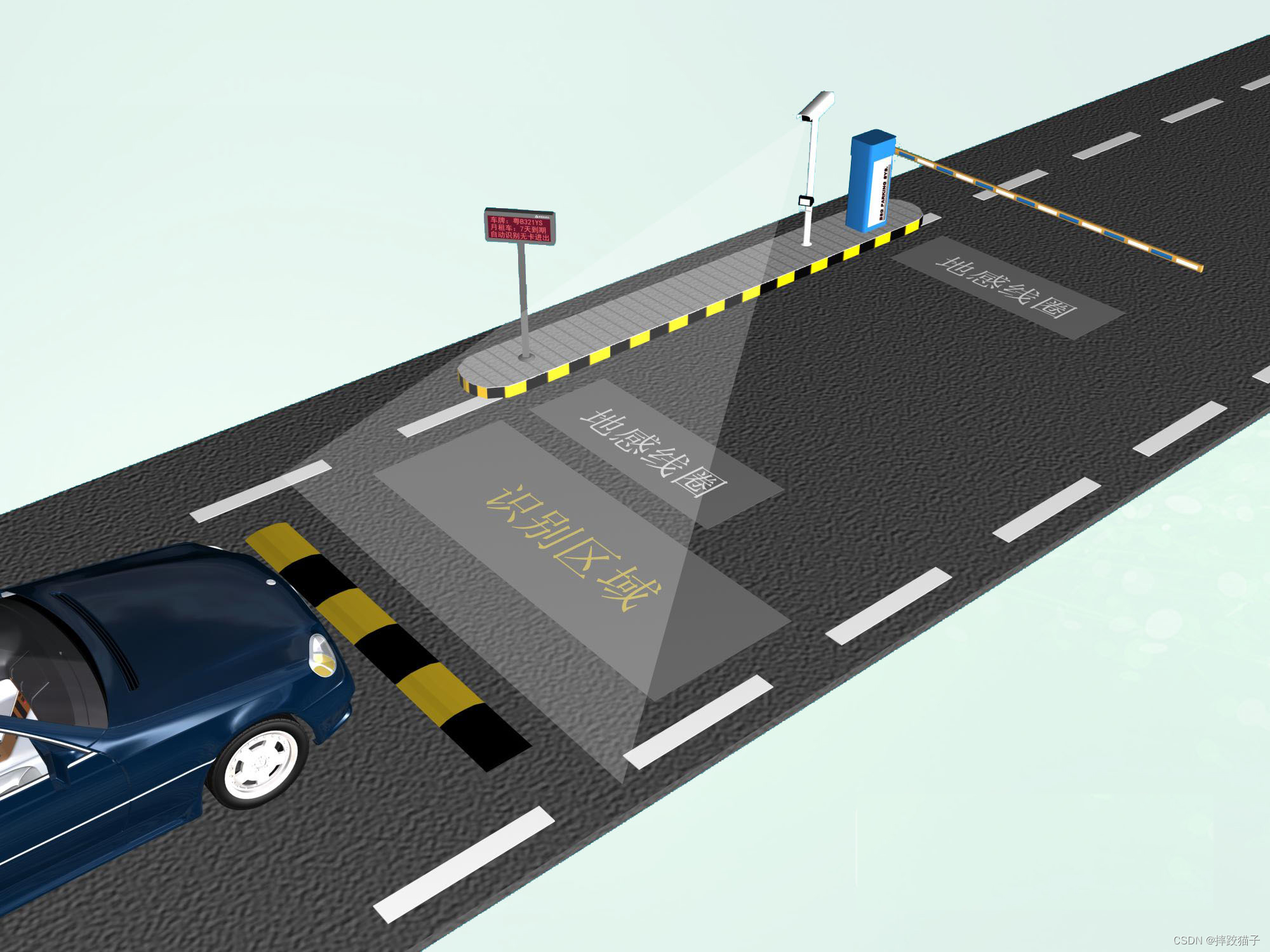

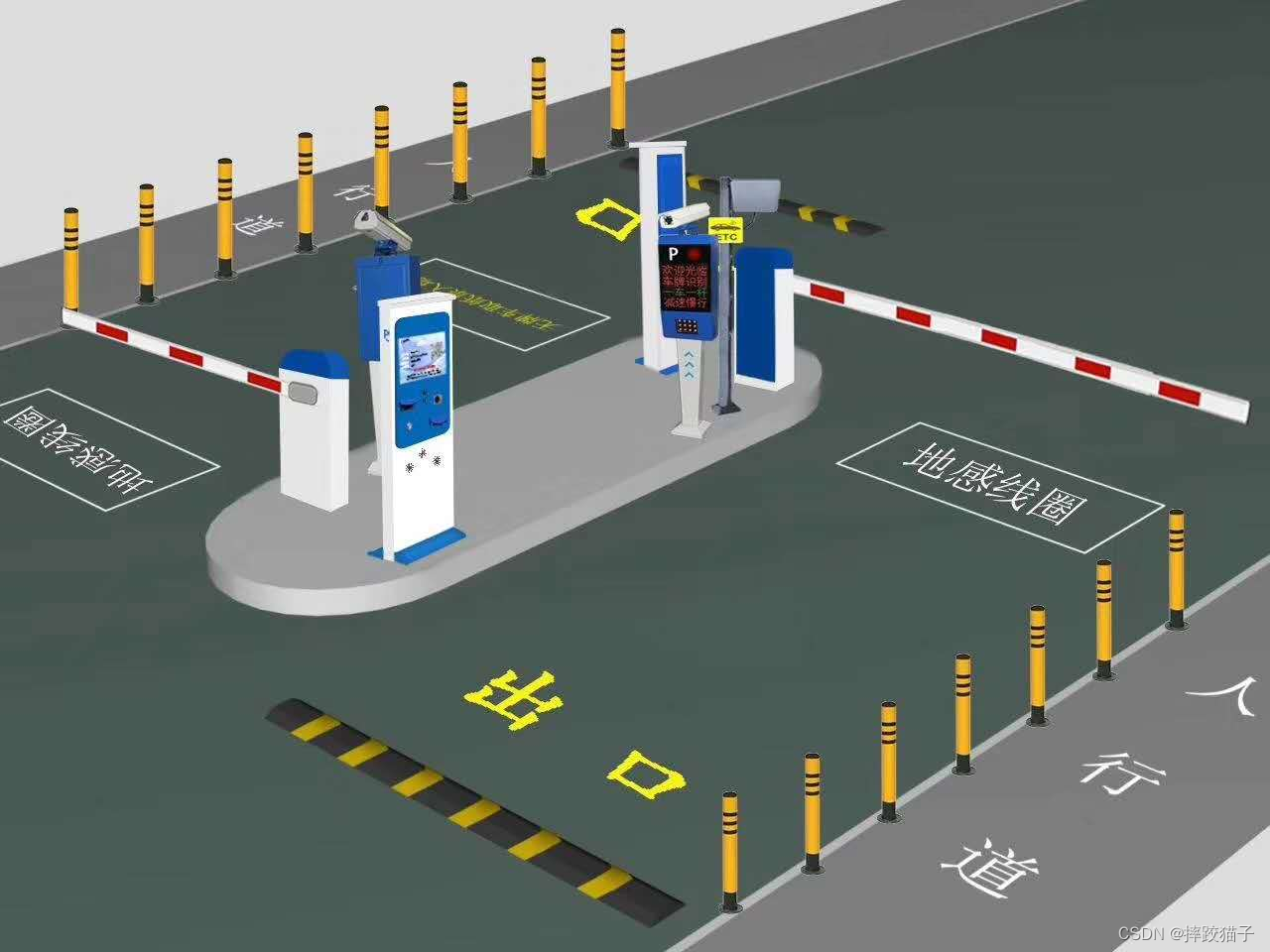

- Intelligent parking systems: By recognizing the license plate numbers of vehicles entering and leaving a parking lot, an intelligent parking system can identify and bill vehicles automatically and provide navigation and guidance services, which simplifies the parking process and makes parking management more convenient and efficient.

- Security monitoring: License plate recognition technology is widely used in the field of security monitoring. By detecting and recognizing the license plates of vehicles, it enables real-time monitoring and recording of vehicle entries and exits, helps security personnel track and investigate suspicious vehicles, and supports public security work.

- Logistics management: License plate recognition technology can be applied in logistics management to help logistics companies achieve automatic identification and tracking of transport vehicles, improve the accuracy and timeliness of goods distribution, and optimize the logistics and transportation process.

- Smart city construction: license plate recognition technology is an important part of smart city construction. Through large-scale application of license plate recognition technology, traffic congestion monitoring and regulation, intelligent traffic light control, intelligent parking management and other functions can be realized, providing more efficient and intelligent solutions for urban traffic operation and management.

Advantages of Python in License Plate Recognition

Python has the advantages of rich open source resources, concise and easy-to-read syntax, cross-platform, strong community support, and extensibility in the field of license plate recognition. These features make the development of license plate recognition systems using Python more efficient, flexible and convenient.

- Rich open source libraries and tools: Python has many excellent open source image processing and machine learning libraries, such as OpenCV, Pillow, Scikit-learn, etc. These libraries provide a wealth of image processing and machine learning algorithms, which enable developers to easily implement the functions and processes of the license plate recognition system.

- Simple and easy-to-read syntax: Python is known for its simple, readable syntax, which makes developing and debugging license plate recognition systems more efficient. Python code is also easier for beginners to understand, which lowers the barrier to learning and use.

- Cross-platform: Python is a cross-platform programming language that works well on different operating systems such as Windows, Linux and macOS. This means that developers can develop and deploy license plate recognition systems in different environments, providing greater flexibility and adaptability.

- Strong Community Support: Python has a large and active developer community that provides a wealth of tutorials, documentation, and sample code. Both beginners and experienced developers can get support and solve problems from the community to speed up the development process and improve system performance.

- Extensibility: Python is an extensible language that can integrate modules and libraries written in other programming languages (such as C++). For license plate recognition systems that need to handle large-scale data and complex algorithms, developers can improve the operational efficiency and performance of the system by calling the underlying C/C++ libraries.

Overview of License Plate Recognition Technology

Fundamentals of image processing and computer vision

The basic principles of image processing and computer vision are interrelated and complementary, and are often used in combination to extract, analyze, and recognize license plate information in images in applications such as license plate recognition. These principles provide important methodological and technical support for realizing accurate and efficient image processing and computer vision applications.

Fundamentals of image processing:

- Image acquisition: image data acquired by camera or other devices.

- Image pre-processing: perform operations such as denoising, enhancing, and adjusting the brightness and contrast of the original image in order to optimize the image quality and facilitate subsequent processing.

- Feature extraction: extract useful feature information from the image, such as edges, corner points, texture, etc. Commonly used methods include Canny edge detection, Harris corner point detection, Gabor filtering and so on.

- Image segmentation: dividing an image into different regions or objects. Common methods include threshold segmentation, edge detection, region growing, etc.

- Target recognition and tracking: recognizing and tracking the target or region of interest in an image. Commonly used methods include template matching, feature matching, and target detection algorithms (e.g., Haar features, HOG features, deep learning).

- Image reconstruction and synthesis: reconstructing a complete or high-resolution image based on existing image information, or synthesizing multiple images into a single image.

Fundamentals of computer vision:

- Feature extraction and description: extracting useful features from an image and describing them, such as corner points, edges, texture, etc. Common feature description algorithms include SIFT, SURF, ORB, etc.

- Object detection and recognition: automatic detection and recognition of objects in images. Commonly used methods include feature-based classifiers (e.g., support vector machines, random forests), cascade classifiers, deep learning (e.g., convolutional neural networks), etc.

- 3D reconstruction and camera measurement: deducing the 3D structure of an object or reconstructing a 3D scene through images from multiple viewpoints. Commonly used methods include stereo matching, structured light, parallax method, and multi-view geometry.

- Motion analysis and tracking: motion analysis and target tracking of image sequences. Common methods include optical flow method, Kalman filtering, particle filtering and so on.

- Image retrieval and classification: retrieval and classification of images according to their content for image library management and fast retrieval of image information. Common methods include color histograms, local binary patterns, deep learning features, etc.

The basic process of license plate recognition

The basic process of license plate recognition can be divided into five steps: image acquisition, preprocessing, license plate localization, character segmentation, and character recognition. In practical applications, it is also necessary to handle adverse conditions such as poor lighting, occlusion, and license plate deformation. Different algorithms and techniques can be used at each step, so the methods and parameters need to be chosen and adjusted according to the specific scenario and application requirements.

Commonly used license plate recognition algorithms and methods

There are many license plate recognition algorithms and methods. Different ones suit different application scenarios and datasets, so they need to be selected and optimized according to actual needs. Here is a brief introduction to several commonly used ones:

- Color-feature-based license plate localization: The algorithm extracts the color features of the license plate region, such as blue or yellow, then performs binarization and morphological transformation on the image, and finally selects the eligible region as the license plate region. The algorithm is simple and easy to understand but sensitive to color and lighting changes.

- Deep-learning-based license plate localization and recognition: Deep learning methods are widely used in image processing and computer vision. The basic idea is to learn feature representations and models automatically from large amounts of data in order to perform tasks such as target detection or recognition. In license plate recognition, deep learning models such as convolutional neural networks (CNN) can be used for license plate localization and character recognition. This approach has high recognition accuracy, but requires a large amount of training data and computational resources.

- License plate character segmentation based on morphological transformation: Character segmentation is a key step in license plate recognition, which aims to separate the characters on the plate to facilitate subsequent character recognition. A commonly used approach is to apply dilation and erosion with suitable structuring elements to the character region so as to obtain clear character outlines, and then to segment the characters using the vertical projection (the number of foreground pixels in each column) or other features.

- Character recognition based on SVM and feature extraction: Support Vector Machine (SVM) is a commonly used classification algorithm, which can classify and recognize license plate characters after feature extraction and training. Commonly used features include the Gray-Level Co-occurrence Matrix (GLCM) and Local Binary Patterns (LBP). These features mainly describe the texture and shape information of the characters and can give robust, accurate character recognition.
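The vertical-projection idea mentioned above for character segmentation can be sketched in a few lines of NumPy. This is a minimal illustration rather than a complete segmenter: it assumes the plate has already been cropped and binarized so that character pixels are nonzero, and the function name segment_by_projection and its min_width parameter are hypothetical names chosen for this example.

```python
import numpy as np

def segment_by_projection(binary_plate, min_width=2):
    """Return (start, end) column ranges of characters in a binary plate image.

    binary_plate: 2-D array, character pixels are nonzero, background is 0.
    min_width: hypothetical noise filter; narrower runs are discarded.
    """
    # Vertical projection: number of foreground pixels in each column.
    projection = (binary_plate > 0).sum(axis=0)
    has_ink = projection > 0

    segments = []
    start = None
    for col, ink in enumerate(has_ink):
        if ink and start is None:
            start = col                      # a character begins
        elif not ink and start is not None:
            if col - start >= min_width:     # a character ends
                segments.append((start, col))
            start = None
    # Close a character that touches the right border.
    if start is not None and len(has_ink) - start >= min_width:
        segments.append((start, len(has_ink)))
    return segments

# Toy 5x12 "plate" with two blobs of ink separated by empty columns.
plate = np.zeros((5, 12), dtype=np.uint8)
plate[1:4, 1:4] = 1    # first character occupies columns 1-3
plate[0:5, 6:10] = 1   # second character occupies columns 6-9
print(segment_by_projection(plate))  # [(1, 4), (6, 10)]
```

Each returned pair is a half-open column range, so a character can be cropped with plate[:, start:end]. Real plates usually need extra handling for touching characters, frames, and rivets, which this sketch does not attempt.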

Preparation

Installation and configuration of the Python environment

The steps to install and configure the Python environment are as follows:

- Download Python: First you need to download the Python installation package from the official Python website (https://www.python.org). Choose the corresponding version according to your operating system; it is generally recommended to download the latest stable version.

- Run the installer: Double-click the downloaded installer to run it. This opens the Python installation wizard.

- Select installation options: In the installation wizard, you can select options such as customizing the installation path, adding Python to system environment variables, and so on. If you are not familiar with them, you can install using the default options.

- Complete the installation: Wait for the installer to complete the installation process, which may take some time.

- Verify the installation: After the installation is complete, open a command line tool (e.g., Command Prompt or PowerShell for Windows, or Terminal for Mac/Linux) and enter the following command to verify successful installation:

      python --version

  If the version number of Python is displayed, the installation was successful.

- Configure environment variables (optional): If you did not choose to add Python to the system environment variables during installation, you can configure them manually. Add the Python installation path to the system PATH environment variable so that you can use python commands directly from any location.

- Install third-party libraries (optional): Depending on your specific needs, you can use the pip tool to install Python's third-party libraries. For example, use the following command to install NumPy, a popular scientific computing library:

      pip install numpy
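Once the libraries are installed, a short script can confirm that they are importable. The sketch below uses only the standard library; the helper name missing_packages and the list of required modules are assumptions made for this example, chosen to match the libraries used later in this article.

```python
import importlib.util
import sys

def missing_packages(module_names):
    """Return the module names that cannot be imported in this environment."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]

# These are import names, which can differ from pip package names
# (cv2 is installed with "pip install opencv-python",
#  sklearn with "pip install scikit-learn").
required = ["numpy", "cv2", "sklearn"]

print("Python version:", sys.version.split()[0])
missing = missing_packages(required)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are available.")
```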

Data set preparation

To implement license plate recognition based on Python, you first need to prepare the dataset required for training and testing.

- Collecting license plate image data: collect image data containing license plates, which can be obtained in different ways, such as on-site photography, public datasets, etc. Ensure that the dataset contains license plate images of multiple types and angles to improve the robustness of the algorithm.

- Dataset division: the collected dataset is divided into a training set and a test set. Usually, most of the data is used to train the model and a small amount of data is used to evaluate the performance of the model. The dataset can be divided according to a 70-30 or 80-20 ratio, or more complex schemes such as cross-validation can be used.

- Labeling data: each image is labeled to mark the license plate area with a bounding box and to provide the corresponding license plate characters. Labeling can be done using image processing tools or specialized labeling tools. Ensure that the labeling is accurate and consistent so that the model learns the location and character information of the license plate.

- Data augmentation (optional): augmentation operations are performed on the training set to expand the dataset and increase the diversity of the data. For example, operations such as rotation, translation, scaling, and brightness adjustment can be performed to improve the generalization ability of the model.

- Data preprocessing: perform preprocessing operations on image data, such as resizing, normalizing, grayscaling, etc. Ensure that the size and format of all images match the model requirements.

- Data loading: write Python code that loads image data using a suitable library (e.g. OpenCV, PIL) and converts it to an input format acceptable to the model (e.g. NumPy array or tensor).
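Three of the steps above (dataset division, brightness adjustment, and normalization) can be sketched with NumPy alone. This is an illustration on random stand-in data, not a full data pipeline; the function names split_dataset, adjust_brightness, and normalize are hypothetical, and real images would be loaded from disk instead of generated.

```python
import numpy as np

def split_dataset(images, labels, test_ratio=0.2, seed=42):
    """Shuffle and split arrays into training and test parts."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(images))
    n_test = int(round(len(images) * test_ratio))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return images[train_idx], images[test_idx], labels[train_idx], labels[test_idx]

def adjust_brightness(image, delta):
    """Brightness augmentation: add delta and clip to the valid 0-255 range."""
    return np.clip(image.astype(np.int16) + delta, 0, 255).astype(np.uint8)

def normalize(image):
    """Scale 8-bit pixel values to floats in [0, 1]."""
    return image.astype(np.float32) / 255.0

# Stand-in data: 10 random 32x64 grayscale "plates" with dummy labels.
data_rng = np.random.default_rng(0)
images = data_rng.integers(0, 256, size=(10, 32, 64), dtype=np.uint8)
labels = np.arange(10)

X_train, X_test, y_train, y_test = split_dataset(images, labels)
brighter = adjust_brightness(X_train[0], 40)
print(X_train.shape, X_test.shape)  # (8, 32, 64) (2, 32, 64)
print(normalize(brighter).dtype)    # float32
```

Augmentation is applied to the training images only, so that the test set still measures performance on unmodified data.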

Image Preprocessing

Image reading and grayscale conversion

You can use Python’s OpenCV library to read the image and perform a grayscale conversion.

    import cv2

    # Read the image (cv2.imread returns None if the file cannot be read)
    img = cv2.imread('image.jpg')

    # Convert the image to grayscale
    gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

In the code, the cv2.imread('image.jpg') function reads the image named 'image.jpg'; modify the file name and path to suit your own situation. The cv2.cvtColor() function converts the color image to a grayscale image: the first parameter is the original image and the second parameter is the conversion code. cv2.COLOR_BGR2GRAY indicates that an image in the BGR color space is converted to a grayscale image.

Each pixel in a grayscale image has a single value ranging from 0 to 255, so the output grayscale image has only one channel. Many later processing steps work on grayscale images because a single channel requires less computation and is faster to process.
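To make the conversion concrete, the following NumPy sketch applies the standard luminance weighting, Y = 0.299 R + 0.587 G + 0.114 B, to an image stored in BGR channel order. It is meant to illustrate the arithmetic only; the function name bgr_to_gray is hypothetical, and results from an optimized library routine may differ by a rounding step on some pixels.

```python
import numpy as np

def bgr_to_gray(bgr):
    """Weighted channel sum of a BGR image: Y = 0.299 R + 0.587 G + 0.114 B."""
    b = bgr[..., 0].astype(np.float64)
    g = bgr[..., 1].astype(np.float64)
    r = bgr[..., 2].astype(np.float64)
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

# A 1x3 test image: pure blue, pure green, pure red (in BGR order).
pixels = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255]]], dtype=np.uint8)
gray = bgr_to_gray(pixels)
print(gray.shape)  # (1, 3): the channel axis is gone
print(gray)        # [[ 29 150  76]]
```

Green contributes most to the result and blue least, which is why a blue plate background appears dark in the grayscale image.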

Image Enhancement and Filtering

Image enhancement and filtering are commonly used techniques in image processing and can be implemented using the OpenCV library.

Image Enhancement:

    import cv2

    # Read the image
    img = cv2.imread('image.jpg')

    # Increase contrast and brightness
    alpha = 1.5  # contrast multiplier
    beta = 30    # brightness offset
    enhanced_img = cv2.convertScaleAbs(img, alpha=alpha, beta=beta)

In the code, the cv2.convertScaleAbs() function is used to increase the contrast and brightness of an image. The alpha parameter is the contrast multiplier: the larger it is, the higher the contrast. The beta parameter is the brightness offset: the larger it is, the brighter the image. Each output value is the absolute value of alpha × pixel + beta, saturated to the range 0 to 255.
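The effect of alpha and beta is easy to check by hand on a few pixel values. The NumPy sketch below reproduces the arithmetic (scale, offset, absolute value, saturate to 8 bits); the function name scale_abs is hypothetical and the code is an illustration, not a replacement for the OpenCV call.

```python
import numpy as np

def scale_abs(image, alpha=1.0, beta=0.0):
    """Compute |alpha * pixel + beta| and saturate the result to 0-255."""
    scaled = np.abs(alpha * image.astype(np.float64) + beta)
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)

pixels = np.array([[0, 50, 100, 150, 200]], dtype=np.uint8)
enhanced = scale_abs(pixels, alpha=1.5, beta=30)
print(enhanced)  # [[ 30 105 180 255 255]]
```

Note that 150 and 200 both end up at 255: with large alpha or beta values, bright regions saturate and lose detail, so these parameters are best tuned on representative images.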

Image Filtering:

    import cv2

    # Read the image
    img = cv2.imread('image.jpg')

    # Apply mean filtering
    kernel_size = (5, 5)  # filter size
    filtered_img = cv2.blur(img, kernel_size)

In the code, the cv2.blur() function is used to perform mean filtering. The kernel_size parameter gives the size of the filter; (5, 5) means a square 5×5 filter. The mean filter smooths (blurs) the image by replacing each pixel with the average value of the neighborhood around it.
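What the mean filter does to isolated noise can be seen on a tiny example. The sketch below is a plain NumPy mean filter for a 2-D grayscale image; the function name mean_filter is hypothetical, and mirroring the image at the borders is an assumption made for this example.

```python
import numpy as np

def mean_filter(image, ksize=3):
    """Mean filter for a 2-D grayscale image with an odd, square kernel."""
    pad = ksize // 2
    # Assumption: mirror the image at the borders (edge pixel not repeated).
    padded = np.pad(image.astype(np.float64), pad, mode="reflect")
    h, w = image.shape
    total = np.zeros((h, w), dtype=np.float64)
    # Sum the ksize x ksize shifted copies, then divide by the kernel area.
    for dy in range(ksize):
        for dx in range(ksize):
            total += padded[dy:dy + h, dx:dx + w]
    return np.rint(total / (ksize * ksize)).astype(np.uint8)

# A flat gray image with one bright noise pixel in the middle.
img = np.full((5, 5), 10, dtype=np.uint8)
img[2, 2] = 100
smoothed = mean_filter(img, ksize=3)
print(smoothed[2, 2], smoothed[0, 0])  # 20 10
```

The noise pixel drops from 100 to 20, but its energy is spread over the surrounding 3×3 block. The same averaging also softens character edges, which is the trade-off to keep in mind when choosing the kernel size.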

Edge Detection and Contour Extraction

Edge Detection:

    import cv2

    # Read the image and convert it to grayscale
    img = cv2.imread('image.jpg')
    gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Edge detection using the Canny algorithm
    edges = cv2.Canny(gray_img, threshold1=30, threshold2=100)

In the code, the cv2.Canny() function is used to perform edge detection. gray_img is the input grayscale image. threshold1 and threshold2 are the lower and upper thresholds of the hysteresis step and control the sensitivity of edge detection: pixels whose gradient exceeds the upper threshold are accepted as edges, pixels below the lower threshold are rejected, and pixels in between are kept only if they are connected to an accepted edge. Adjust the two thresholds to suit your images in order to get a suitable edge map.
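The role of the two thresholds can be illustrated in isolation. The sketch below implements only a double-threshold (hysteresis) step on an array of precomputed gradient magnitudes; it is not the full Canny algorithm, which also smooths the image, computes gradients, and thins edges first. The function name hysteresis_threshold is hypothetical.

```python
import numpy as np

def hysteresis_threshold(magnitude, low, high):
    """Keep strong pixels (> high) plus weak pixels (> low) connected to them."""
    strong = magnitude > high
    candidate = magnitude > low
    edges = strong.copy()
    h, w = magnitude.shape
    while True:
        # Grow the current edge set by one pixel in all 8 directions...
        padded = np.pad(edges, 1)
        grown = np.zeros_like(edges)
        for dy in range(3):
            for dx in range(3):
                grown |= padded[dy:dy + h, dx:dx + w]
        # ...but only into pixels that pass the lower threshold.
        grown &= candidate
        if np.array_equal(grown, edges):
            return edges
        edges = grown

magnitude = np.array([[0, 50, 120, 50, 0, 50, 0]])
result = hysteresis_threshold(magnitude, low=30, high=100)
print(result.astype(int))  # [[0 1 1 1 0 0 0]]
```

The two weak responses next to the strong one (value 120) are kept, while the isolated weak response further right is dropped. Lowering the upper threshold admits more edges; raising the lower threshold prunes more weak ones.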

Contour Extraction:

    import cv2

    # Read the image and convert it to grayscale
    img = cv2.imread('image.jpg')
    gray_img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Edge detection using the Canny algorithm
    edges = cv2.Canny(gray_img, threshold1=30, threshold2=100)

    # Find the contours
    contours, _ = cv2.findContours(edges, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

    # Draw the contours
    contour_img = cv2.drawContours(img.copy(), contours, -1, (0, 255, 0), 2)

In the code, the cv2.findContours() function is used to find the contours in the image. The first parameter is the input image, generally the result of edge detection; the second parameter is the contour retrieval mode, where cv2.RETR_EXTERNAL means that only the outermost contours are extracted; the third parameter is the contour approximation method, where cv2.CHAIN_APPROX_SIMPLE selects a simplified contour representation. The returned contours value is a list of all contours found.

Then, the cv2.drawContours() function draws the contours onto the original image for visualization. img.copy() creates a copy of the image on which the contours are drawn, -1 means that all contours are drawn, (0, 255, 0) is the color of the drawn contours (green in BGR order), and 2 is the line thickness.
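One common way to move from contours toward license plate localization is to take the bounding rectangle of each contour and keep only rectangles whose size and shape are plate-like. The sketch below works on plain (x, y, w, h) tuples, the format returned by cv2.boundingRect(); the function name plate_candidates and all threshold values are illustrative assumptions that would need tuning for a given camera setup and regional plate format.

```python
def plate_candidates(boxes, min_area=1000, min_ratio=2.0, max_ratio=6.0):
    """Keep boxes whose area and width/height ratio look plate-like.

    boxes: iterable of (x, y, w, h) tuples, e.g. from cv2.boundingRect(contour).
    The default thresholds are illustrative assumptions, not standard values.
    """
    kept = []
    for x, y, w, h in boxes:
        if h == 0 or w * h < min_area:
            continue  # degenerate or too small to be a readable plate
        if min_ratio <= w / h <= max_ratio:
            kept.append((x, y, w, h))
    return kept

boxes = [
    (10, 10, 20, 20),     # too small
    (50, 200, 180, 50),   # ratio 3.6, plausible plate
    (300, 40, 60, 200),   # tall and narrow
    (0, 0, 640, 40),      # ratio 16, too wide
]
print(plate_candidates(boxes))  # [(50, 200, 180, 50)]
```

With the contours found above, the input could be built as [cv2.boundingRect(c) for c in contours], and each surviving box cropped from the image with img[y:y + h, x:x + w].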

The two-value return shown above applies to OpenCV 4 and later (and to OpenCV 2.x). In OpenCV 3.x, the cv2.findContours() function returns three values (the image, the contours, and the hierarchy), so the call needs to be modified:

    _, contours, _ = cv2.findContours(edges, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)

Character Recognition

Feature Extraction and Selection

Feature extraction and selection is an important step in the field of machine learning and data mining and can be accomplished by different methods.

Feature Extraction:

- Direct extraction: extracting specific features directly from the raw data. For example, for image data, features can be extracted using color histograms, texture features, or shape descriptors.

- Statistical features: statistical features are extracted by statistical analysis of the data. For example, mean, variance, maximum value, minimum value, etc.

- Frequency domain features: the data is converted to the frequency domain and the frequency domain features are extracted. Commonly used methods include Fast Fourier Transform (FFT) and Wavelet Transform.

- Model-based feature extraction: features are extracted by training a model. For example, the output of the convolutional layer of a convolutional neural network (CNN) is used as image features.
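As a concrete instance of directly extracted, statistical features for character images, the sketch below computes "zoning" features: the character image is divided into a grid and the fraction of foreground pixels in each cell becomes one feature. This is one simple hand-crafted choice among many, shown under the assumption that the character is already segmented and binarized; the function name zoning_features is hypothetical.

```python
import numpy as np

def zoning_features(char_image, grid=(4, 4)):
    """Fraction of foreground pixels in each cell of a grid over the character."""
    rows, cols = grid
    h, w = char_image.shape
    binary = (char_image > 0).astype(np.float64)
    features = []
    for row_block in np.array_split(np.arange(h), rows):
        for col_block in np.array_split(np.arange(w), cols):
            cell = binary[np.ix_(row_block, col_block)]
            features.append(cell.mean())
    return np.array(features)

# Toy 8x8 character: a vertical stroke in the left half.
char = np.zeros((8, 8), dtype=np.uint8)
char[:, 2:4] = 255
features = zoning_features(char, grid=(2, 2))
print(features)  # [0.5 0.  0.5 0. ]
```

The resulting vector has a fixed length (rows × cols) regardless of the image size, which makes it suitable as input to classifiers such as SVM.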

Feature Selection:

- Filter methods: features are evaluated and ranked individually, and those most strongly related to the target variable are selected. Commonly used criteria include mutual information, the chi-square test, correlation coefficients, etc.

- Wrapper methods: feature selection is treated as a subset selection problem, where the best subset is found by trying different subsets of features and evaluating the model performance on each. Commonly used methods include recursive feature elimination (RFE), genetic algorithms, etc.

- Embedded methods: feature selection is built into model training, and the best features are selected during the training process. Commonly used methods include L1 regularization, feature importance of decision trees, etc.

Sample code demonstrating feature extraction and selection using the sklearn library:

    from sklearn.feature_selection import SelectKBest, chi2
    from sklearn.linear_model import LogisticRegression
    from sklearn.pipeline import Pipeline

    # Assume X (features) and y (labels) are already defined;
    # chi2 requires all feature values in X to be non-negative

    # Create the feature selector
    feature_selector = SelectKBest(score_func=chi2, k=10)

    # Create the classifier
    classifier = LogisticRegression()

    # Create a pipeline combining feature selection and the classifier
    pipeline = Pipeline([('selector', feature_selector), ('classifier', classifier)])

    # Train the model
    pipeline.fit(X, y)

    # Predict using the selected features
    predictions = pipeline.predict(X)

In the code, SelectKBest is used as the feature selector with chi2 (the chi-square test) as the scoring function. The k parameter is the number of features to keep. Pipeline then combines the feature selector and the classifier into a single pipeline, so that training and prediction can be run directly on the data.

Classifier training and optimization

Training and optimizing the classifier is a key step in machine learning. The following sample code uses the sklearn library to train a classifier and tune its hyperparameters:

    from sklearn.model_selection import GridSearchCV
    from sklearn.ensemble import RandomForestClassifier

    # Assume X (features) and y (labels) are already defined

    # Create the classifier
    classifier = RandomForestClassifier()

    # Set the range of parameters to be tuned
    param_grid = {
        'n_estimators': [100, 200, 300],
        'max_depth': [None, 5, 10],
        'min_samples_split': [2, 5, 10]
    }

    # Parameter optimization and model selection using GridSearchCV
    grid_search = GridSearchCV(classifier, param_grid=param_grid, cv=5)
    grid_search.fit(X, y)

    # Output the optimal parameters and the corresponding model performance
    print("Best Parameters: ", grid_search.best_params_)
    print("Best Score: ", grid_search.best_score_)

    # Use the model with the optimal parameters for training and prediction
    best_classifier = grid_search.best_estimator_
    best_classifier.fit(X, y)
    # X_new is assumed to hold new, unseen samples
    predictions = best_classifier.predict(X_new)

In the code, a classifier object is created with RandomForestClassifier(). Then the parameter range to be tuned, param_grid, is defined; it maps each parameter to be optimized to a list of candidate values.

Next, the GridSearchCV class is used for parameter optimization and model selection. The cv parameter specifies the number of folds for cross-validation; here, 5-fold cross-validation is chosen. GridSearchCV automatically traverses all parameter combinations and evaluates model performance using cross-validation.

After the fit() method has been called, the best_params_ and best_score_ attributes give the optimal parameters and the corresponding model performance.

The model with the optimal parameters can then be used for training and prediction. The best_estimator_ attribute returns the classifier object with the best parameters. Use the object's fit() method to train the model and its predict() method to make predictions. With the default refit=True setting, best_estimator_ has already been refit on the full data, so the extra fit() call is optional.
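Because grid search tries every combination, its cost grows quickly with the size of the grid. The following standard-library sketch enumerates the combinations for the param_grid used above and counts the model fits required with 5-fold cross-validation; the variable names are chosen for this example only.

```python
from itertools import product

param_grid = {
    'n_estimators': [100, 200, 300],
    'max_depth': [None, 5, 10],
    'min_samples_split': [2, 5, 10],
}
cv_folds = 5

# Every combination of one value per parameter.
combinations = [dict(zip(param_grid, values))
                for values in product(*param_grid.values())]

n_fits = len(combinations) * cv_folds
print(len(combinations), "combinations,", n_fits, "cross-validation fits")
# 27 combinations, 135 cross-validation fits
print(combinations[0])
# {'n_estimators': 100, 'max_depth': None, 'min_samples_split': 2}
```

Adding one more parameter with three candidate values would triple the work to 405 fits, so it is usually worth starting with a coarse grid and refining around the best region.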

Character Recognition Implementation and Performance Evaluation

Character recognition is a common machine learning task. Model performance can be assessed on a held-out test set or, more reliably, with cross-validation, and it can be improved by experimenting with different feature extraction methods and by tuning the classifier's hyperparameters.

    from sklearn.model_selection import train_test_split
    from sklearn.metrics import accuracy_score
    from sklearn.svm import SVC

    # Assume X (features) and y (labels) are already defined

    # Divide the dataset into a training set and a test set
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # Create the classifier
    classifier = SVC()

    # Train the model
    classifier.fit(X_train, y_train)

    # Predict on the test set
    predictions = classifier.predict(X_test)

    # Compute the accuracy
    accuracy = accuracy_score(y_test, predictions)
    print("Accuracy: ", accuracy)

In the code, the dataset is first divided into a training set and a test set. The test_size parameter controls the proportion of the test set; here it is set to 0.2, meaning that 20% of the data is used as the test set.

A classifier object is then created with SVC(). A Support Vector Machine is chosen as the classifier here, but other classifiers (e.g. decision trees, random forests) can be used instead.

Next, the model is trained on the training set by calling the fit() method. The trained model is used to predict the test set, and the accuracy of the classifier on the test set is calculated using the accuracy_score() function. Printing the accuracy gives a first measure of the classifier's performance.
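A single train/test split gives one accuracy figure that depends on how the data happened to be divided. Cross-validation averages over several splits. The sketch below uses scikit-learn's cross_val_score with 5 folds; since no license plate character dataset is bundled with this article, it assumes scikit-learn's built-in handwritten digits dataset as a stand-in for segmented character images.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import cross_val_score
from sklearn.svm import SVC

# Stand-in data: 8x8 handwritten digit images, flattened to 64 features each.
X, y = load_digits(return_X_y=True)

# 5-fold cross-validation: train on 4/5 of the data, test on the rest, 5 times.
scores = cross_val_score(SVC(), X, y, cv=5, scoring="accuracy")

print("Fold accuracies:", scores.round(3))
print("Mean accuracy: %.3f (+/- %.3f)" % (scores.mean(), scores.std()))
```

The spread between folds indicates how much a single split's accuracy can vary. For a real plate recognizer, X and y would be replaced by features and labels from segmented plate characters.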