
Preface:

- System: Ubuntu 18.04

- Graphics card: A100-80G (just dabbling, hehehe~)

(This post mainly records how to quickly run instruction fine-tuning on a large model.)

1. Chosen project: lit-llama

- Address: https://github.com/Lightning-AI/lit-llama

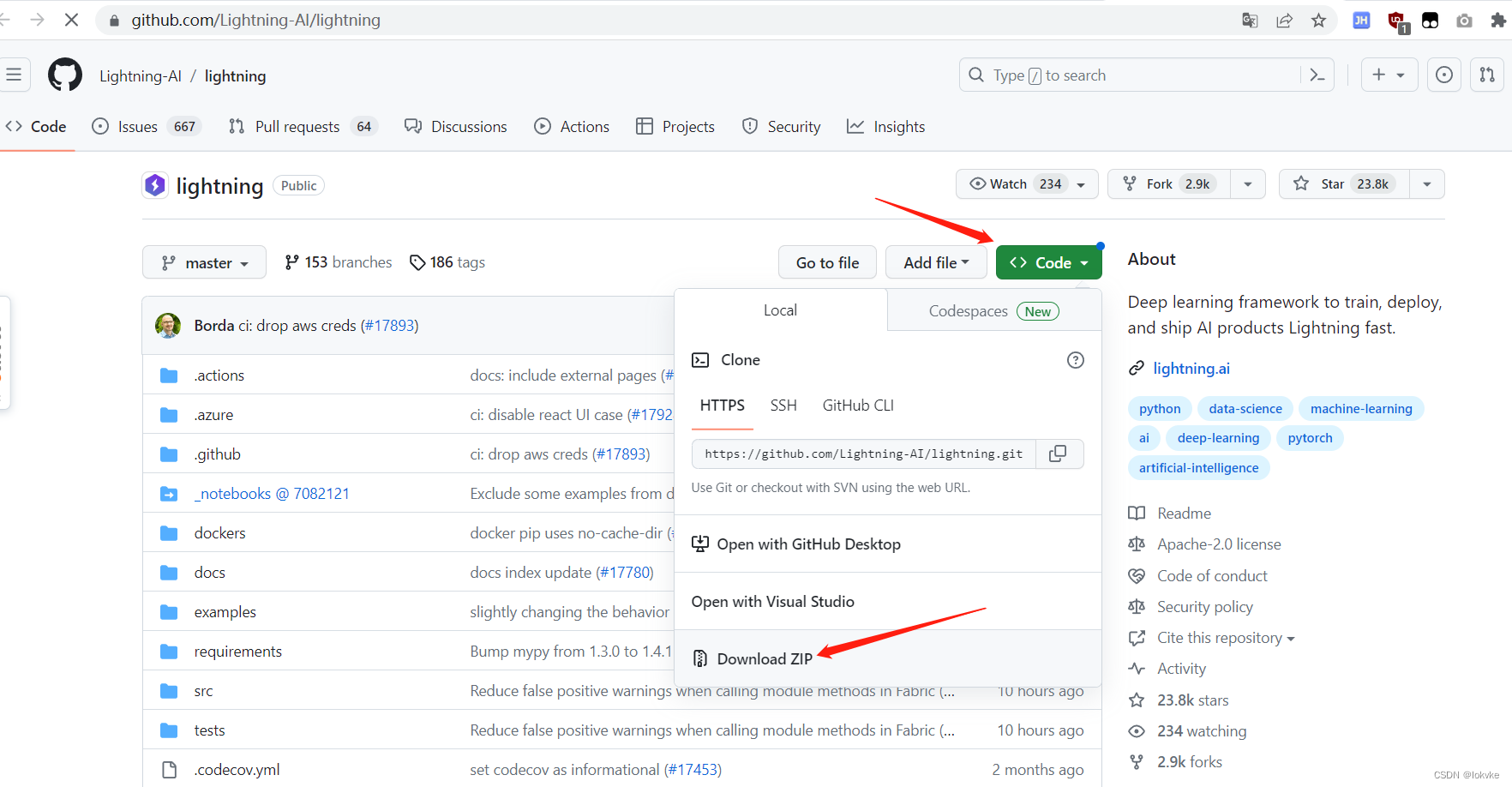

2. Downloading the project

git clone https://github.com/Lightning-AI/lit-llama.git

3. Setting up the environment

- Switch to the project directory

cd ./lit-llama

- Install the dependencies using pip

pip install -r requirements.txt

(Of course, network issues may prevent lightning from being installed here.)

In that case, it can be installed using the following method:

- Download the lightning project

- Unzip it into the project directory and install it using the following command

python setup.py install

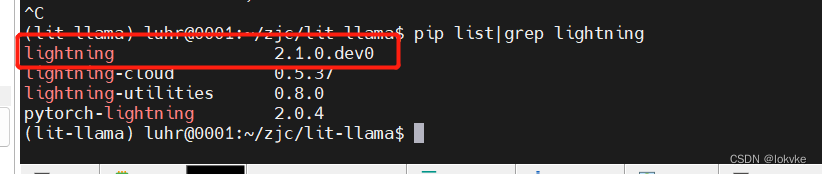

Check whether lightning was installed successfully:

pip list | grep lightning

(Note that the lightning version here is 2.1.0.)

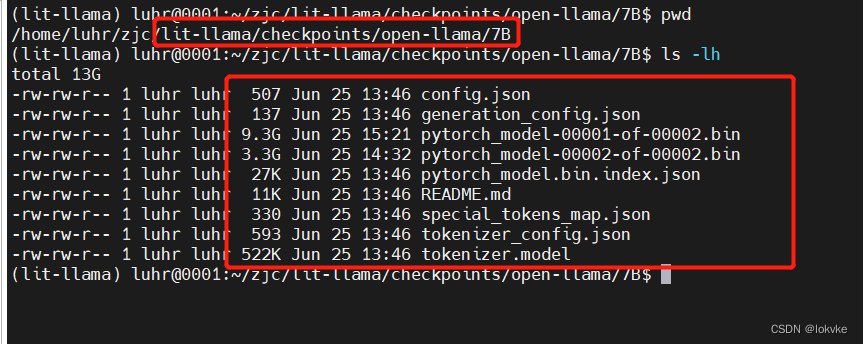
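The same check can also be done from Python, which is handy if grep is not available. This is only a small sketch using the standard library; the helper name installed_version is made up for illustration, and it needs Python 3.8 or newer.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


# Prints the version string (2.1.0 in this walkthrough) or "not installed".
version = installed_version("lightning")
print("lightning:", version if version is not None else "not installed")
```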

4. Download the LLAMA-7B model

Here we are going to do instruction fine-tuning based on LLAMA-7B (the command below fetches the open_llama_7b weights), so we first have to download the model weights and then convert them.

- Switch to the lit-llama directory and use the following command to download the weights:

python scripts/download.py --repo_id openlm-research/open_llama_7b --local_dir checkpoints/open-llama/7B

(The files are fairly large, so the download takes a while.)

- After downloading, you will get the following files:

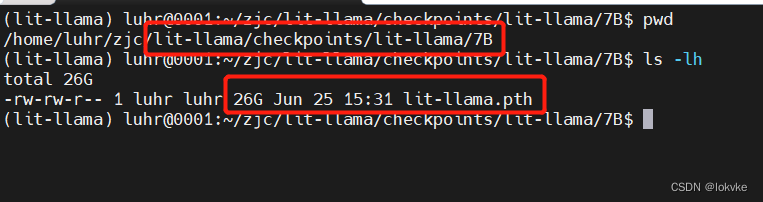

5. Converting the model

- Still in the lit-llama directory, use the following command to convert the weights:

python scripts/convert_hf_checkpoint.py --checkpoint_dir checkpoints/open-llama/7B --model_size 7B

- After the conversion, you will get a lit-llama.pth file of about 26G in lit-llama/checkpoints/lit-llama/7B, and a tokenizer.model file in the parent directory (lit-llama/checkpoints/lit-llama).

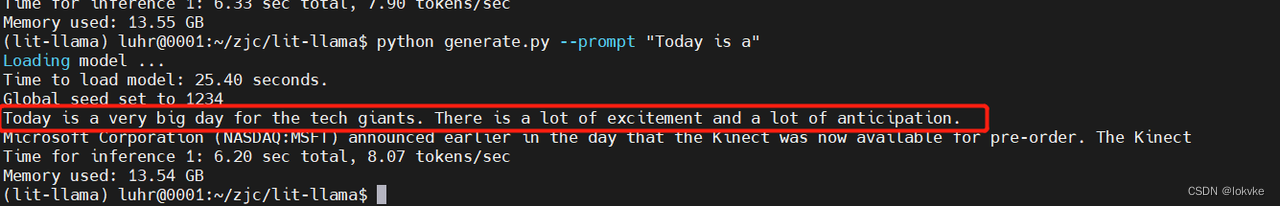
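Before moving on, it can save time to confirm that the conversion really produced the expected files. A rough sketch, assuming the layout described above (lit-llama.pth under the 7B folder and a tokenizer.model file one level up); the function name missing_checkpoint_files is hypothetical and not part of lit-llama.

```python
from pathlib import Path
from typing import List


def missing_checkpoint_files(root: Path, model_size: str = "7B") -> List[Path]:
    """Return the expected checkpoint files that are absent under `root`."""
    expected = [
        root / model_size / "lit-llama.pth",  # converted model weights
        root / "tokenizer.model",             # tokenizer file (assumed name)
    ]
    return [path for path in expected if not path.is_file()]


# Run from the lit-llama directory; an empty list means everything is in place.
missing = missing_checkpoint_files(Path("checkpoints/lit-llama"))
print("missing files:", [str(path) for path in missing])
```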

6. Preliminary testing

At the command line, run the following command:

python generate.py --prompt "Today is a"

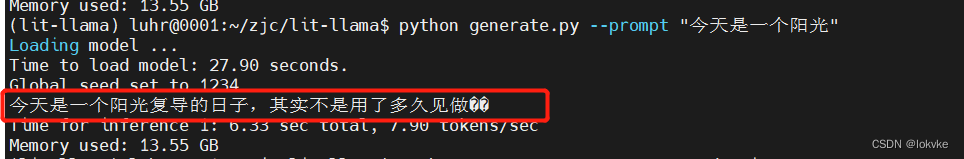

I also tested it with a Chinese prompt and found it didn’t work well (it started talking nonsense~)

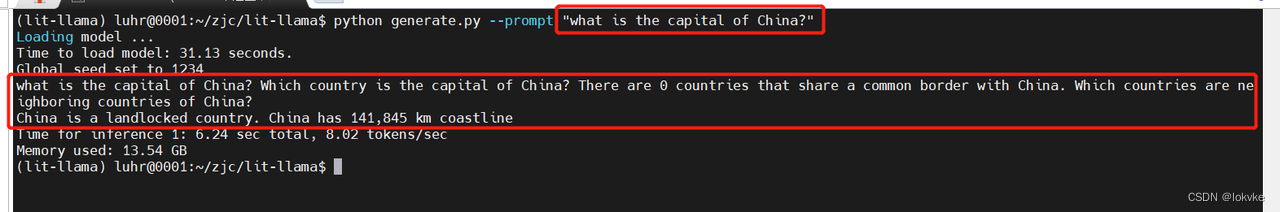

7. Why is instruction fine-tuning necessary?

- The original pre-trained LLAMA model is a large language model (obviously~) that predicts the next word from the words before it. If you ask it a question, it won’t necessarily answer it; it may simply continue with text that resembles your question, e.g.

- Given the prompt “What is the capital of China?”, its response is shown below:

8. Starting instruction fine-tuning

8.1 Data preparation

The 52k instruction dataset from alpaca is used here for fine-tuning. Prepare it with the following command:

python scripts/prepare_alpaca.py

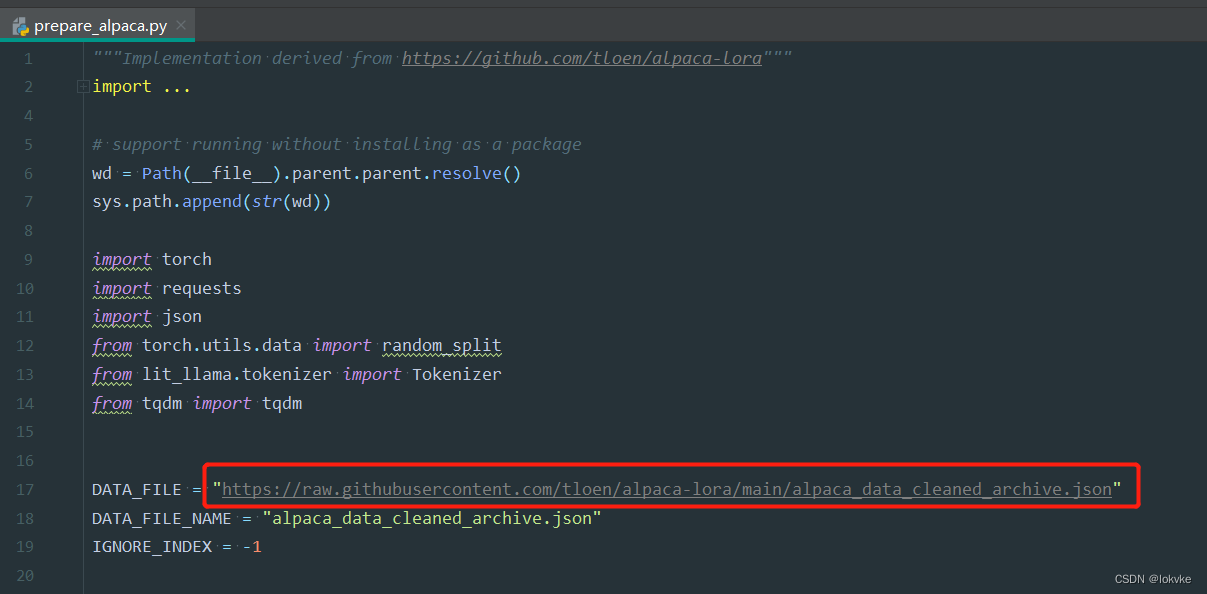

If the download doesn’t work, we’ll just open the scripts/prepare_alpaca.py file as follows:

-

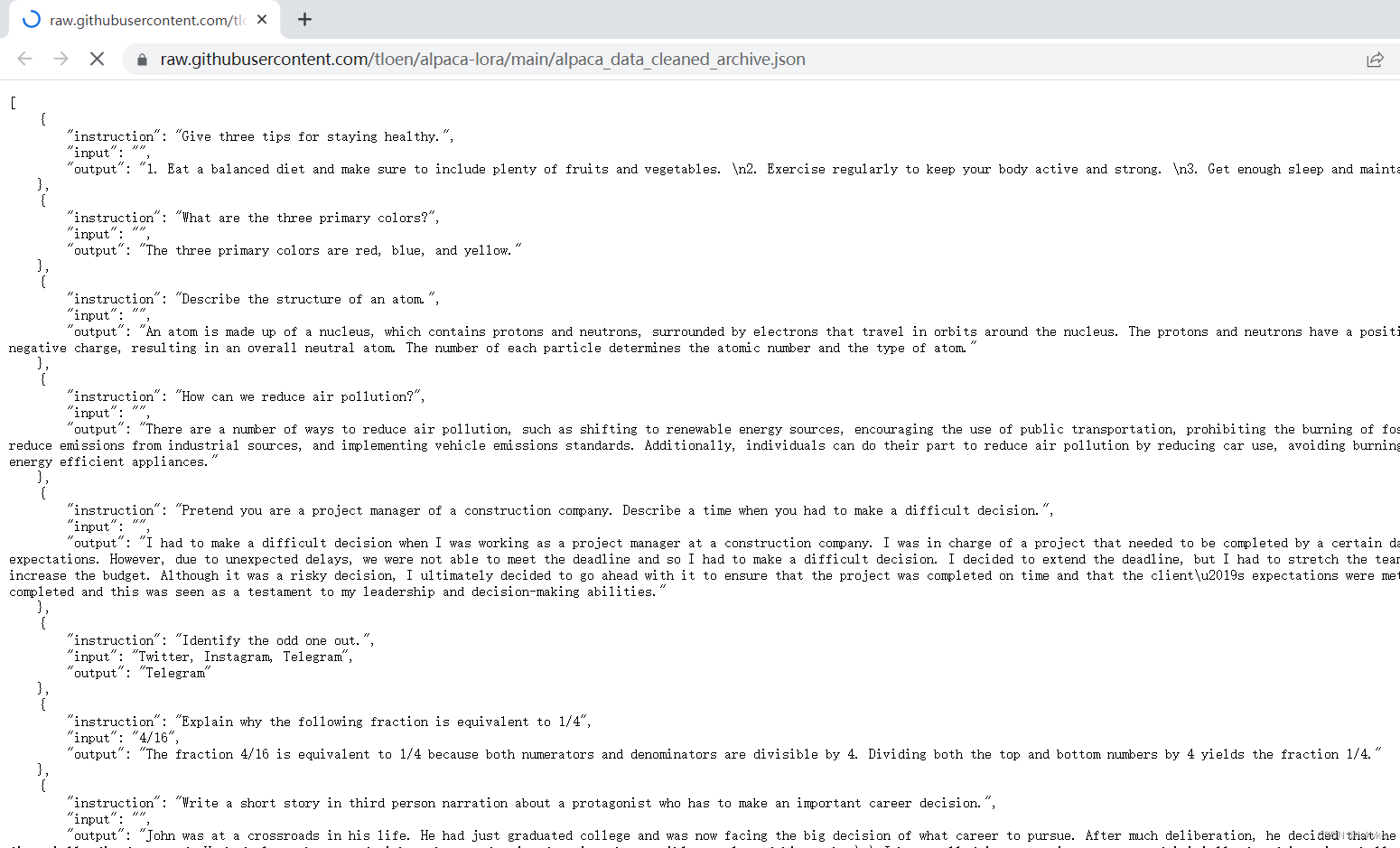

Open the link https://raw.githubusercontent.com/tloen/alpaca-lora/main/alpaca_data_cleaned_archive.json directly, then select the page in its entirety to copy it, then save it to a newly created file.

-

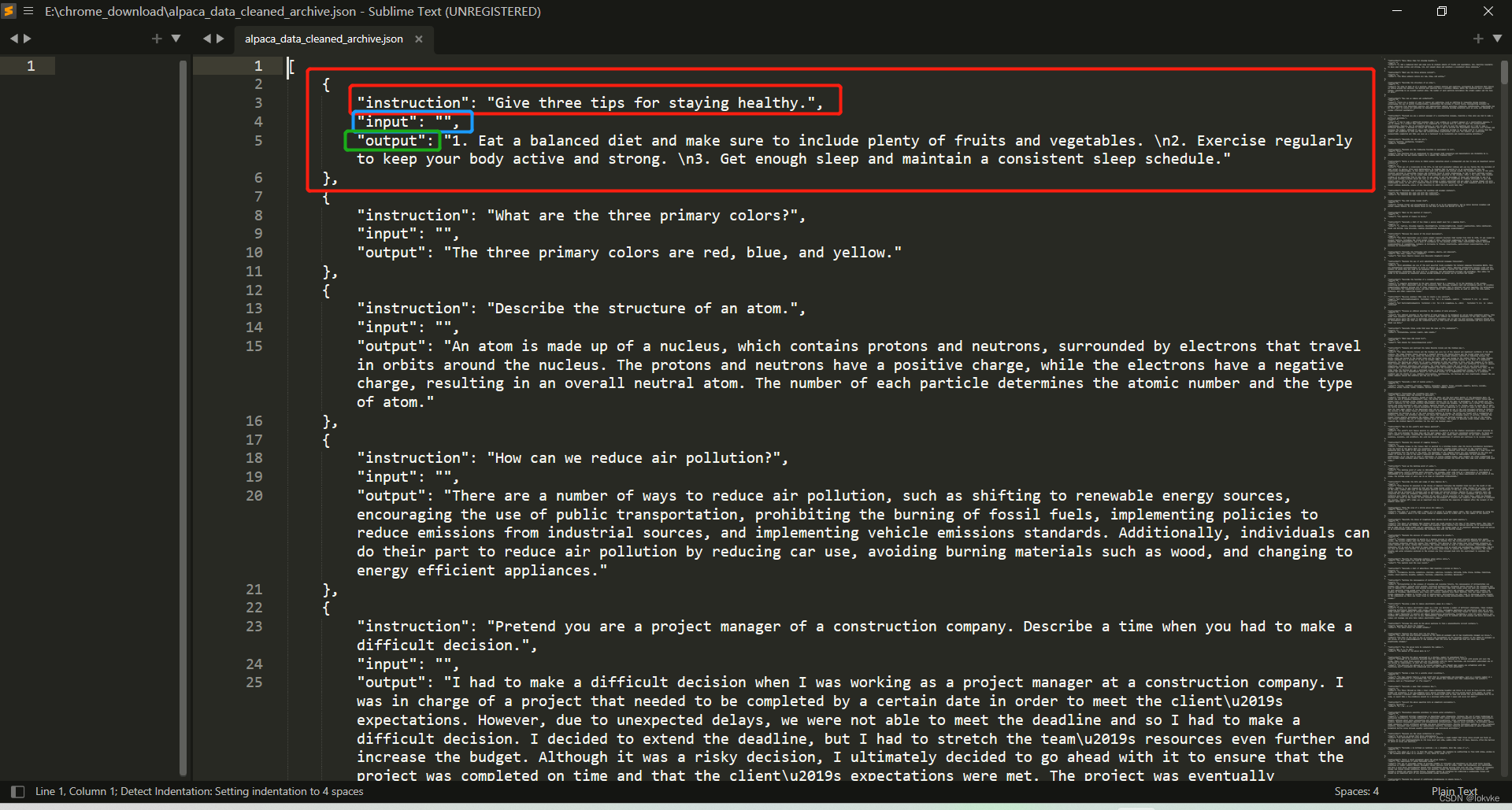
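If copying the page by hand feels error-prone, the same file can be fetched from Python instead. A minimal sketch using only the standard library; the helper name fetch_if_missing is made up, and the destination path in the usage note is my assumption, so check scripts/prepare_alpaca.py for where it actually looks for the file.

```python
import shutil
import urllib.request
from pathlib import Path

DATA_URL = (
    "https://raw.githubusercontent.com/tloen/alpaca-lora/main/"
    "alpaca_data_cleaned_archive.json"
)


def fetch_if_missing(url: str, destination: Path) -> Path:
    """Download `url` to `destination` unless the file already exists."""
    if destination.exists():
        return destination  # keep an existing copy, do not overwrite it
    destination.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as response, open(destination, "wb") as out:
        shutil.copyfileobj(response, out)  # stream to disk in chunks
    return destination
```

For example, `fetch_if_missing(DATA_URL, Path("data/alpaca/alpaca_data_cleaned_archive.json"))`, run from the lit-llama directory, would place the file under data/alpaca (again, that path is an assumption).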

get alpaca_data_cleaned_archive.json (see the name of the clean should be cleaned), each instruction contains “instruction”, “input”, “output” three keywords, this time not to specifically expand the explanation. As shown below:

-

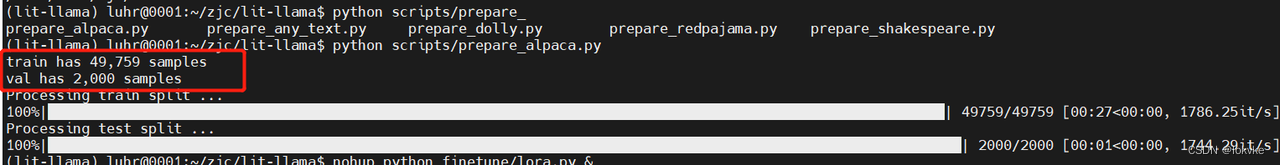
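To give a feel for how the three keys are used, here is a sketch of the widely used Alpaca-style prompt template, which turns one record into the text the model sees; the “output” value is the target the model learns to produce after it. The exact wording inside lit-llama's scripts may differ, so treat this as an illustration rather than the project's code.

```python
def generate_prompt(example: dict) -> str:
    """Build an Alpaca-style prompt from a record with 'instruction' and 'input'."""
    if example.get("input"):
        # Records with extra context get an additional "### Input:" section.
        return (
            "Below is an instruction that describes a task, paired with an input "
            "that provides further context. Write a response that appropriately "
            "completes the request.\n\n"
            f"### Instruction:\n{example['instruction']}\n\n"
            f"### Input:\n{example['input']}\n\n### Response:"
        )
    return (
        "Below is an instruction that describes a task. Write a response that "
        "appropriately completes the request.\n\n"
        f"### Instruction:\n{example['instruction']}\n\n### Response:"
    )


record = {"instruction": "What is the capital of China?", "input": "", "output": "Beijing"}
print(generate_prompt(record))
```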

After running the command, data division will also be done. train-49759, val-2000 is shown below:

-

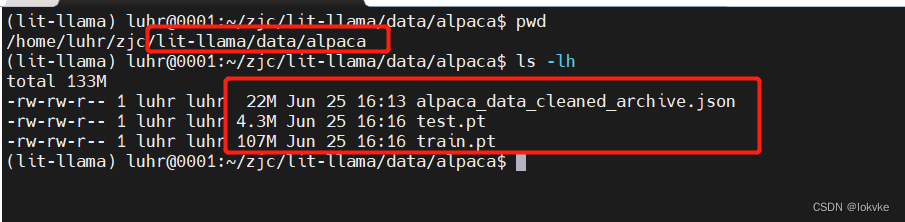
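The split itself is easy to picture: shuffle the records with a fixed seed, then cut off 2,000 for validation. The sketch below uses only the standard library and is not the script's own implementation; the seed value and the function name split_train_val are assumptions for illustration.

```python
import random
from typing import List, Sequence, Tuple


def split_train_val(
    records: Sequence, val_size: int = 2000, seed: int = 42
) -> Tuple[List, List]:
    """Shuffle `records` reproducibly and hold out `val_size` items for validation."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed gives a repeatable split
    return shuffled[val_size:], shuffled[:val_size]


# Integers stand in for the 51,759 instruction records (49,759 + 2,000).
train, val = split_train_val(range(51759))
print(len(train), len(val))  # 49759 2000
```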

Finally, you will get the following files under the path lit-llama/data/alpaca:

8.2 Starting Model Training

Use the following command:

python finetune/lora.py

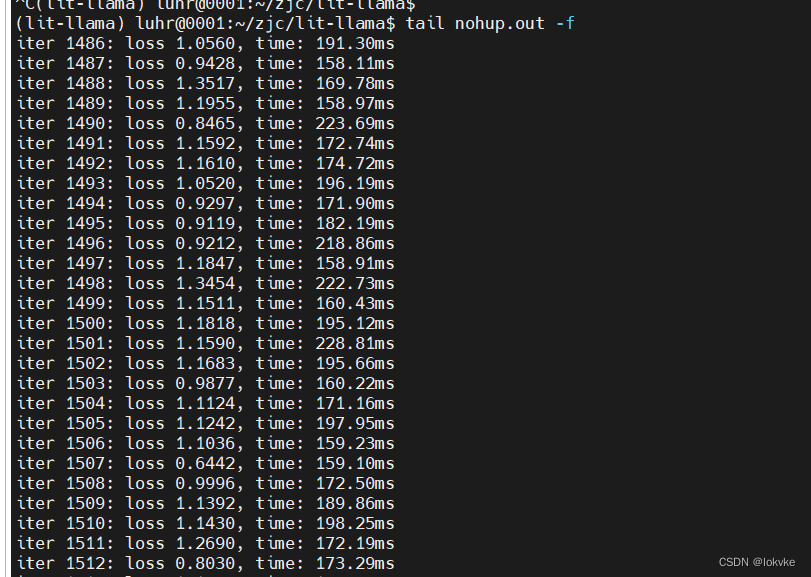

The training process is shown below.

The whole training process takes about 1.5 hours (16:51 ~ 18:22).

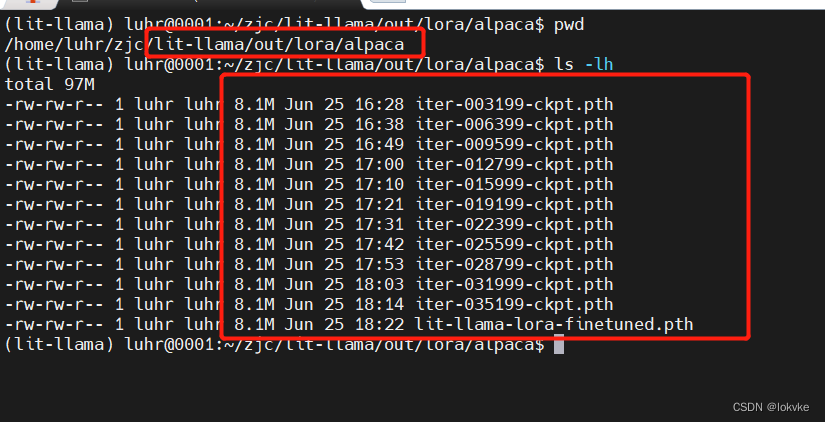

After training, a series of LoRA weight files is saved under out/lora/alpaca, as shown below:

8.3 Model Testing

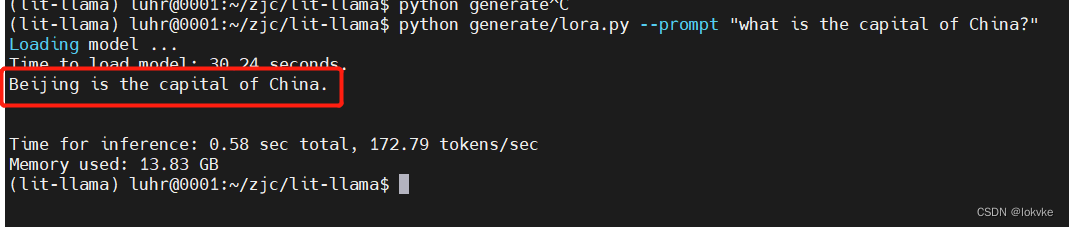

Use the following command to ask a question, with the prompt “what is the capital of China?”:

python generate/lora.py --prompt "what is the capital of China?"

The results obtained are shown below.

As you can see, after instruction fine-tuning, the model can answer our question properly.

(P.S. You can refer to the project’s README for other details; it’s written very clearly~)

The end.