Article Catalog

In order to illustrate the testing and dataset adaptation of these SLAM frameworks above, the M2DGR dataset is taken as an example for the respective configuration and running, which is convenient for me to adapt my own dataset in the future.The SLAM frameworks that are run include: ORB-SLAM2, ORB-SLAM3, VINS-Mono, A-LOAM, LeGO-LOAM, LIO-SAM, LVI -SAM, FAST-LIO2, FAST-LIVO, Faster-LIO, hdl_graph_slam. The experiment is based on Ubuntu 20.04, in which the installation of ORB, VINS-Mono, and LOAM series refer to my previous blog, with detailed installation methods, and the other frameworks will be briefly introduced later to the installation methods

Because the learning is not deep enough, the main purpose is to record the parameter configuration method, some parameter configuration may be wrong and lead to data running problems.If you find any errors or problems, please feel free to discuss themI’ll fix it.

I. The M2DGR dataset

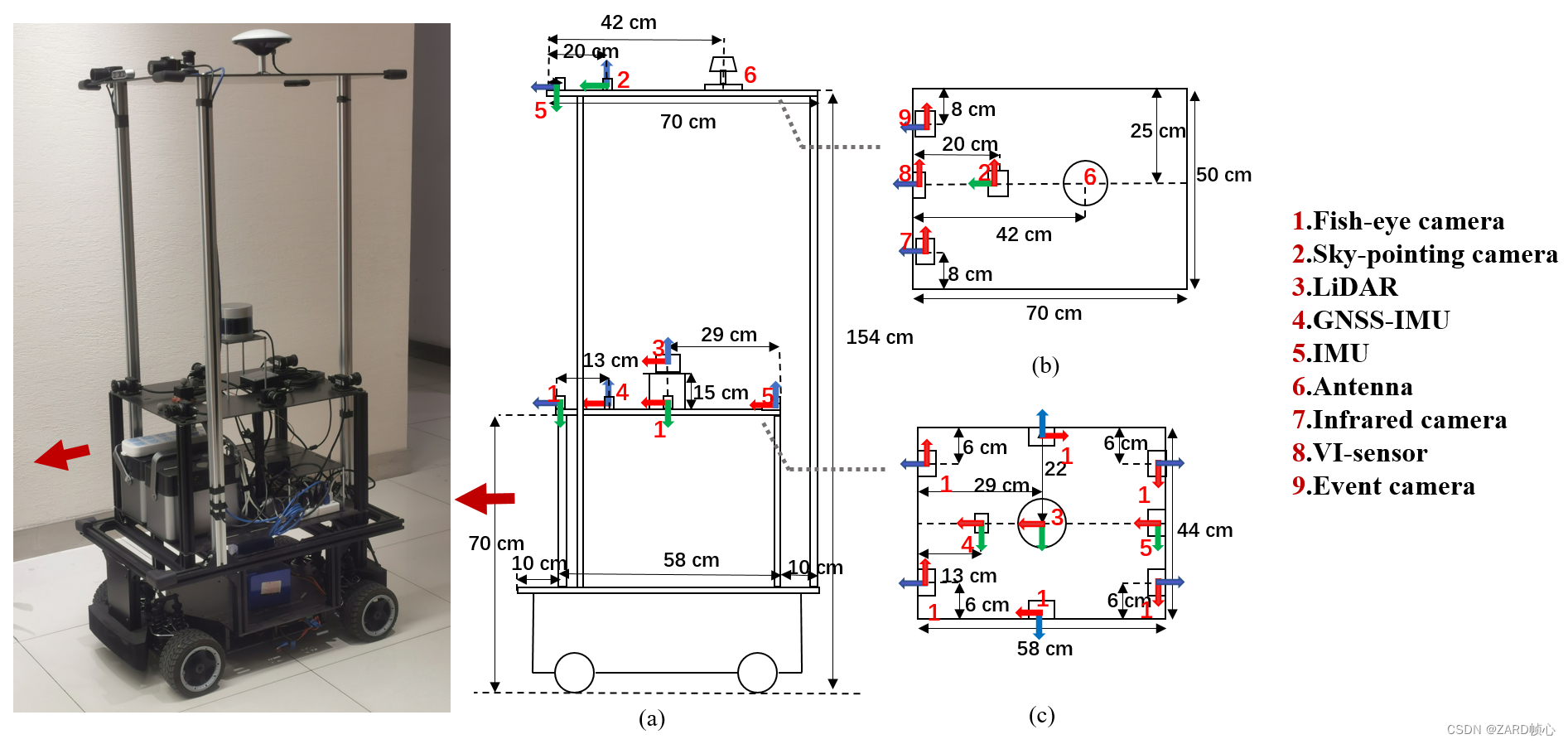

M2DGRis a novel large-scale dataset collected by a ground-based robot with a complete sensor suite including six fisheye and one sky-pointing RGB cameras, infrared cameras, event cameras, visual inertial sensors (VI-sensors), inertial measurement units (IMUs), LiDARs, consumer-grade Global Navigation Satellite System (GNSS) receivers, and a real-time kinetic (RTK) signaling GNSS-IMU navigation system. All of these sensors are well calibrated and synchronized and their data is recorded simultaneously. The trajectory truth values were obtained by motion capture equipment, laser 3D tracker and RTK. The dataset consists of 36 sequences (about 1 TB) that were captured in different scenarios including indoor and outdoor environments.

The sensor parameters are as follows:

- LIDAR Velodyne VLP-32C, 360 Horizontal Field of View (FOV),-30 to +10 vertical FOV,10Hz,Max Range 200 m,Range Resolution 3 cm, Horizontal Angular Resolution 0.2°.

- RGB Camera FLIR Pointgrey CM3-U3-13Y3C-CS,fish-eye lens,1280*1024,190 HFOV,190 V-FOV, 15 Hz

- GNSS Ublox M8T, GPS/BeiDou, 1Hz

- Infrared Camera,PLUG 617,640*512,90.2 H-FOV,70.6 V-FOV,25Hz;

- V-I Sensor,Realsense d435i,RGB/Depth 640*480,69H-FOV,42.5V-FOV,15Hz;IMU 6-axix, 200Hz

- Event Camera Inivation DVXplorer, 640*480,15Hz;

- IMU,Handsfree A9,9-axis,150Hz;

- GNSS-IMU Xsens Mti 680G. GNSS-RTK,localization precision 2cm,100Hz;IMU 9-axis,100 Hz;

- Laser Scanner Leica MS60, localization 1mm+1.5ppm

- Motion-capture System Vicon Vero 2.2, localization accuracy 1mm, 50 Hz;

Later we have to configure the parameters, refer to the calibration filecalibration_results.txt, each data topic of the rosbag sequence is as follows:

- LIDAR:

/velodyne_points - RGB Camera:

/camera/left/image_raw/compressed,

/camera/right/image_raw/compressed,

/camera/third/image_raw/compressed,

/camera/fourth/image_raw/compressed,

/camera/fifth/image_raw/compressed,

/camera/sixth/image_raw/compressed,

/camera/head/image_raw/compressed - GNSS Ublox M8T:

/ublox/aidalm,

/ublox/aideph,

/ublox/fix,

/ublox/fix_velocity,

/ublox/monhw,

/ublox/navclock,

/ublox/navpvt,

/ublox/navsat,

/ublox/navstatus,

/ublox/rxmraw - Infrared Camera:

/thermal_image_raw - V-I Sensor:

/camera/color/image_raw/compressed,/camera/imu - Event Camera:

/dvs/events,/dvs_rendering/compressed - IMU:

/handsfree/imu

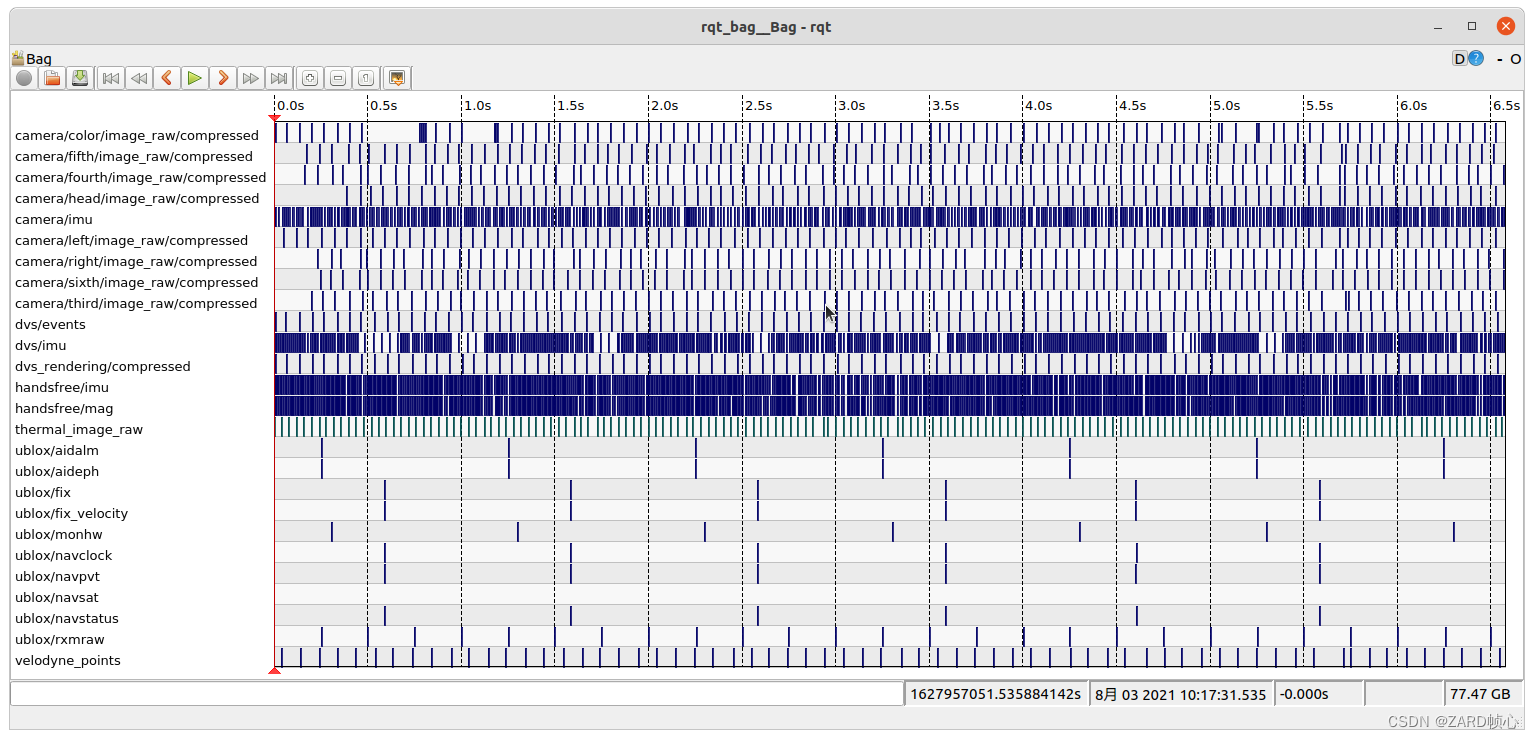

utilizationstreet_02.bagTo test the individual codes, we first pass therqt_bagcommand to visualize the bag, you can see that the time synchronization of this data is not very good

roscore

rqt_bag street_02.bag

(ii) ORB-SLAM2

2.1 Configuration parameters

inExamples/Monocular/Add a parameter file to the folderM2DGR.yamlmodeled afterExamples/Monocular/TUM1.yamlFile modification parameters, mainly modify the camera internal parameters, the rest of the parameters adjusted as needed

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 617.971050917033

Camera.fy: 616.445131524790

Camera.cx: 327.710279392468

Camera.cy: 253.976983707814

Camera.k1: -0.28340811

Camera.k2: 0.07395907

Camera.p1: 0.00019359

Camera.p2: 1.76187114e-05

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 15.02.2 Monocular

According to the image message above, the monocularros_mono.ccFile subscriptions.

//ros::Subscriber sub = nodeHandler.subscribe("/camera/image_raw", 1, &ImageGrabber::GrabImage,&igb);

// Replace with:

ros::Subscriber sub = nodeHandler.subscribe("/camera/color/image_raw", 1, &ImageGrabber::GrabImage,&igb);Recompile ros node

./build_ros.sh

Open four terminals and run each

roscore

# Open a terminal in the ORB_SLAM2 directory and run ORB-SLAM2

rosrun ORB_SLAM2 Mono Vocabulary/ORBvoc.txt Examples/Monocular/M2DGR.yaml

# **Note**: the image in the bag is in compressed format, use the image_transport package to decompress the image

rosrun image_transport republish compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw

# Open a terminal in the folder where bag is located and start playing the dataset:

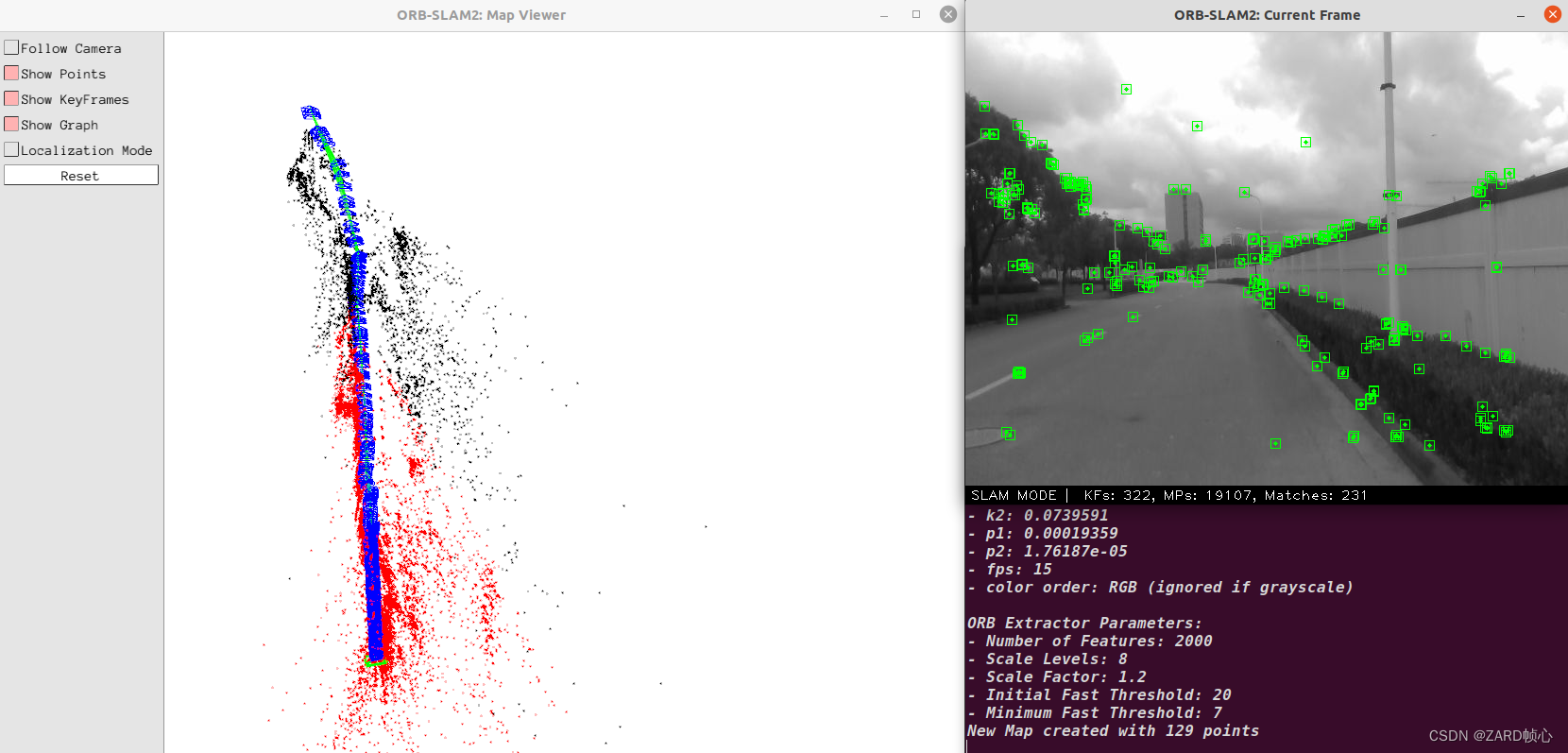

rosbag play street_02.bagThe problem with pure vision is that it is slow to initialize and particularly susceptible to tracking loss (too fast motion, exposure, and interference from dynamic objects), in addition to severe single-purpose scale drift, which fails completely

The scale becomes smaller and squeezes a lump!

Three, ORB-SLAM3

3.1 Configuration parameters

inExamples/Monocular-Inertial/Add a parameter file to the folderUrbanNav.yamlmodeled afterExamples/Monocular-Inertial/EuRoC.yamlDocumentation, based onzed2_intrinsics.yaml、xsens_imu_param.yamlandextrinsic.yamlFile modification parameters, mainly modify the camera and IMU internal parameters and joint calibration, the rest of the parameters are adjusted as required

Camera.type: "PinHole"

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 617.971050917033

Camera.fy: 616.445131524790

Camera.cx: 327.710279392468

Camera.cy: 253.976983707814

Camera.k1: 0.148000794688248

Camera.k2: -0.217835187249065

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 15.0

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Transformation from camera to body-frame (imu)

Tbc: !!opencv-matrix

rows: 4

cols: 4

dt: f

data: [0.0, 0.0, 1.0, 0.57711,

-1.0, 0.0, 0.0, -0.00012,

0.0, -1.0, 0.0, 0.83333,

0.0, 0.0, 0.0, 1.0]

# IMU noise

IMU.NoiseGyro: 2.1309311394972831e-02 #1.6968e-04

IMU.NoiseAcc: 1.2820343288774358e-01 #2.0e-3

IMU.GyroWalk: 3.6603917782528627e-04

IMU.AccWalk: 1.3677912958097768e-02

IMU.Frequency: 1503.2 Operational monocular + IMU

Modify ros_mono_inertial.cc’s IMU with image message subscription to:

// ros::Subscriber sub_imu = n.subscribe("/imu0", 1000, &ImuGrabber::GrabImu, &imugb);

// ros::Subscriber sub_img0 = n.subscribe("/cam0/image_raw", 100, &ImageGrabber::GrabImage,&igb);

ros::Subscriber sub_imu = n.subscribe("/handsfree/imu", 1000, &ImuGrabber::GrabImu, &imugb);

ros::Subscriber sub_img0 = n.subscribe("/camera/color/image_raw", 100, &ImageGrabber::GrabImage,&igb);Compile, then run

./build_ros.sh

# Configure the environment:

source Examples/ROS

Open a terminal in the #ORB_SLAM3 directory and run ORB-SLAM3

rosrun ORB_SLAM3 Mono_Inertial Vocabulary/ORBvoc.txt Examples/Monocular-Inertial/M2DGR.yaml

# **Note**: the image in the bag is in compressed format, use the image_transport package to decompress the image

rosrun image_transport republish compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw

# Open a terminal in the folder where bag is located and start playing the dataset:

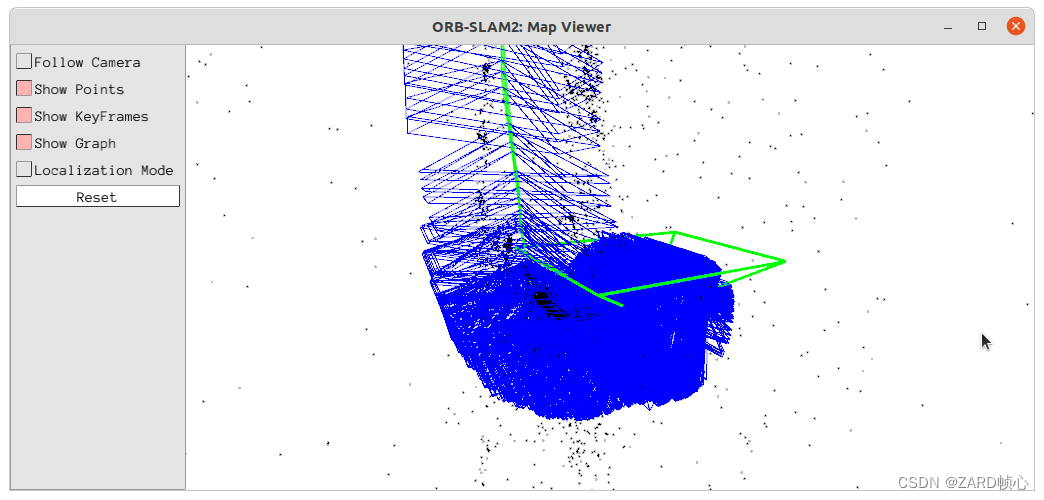

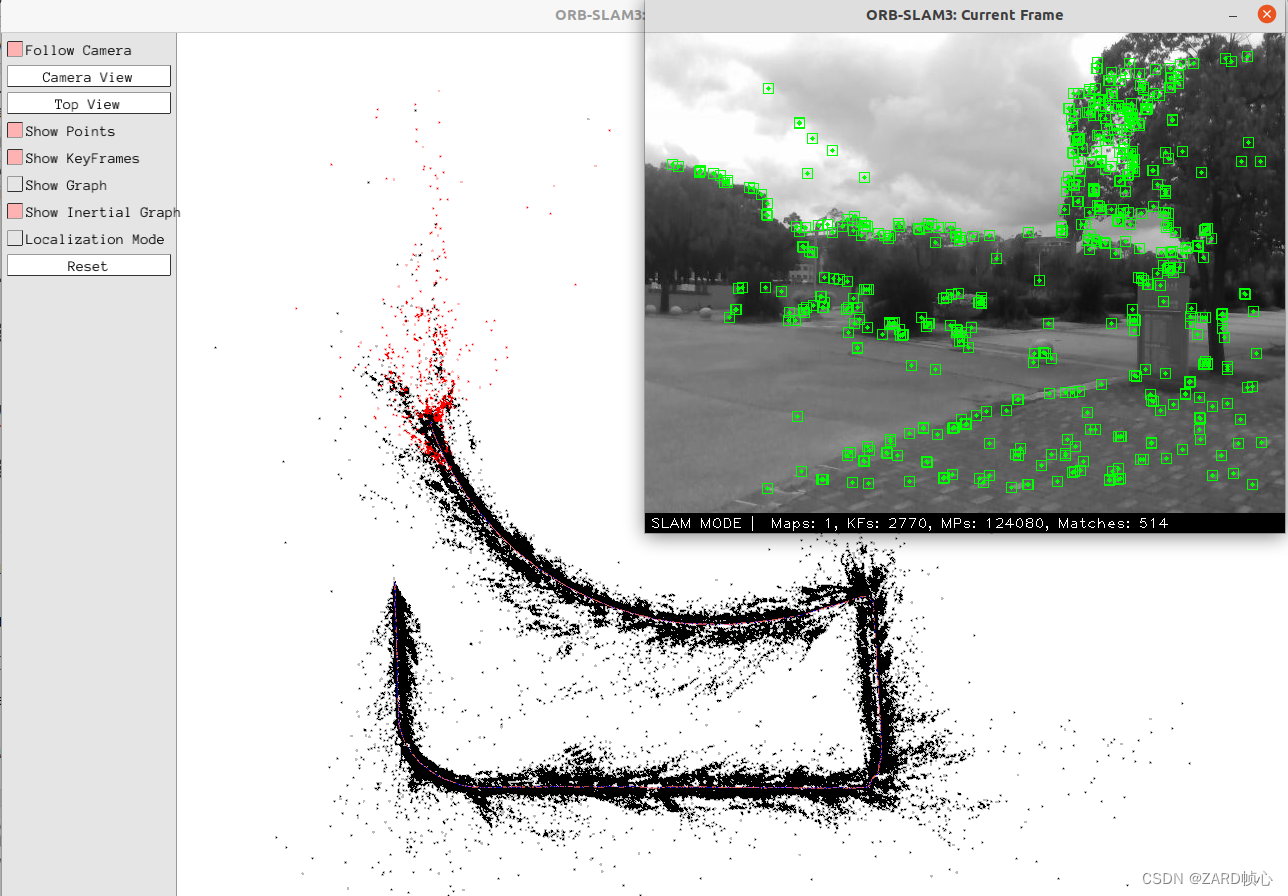

rosbag play street_02.bagYou can see that the drift is very severe and there are scale changes during the run. No cause was analyzed (guessing it could be the/camera/color/image_raw(timestamp jumps too badly), and will be analyzed in more detail later. Anyway, the run failed

4, VINS-Mono

4.1 Configuration parameters

inconfigAdd a parameter file to the folderM2DGR.yamlmodeled afterconfig/euroc/euroc_config.yamlDocumentation, based onmy_params_camera.yamlFile modification parameters, mainly to modify the image and IMU data topics, results output path, camera and IMU internal parameters and joint calibration results, and the rest of the parameters are adjusted as needed.

#common parameters

imu_topic: "/handsfree/imu"

image_topic: "/camera/color/image_raw"

output_path: "/home/zard/Downloads/VINS-Mono"

# camera model

model_type: PINHOLE

camera_name: camera

image_width: 640

image_height: 480

distortion_parameters:

k1: 0.148000794688248

k2: -0.217835187249065

p1: 0.0

p2: 0.0

projection_parameters:

fx: 617.971050917033

fy: 616.445131524790

cx: 327.710279392468

cy: 253.976983707814

#imu parameters The more accurate parameters you provide, the worse performance

acc_n: 1.2820343288774358e-01 # accelerometer measurement noise standard deviation. #0.2

gyr_n: 2.1309311394972831e-02 # gyroscope measurement noise standard deviation. #0.05

acc_w: 1.3677912958097768e-02 # accelerometer bias random work noise standard deviation. #0.02

gyr_w: 3.6603917782528627e-04 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.805 # gravity magnitude

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it.

# 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess.

# 2 Don't know anything about extrinsic parameters. You don't need to give R,T. We will try to calibrate it. Do some rotation movement at beginning.

#Rotation from camera frame to imu frame, imu^R_cam

extrinsicRotation: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 0.0, 0.0, 1.0,

-1.0, 0.0, 0.0,

0.0, -1.0, 0.0]

#Translation from camera frame to imu frame, imu^T_cam

extrinsicTranslation: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [0.57711, -0.00012, 0.83333]imitatevins_estimator/launch/euroc.launchincreasevins_estimator/launch/M2DGR.launchStart the file, change the path to the parameter file, and be careful not to forget the command to decompress the image

<!-- <arg name="config_path" default = "$(find feature_tracker)/../config/euroc/euroc_config.yaml" /> -->

<arg name="config_path" default = "$(find feature_tracker)/../config/M2DGR.yaml" />

<! -- Image decompression -->

<node pkg="image_transport" type="republish" name="image_republish" args="compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw" output="screen" respawn="true"/>4.2 Operational monocular + IMU

Open three terminals, source and then type in

# Run the feature_tracker node and the estimator node, subscribe to the image and IMU data, and publish bitmap, 3D feature point, etc. to RVIZ for display.

source devel/setup.bash

roslaunch vins_estimator M2DGR.launch

# rviz visualization node

source devel/setup.bash

roslaunch vins_estimator vins_rviz.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

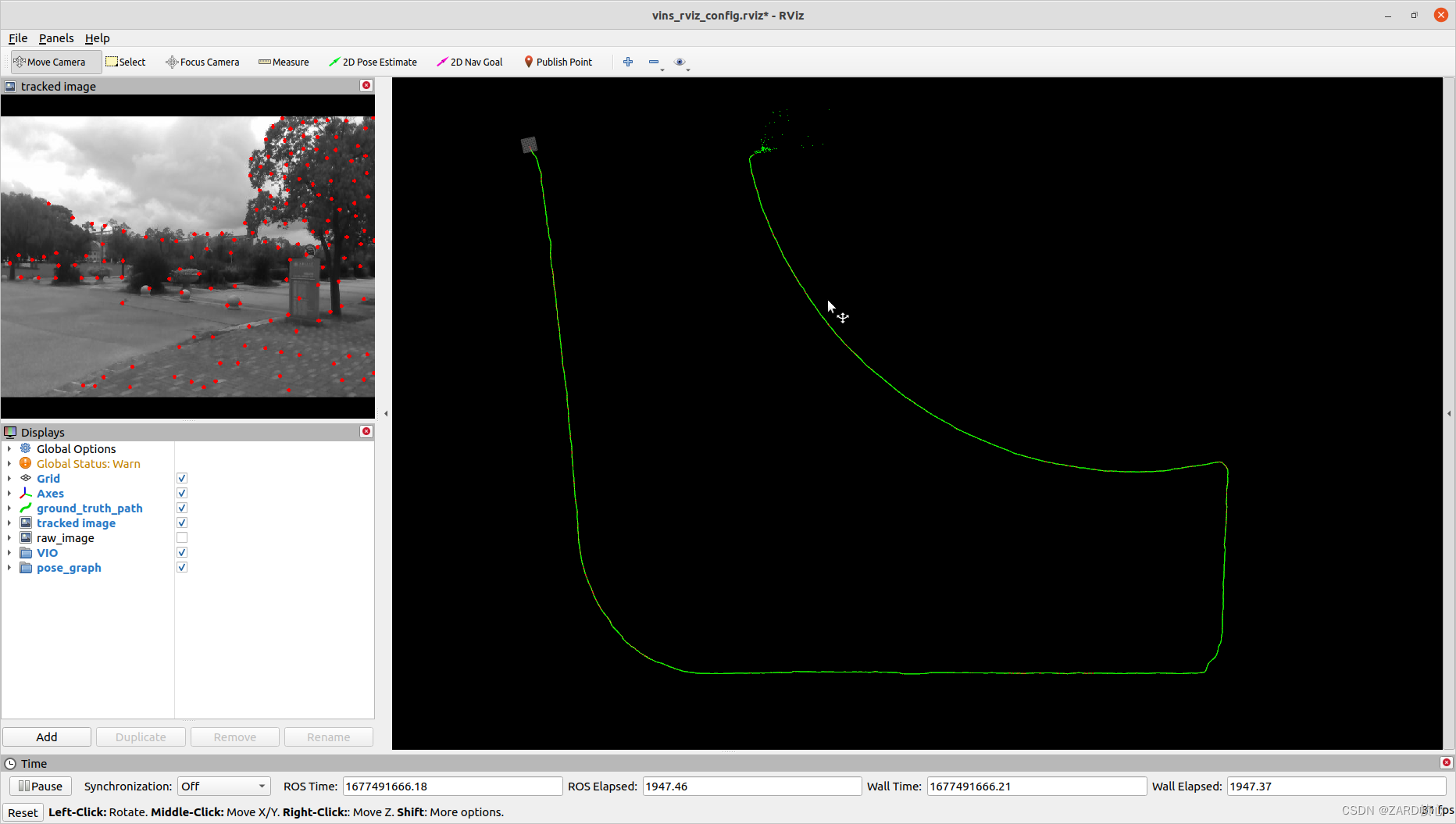

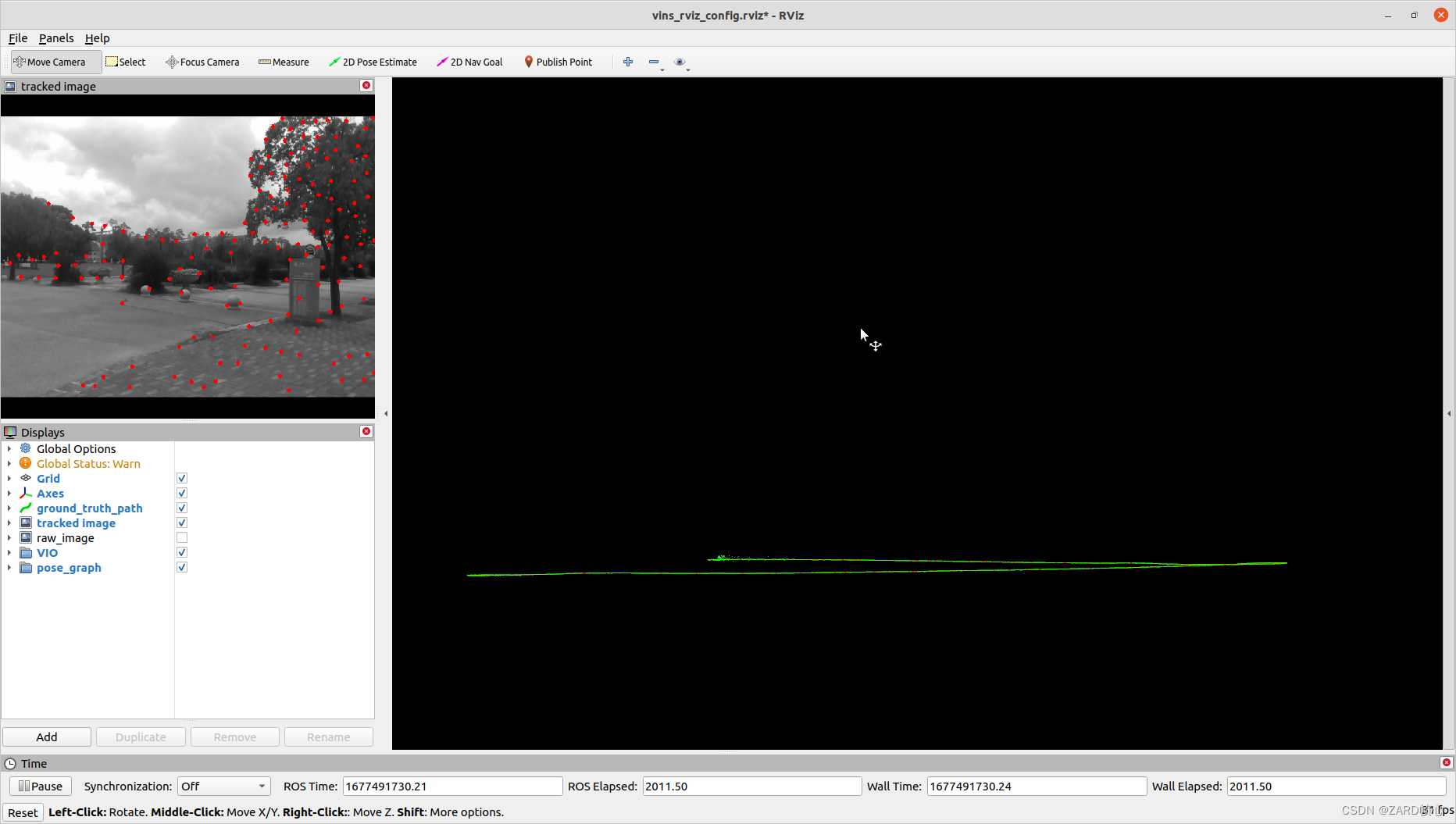

rosbag play street_02.bagThe red trace is the front-end odometer, the green trace is the result of local optimization and loopback, this data has no loopback and has a large drift (guessing it’s also an image timestamp issue)

V. DM-VIO

5.1 Installation

Installation of gtsam, OpenCV and pangolin is not repeated, and DM-VIO is compiled directly:

git clone https://github.com/lukasvst/dm-vio.git

cd dm-vio

mkdir build && cd build

cmake ..

make -j8After compiling, you can see the executable dmvio_dataset in the build/bin directory, next install the ros plugin. Go to a random directory, the recommended one is a subdirectory of the dm-vio you just installed:

git clone https://github.com/lukasvst/dm-vio-ros.gitIn order for this plugin to find the dm-vio.bashrc just compiled add an environment variable:

sudo gedit ~/.bashrc

# Add at the end.

export DMVIO_BUILD=/YOURPATH/dm-vio/buildinCMakeLists.txtAdd it after line 85, otherwise the compiler will report an error because it can’t find the generated msg format:

add_dependencies(${PROJECT_NAME}_node ${PROJECT_NAME}_generate_messages_cpp)Compile:

catkin_make

source devel/setup.bash5.2 Configuration runs

The first step is to add the M2DGR camera model to config:

echo -e "RadTan 617.971050917033 616.445131524790 327.710279392468 253.976983707814 0.148000794688248 -0.217835187249065 0.0 0.0\n640 480\nfull\n640 480\n" > M2DGR.txtmodeled oneuroc.yamlConfiguration Parameter FileM2DGR.yaml

accelerometer_noise_density: 1.2820343288774358e-01

gyroscope_noise_density: 2.1309311394972831e-02

accelerometer_random_walk: 1.3677912958097768e-02

gyroscope_random_walk: 3.6603917782528627e-04

integration_sigma: 0.316227(of a computer) run

roscore

source devel/setup.bash

rosrun dmvio_ros node calib=/PATH/M2DGR.txt settingsFile=/PATH/dm-vio/configs/M2DGR.yaml mode=1 nogui=0 preset=1 useimu=1 quiet=1 init_requestFullResetNormalizedErrorThreshold=0.8 init_pgba_skipFirstKFs=1

# **Note**: the image in the bag is in compressed format, use the image_transport package to decompress the image

rosrun image_transport republish compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw

# Open a terminal in the folder where bag is located and start playing the dataset:

rosbag play street_02.bag /camera/color/image_raw:=cam0/image_raw /handsfree/imu:=imu0But it doesn’t run, it doesn’t respond, I don’t know why, if anyone knows how to fix it please let me know in the comments section, thanks! Let’s run the EuRoC data here

echo -e "458.654 457.296 367.215 248.375 -0.28340811 0.07395907 0.00019359 1.76187114e-05\n752 480\ncrop\n640 480\n" > camera.txtrosrun dmvio_ros node calib=/PATH/camera.txt settingsFile=/PATH/dm-vio/configs/euroc.yaml mode=1 nogui=0 preset=1 useimu=1 quiet=1 init_requestFullResetNormalizedErrorThreshold=0.8 init_pgba_skipFirstKFs=1

rosbag play V1_01_easy.bag

VI. A-LOAM

A-LOAM is simple to run and requires no special configuration, LiDAR topics are/velodyne_pointsCan be run directly:

source devel/setup.bash

roslaunch aloam_velodyne aloam_velodyne_VLP_32.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

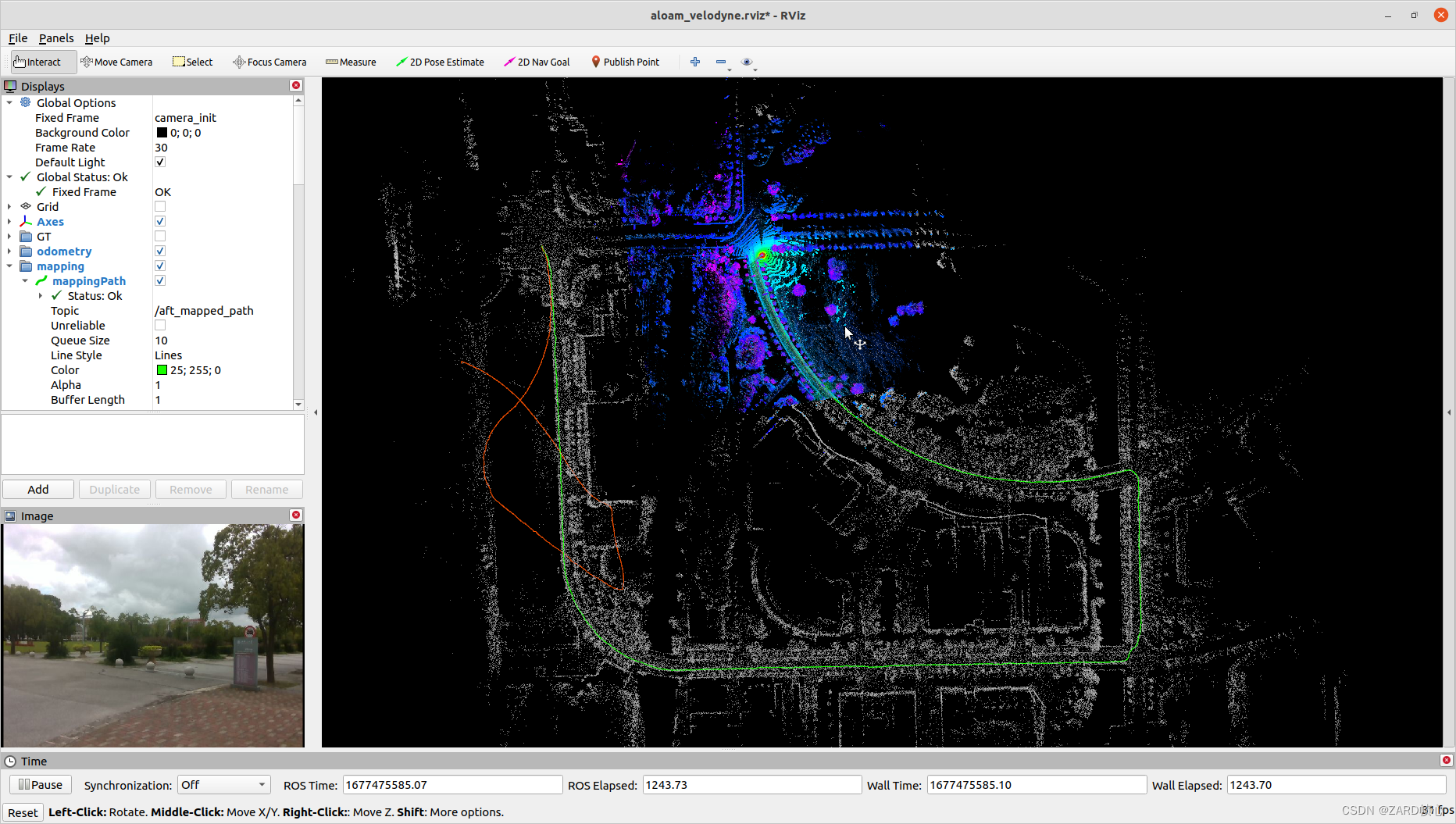

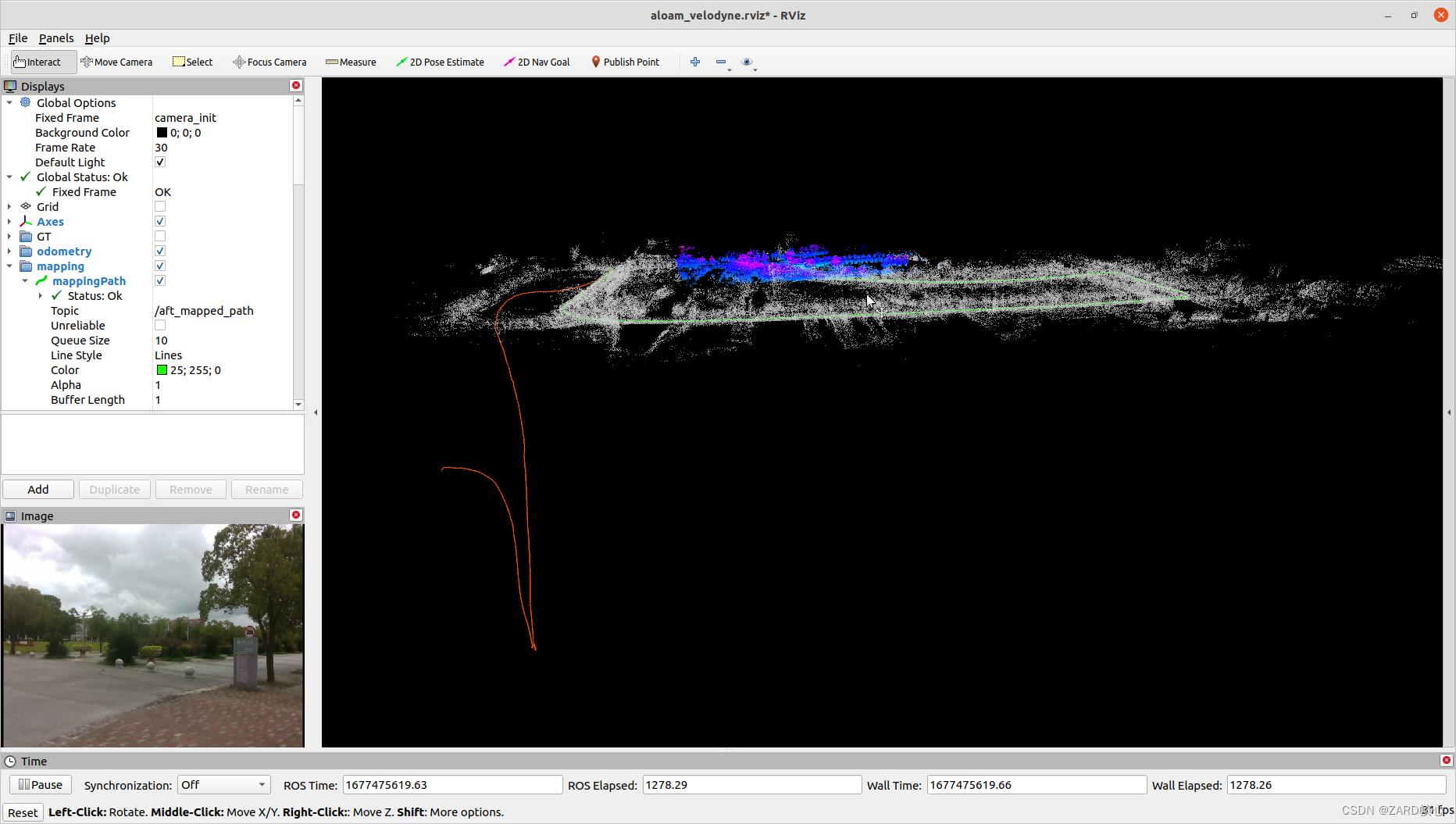

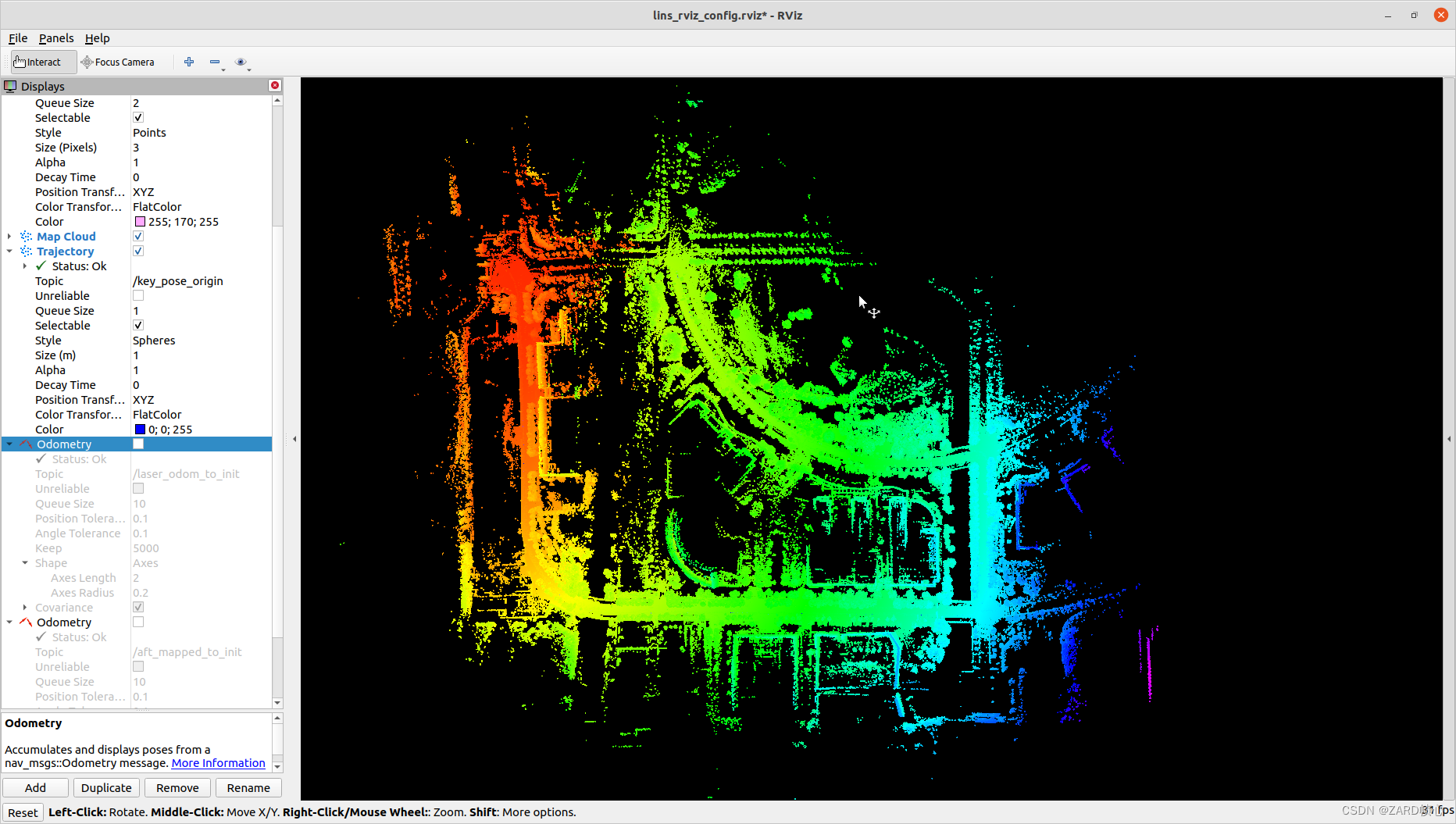

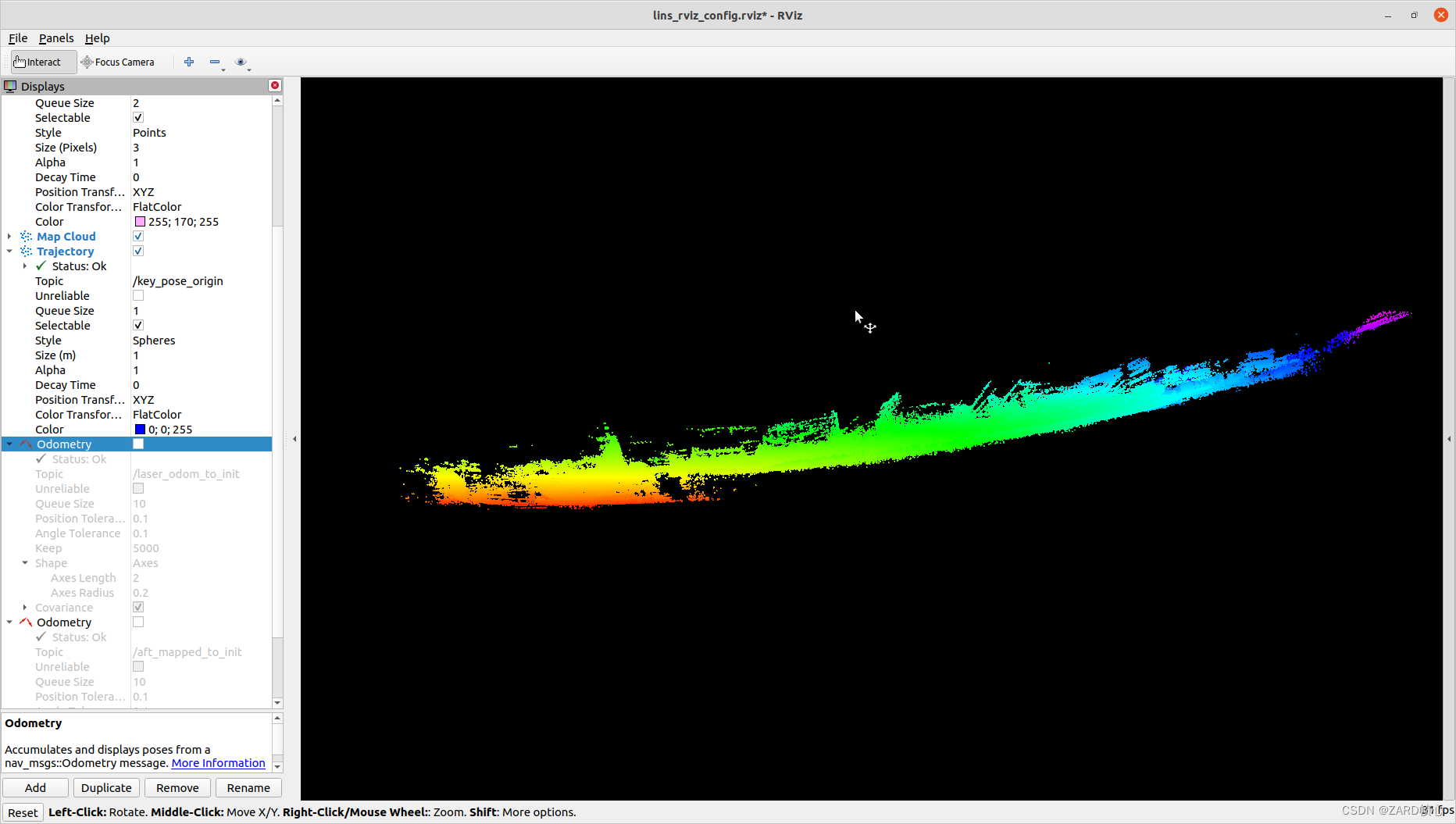

rosbag play street_02.bagAs shown in the figure below, the red trace is the front-end odometer and the green trace is the local optimization result, A-LOAM still works very well on this dataset

The side view shows that the front-end odometer drift is very severe, illustrating the importance of local optimization in the back-end

7, LeGO-LOAM

LeGO-LOAM is also simple to run, with LiDAR topics as/velodyne_points, IMU Topics/handsfree/imurenamed/imu/data:

source devel/setup.bash

roslaunch lego_loam run.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

rosbag play street_02.bag --clock /handsfree/imu:=/imu/dataAs shown in the figure below, LeGO-LOAM has a large drift

8, LIO-SAM

8.1 Configuration parameters

inconfigAdd a parameter file to the folderconfig/params_street.yamlmodeled afterconfig/params.yamlfile, rootxsens_imu_param.yamlandextrinsic.yamlFile modification parameters, mainly modify LiDAR and IMU data topics, results output path, IMU internal reference and joint calibration results, the rest of the parameters are adjusted according to need.

pointCloudTopic: "velodyne_points" # Point cloud data

imuTopic: "/handsfree/imu" # IMU data

# Export settings

savePCD: true # https://github.com/TixiaoShan/LIO-SAM/issues/3

savePCDDirectory: "~/Output/" # in your home folder, starts and ends with "/". Warning: the code deletes "LOAM" folder then recreates it. See "mapOptimization" for implementation

# Sensor Settings

sensor: velodyne # lidar sensor type, either 'velodyne' or 'ouster'

N_SCAN: 32 # number of lidar channel (i.e., 16, 32, 64, 128)

Horizon_SCAN: 1800 # lidar horizontal resolution (Velodyne:1800, Ouster:512,1024,2048)

downsampleRate: 1 # default: 1. Downsample your data if too many points. i.e., 16 = 64 / 4, 16 = 16 / 1

lidarMinRange: 1.0 # default: 1.0, minimum lidar range to be used

lidarMaxRange: 1000.0

# IMU Settings

imuAccNoise: 3.7686306102624571e-02

imuGyrNoise: 2.3417543020438883e-03

imuAccBiasN: 1.1416642385952368e-03

imuGyrBiasN: 1.4428407712885209e-05

# Shanghai is located at 31°12′ N. The exact value of the acceleration of gravity g is 9.7940 m/s².

imuGravity: 9.7940

imuRPYWeight: 0.01

# Extrinsics (lidar -> IMU)

# is given as IMU -> lidar, but the rotation matrix is a unitary matrix, so it's straightforward to take negative

extrinsicTrans: [0.27255, -0.00053, 0.17954]

extrinsicRot: [1, 0, 0,

0, 1, 0,

0, 0, 1]

extrinsicRPY: [1, 0, 0,

0, 1, 0,

0, 0, 1]inlaunch/run.launchModify the parameter text path in

<!-- <rosparam file="$(find lio_sam)/config/params.yaml" command="load" /> -->

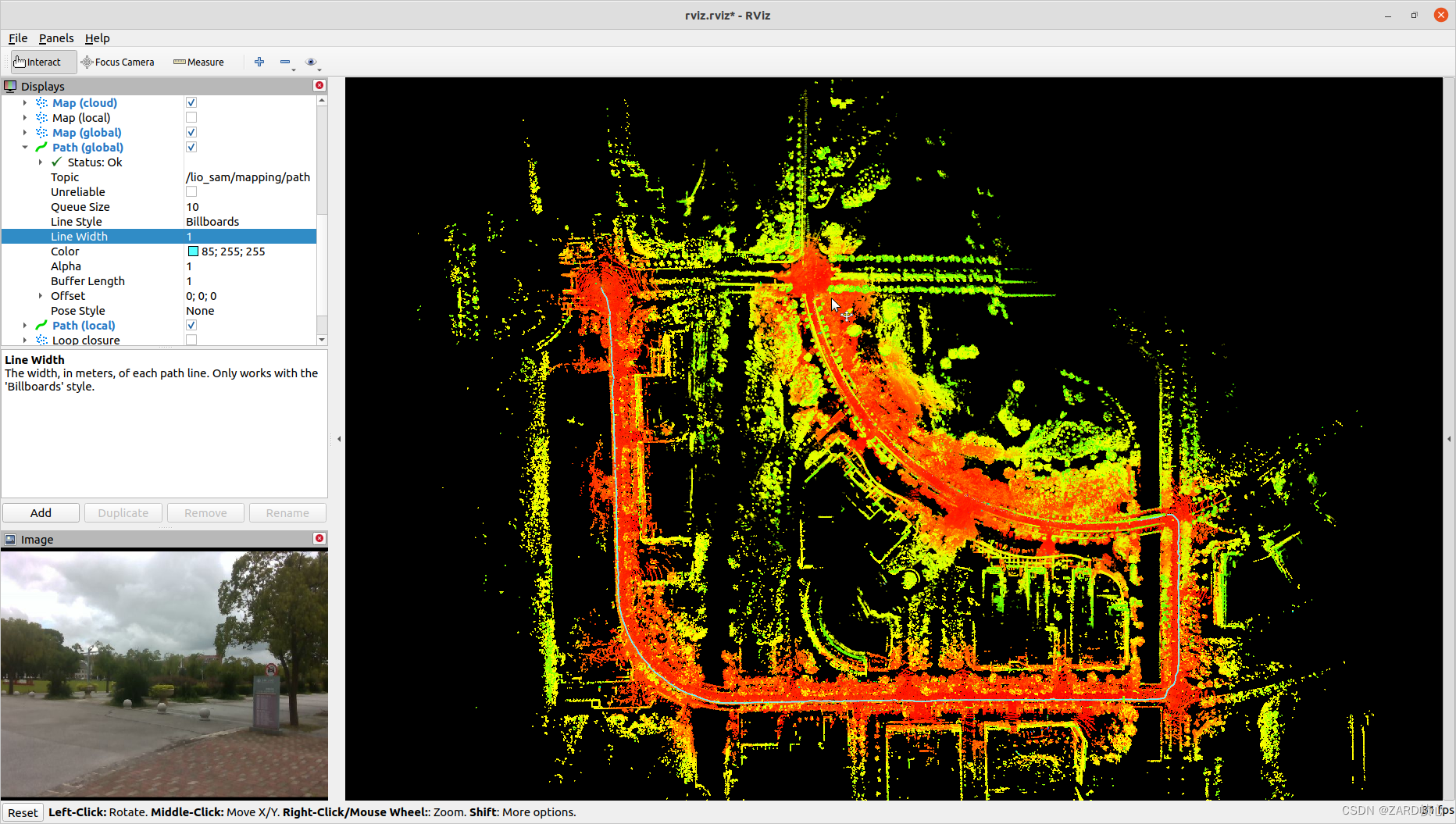

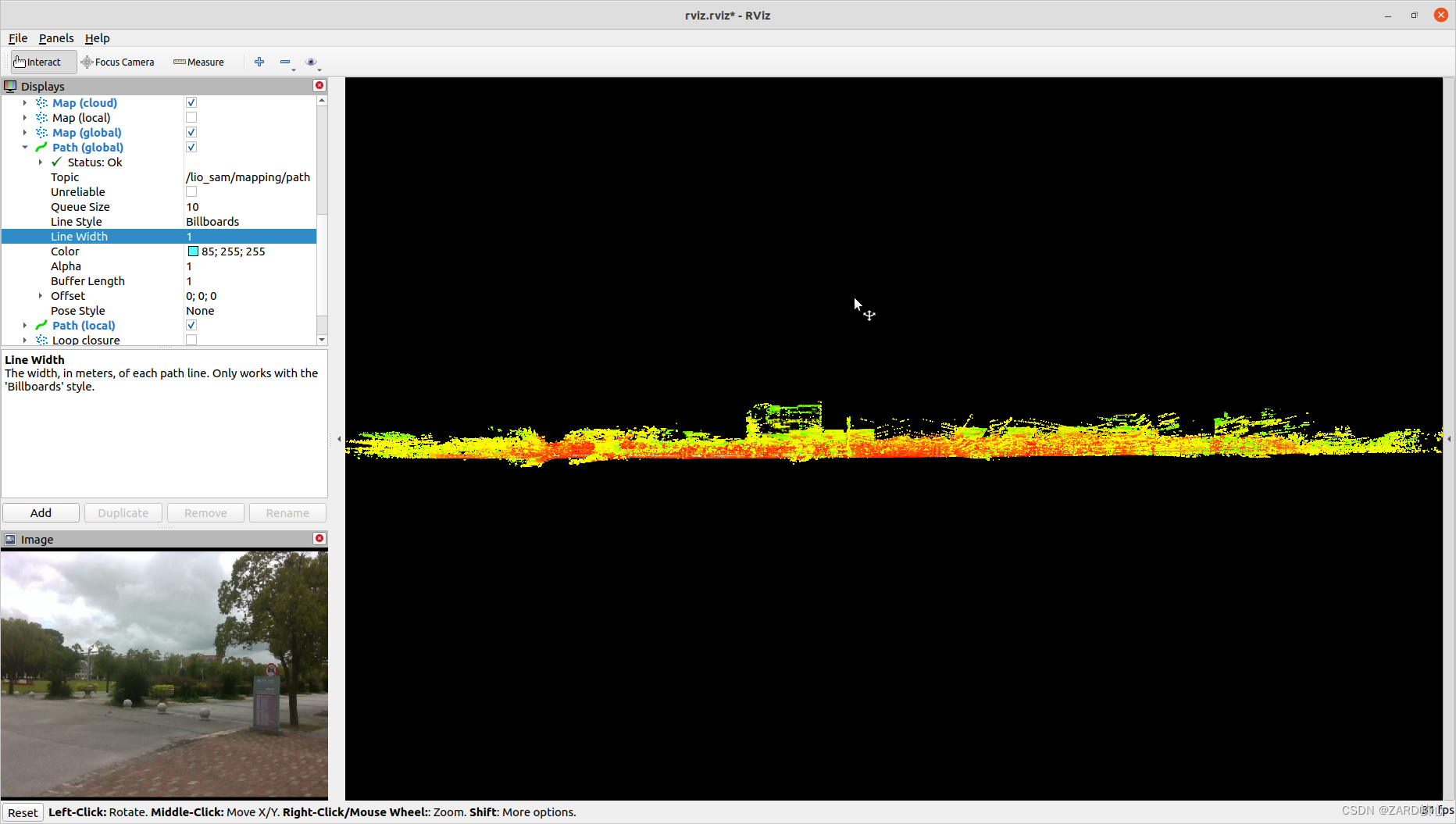

<rosparam file="$(find lio_sam)/config/params_street.yaml" command="load" />8.2 Operation

source devel/setup.bash

roslaunch lio_sam run.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

rosbag play street_02.bagFor some reason, the LIO-SAM results don’t look as good as A-LOAM, which will be analyzed later on

Nine, LVI-SAM

9.1 Configuration parameters

The authors of M2DGR have provided a parameter profile for LVI-SAMmodule_sam.launch、my_params_camera.yaml、my_params_lidar.yaml, the main thing to keep in mind is the following parameters:

# my_params_camera.yaml

#common parameters

imu_topic: "/handsfree/imu"

image_topic: "/camera/color/image_raw"

# lidar to camera extrinsic

lidar_to_cam_tx: 0.27255

lidar_to_cam_ty: -0.00053

lidar_to_cam_tz: 0.17954

lidar_to_cam_rx: 0.0

lidar_to_cam_ry: 0.0

lidar_to_cam_rz: 0.0

# camera model

model_type: PINHOLE

camera_name: camera

# Mono camera config

image_width: 640

image_height: 480

distortion_parameters:

k1: 0.148000794688248

k2: -0.217835187249065

p1: 0

p2: 0

projection_parameters:

fx: 617.971050917033

fy: 616.445131524790

cx: 327.710279392468

cy: 253.976983707814

#imu parameters The more accurate parameters you provide, the worse performance

acc_n: 1.2820343288774358e-01 # accelerometer measurement noise standard deviation. #0.2

gyr_n: 2.1309311394972831e-02 # gyroscope measurement noise standard deviation. #0.05

acc_w: 1.3677912958097768e-02 # accelerometer bias random work noise standard deviation. #0.02

gyr_w: 3.6603917782528627e-04 # gyroscope bias random work noise standard deviation. #4.0e-5

g_norm: 9.805 # gravity magnitude

# Extrinsic parameter between IMU and Camera.

estimate_extrinsic: 0

extrinsicRotation: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [ 0.0, 0.0, 1.0,

-1.0, 0.0, 0.0,

0.0, -1.0, 0.0]

#Translation from camera frame to imu frame, imu^T_cam

extrinsicTranslation: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [0.57711, -0.00012, 0.83333]

#feature traker paprameters

freq: 20# my_params_lidar.yaml

# Topics

pointCloudTopic: "velodyne_points" # Point cloud data

imuTopic: "handsfree/imu" # IMU data

# Heading

useImuHeadingInitialization: false # if using GPS data, set to "true"

# Export settings

savePCD: true # https://github.com/TixiaoShan/LIO-SAM/issues/3

savePCDDirectory: "~/Output/" # in your home folder, starts and ends with "/". Warning: the code deletes "LOAM" folder then recreates it. See "mapOptimization" for implementation

# Sensor Settings

N_SCAN: 32 # number of lidar channel (i.e., 16, 32, 64, 128)

Horizon_SCAN: 1800 # lidar horizontal resolution (Velodyne:1800, Ouster:512,1024,2048)

timeField: "time" # point timestamp field, Velodyne - "time", Ouster - "t"

downsampleRate: 1 # default: 1. Downsample your data if too many points. i.e., 16 = 64 / 4, 16 = 16 / 1

# IMU Settings

imuAccNoise: 3.7686306102624571e-02

imuGyrNoise: 2.3417543020438883e-03

imuAccBiasN: 1.1416642385952368e-03

imuGyrBiasN: 1.4428407712885209e-05

imuGravity: 9.805

# Extrinsics (lidar -> IMU)

extrinsicTrans: [0.27255, -0.00053,0.17954]

extrinsicRot: [1, 0, 0, 0, 1, 0, 0, 0, 1]

extrinsicRPY: [1, 0, 0, 0, 1, 0, 0, 0, 1]<! -- Image decompression -->

<node pkg="image_transport" type="republish" name="image_republish" args="compressed in:=/camera/color/image_raw raw out:=/camera/color/image_raw" output="screen" respawn="true"/>

<!-- Lidar odometry param -->

<rosparam file="$(find lvi_sam)/config/my_params_lidar.yaml" command="load" />

<!-- VINS config -->

<param name="vins_config_file" type="string" value="$(find lvi_sam)/config/my_params_camera.yaml" />9.2 Operation

Since M2DGR uses a different sensor coordinate system than the LVI-SAM data (actually the LVI-SAM IMU is more specific), which is also provided by the M2DGR authors, a slight modification is needed in the internal code, which is modified as follows:

- src/visual_odometry/visual_estimator/initial/initial_alignment.h

// n(n_in)

// {

// q_lidar_to_cam = tf::Quaternion(0, 1, 0, 0);

// q_lidar_to_cam_eigen = Eigen::Quaterniond(0, 0, 0, 1);

// }

n(n_in)

{

q_lidar_to_cam = tf::Quaternion(0, 0, 0, 1);

q_lidar_to_cam_eigen = Eigen::Quaterniond(1,0,0,0);

}// tf::Quaternion q_odom_cam = tf::createQuaternionFromRPY(0, 0, M_PI) * (q_odom_lidar * q_lidar_to_cam);

tf::Quaternion q_odom_cam = tf::createQuaternionFromRPY(0, 0, M_PI / 2.0) * (q_odom_lidar * q_lidar_to_cam);- src/visual_odometry/visual_estimator/utility/visualization.cpp

// tf::Quaternion q_cam_to_lidar(0, 1, 0, 0);

tf::Quaternion q_cam_to_lidar(0, 0, 0, 1);

// static tf::Transform t_odom_world = tf::Transform(tf::createQuaternionFromRPY(0, 0, M_PI), tf::Vector3(0, 0, 0));

static tf::Transform t_odom_world = tf::Transform(tf::createQuaternionFromRPY(0, 0, 0), tf::Vector3(0, 0, 0));- src/visual_odometry/visual_feature/feature_tracker_node.cpp**

// Comment the following code:

// pcl::PointCloud<PointType>::Ptr laser_cloud_offset(new pcl::PointCloud<PointType>());

// Eigen::Affine3f transOffset = pcl::getTransformation(L_C_TX, L_C_TY, L_C_TZ, L_C_RX, L_C_RY, L_C_RZ);

// pcl::transformPointCloud(*laser_cloud_in, *laser_cloud_offset, transOffset);

// *laser_cloud_in = *laser_cloud_offset;Recompile after modification:

cd ~/catkin_ws

catkin_make -j1(of a computer) run

source devel/setup.bash

roslaunch lvi_sam run.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

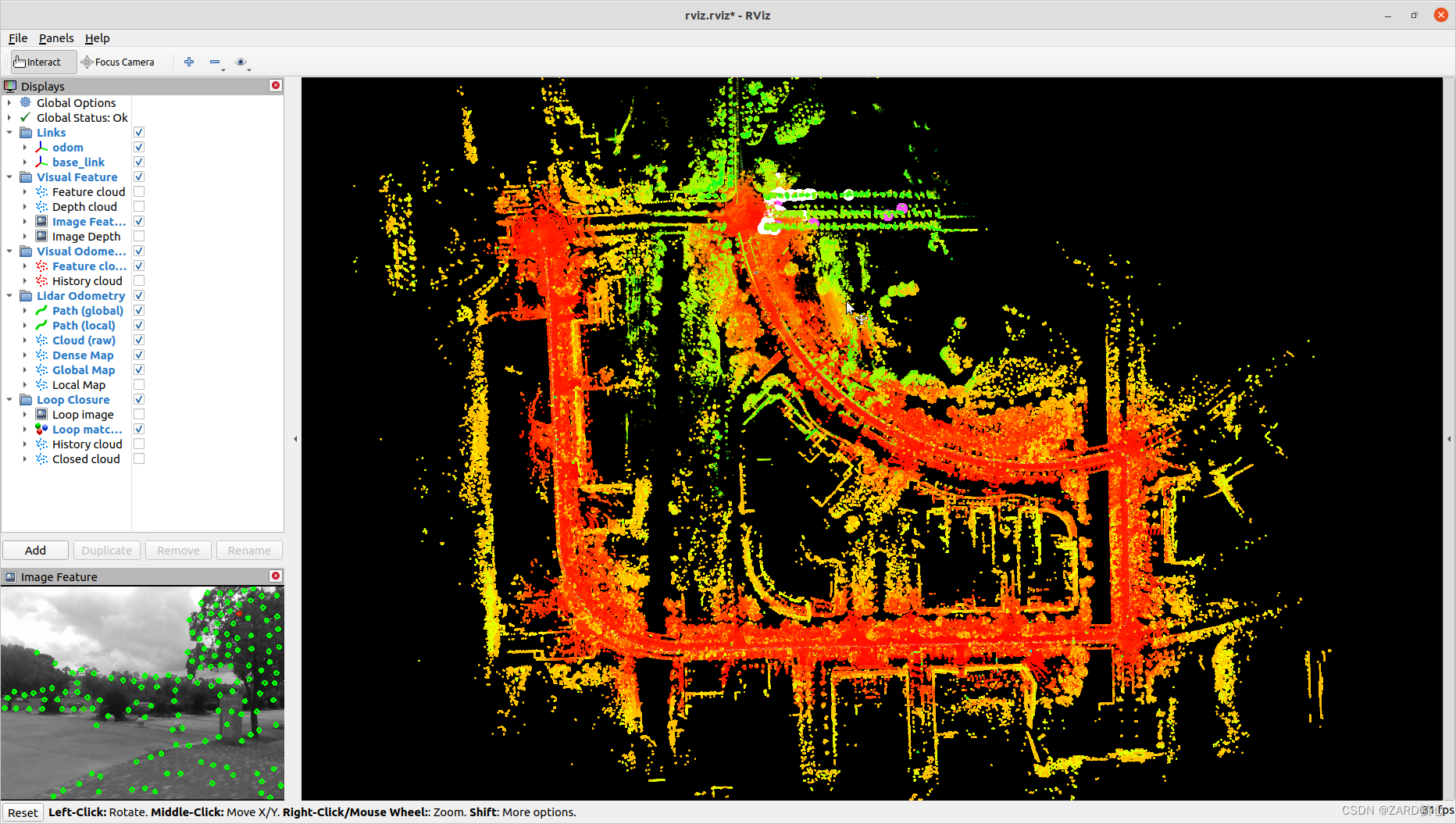

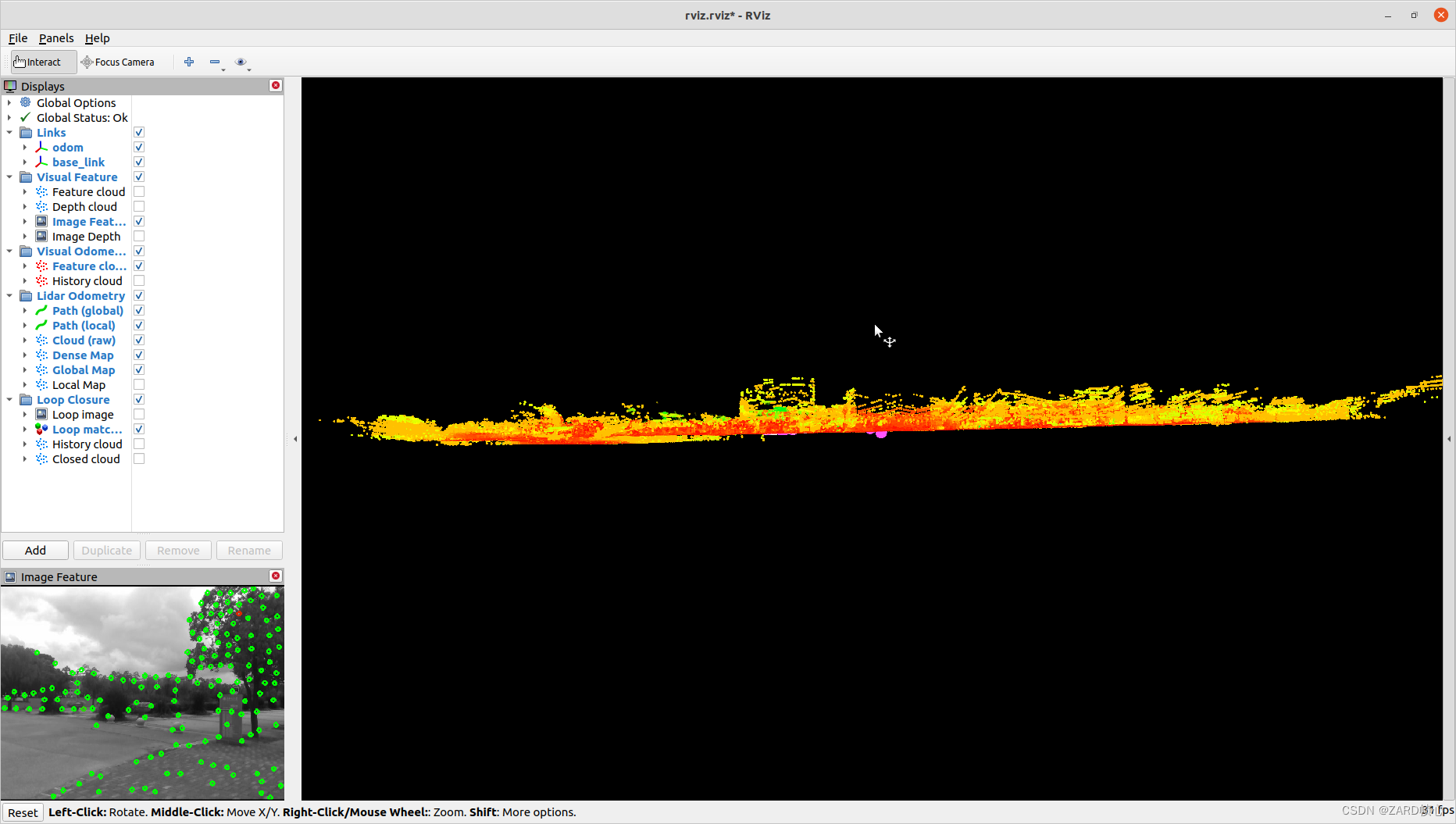

rosbag play street_02.bagAs with LIO-SAM, it doesn’t work as well and looks even worse than LIO-SAM

10, LINS

10.1 Installation

With ROS, gtsam and OpenCV already installed:

cd ~/catkin_ws/src

git clone https://github.com/ChaoqinRobotics/LINS---LiDAR-inertial-SLAM.git

cd ..

catkin_make -j1Note that as with LeGO-LOAM, the first time you compile the code, you need to add the “catkin_make“-j1” is added to generate some message types. Subsequent compilations do not require “-j1”, and message names will conflict if placed in a function package with LeGO-LOAM (optionally, the message name can be changed, or the function package for which one of the messages was compiled can be deleted).

- Changed C++ standard to 14 due to PCL version 1.10:

#set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++11 -O3")

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14 -O3")- Encountering errors:

error: ‘string’ in namespace ‘std::__cxx11’ does not name a typewilllins/src/lib/parameters.cppin the function arguments in lines 134 and 141 of thestd::__cxx11::stringadapt (a story to another medium)std::string - Four similar mistakes:

/usr/bin/ld: cannot find -lBoost::serialization, couldn’t find four libraries for lBoost.CMakeLists.txtAdd:

find_package(Boost REQUIRED COMPONENTS timer thread serialization chrono)10.2 Configuration parameters

acc_n: 3.7686306102624571e-02

gyr_n: 2.3417543020438883e-03

acc_w: 1.1416642385952368e-03

gyr_w: 1.4428407712885209e-05

# extrinsic parameters

init_tbl: !!opencv-matrix

rows: 3

cols: 1

dt: d

data: [0.27255, -0.00053,0.17954]

init_rbl: !!opencv-matrix

rows: 3

cols: 3

dt: d

data: [1, 0, 0,

0, 1, 0,

0, 0, 1]<arg name="config_path" default = "$(find lins)/config/exp_config/M2DGR.yaml" />10.3 Operation

source devel/setup.bash

roslaunch lins run_M2DGR.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

rosbag play street_02.bag --clock /handsfree/imu:=/imu/dataRuntime error:[ERROR] [1677898050.385013867, 1627957054.444385592]: Error transforming odometry 'Odometry' from frame '/camera_init' to frame 'map'inlidar_mapping_node.cpp、transform_fusion_node.cpp、Estimator.cppThe code searches for all/camera_initadapt (a story to another medium)camera_init

Larger drift in the vertical direction

XI. FAST-LIO2

FAST-LIOis a highly efficient LiDAR inertial odometry framework from the Mars Lab at the University of Hong Kong that uses a tightly coupled iteratively extended Kalman filter to fuse LiDAR feature points with IMU data to allow robust navigation in fast-moving, noisy or cluttered environments where degradation occurs. Compared to the first generation version, the second generation uses ikd-Tree incremental mapping to achieve support for LiDAR rates in excess of 100 Hz and support for a wider range of LiDAR and device

11.1 Installation

Without modifying the source code, first install the dependencies:

- ROS (melodic or noetic), refer to my previous blog for installation instructions

- glog:

sudo apt-get install libgoogle-glog-dev - eigen:

sudo apt-get install libeigen3-dev - pcl:

sudo apt-get install libpcl-dev - yaml-cpp:

sudo apt-get install libyaml-cpp-dev

(1) Livox-sdk Installation

git clone https://github.com/Livox-SDK/Livox-SDK.git

cd Livox-SDK

cd build && cmake ..

make

sudo make install(2) Livox_ros_driver installation

# Open a terminal and execute the following

mkdir ws_livox/src -p

# Open the configuration file

gedit ~/.bashrc

# Add the following to the end of the document

source ~/ws_livox/devel/setup.bash

# clone livox_ros_driver to ws_livox/src

cd ~/ws_livox/src

git clone https://github.com/Livox-SDK/livox_ros_driver.git

cd ..

catkin_make

source devel/setup.sh(3) FAST_LIO Installation

cd ~/ws_livox/src

git clone https://github.com/hku-mars/FAST_LIO.git

cd ..

catkin_make11.2 Configuration parameters

Id:

common:

lid_topic: "/velodyne_points"

imu_topic: "/handsfree/imu"

preprocess:

lidar_type: 2 # 1 for Livox serials LiDAR, 2 for Velodyne LiDAR, 3 for ouster LiDAR,

scan_line: 32

scan_rate: 10 # only need to be set for velodyne, unit: Hz,

timestamp_unit: 2 # the unit of time/t field in the PointCloud2 rostopic: 0-second, 1-milisecond, 2-microsecond, 3-nanosecond.

blind: 2

mapping:

acc_cov: 3.7686306102624571e-02

gyr_cov: 2.3417543020438883e-03

b_acc_cov: 1.1416642385952368e-03

b_gyr_cov: 1.4428407712885209e-05

extrinsic_est_en: false # true: enable the online estimation of IMU-LiDAR extrinsic,

extrinsic_T: [ 0.27255, -0.00053,0.17954]

extrinsic_R: [ 1, 0, 0,

0, 1, 0,

0, 0, 1]

publish:

# Publishing path

path_en: true

pcd_save:

# Don't save cloud maps if you don't have to, they're too big #

pcd_save_en: false<rosparam command="load" file="$(find fast_lio)/config/velodyne_M2DGR.yaml" />11.3 Operation

source devel/setup.bash

roslaunch fast_lio mapping_velodyne_M2DGR.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

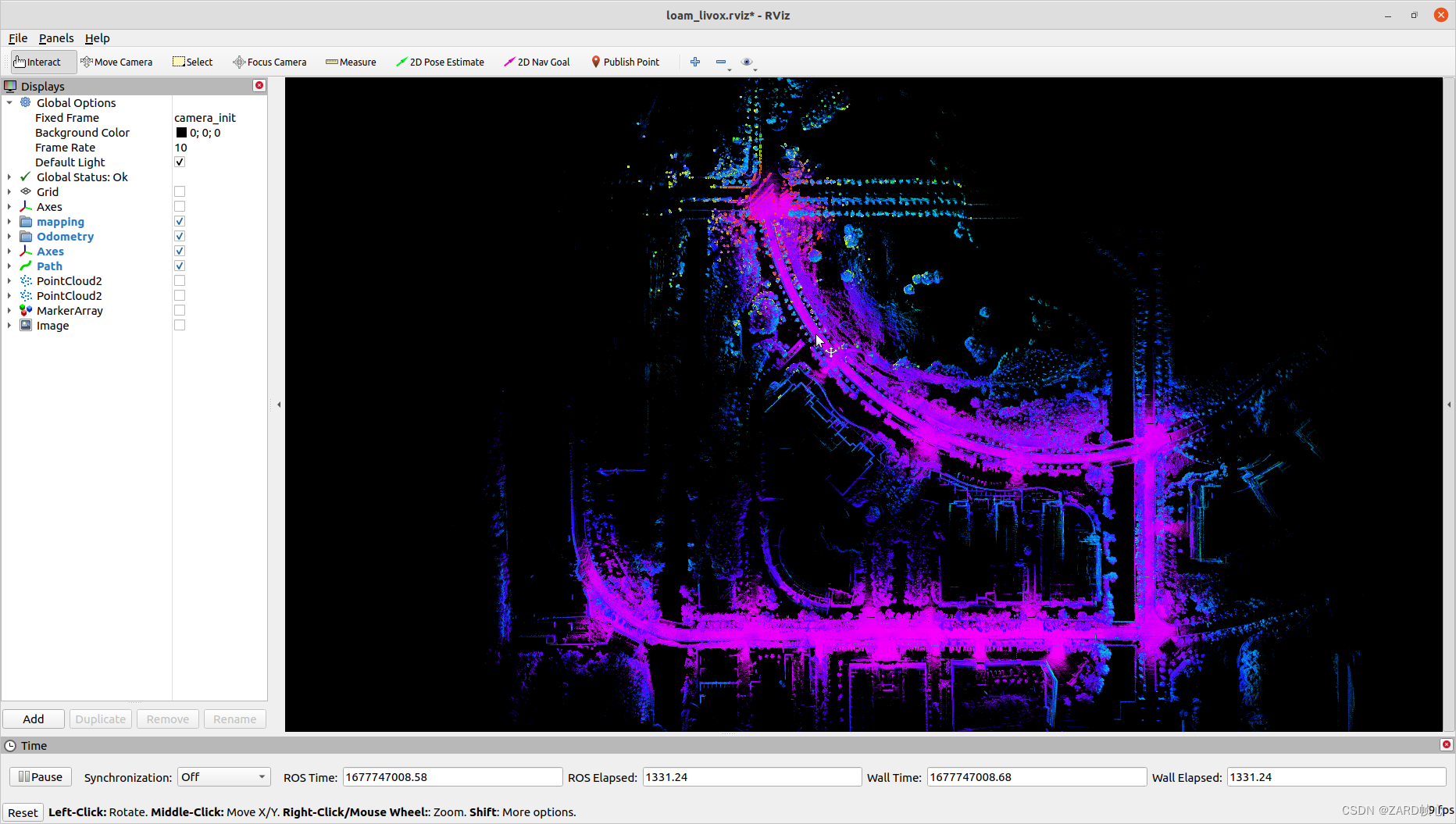

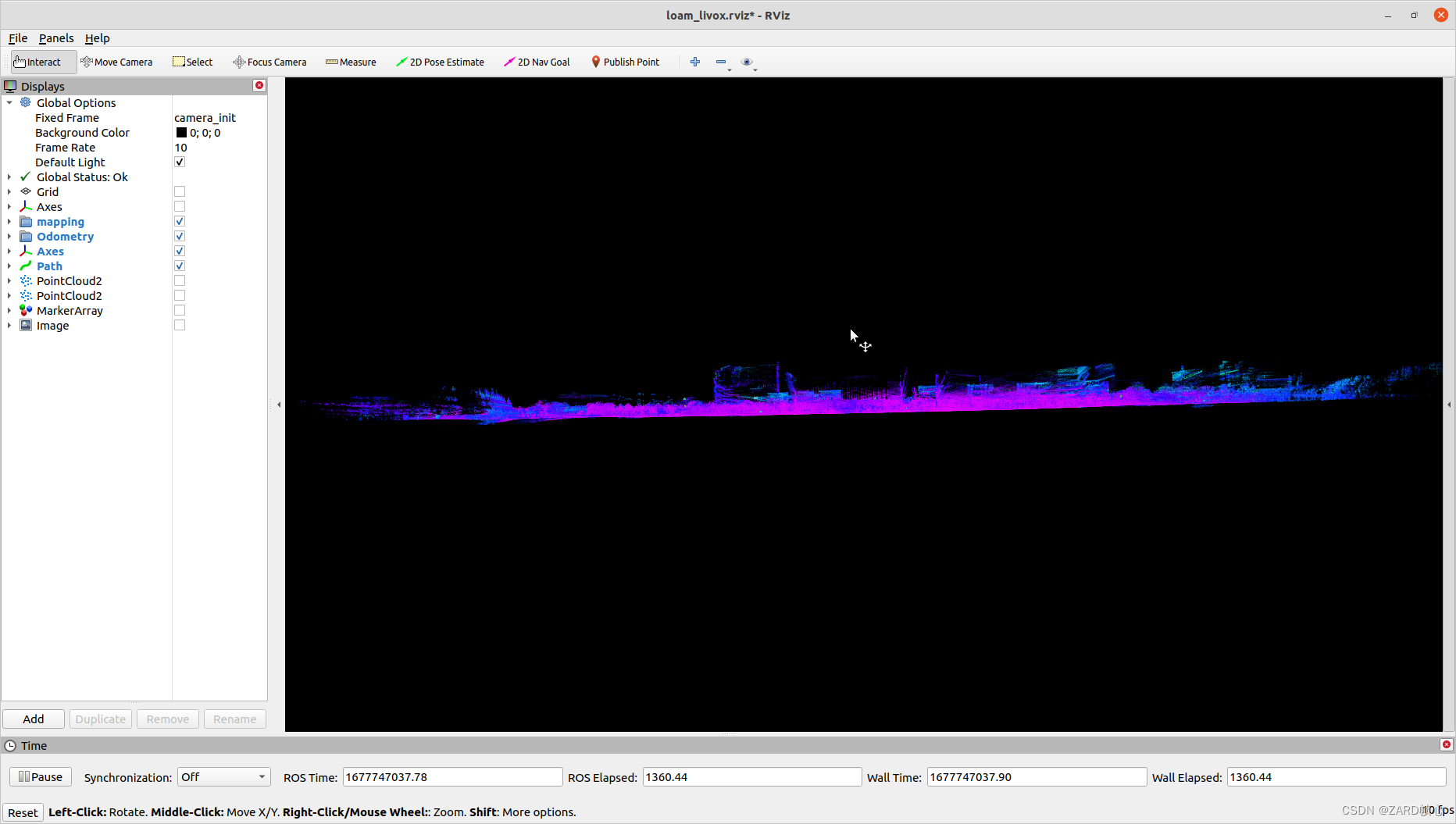

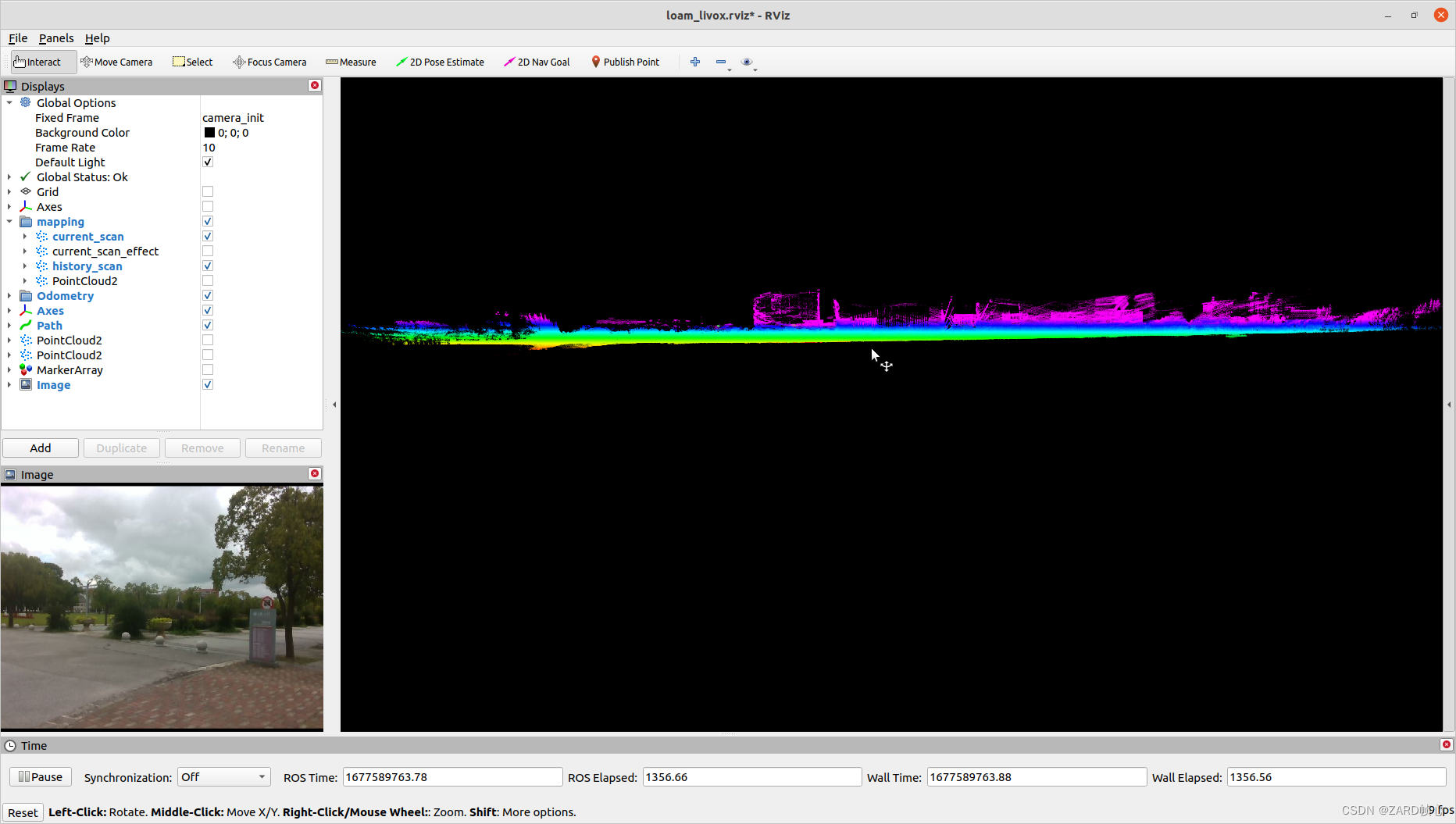

rosbag play street_02.bagIt looks okay.

(xii) FAST-LIVO

12.1 Installation

(1) Installed:

- Ubuntu 16.04~20.04. ROS Installation.

- PCL>=1.6, Follow PCL Installation.

- Eigen>=3.3.4, Follow Eigen Installation.

- OpenCV>=3.2, Follow Opencv Installation.

- Livox-sdk

- Livox_ros_driver

(2) Install the non-template Sophus:

git clone https://github.com/strasdat/Sophus.git

cd Sophus

# version back

git checkout a621ff

mkdir build && cd build

cmake ..

makeBefore making, modify the bugs in this version of Sophus, open the Sophus/sophus/so2.cpp file, and modify the code as follows

// unit_complex_.real() = 1.;

// unit_complex_.imag() = 0.;

unit_complex_.real(1.);

unit_complex_.imag(0.);make

# If you have already installed the template class do not worry, the installation does not affect each other, *.h and *.hpp can coexist in a folder

sudo make install(3) Install Vikit

cd ~/catkin_ws/src

git clone https://github.com/uzh-rpg/rpg_vikit.git(4) Installation of FAST-LIVO

cd ~/catkin_ws/src

git clone https://github.com/hku-mars/FAST-LIVO

cd ..

catkin_makeIf the OpenCV error is reported, change the CMakeLists.txt

FIND_PACKAGE(OpenCV REQUIRED)

# Change to

FIND_PACKAGE(OpenCV 4 REQUIRED)Replace the parameter that reported the error

// CV_RANSAC

cv::RANSAC

// CV_INTER_LINEAR // Bilinear interpolation

cv::INTER_LINEAR

// CV_WINDOW_AUTOSIZE

cv::WINDOW_AUTOSIZEIf a large number of similar errors occur:/usr/bin/ld: /home/zard/catkin_ws_LiDAR/devel/lib/libvikit_common.so: undefined reference to Sophus::SE3::operator*(Eigen::Matrix<double, 3, 1, 0, 3, 1> const&) constIf you are running FIND_PACKAGE(sophus REQUIRED), libSophus.so should be linked to Sophus_LIBRARIES, but cmake is not (for unknown reasons), hence this error. Explicitly link Sophus_LIBRARIES to libSophus.so:

FIND_PACKAGE(Sophus REQUIRED)

set(Sophus_LIBRARIES libSophus.so)12.2 Operation

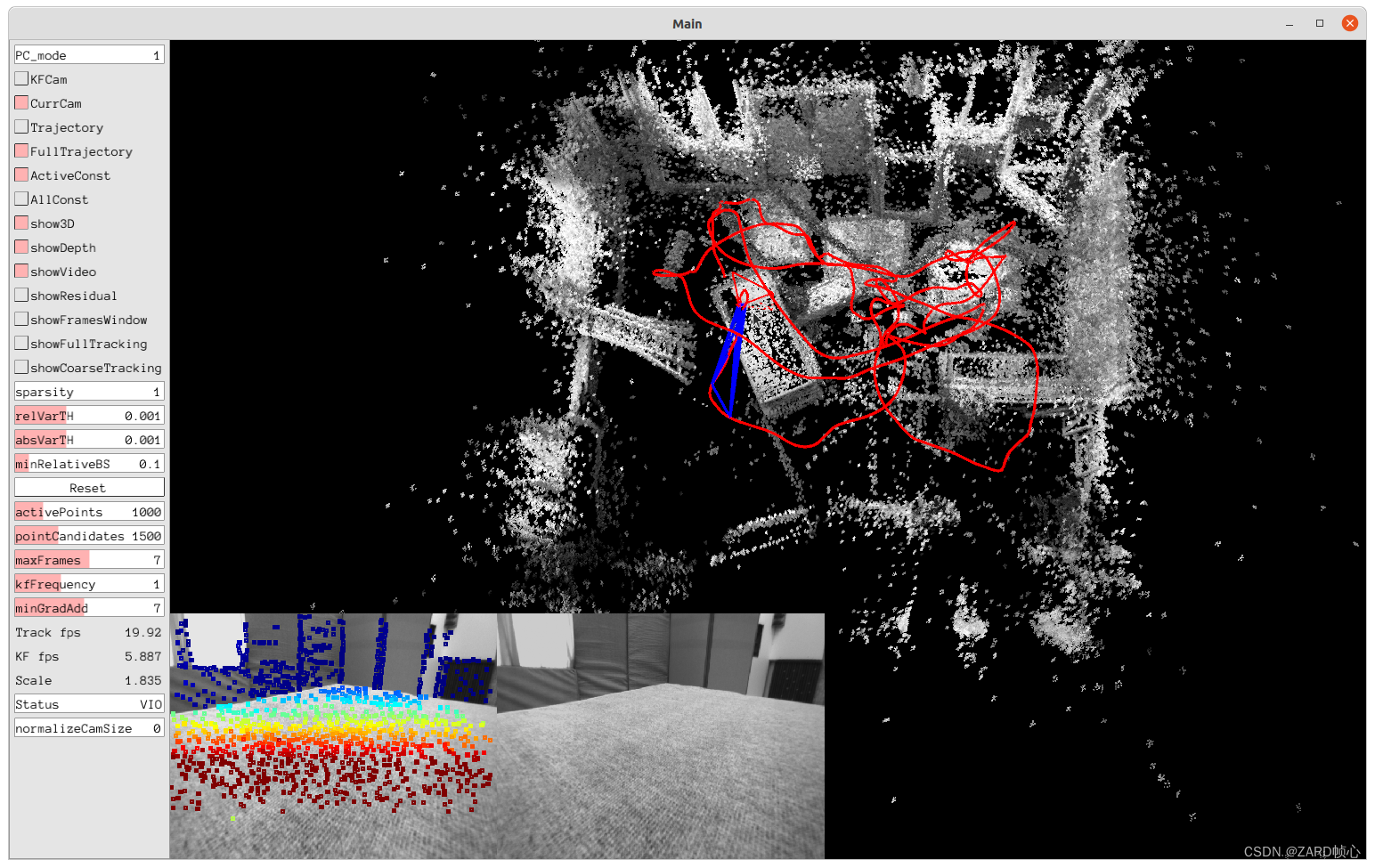

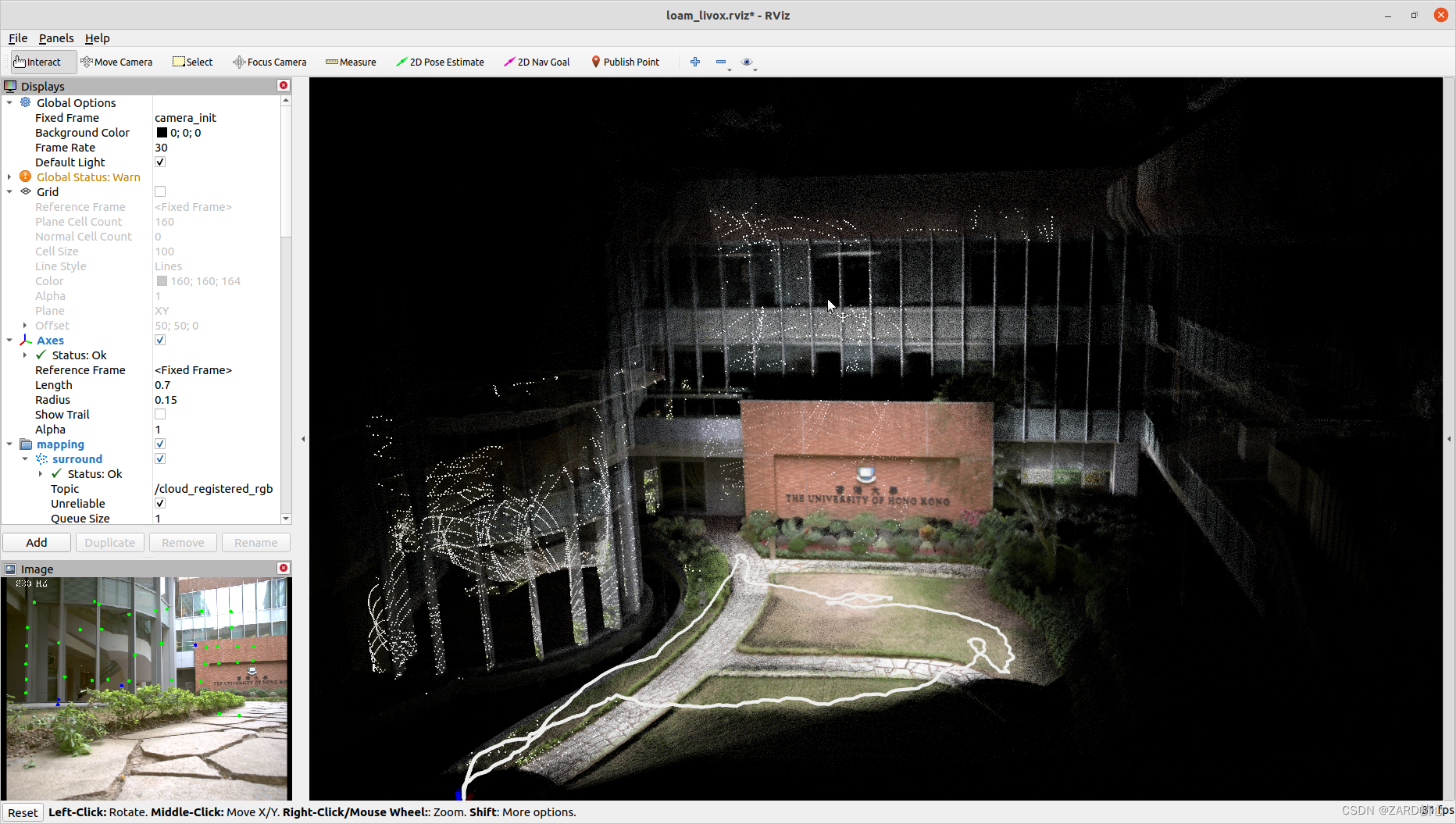

Write here found that due to FAST-LIVO need strict time synchronization, M2DGR data is not very good, but write and do not delete, here to run the sample data:

roslaunch fast_livo mapping_avia.launch

rosbag play hk1.bagThe figure is very beautifully built and stable

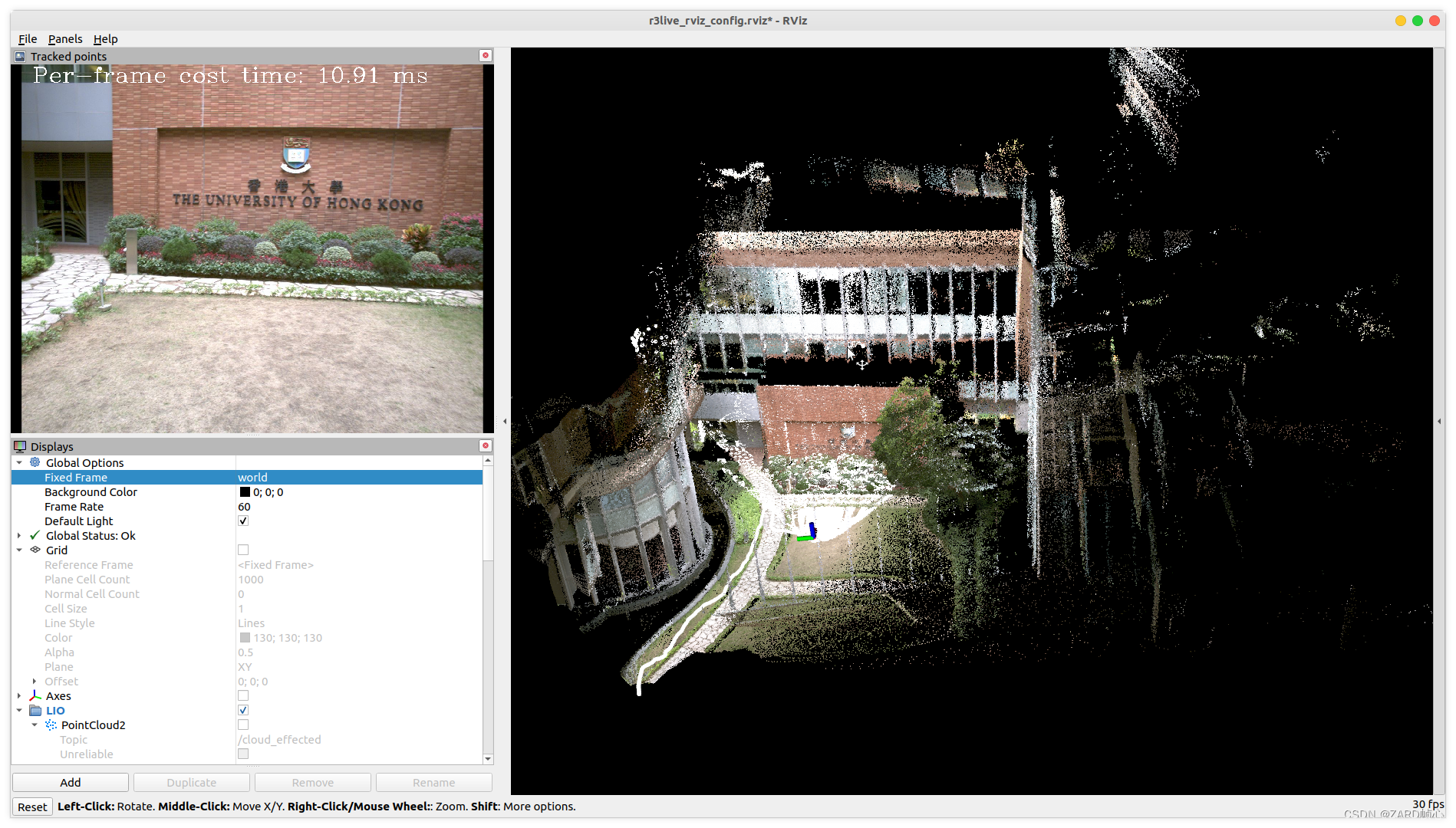

12.3 R3LIVE

Here I’ll add something similar, R3LIVE compiled and running with Livox-sdk, Livox_ros_driver already installed

(1)CGAL

sudo apt-get install libcgal-dev(2) Compilation

cd ~/catkin_ws/src

git clone https://github.com/hku-mars/r3live.git

catkin_make

source ~/catkin_ws/devel/setup.bash(3) Running

roslaunch r3live r3live_bag.launch

rosbag play YOUR.bag

Thirteen, Faster-LIO

Faster_LIOIt is an open source SLAM improved by Gobo on FAST_LIO, which makes SLAM more efficient.

13.1 Faster-LIO Installation

The compilation of Faster-LIO is very simple, Gobo has already tested it in 20.04, first install the dependencies:

- ROS (melodic or noetic), refer to my previous blog for installation instructions

- glog:

sudo apt-get install libgoogle-glog-dev - eigen:

sudo apt-get install libeigen3-dev - pcl:

sudo apt-get install libpcl-dev - yaml-cpp:

sudo apt-get install libyaml-cpp-dev

It can be compiled afterwards:

# Be careful not to be in the same workspace as Livox_ros_driver, otherwise Livox_ros_driver node name conflict

mkdir catkin_ws/src -p

cd catkin_ws

catkin_make13.2 Configuration parameters

Add the configuration file and the launch file, modifying the following

common:

dataset: "M2DGR"

lid_topic: "velodyne_points"

imu_topic: "handsfree/imu"

time_sync_en: false # ONLY turn on when external time synchronization is really not possible

preprocess:

lidar_type: 2 # 1 for Livox serials LiDAR, 2 for Velodyne LiDAR, 3 for ouster LiDAR,

scan_line: 32

blind: 4

time_scale: 1e3 # Compatible with different dataset time units

mapping:

acc_cov: 3.7686306102624571e-02

gyr_cov: 2.3417543020438883e-03

b_acc_cov: 1.1416642385952368e-03

b_gyr_cov: 1.4428407712885209e-05

fov_degree: 180

det_range: 100.0

extrinsic_est_en: true # true: enable the online estimation of IMU-LiDAR extrinsic

extrinsic_T: [ 0.27255, -0.00053,0.17954]

extrinsic_R: [ 1, 0, 0,

0, 1, 0,

0, 0, 1]<!-- Launch file for velodyne32 VLP-32 LiDAR -->

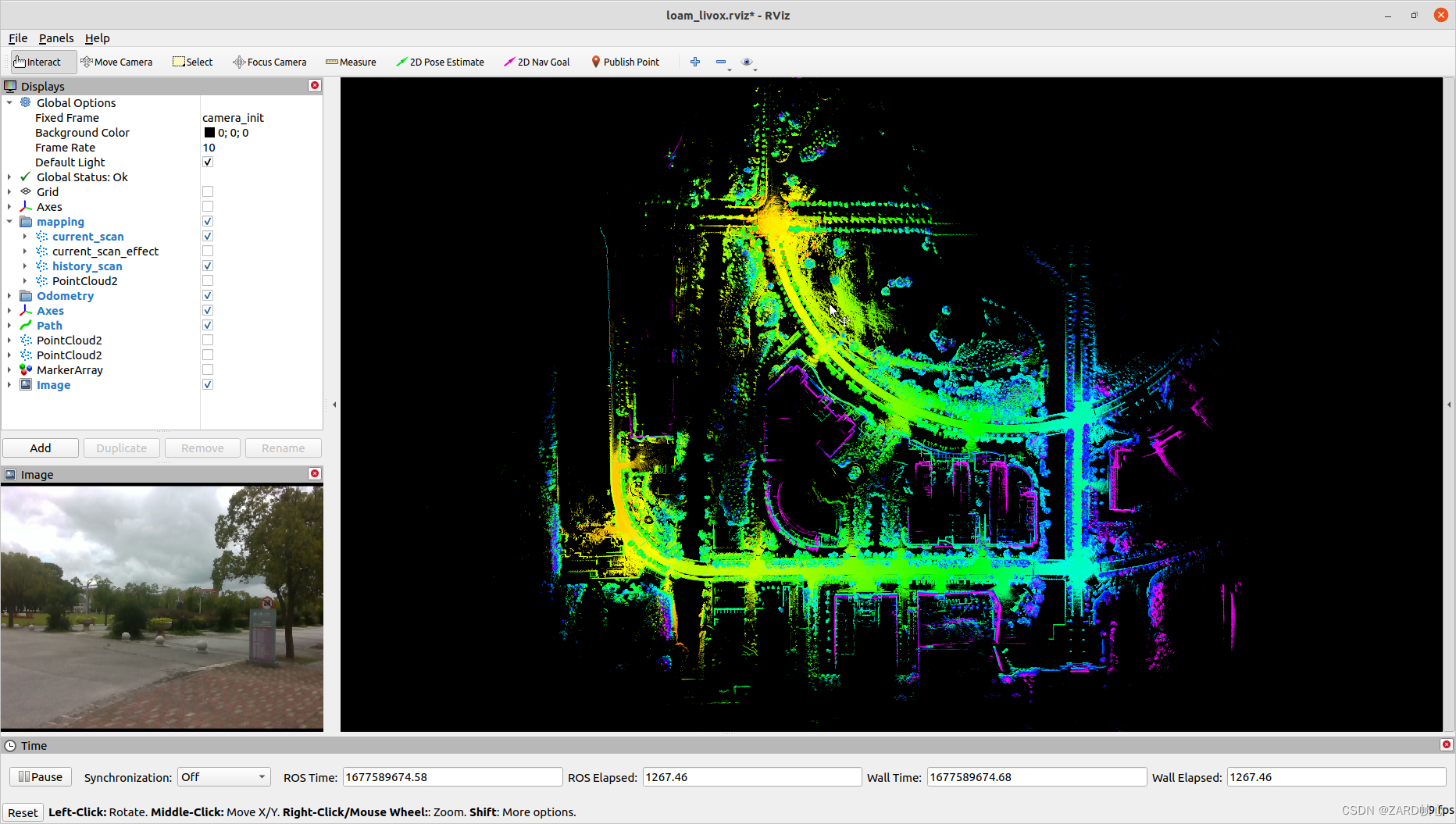

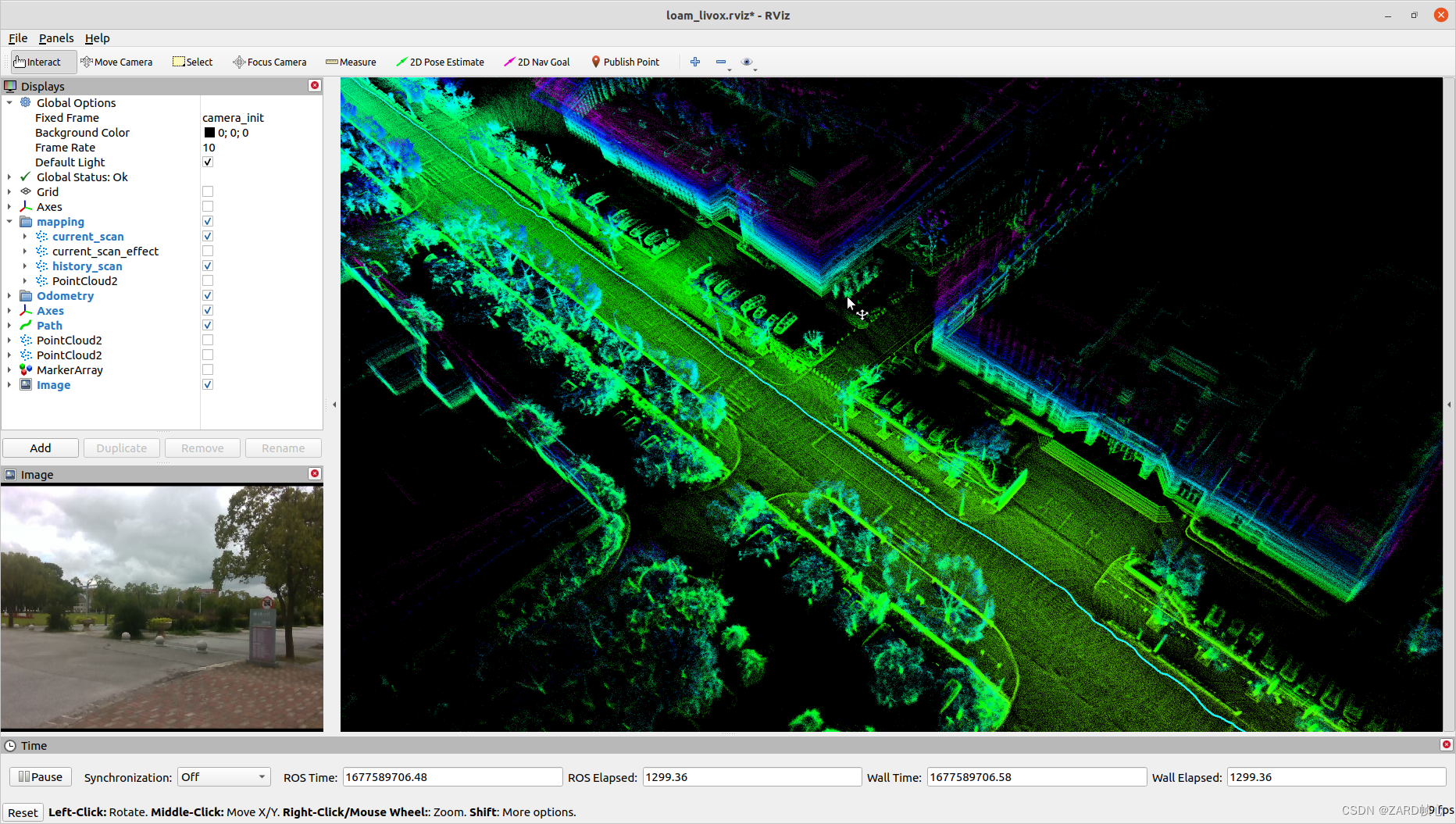

<rosparam command="load" file="$(find faster_lio)/config/velodyne_M2DGR.yaml" />13.3 Operation

source devel/setup.bash

roslaunch faster_lio mapping_velodyne_M2DGR.launch

# Open a terminal in the folder where bag is located and start playing the dataset:

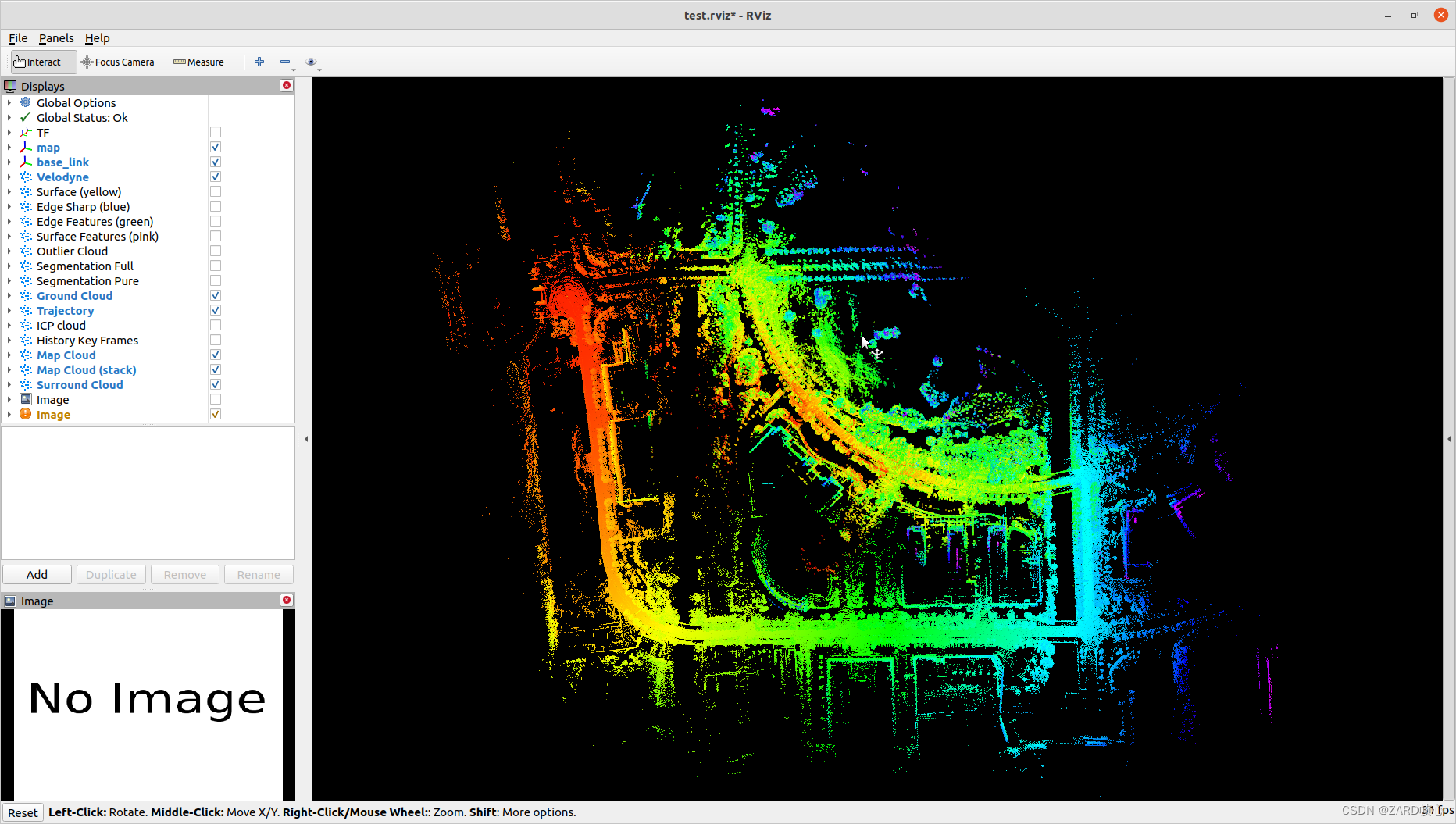

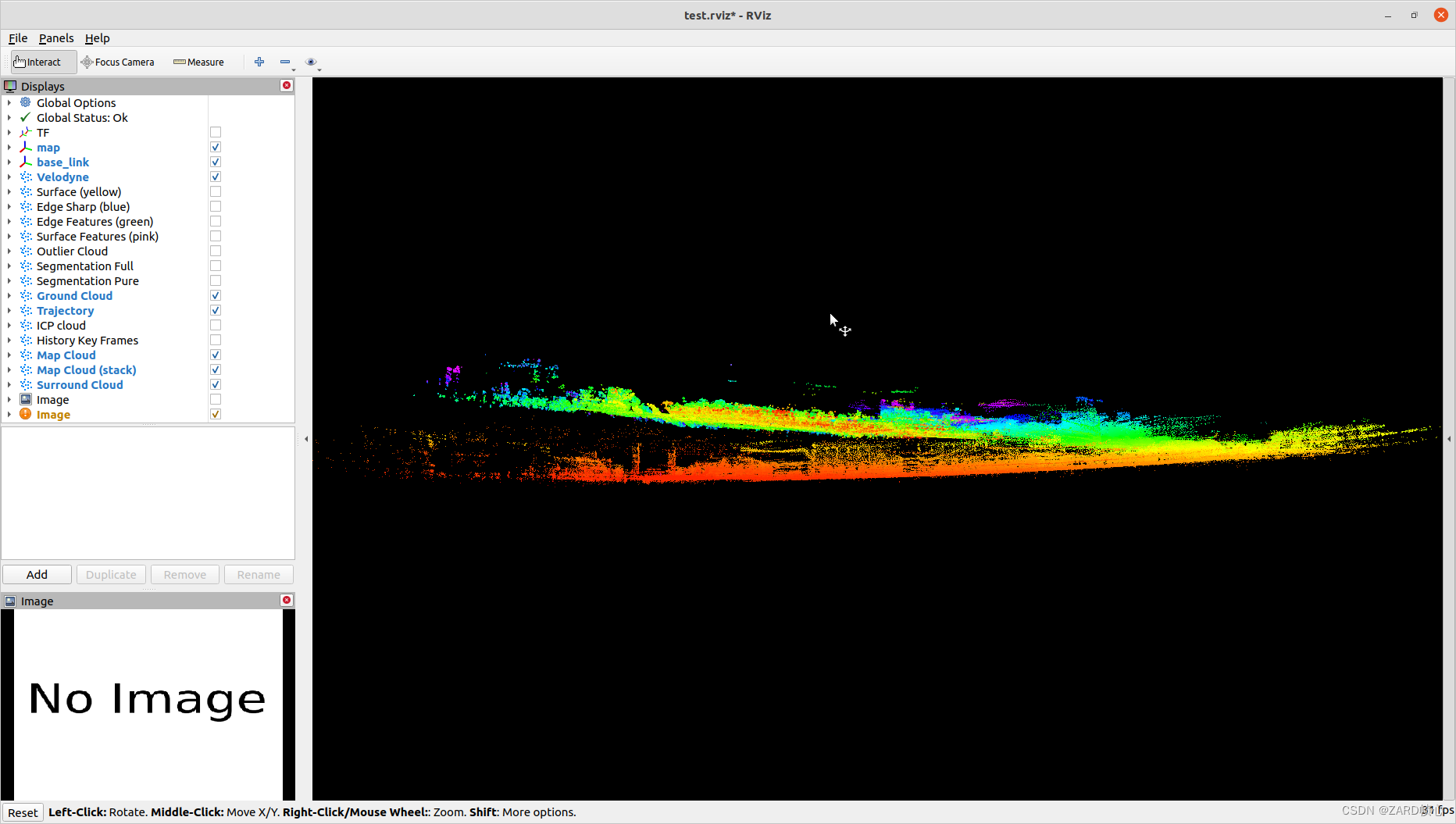

rosbag play street_02.bagThe Faster-Lio effect looks surprisingly good visually, with beautiful details in the built-up diagrams. Specific accuracy depends on the back of the comparison, but the running efficiency of the pro-measurement is indeed much higher than FAST-LIO!

xiv. hdl_graph_slam

hdl-graph-slam not only integrates multiple inputs such as imu, gps, planar, laser, etc., but also carries back-end processing such as closed-loop detection, i.e., a complete one-slam architecture.

14.1 Installation

First we installed ROS and then

sudo apt-get install ros-noetic-geodesy ros-noetic-pcl-ros ros-noetic-nmea-msgs ros-noetic-libg2o

cd catkin_ws/src

git clone https://github.com/koide3/ndt_omp.git

git clone https://github.com/SMRT-AIST/fast_gicp.git --recursive

git clone https://github.com/koide3/hdl_graph_slam

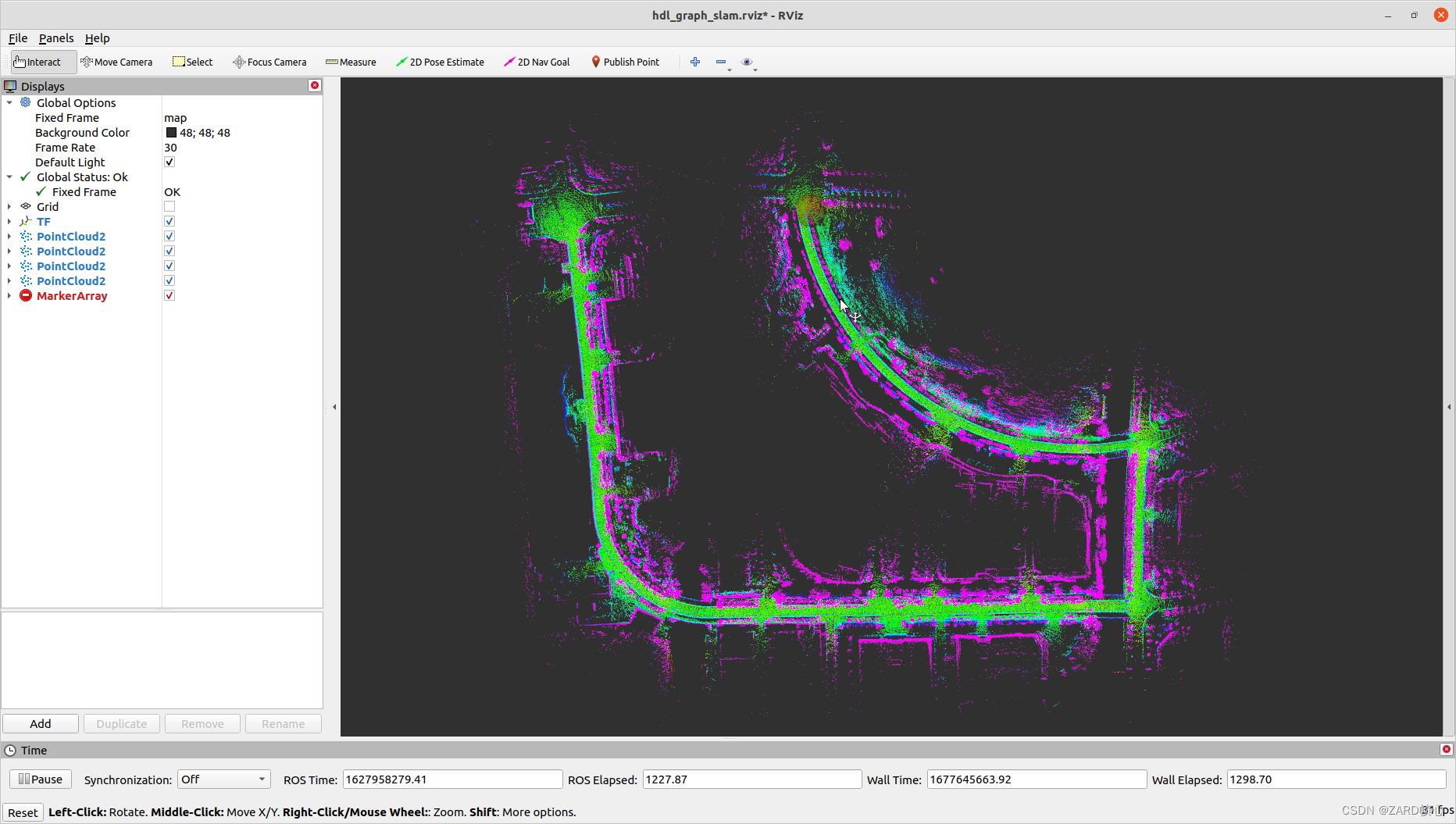

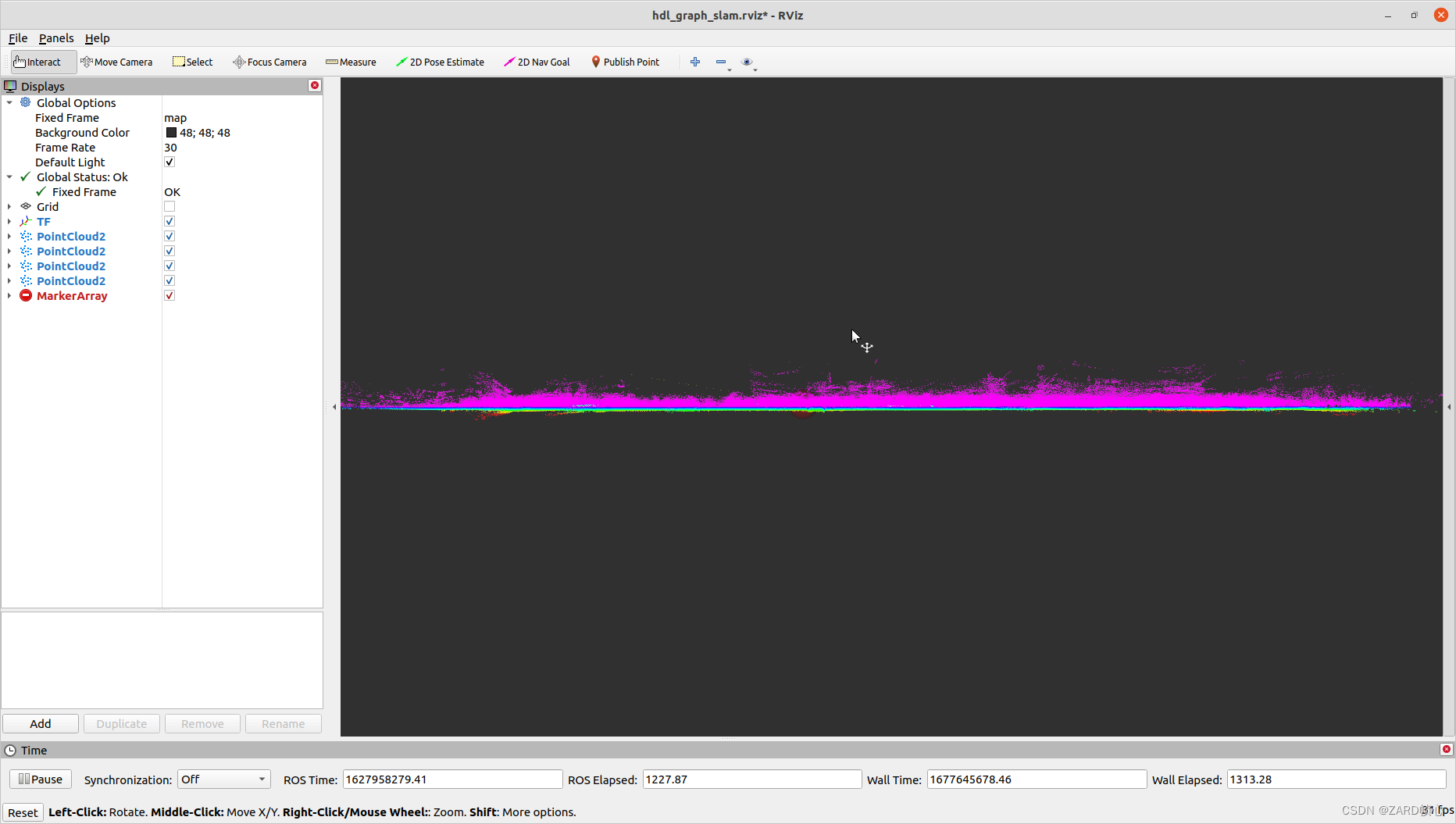

cd .. && catkin_make -DCMAKE_BUILD_TYPE=Release14.2 Pure laser

Open four terminals, each

# Start the master node

roscore

# Set the use_sim_time parameter and run

rosparam set use_sim_time true

roslaunch hdl_graph_slam hdl_graph_slam_400.launch

# Start rviz visualization

roscd hdl_graph_slam/rviz

rviz -d hdl_graph_slam.rviz

# Open a terminal in the folder where bag is located and start playing the dataset:

rosbag play street_02.bag --clock

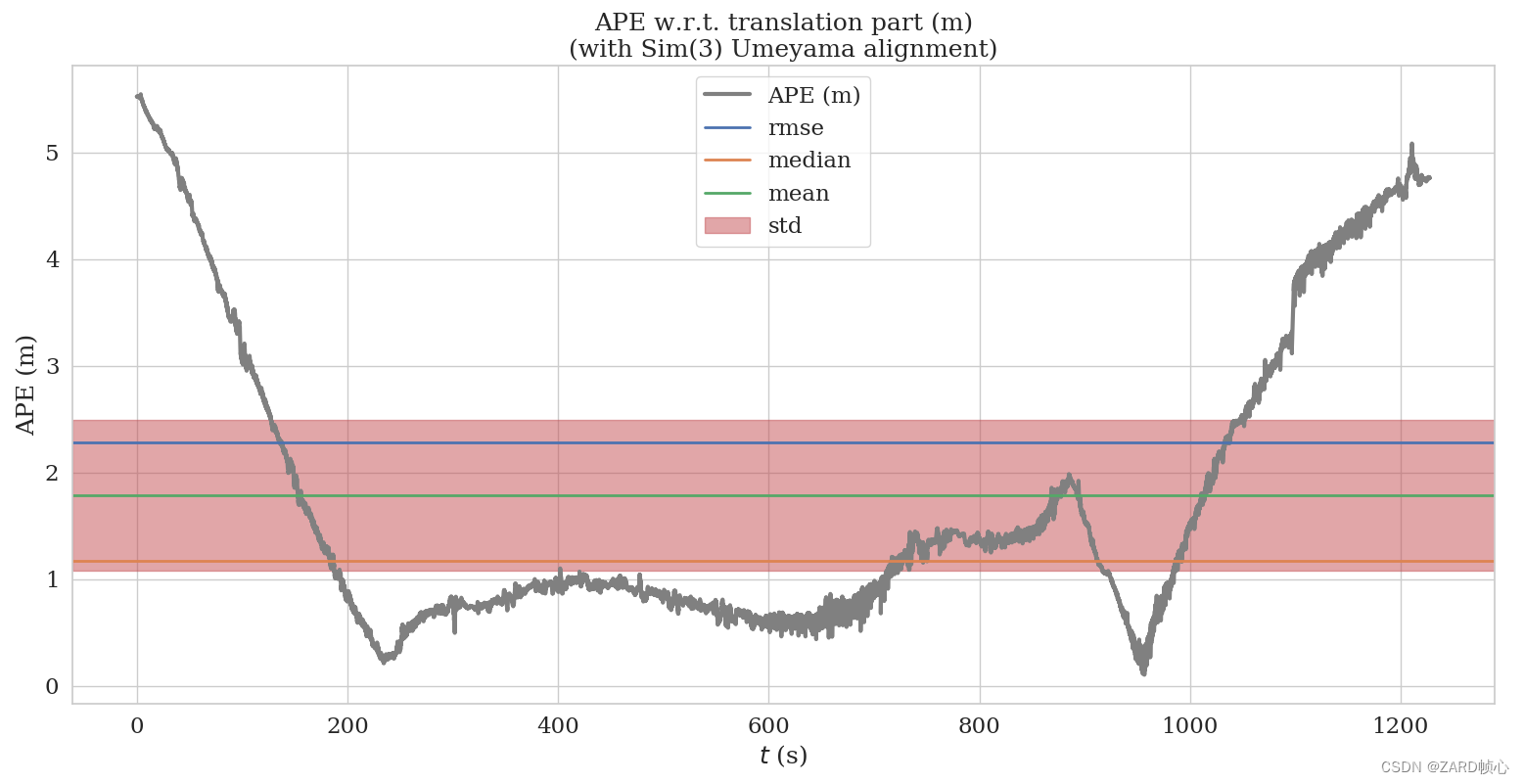

XV. Quality assessment and comparison of positioning results

As for the saving of trajectories, I have added the code for saving TUM format bit poses in the build module, so I won’t write about it in detail here.

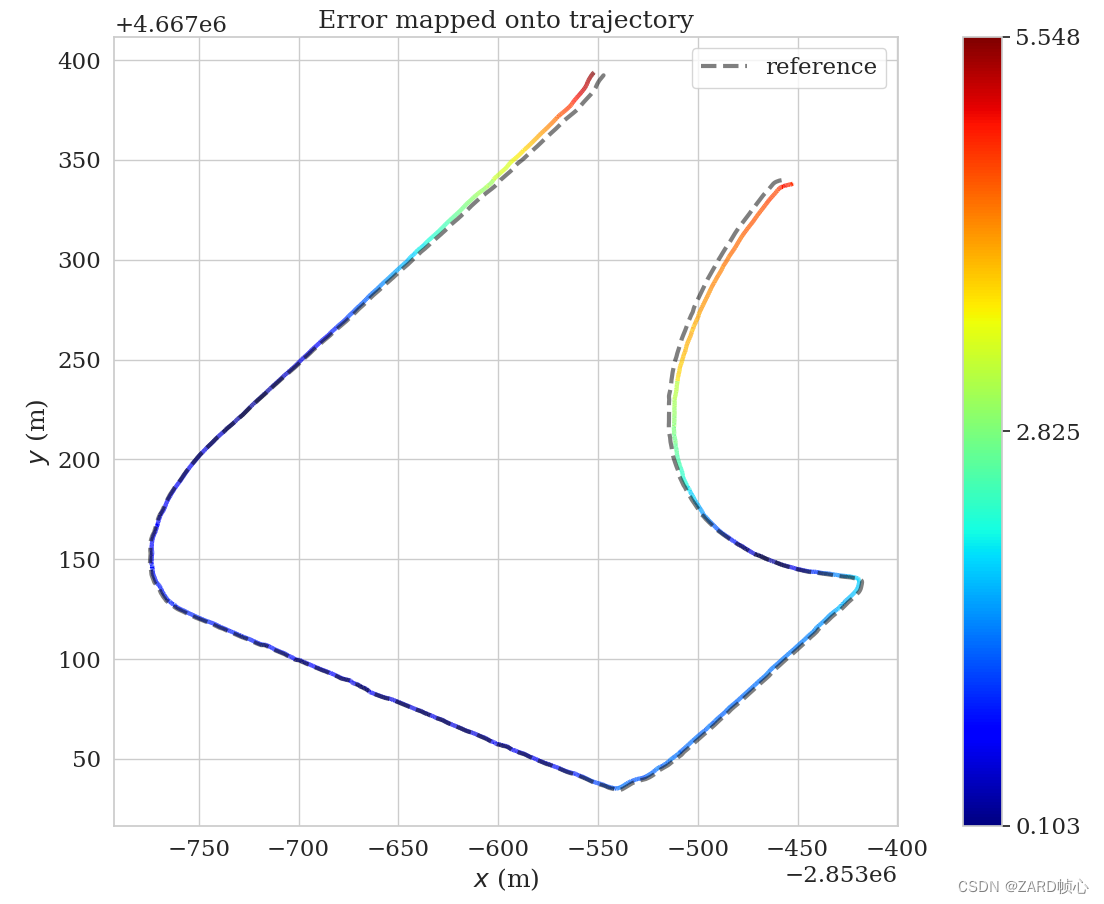

First find the absolute error of each trajectory (A-LOAM for example, thestreet_02.txt(is the trajectory truth value and A-LOAM is the A-LOAM trajectory output):

evo_ape tum street_02.txt A-LOAM -va -s --plot --plot_mode=xy --save_results A-LOAM.zip

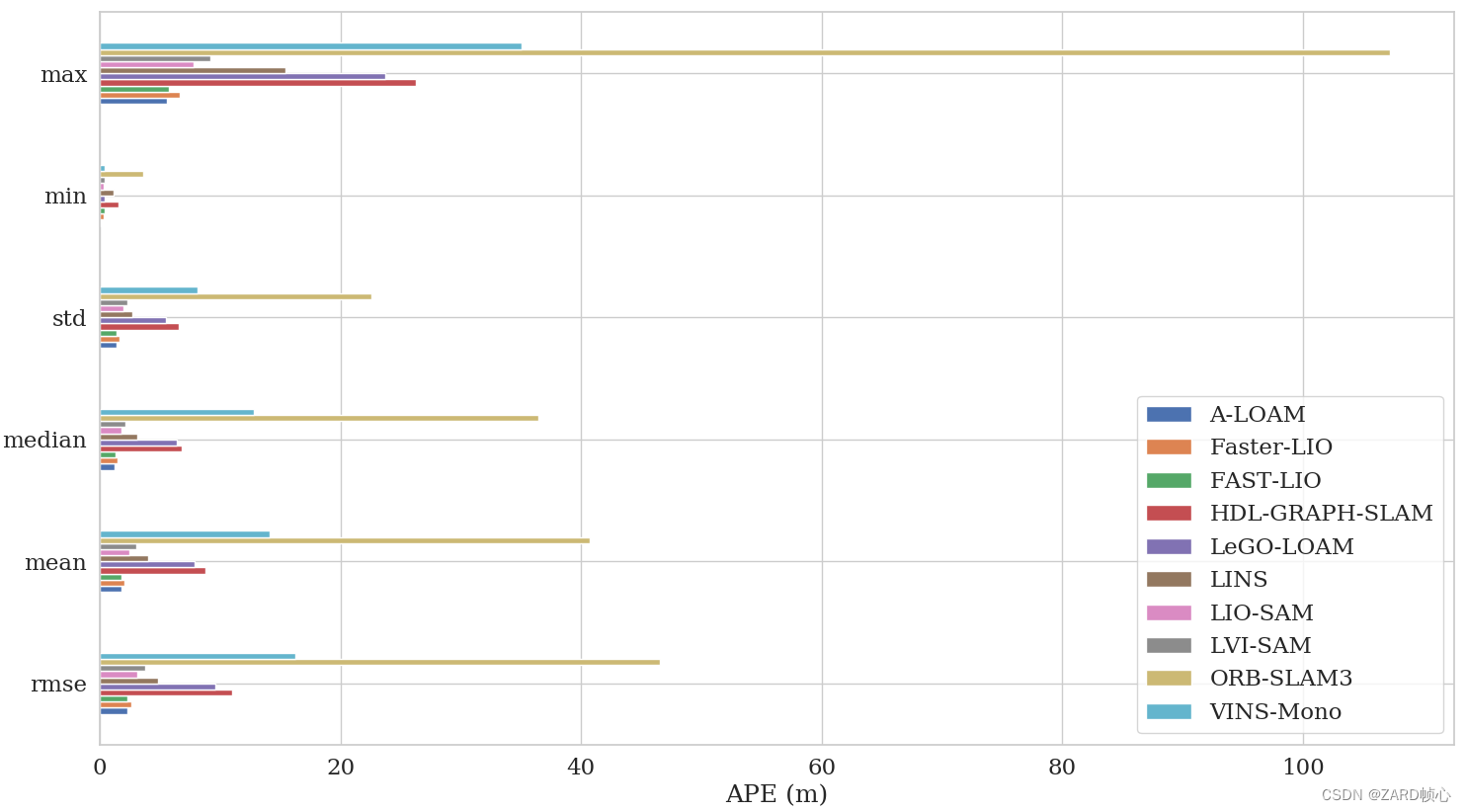

The same method was used to obtain the absolute trajectory error results for the other trajectories, which were placed in the same folder and compared:

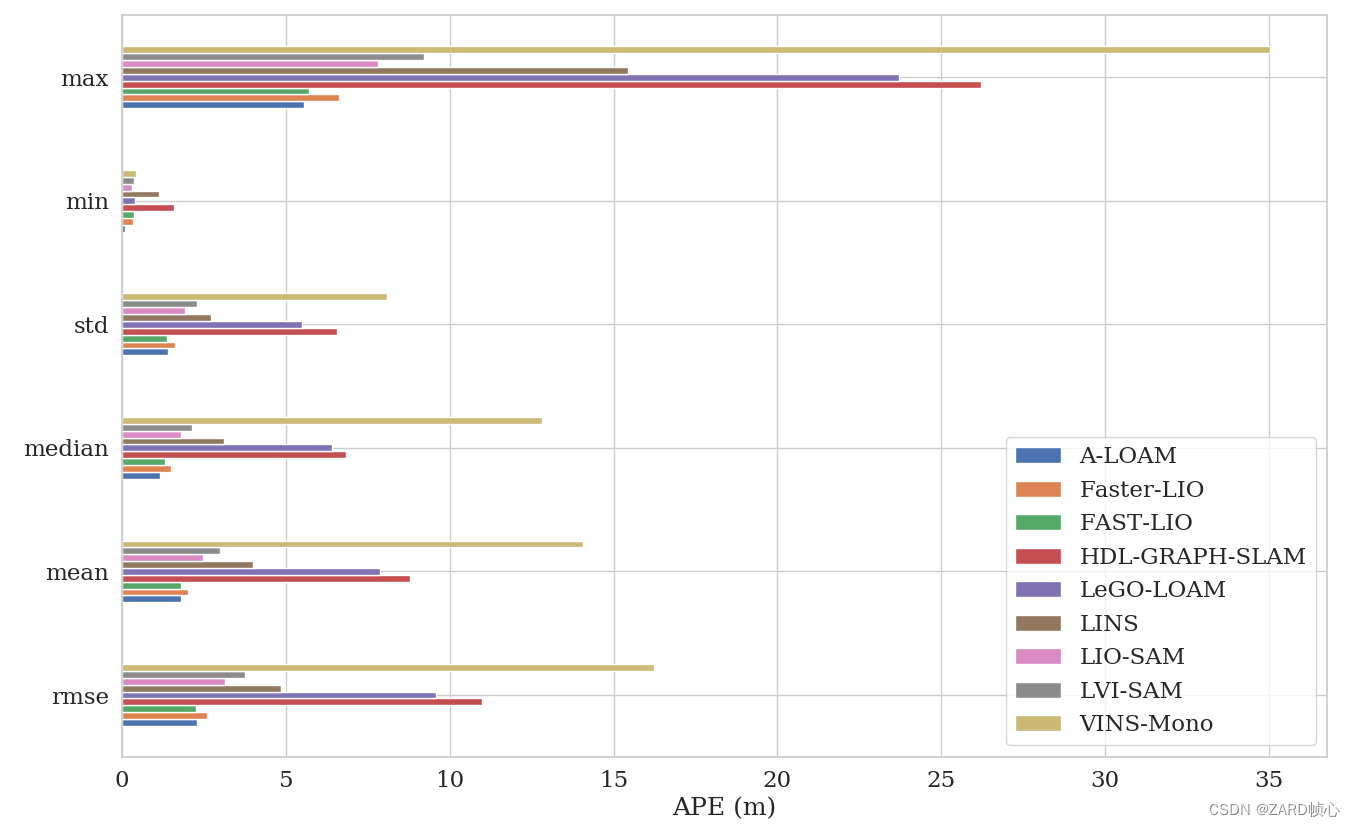

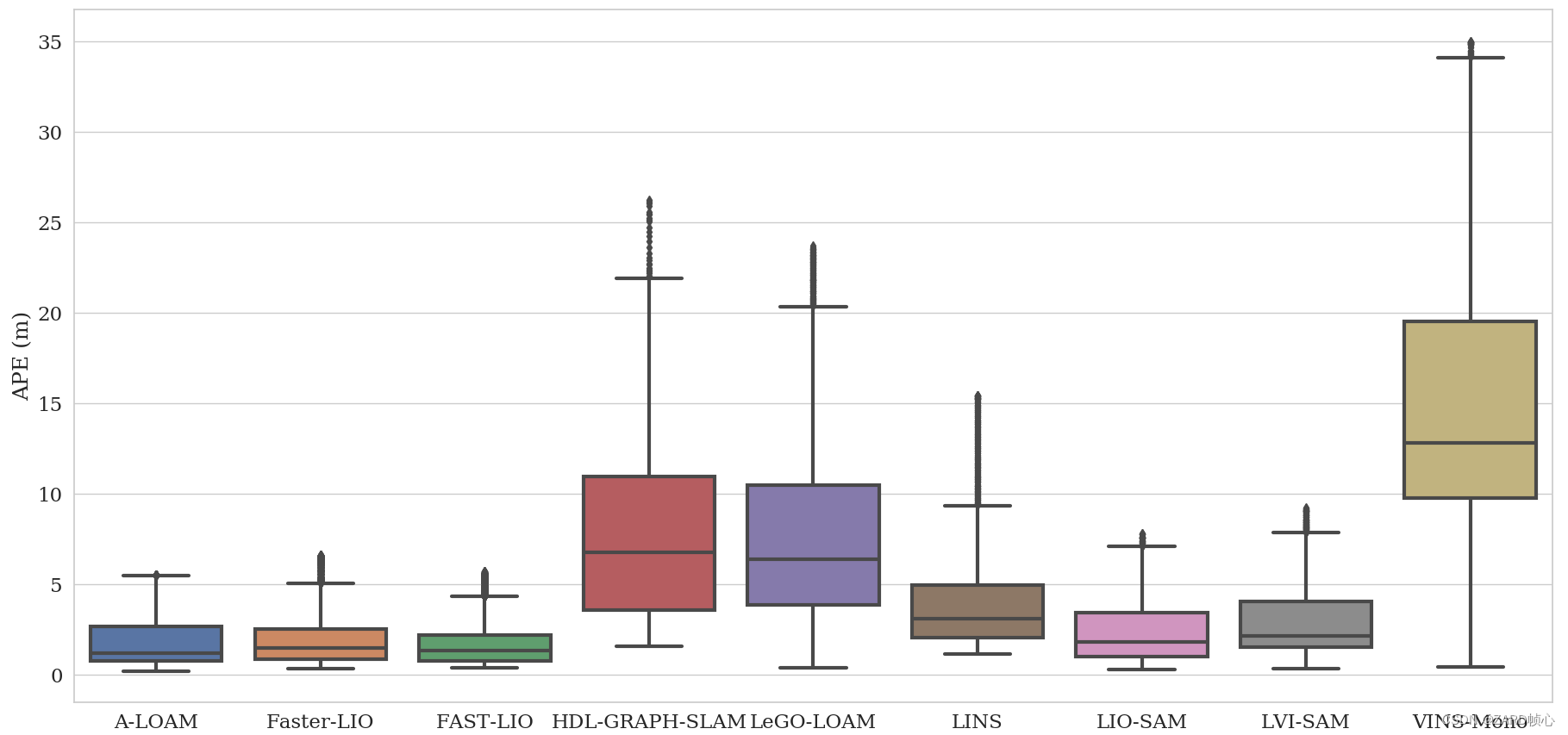

You can see that the error of ORB-SLAM3 is too large, and we remove it to compare other

For this data, the visual results are not so good, guessing that it might be the image timestamp jumping heavily and at a low frequency. The data from other sensors are also mediocre in all algorithms, pulling all algorithms to the same level, most multi-source fusion algorithms are even worse than pure laser A-LOAM, hahahahahahahaha