Article Series Catalog

Getting Started: a hands-on guide to installing Kafka and the visualization tool Kafka-Eagle

What Is Kafka, and How to Integrate Kafka with SpringBoot

Essential Architecture Skills: Kafka Selection Comparison and Application Scenarios

Message queues are an integral part of modern big data architectures. Previously, we introduced Kafka, a high-throughput, low-latency distributed message queuing system that is popular for its reliability, scalability, and flexibility. This post compares Kafka with its mainstream competitors and lists Kafka’s typical application scenarios and its advantages over those competitors.

Author: Tomahawk, working in the financial IT industry with many years of front-line development and architecture experience; enjoys sharing and is committed to creating more high-quality content!

This article is part of the Kafka column; if you need it, you can subscribe to the column to get updates in real time!


I. Kafka’s model and advantages

1. Kafka model

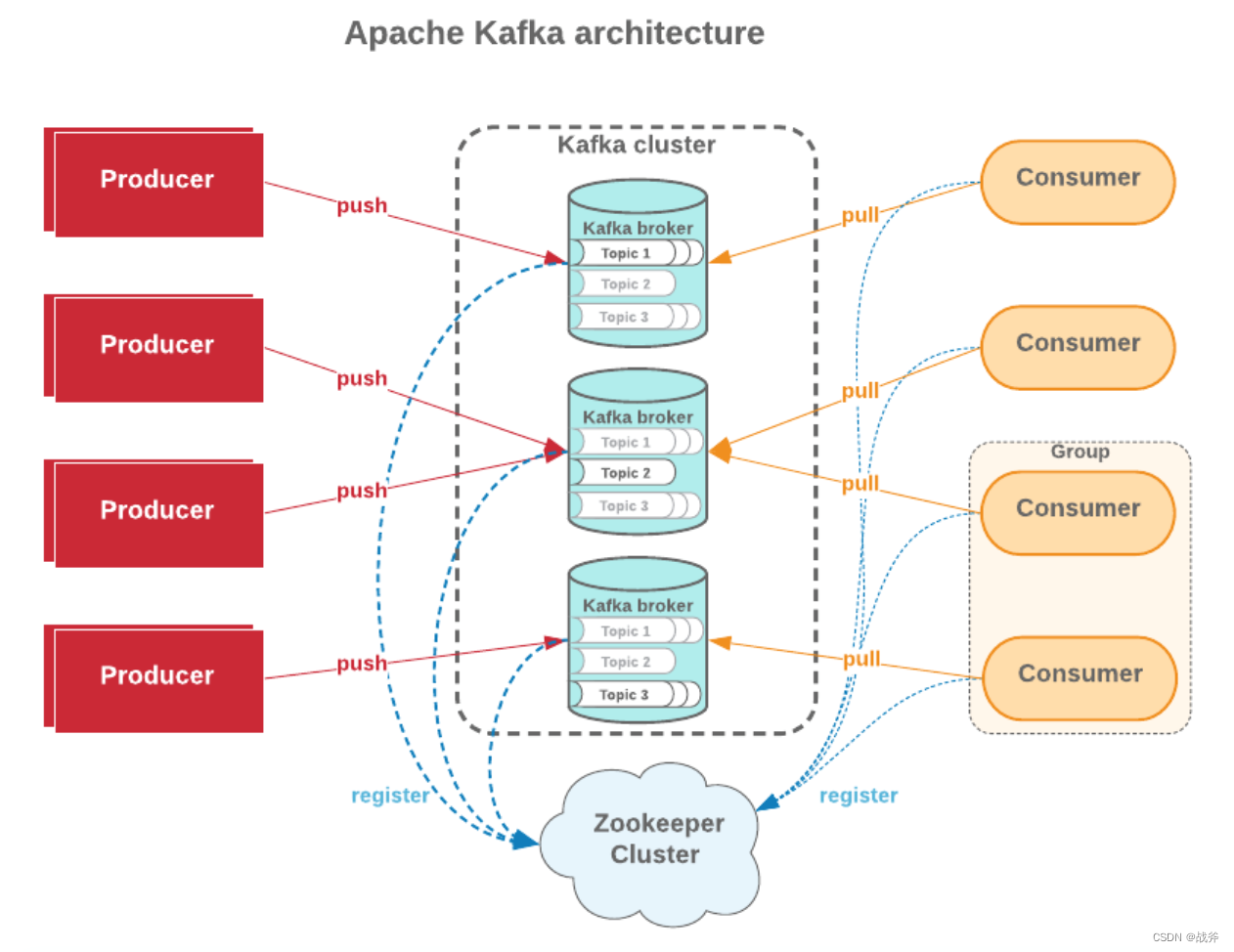

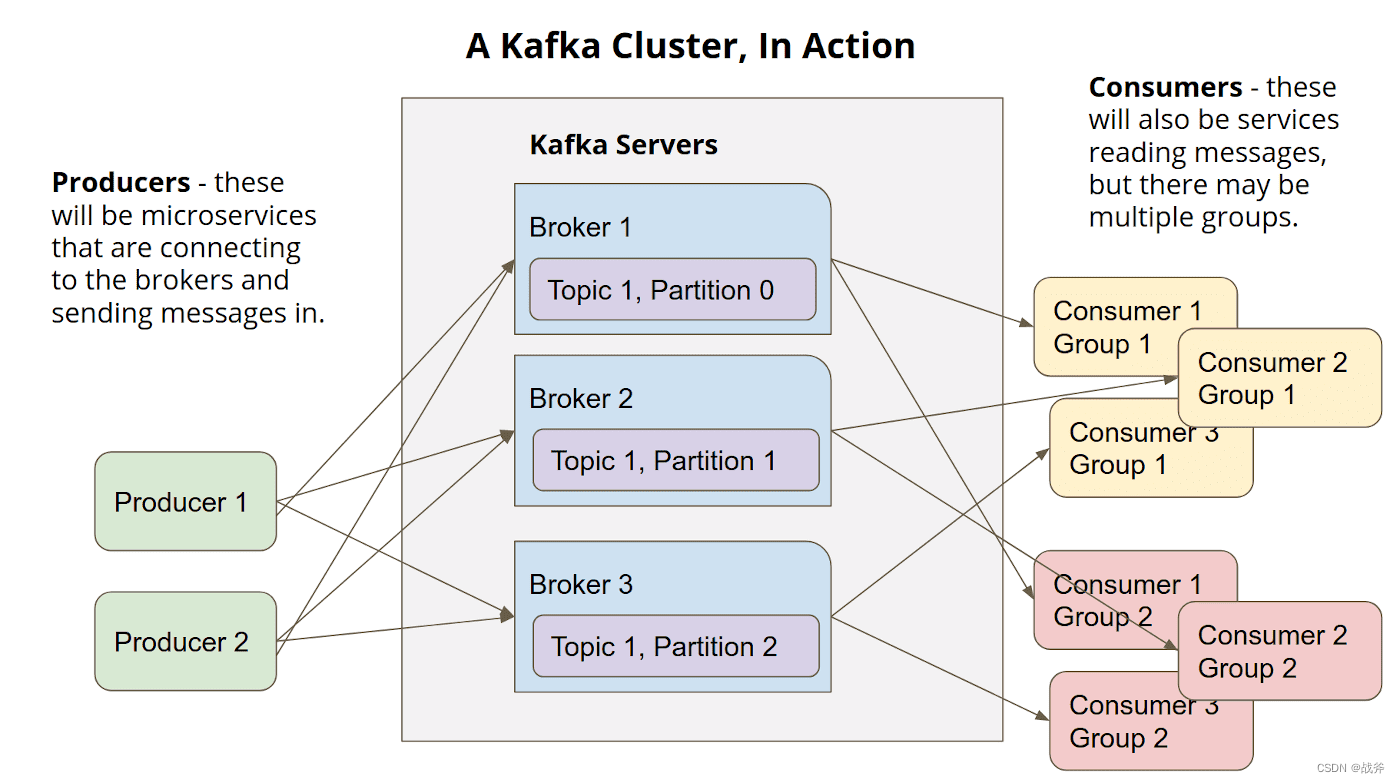

There are several key concepts to understand in Kafka, including broker, topic, and partition.

Broker

A broker is a node in a Kafka cluster; you can think of it as one Kafka instance. A Kafka cluster consists of multiple brokers, which store the data, handle client requests, and coordinate tasks across the distributed environment.

Topic

A topic is the basic unit for organizing messages in Kafka, essentially a category of messages. A topic’s messages are stored in one or more partitions on the brokers. A topic can have multiple partitions, but each partition belongs to exactly one topic. The topic name is a string that usually describes the business or function it serves, such as “order” or “log”.

Partition

A partition is Kafka’s physical unit of storage. A topic can be split into multiple partitions, each holding a portion of the topic’s messages. Messages within a partition are ordered, and each message has a sequential number called its offset, which uniquely identifies the message within that partition. A client can read messages from a partition starting at a given offset.
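To make the relationship between topics, partitions, and offsets concrete, here is a minimal in-memory sketch. It is not Kafka’s client API or storage format: `ToyTopic` and its methods are hypothetical names, and the CRC32 hash is a simplified stand-in for the murmur2 hash that Kafka’s Java producer applies to record keys. It shows that records with the same key land in the same partition, that offsets are sequential within a partition, and that a reader can start from any offset.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class ToyTopic:
    """In-memory model of a topic as a list of append-only partition logs.

    Illustration only: this is not Kafka's storage engine or client API.
    """
    name: str
    num_partitions: int
    partitions: list = field(init=False)

    def __post_init__(self) -> None:
        # One empty log per partition.
        self.partitions = [[] for _ in range(self.num_partitions)]

    def partition_for(self, key: str) -> int:
        # Same key -> same partition. Kafka's Java producer hashes keys with
        # murmur2; CRC32 is a simplified stand-in here.
        return zlib.crc32(key.encode("utf-8")) % self.num_partitions

    def append(self, key: str, value: str) -> tuple[int, int]:
        p = self.partition_for(key)
        log = self.partitions[p]
        offset = len(log)  # sequential, and unique only within this partition
        log.append((offset, key, value))
        return p, offset

    def read(self, partition: int, from_offset: int) -> list:
        # A reader chooses where to start; reading does not remove anything.
        return [rec for rec in self.partitions[partition] if rec[0] >= from_offset]


orders = ToyTopic("order", num_partitions=3)
for i in range(5):
    orders.append("user-42", f"purchase-{i}")
p = orders.partition_for("user-42")
print([value for _, _, value in orders.read(p, from_offset=2)])
# prints: ['purchase-2', 'purchase-3', 'purchase-4']
```

Because every record keyed by `user-42` lands in one partition, that user’s events keep their order, which is the per-partition ordering described above.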

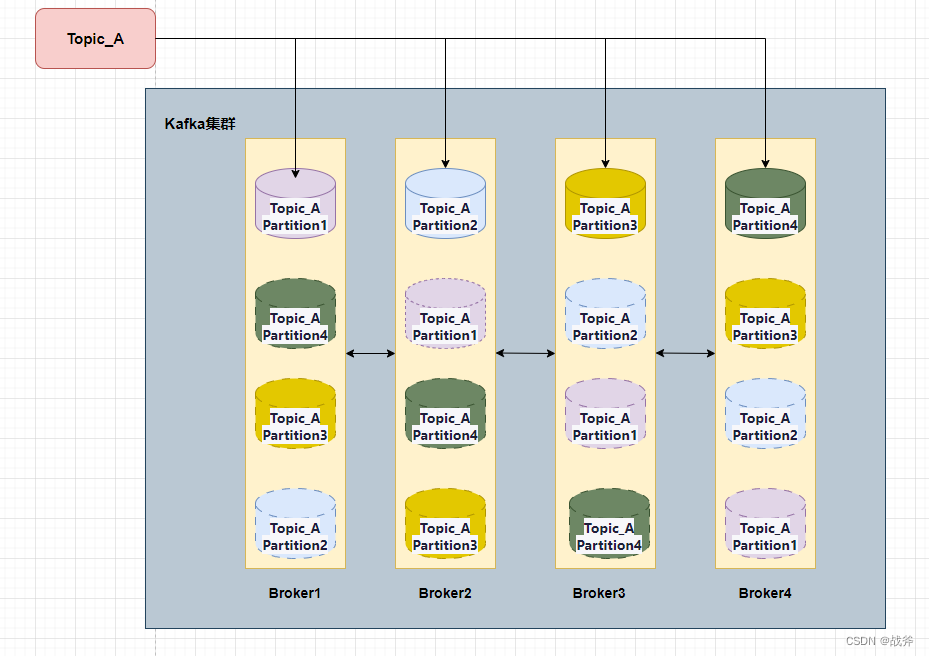

Partitions also have roles: the copies (replicas) of a partition form a leader-follower relationship. As shown in the figure above, the leader replica of Partition1 is on Broker1, indicated by the solid line. Copies of Partition1 also exist on other brokers, but they are follower replicas, shown with dotted lines, which replicate data from the leader.
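The leader/follower relationship can be sketched as a tiny state machine. This is a simplified, hypothetical model (`PartitionReplicas` is not part of any Kafka API): it only tracks the in-sync replica (ISR) list and promotes the first remaining in-sync follower when the leader’s broker fails, whereas real Kafka performs leader election in its controller and applies further rules.

```python
from dataclasses import dataclass


@dataclass
class PartitionReplicas:
    """Simplified, hypothetical model of one partition's replicas.

    Real Kafka runs leader election in its controller with extra rules;
    here we only promote the first remaining in-sync replica.
    """
    replicas: list  # broker ids holding a copy, e.g. [1, 2, 3]
    leader: int     # broker id of the leader replica
    isr: list       # in-sync replicas (fully caught up), including the leader

    def broker_failed(self, broker_id: int) -> None:
        # A dead broker can no longer be in sync.
        if broker_id in self.isr:
            self.isr.remove(broker_id)
        if broker_id == self.leader:
            if not self.isr:
                raise RuntimeError("no in-sync replica left; partition is offline")
            # Promote a follower that already has all committed messages.
            self.leader = self.isr[0]


partition1 = PartitionReplicas(replicas=[1, 2, 3], leader=1, isr=[1, 2, 3])
partition1.broker_failed(1)  # Broker1, which hosts the leader, goes down
print(partition1.leader, partition1.isr)
# prints: 2 [2, 3]
```

Promoting only in-sync followers is what lets a failover happen without losing messages that were already acknowledged by the ISR.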

2. Kafka advantages

Kafka’s design closely resembles a distributed file system. Because it naturally runs across multiple broker nodes, it achieves very high throughput, and together with a configurable number of replicas and efficient data storage, this gives it strong performance. We can summarize Kafka’s main advantages as follows:

High throughput

High throughput is Kafka’s most prominent advantage. A topic is split into partitions spread across multiple brokers, and different partitions can be written and read in parallel, so Kafka scales horizontally by adding brokers and partitions. In addition, Kafka supports batching, combining many small messages into one larger batch to reduce network transmission overhead.

High reliability

Kafka’s distributed design and replication mechanism provide high data reliability. Each partition can have multiple replicas; if the broker hosting the leader replica fails, an in-sync follower is automatically elected as the new leader and takes over. Kafka also persists messages to disk, so even if delivery is interrupted or a node crashes, the data can be read again once the node recovers.

High flexibility

Flexibility is another of Kafka’s advantages. Kafka can serve not only as messaging middleware but also as a platform for log collection and data processing. Because Kafka stores message payloads as raw bytes, it can carry many different data types and formats, and users can customize message formats and processing logic.
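The batching idea behind Kafka’s throughput can be illustrated with a short sketch. The function below is hypothetical and only loosely mirrors the producer’s `batch.size` setting (16,384 bytes by default); the real producer batches per partition, also flushes when `linger.ms` expires, and may pack several batches into one request. This sketch ignores all of that and assumes one request per batch.

```python
def batch_messages(messages: list[bytes], batch_size: int) -> list[list[bytes]]:
    """Group messages into batches of at most `batch_size` bytes.

    Hypothetical helper, loosely modeled on the producer's batch.size idea.
    A single message larger than batch_size gets a batch of its own.
    """
    batches: list[list[bytes]] = []
    current: list[bytes] = []
    current_bytes = 0
    for msg in messages:
        # Close the current batch if adding this message would overflow it.
        if current and current_bytes + len(msg) > batch_size:
            batches.append(current)
            current, current_bytes = [], 0
        current.append(msg)
        current_bytes += len(msg)
    if current:
        batches.append(current)
    return batches


msgs = [b"x" * 100 for _ in range(1000)]  # 1,000 small 100-byte messages
batches = batch_messages(msgs, batch_size=16_384)
print(len(msgs), "requests unbatched vs", len(batches), "batched")
# prints: 1000 requests unbatched vs 7 batched
```

Fewer, larger requests amortize per-request network and protocol overhead, which is the effect described under high throughput.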

Beyond its strong performance, Kafka also has a rich ecosystem, with producer and consumer clients for many programming languages such as Java, Python, and Go. Kafka also provides Kafka Connect and the Kafka Streams API, which let it integrate with different external systems and support real-time data processing and stream computation.

II. Differences between Kafka and its competitors

1. Compared to RabbitMQ

In a previous post, 《Message Queue Selection – Why RabbitMQ?》, we compared Kafka and RabbitMQ, and I’ll reproduce the comparison table from that post here:

RabbitMQ is a popular AMQP message broker that offers good messaging performance and solid support for high reliability and transactions. Compared with Kafka, however, RabbitMQ falls short in scalability and performance when handling large volumes of data. For many big data applications, Kafka’s scalability and performance advantages make it the better choice.

2. Compared to ActiveMQ

ActiveMQ is a distributed message broker from the Apache Software Foundation that offers good Java integration and reliability.

| Aspect | ActiveMQ | Kafka |
|---|---|---|
| Application scenarios | Intra-enterprise messaging, system integration, asynchronous communication, etc. | Large-scale data processing, stream computing, etc. |
| Message storage | Messages are sent to a queue or topic and persisted to disk | Messages are stored on disk in the Kafka cluster, organized into partitions |
| Message consumption | Messages are deleted after being consumed | Messages are not deleted when consumed; they are retained on disk for a configured retention period |
| Throughput | Relatively low | Relatively high |
| Scalability | Relatively poor | Relatively good |
| Delivery guarantees | Supports message transactions, which ensure reliable delivery | At-least-once delivery by default; idempotent producers and transactions (since 0.11) enable exactly-once semantics |
| Message ordering | Supported | Guaranteed within a partition |
| Management and maintenance | Relatively simple | Relatively complex |
| Ecosystem | Relatively well-developed | Relatively homogeneous |
| Development difficulty | Relatively high | Relatively low |
| Transport | Based on TCP | Binary protocol over TCP, with zero-copy transfer to consumers |
| Message filtering | SQL-like message selectors supported | No broker-side message filtering |
| Delivery model | The broker pushes messages to consumers (bounded by a prefetch limit) | Consumers pull messages from the brokers by polling |
| Duplicate consumption | Relatively rare | Relatively more common |
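The message-consumption row is the difference that matters most in practice. The sketch below contrasts the two models with hypothetical classes: a queue that deletes a message as soon as it is received, and a log that keeps messages until they exceed a retention period while each reader tracks its own offset. Real Kafka enforces retention (`retention.ms`, `retention.bytes`) by deleting whole log segments rather than individual messages, which this sketch simplifies away.

```python
from collections import deque
from typing import Optional


class DestructiveQueue:
    """Queue-style broker: receiving a message removes it for everyone."""

    def __init__(self) -> None:
        self._messages: deque = deque()

    def send(self, msg: str) -> None:
        self._messages.append(msg)

    def receive(self) -> Optional[str]:
        return self._messages.popleft() if self._messages else None


class RetainedLog:
    """Log-style broker: messages stay until they age out; readers track offsets."""

    def __init__(self, retention_seconds: float) -> None:
        self.retention_seconds = retention_seconds
        self.records: list = []  # (offset, timestamp, msg)
        self.next_offset = 0

    def send(self, msg: str, ts: float) -> None:
        self.records.append((self.next_offset, ts, msg))
        self.next_offset += 1

    def read(self, from_offset: int) -> list:
        # Reading is non-destructive; each reader remembers its own offset.
        return [msg for off, _, msg in self.records if off >= from_offset]

    def enforce_retention(self, now: float) -> None:
        # Deletion depends on message age, not on whether anyone consumed it.
        self.records = [r for r in self.records if now - r[1] <= self.retention_seconds]


queue = DestructiveQueue()
for m in ("a", "b"):
    queue.send(m)
print(queue.receive(), queue.receive(), queue.receive())  # prints: a b None

log = RetainedLog(retention_seconds=60)
log.send("a", ts=0)
log.send("b", ts=30)
print(log.read(0), log.read(0))  # prints: ['a', 'b'] ['a', 'b']
log.enforce_retention(now=70)
print(log.read(0))               # prints: ['b']
```

Because reads do not delete anything, a new consumer can replay history from offset 0, which a delete-on-consume queue cannot offer.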

Compared with Kafka, however, ActiveMQ falls short in performance when handling large volumes of data and suffers from latency and scalability problems, so Kafka has a strong advantage in high-performance, large-scale data processing.

3. Compared to RocketMQ

Kafka and RocketMQ are both popular distributed message queuing systems used for data transfer and processing. Some of their features compare as follows:

| Feature | Kafka | RocketMQ |
|---|---|---|
| Applicable scenarios | Large-scale real-time data processing with high throughput and low latency | Large-scale distributed messaging and processing |
| Data model | Log-based messaging model with ordered messages | JMS-based messaging model with support for batch messages |
| Storage | Messages are stored in per-partition append-only logs; a replica mechanism ensures data reliability | Messages from all topics are written to a shared CommitLog and indexed per queue; multiple flush and replication modes are supported |
| Partitioning | Distributed partitions for easy horizontal scaling | Distributed partitions with support for horizontal and vertical scaling |
| Performance | High throughput and low latency; handles large data streams well | Handles high concurrency and large data streams well |
| Reliability | Multiple replicas ensure data reliability, with good fault tolerance | Distributed architecture with strong reliability and fault tolerance |
| Community support | Broad open-source community, rich documentation, extensible plugins | Independent open-source community; relatively less documentation and fewer plugins |

Overall, RocketMQ is comparable to Kafka in performance. As for community, both are now top-level projects of the Apache Software Foundation. Kafka was originally developed at LinkedIn, while RocketMQ was originally developed at Alibaba and donated to the Apache Software Foundation somewhat later, so its community is relatively less active; however, it is very widely used in China.

4. Comparison with Pulsar

Apache Pulsar and Apache Kafka are both scalable, reliable streaming data platforms. Both offer high availability, concurrency, and throughput, and both support distributed publish/subscribe. Some points of comparison are listed below:

| Aspect | Apache Pulsar | Kafka |
|---|---|---|
| Release year | 2017 | 2011 |
| Implementation language | Java | Scala and Java |
| Tenancy | Multi-tenant | Not natively multi-tenant |
| Scalability | Low latency and high capacity | Highly scalable |
| Transactions | Supported | Supported (since 0.11) |
| Message ordering | Supported | Supported within a partition |
| Multi-language clients | Supported | Supported |
| Cross-data-center replication | Supported | Supported |
| Batching | Supported | Supported |
| Multi-tenant security | Supported | Not supported |
| Community support | Relatively new, but growing rapidly | Mature community support |
| Performance | Pulsar excels in latency, throughput, and scalability, especially with multi-tenancy and cross-data-center replication | Kafka excels in throughput and scalability and is a reliable, efficient messaging system |

In a nutshell, Apache Pulsar and Kafka are both high-performance distributed messaging systems for real-time data transfer, each with its own features and performance characteristics. Pulsar offers more built-in features such as geo-replication and a multi-tenant design, while Kafka has higher performance and more mature community support.

III. Typical application scenarios of Kafka

1. Common scenarios

Message queue

Kafka can be used as an alternative to a traditional message queue. It can transmit large numbers of messages quickly, keeps messages reliable and ordered within each partition, and allows multiple consumers to read the same messages. Although it offers somewhat fewer features than traditional MQ products, Kafka scales better and delivers higher throughput.

Log collection

Kafka is an ideal platform for log collection. Thanks to its reliability and scalability, it can collect logs in real time from hundreds of servers for subsequent processing and analysis, and its efficient processing makes it an excellent choice for real-time log collection. Our earlier post 《Can’t get Log4j2 to work? Hands-on with Log4j2》 also mentions that you can configure Log4j2 appenders to send logs to a Kafka server. Compared with other MQ products, Kafka is naturally suited to this scenario, is easier to use, and can handle larger data volumes and faster transfer rates.

Stream processing

Kafka’s stream processing capabilities make it a platform of choice for building real-time processing systems. Developers can process unbounded streams to automatically trigger and respond to events, applying various processing steps along the way. Kafka distributes stream processing to handle large amounts of data and offers greater reliability than other MQ products.

Event-driven architecture

Kafka can serve as the backbone of an event-driven architecture, helping process large volumes of event data, including user behavior data, transaction data, and log data. It offers better scalability and fault tolerance than other MQ products.
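For the message-queue scenario, the key mechanism is the consumer group: every group receives all messages of a topic, while the partitions are divided among the consumers inside one group. The sketch below assumes a simple round-robin split, similar in spirit to Kafka’s `RoundRobinAssignor`; the function and group names are hypothetical, and in real Kafka the assignment is computed during a group rebalance.

```python
def assign_partitions(partitions: list[int], consumers: list[str]) -> dict[str, list[int]]:
    """Round-robin split of one topic's partitions among one group's consumers."""
    if not consumers:
        raise ValueError("a consumer group needs at least one member")
    assignment: dict[str, list[int]] = {c: [] for c in consumers}
    members = sorted(consumers)  # deterministic order regardless of input order
    for i, p in enumerate(sorted(partitions)):
        assignment[members[i % len(members)]].append(p)
    return assignment


partitions = [0, 1, 2, 3, 4, 5]
# Two independent groups: each group as a whole receives every partition.
billing = assign_partitions(partitions, ["billing-1", "billing-2"])
analytics = assign_partitions(partitions, ["analytics-1", "analytics-2", "analytics-3"])
print(billing)    # prints: {'billing-1': [0, 2, 4], 'billing-2': [1, 3, 5]}
print(analytics)  # prints: {'analytics-1': [0, 3], 'analytics-2': [1, 4], 'analytics-3': [2, 5]}
```

If a group has more consumers than partitions, the extra consumers stay idle, so the partition count caps the parallelism of a single group.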

2. Case studies

Scenario: A large e-commerce website needs to monitor users’ purchasing behavior in real time in order to adjust product recommendation strategies and promotions in time to increase users’ purchase rate. This website has tens of millions of users and millions of products, and generates thousands of purchase behavior events per second. How to efficiently collect, process and analyze this data is a very challenging problem.

Solution: Use Kafka to build a real-time data processing system that contains the following components:

1. Data collection: In the e-commerce website’s application, use Kafka’s Producer API to send users’ purchase events to a Kafka topic.

2. Data processing: On the consumer side, one or more consumer processes run to process the data. Kafka Connect sink connectors can also write the data to storage systems such as NoSQL databases and Hadoop clusters. When processing data, keep the following key points in mind:

- Ensure data reliability: use producer acknowledgments and consumer offset commits so that data is not lost, and make processing idempotent so that redelivered messages are not processed twice.

- Support distributed processing: use Kafka’s partitioning mechanism to scale horizontally and avoid the impact of a single point of failure.

- Manage timestamps: record each event’s timestamp in the Kafka message so that downstream processing can handle events correctly by event time.
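The reliability point above can be sketched as an at-least-once consumer that commits its offset only after processing and deduplicates by event ID. Everything here is hypothetical: `IdempotentConsumer`, the `event_id` field, and the in-memory set of seen IDs are assumptions for illustration; a production system would persist that state durably or rely on Kafka transactions instead.

```python
from dataclasses import dataclass, field


@dataclass
class IdempotentConsumer:
    """Hypothetical at-least-once consumer made safe by deduplication.

    Assumes every purchase event carries a unique event_id; seen IDs are
    kept in memory here, whereas a real system would store them durably.
    """
    committed_offset: int = 0
    seen_ids: set = field(default_factory=set)
    purchases_per_user: dict = field(default_factory=dict)

    def process_batch(self, records: list[tuple[int, str, str]]) -> None:
        # records: (offset, event_id, user_id) fetched from one partition
        for offset, event_id, user_id in records:
            if event_id in self.seen_ids:
                continue  # redelivered duplicate: skip it
            self.seen_ids.add(event_id)
            self.purchases_per_user[user_id] = self.purchases_per_user.get(user_id, 0) + 1
        if records:
            # Commit only after processing: a crash before this point means the
            # batch is delivered again (at-least-once) instead of being lost.
            self.committed_offset = records[-1][0] + 1


consumer = IdempotentConsumer()
batch = [(0, "e1", "alice"), (1, "e2", "bob"), (2, "e3", "alice")]
consumer.process_batch(batch)
consumer.process_batch(batch)  # the same batch delivered again, e.g. after a restart
print(consumer.purchases_per_user, consumer.committed_offset)
# prints: {'alice': 2, 'bob': 1} 3
```

The committed offset is the next offset to read, so a restarted consumer resumes right after the last fully processed record.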

3. Data analysis: Use real-time stream processing tools such as Apache Storm, JStorm, or Apache Flink to analyze and process the data in real time and output the results to real-time reports and dashboards. When using these tools, keep the following key points in mind:

- Window mechanism: use windows to control the time period over which data is aggregated, analyzed, and counted.

- Data source management: like Kafka, real-time stream processing tools need to support distributed processing, and Kafka Connect can be used to manage the data sources.

- Visualization of results: use visualization tools such as Grafana or Kibana to present processing results in real-time reports and dashboards, making it easy for business and technical staff to follow real-time changes in the data.
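The window mechanism can be sketched in plain Python as a tumbling (fixed, non-overlapping) window count keyed by event timestamp. The function name and the `(timestamp_ms, product_id)` event shape are assumptions for illustration; engines such as Apache Flink add watermarks, late-data handling, and fault-tolerant state, none of which appear here.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_ms: int) -> dict[int, dict[str, int]]:
    """Count purchases per product in fixed, non-overlapping (tumbling) windows.

    events: iterable of (event_timestamp_ms, product_id). Windows are keyed by
    their start time; late data and watermarks are deliberately ignored.
    """
    windows = defaultdict(lambda: defaultdict(int))
    for ts, product in events:
        start = ts - ts % window_ms  # align the timestamp to its window start
        windows[start][product] += 1
    return {start: dict(counts) for start, counts in sorted(windows.items())}


events = [(1_000, "phone"), (59_000, "phone"), (61_000, "laptop"), (65_000, "phone")]
print(tumbling_window_counts(events, window_ms=60_000))
# prints: {0: {'phone': 2}, 60000: {'laptop': 1, 'phone': 1}}
```

Windowing by the event timestamp stored in each Kafka message, rather than by arrival time, keeps the counts correct even when events arrive slightly out of order within a window.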

Summary

From the discussion above, it is clear that Kafka has a wide range of application scenarios: you can use it simply as an MQ component, or for log transfer and stream processing. Its defining strengths are high throughput, scalability, and reliability. Compared with traditional MQ components, however, it can be more cumbersome to use in complex scenarios. It is widely used in the big data field; for example, it often serves as a data source for Hadoop, feeding data into Hadoop for storage and processing.

Of course, real-world selection involves more considerations: beyond clear requirements and scenarios, you also need to weigh the technology stack already in use, programming language support, and how actively each project is updated. No framework is a panacea. For scenarios with relatively simple requirements, many frameworks may meet the need, and then ease of use and ease of maintenance become the key factors in the choice!