
Machine translation is the field of technology that enables computers to translate text from one language into another. This paper provides an in-depth analysis of machine translation strategies. It starts with an introduction to the field and then covers rule-based, statistical, and neural network approaches. It also discusses in detail the criteria and tools used to assess the accuracy and quality of machine translation, including BLEU, METEOR, ROUGE, and TER.

Follow TechLead for AI knowledge across every dimension. The author has 10+ years of experience in internet service architecture, AI product development, and team management. He holds a bachelor's degree from Tongji University and a master's degree from Fudan University, is a member of the Fudan Robotics Intelligence Laboratory, an Aliyun (Alibaba Cloud) certified senior architect, and a Project Management Professional, and has led AI product development generating hundreds of millions in revenue.

I. Introduction to Machine Translation

Machine translation, a core area of natural language processing, has long been a focus of research. Its goal is for computers to translate text from one language into another automatically, without human involvement.

1. What is Machine Translation (MT)?

Machine Translation (MT) is a technology that automatically translates text from a source language into a target language. It uses specific algorithms and models to try to achieve the best semantic mapping between different languages.

Example: When you type “Hello, world!” into Google Translate and translate it from English to French, you get “Bonjour le monde!”. This is a simple example of machine translation.

2. Source and target languages

- source language: The language of the original text you want to translate.

Example: In the previous example, English, the language of “Hello, world!”, is the source language.

- target language: The language into which you want to translate the source language text.

Example: In the same example, French is the target language.

3. Translation models

At the heart of machine translation is a translation model, which can be rule-based, statistical, or neural network-based. All of these models try to find the best translation, but they work differently and emphasize different things.

Example: A rule-based translation model may use a lexicon to look up direct word correspondences, so it may translate the English word “cat” directly into the French word “chat”. A statistical model may instead consider how often words and phrases co-occur in a bilingual corpus to decide whether “cat” should be translated as “chat” in a given context.

4. Importance of context

In machine translation, word translation alone is usually not enough. Context is critical to obtaining an accurate translation. Some words may have different meanings and translations in different contexts.

Example: The English word “bank” can mean either a financial institution or the edge of a river. If the context mentions money, the correct French translation is probably “banque”; if it mentions a river, “bank” should instead be translated as “rive” (riverbank).
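A minimal sketch of how context can drive this choice: score each candidate French translation of “bank” by how many of its cue words appear in the sentence. The cue-word sets and the `translate_bank` function are hypothetical and chosen by hand for illustration; real systems learn such associations from data.

```python
# Hypothetical cue words for each French translation of "bank".
# A real system would learn these associations from data, not list them by hand.
SENSE_CUES = {
    "banque": {"money", "account", "loan", "cash", "deposit"},
    "rive": {"river", "water", "fishing", "boat", "shore"},
}

def translate_bank(sentence: str, default: str = "banque") -> str:
    """Pick a French translation for "bank" from the surrounding words."""
    words = set(sentence.lower().replace(".", "").replace(",", "").split())
    # Score each sense by how many of its cue words occur in the sentence
    scores = {sense: len(words & cues) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # With no cue words at all, fall back to an arbitrary default sense
    return best if scores[best] > 0 else default

print(translate_bank("I withdrew money from the bank."))   # banque
print(translate_bank("We sat on the bank of the river."))  # rive
print(translate_bank("I read books on the bank."))         # banque (default: no cues)
```

The last call shows the hard case: with no disambiguating context, the system can only guess.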

This section has given a brief introduction to machine translation, from basic definitions to the role of context, to help readers better understand this complex but exciting area of technology.

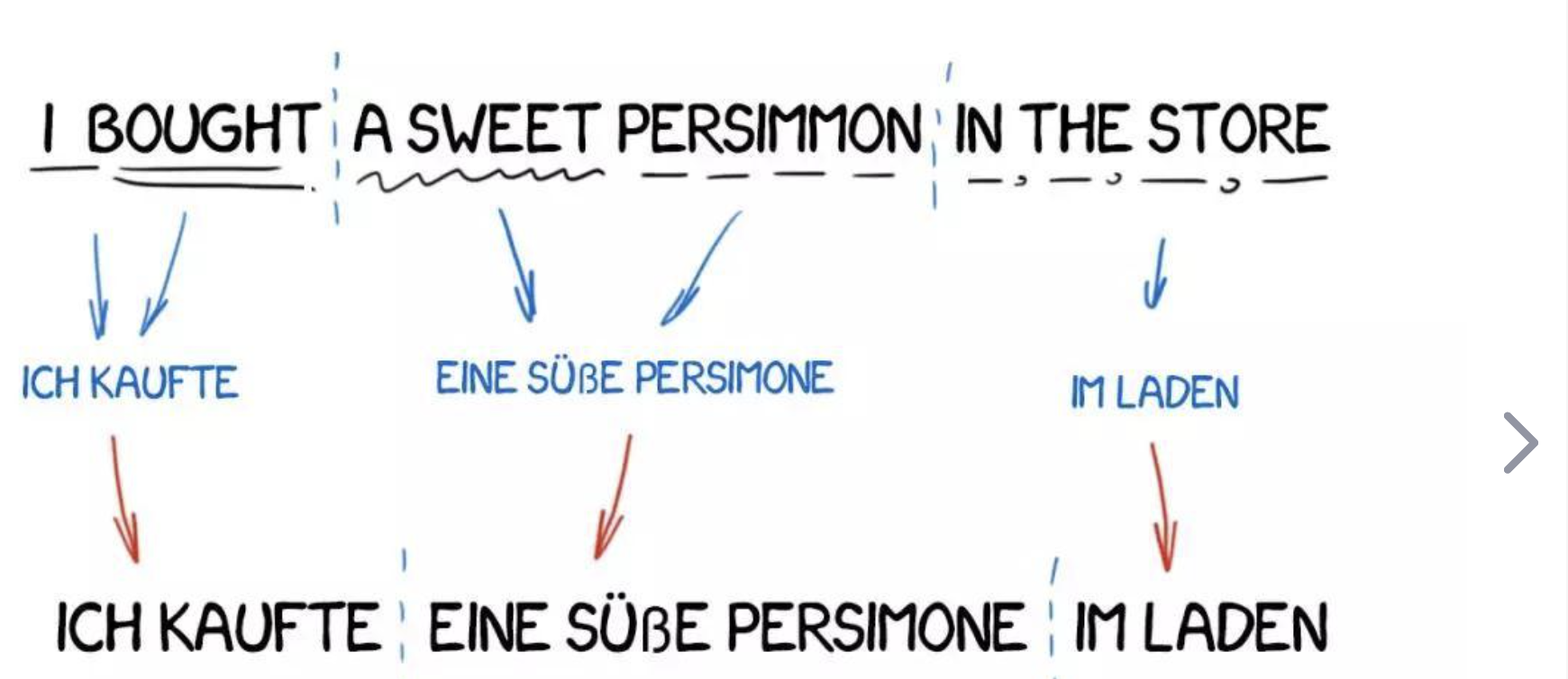

II. Rule-Based Machine Translation (RBMT)

Rule-based machine translation (RBMT) is a technique that utilizes linguistic rules to convert source language text into target language text. These rules are usually hand-written by linguists and cover grammar, vocabulary and other language-related features.

1. Rule-making

In RBMT, linguists need to write a large number of transformation rules for each source-target language pair. These rules describe how the syntactic structures of the source language map onto those of the target language.

Example: In English, adjectives usually precede nouns, while in French they usually follow them. A rule can therefore specify that “red apple” be translated as “pomme rouge”.

2. Dictionaries and vocabulary selection

In addition to syntactic transformation rules, RBMT relies on detailed bilingual dictionaries. These dictionaries contain correspondences of words and phrases between the source and target languages.

Example: The dictionary may indicate that the English word “book” can be translated as the French word “livre”.

3. Constraints and challenges

Although RBMT can provide relatively accurate translations in some domains and applications, it faces some limitations. The number of rules can become very large and difficult to maintain; and, for certain complex and ambiguous sentences, the rules may not provide accurate translations.

Example: “I read books on the bank.” Does “bank” here mean a riverbank or a financial institution? Without further context, a rule-based system may struggle to choose correctly.

4. PyTorch implementation

Modern machine translation systems rarely rely entirely on RBMT, but we can sketch a simplified RBMT system in a few lines of Python (a plain dictionary is enough here; no PyTorch features are needed):

```python
# Suppose we have a tiny English-French dictionary
dictionary = {
    "red": "rouge",
    "apple": "pomme",
}

def rule_based_translation(sentence: str) -> str:
    translated_words = []
    for word in sentence.split():
        # Look each word up; keep unknown words unchanged
        translated_words.append(dictionary.get(word, word))
    return ' '.join(translated_words)

# Input and output example
sentence = "red apple"
print(rule_based_translation(sentence))  # Output: rouge pomme
```

In this simple example, we define a basic English-French dictionary and a function that translates word by word. Because it only looks words up, it keeps the English word order and outputs “rouge pomme” instead of the correct “pomme rouge”. A real RBMT system would add syntactic transfer rules, such as the adjective-noun reordering described above, along with many other structural transformations.
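As a slightly fuller sketch, the version below adds one transfer rule, the adjective-noun reordering from the rule-making example. The part-of-speech-tagged `LEXICON` and the `rbmt_translate` function are hypothetical names made up for this illustration, not part of any real RBMT system.

```python
# Hypothetical lexicon: English word -> (French word, part of speech)
LEXICON = {
    "red": ("rouge", "ADJ"),
    "green": ("vert", "ADJ"),
    "apple": ("pomme", "NOUN"),
    "book": ("livre", "NOUN"),
}

def rbmt_translate(sentence: str) -> str:
    # Step 1: dictionary lookup, keeping each word's part of speech
    tagged = [LEXICON.get(w, (w, "UNK")) for w in sentence.lower().split()]
    # Step 2: transfer rule ADJ NOUN -> NOUN ADJ (English order -> French order)
    i = 0
    while i < len(tagged) - 1:
        if tagged[i][1] == "ADJ" and tagged[i + 1][1] == "NOUN":
            tagged[i], tagged[i + 1] = tagged[i + 1], tagged[i]
            i += 2  # skip past the pair we just reordered
        else:
            i += 1
    return " ".join(word for word, _ in tagged)

print(rbmt_translate("red apple"))   # pomme rouge
print(rbmt_translate("green book"))  # livre vert
```

Even this toy rule hints at why rule sets grow so large: it ignores gender agreement, so “green apple” would come out as “pomme vert” instead of “pomme verte” until another rule is written.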

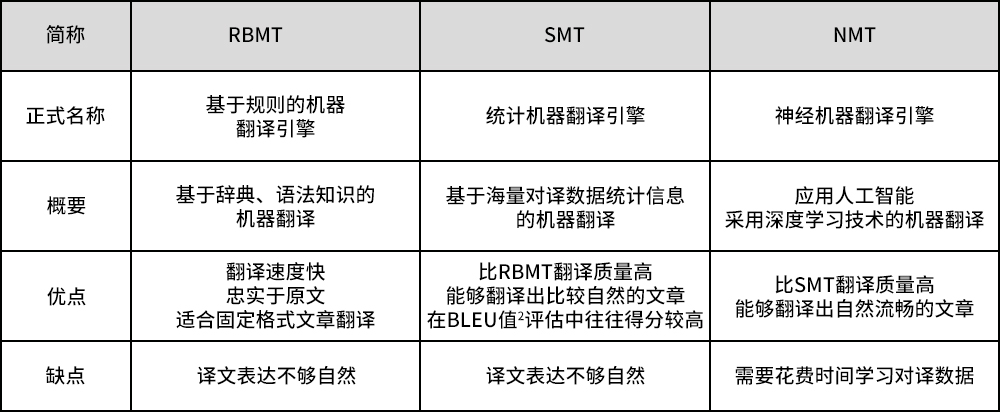

III. Statistical Machine Translation (SMT)

Statistical machine translation (SMT) uses statistical models, learned from large amounts of bilingual text, to translate from the source language to the target language. Unlike RBMT, which relies on rules written by hand by linguists, SMT learns translation rules and patterns automatically from data.

1. Data-driven

SMT systems typically learn from bilingual corpora (containing source language texts and their corresponding target language translations). By analyzing thousands of sentence pairs, the system learns the most likely translations of words, phrases and sentences.

Example: If “cat” is translated as “chat” in many different sentence pairs, the system will learn this correspondence.
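The classic algorithm for learning such word correspondences from sentence pairs alone is IBM Model 1, trained with expectation-maximization (EM). The sketch below is a simplified version under stated assumptions: it omits the NULL source word of the full model, and the three-sentence corpus is made up for illustration. `t[(f, e)]` estimates the probability that English word `e` translates as French word `f`.

```python
from collections import defaultdict

# Toy parallel corpus (made up): (English words, French words)
corpus = [
    ("the cat".split(), "le chat".split()),
    ("the dog".split(), "le chien".split()),
    ("a dog".split(), "un chien".split()),
]

def train_ibm_model1(corpus, iterations=20):
    french_vocab = {f for _, fs in corpus for f in fs}
    # t[(f, e)] = p(f | e), initialized uniformly
    t = defaultdict(lambda: 1.0 / len(french_vocab))
    for _ in range(iterations):
        count = defaultdict(float)
        total = defaultdict(float)
        # E-step: split each French word's count among the English words
        # in its sentence, in proportion to the current t(f | e)
        for es, fs in corpus:
            for f in fs:
                z = sum(t[(f, e)] for e in es)
                for e in es:
                    share = t[(f, e)] / z
                    count[(f, e)] += share
                    total[e] += share
        # M-step: renormalize expected counts into probabilities
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t

def best_translation(t, e):
    candidates = {f: p for (f, e2), p in t.items() if e2 == e}
    return max(candidates, key=candidates.get)

t = train_ibm_model1(corpus)
for english in ["the", "cat", "dog", "a"]:
    print(english, "->", best_translation(t, english))
```

No sentence pair says directly that “cat” means “chat”; EM infers it because “le” is better explained by “the”, which appears with “le” in two pairs.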

2. Phrase alignment

Phrase-based SMT usually relies on a so-called “phrase table”: a list of aligned phrase pairs, with translation probabilities, extracted automatically from a bilingual corpus.

Example: The system may learn from sentence pairs that “take a break” corresponds to “prendre une pause” in French.
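A common way to attach probabilities to phrase-table entries is relative frequency: φ(target | source) = count(source, target) / count(source). The sketch below assumes the phrase pairs have already been extracted from word-aligned sentences; the list `extracted` and its counts are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical phrase pairs, as if extracted from word-aligned sentence pairs
extracted = [
    ("take a break", "prendre une pause"),
    ("take a break", "prendre une pause"),
    ("take a break", "faire une pause"),
    ("take a break", "prendre une pause"),
]

def build_phrase_table(pairs):
    pair_counts = Counter(pairs)
    source_counts = Counter(src for src, _ in pairs)
    table = defaultdict(dict)
    # phi(target | source) = count(source, target) / count(source)
    for (src, tgt), c in pair_counts.items():
        table[src][tgt] = c / source_counts[src]
    return table

table = build_phrase_table(extracted)
print(table["take a break"])  # {'prendre une pause': 0.75, 'faire une pause': 0.25}
```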

3. Scoring and selection

SMT uses several statistical models to evaluate and select the best translation. These include language models (to evaluate the fluency of translations in the target language) and translation models (to evaluate the accuracy of translations).

Example: When translating “apple pie”, the system may generate multiple candidate translations and choose the one with the highest combined score.
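The combination of models can be sketched as a log-linear score: a weighted sum of log-probabilities. With both weights set to 1 this equals the classic noisy-channel score log P(source | candidate) + log P(candidate). All probabilities below are invented for illustration, and `pick_best` is a hypothetical helper, not part of any SMT toolkit.

```python
import math

# Invented scores for candidate French translations of "apple pie":
# "tm" = translation model P(source | candidate), "lm" = language model P(candidate)
candidates = {
    "tarte aux pommes": {"tm": 0.30, "lm": 0.020},
    "pomme tarte": {"tm": 0.40, "lm": 0.0001},
    "tarte de pomme": {"tm": 0.30, "lm": 0.002},
}

def score(probs, tm_weight=1.0, lm_weight=1.0):
    # Log-linear combination: weighted sum of log-probabilities
    return tm_weight * math.log(probs["tm"]) + lm_weight * math.log(probs["lm"])

def pick_best(candidates, **weights):
    return max(candidates, key=lambda c: score(candidates[c], **weights))

print(pick_best(candidates))                 # tarte aux pommes
print(pick_best(candidates, lm_weight=0.0))  # pomme tarte (translation model only)
```

The second call shows why the language model matters: on its own, the translation model here prefers the word-for-word but unidiomatic “pomme tarte”.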

4. PyTorch implementation

Complete SMT systems are complex, with many components and models. As an illustration, however, we can build a simplified word alignment model in PyTorch:

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Suppose we have some bilingual sentence pairs
source_sentences = ["apple", "red fruit"]
target_sentences = ["pomme", "fruit rouge"]

# Convert sentences into word indexes
source_vocab = {"apple": 0, "red": 1, "fruit": 2}
target_vocab = {"pomme": 0, "fruit": 1, "rouge": 2}
target_words = {i: w for w, i in target_vocab.items()}

source_indices = [[source_vocab[word] for word in sentence.split()] for sentence in source_sentences]
target_indices = [[target_vocab[word] for word in sentence.split()] for sentence in target_sentences]

# Simple alignment model: a bilinear score between source and target word embeddings
class AlignmentModel(nn.Module):
    def __init__(self, source_vocab_size, target_vocab_size, embedding_dim=8):
        super().__init__()
        self.source_embedding = nn.Embedding(source_vocab_size, embedding_dim)
        self.target_embedding = nn.Embedding(target_vocab_size, embedding_dim)
        self.alignment = nn.Linear(embedding_dim, embedding_dim, bias=False)

    def forward(self, source):
        # Score every source word against the whole target vocabulary:
        # shape (source_length, target_vocab_size)
        source_embed = self.source_embedding(source)
        return self.alignment(source_embed) @ self.target_embedding.weight.T

model = AlignmentModel(len(source_vocab), len(target_vocab))
optimizer = optim.Adam(model.parameters(), lr=0.01)

# Training: maximize the likelihood of each target word given the source sentence
for epoch in range(1000):
    total_loss = 0.0
    for src, tgt in zip(source_indices, target_indices):
        src = torch.tensor(src)
        tgt = torch.tensor(tgt)
        # p(t | s_i) for each source word, averaged over source words
        # (IBM Model 1 style: each target word is produced by some source word)
        probs = torch.softmax(model(src), dim=1).mean(dim=0)
        loss = -torch.log(probs[tgt]).sum()
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        total_loss += loss.item()
    if epoch % 100 == 0:
        print(f"Epoch {epoch}, Loss: {total_loss:.4f}")

# Input: apple -> choose the best-scoring target word
with torch.no_grad():
    scores = model(torch.tensor([source_vocab["apple"]]))
    print(target_words[scores.argmax(dim=1).item()])  # expected: pomme
```

This code is a simple word alignment model that learns which target words each source word tends to produce. Because “apple” only ever appears with “pomme”, that association becomes strong; with a single example of “red fruit”, however, the model has no way to tell whether “red” goes with “fruit” or “rouge”. This is just the tip of the iceberg for SMT; a complete system would involve sentence-level alignment, phrase extraction, multiple statistical models, and more.

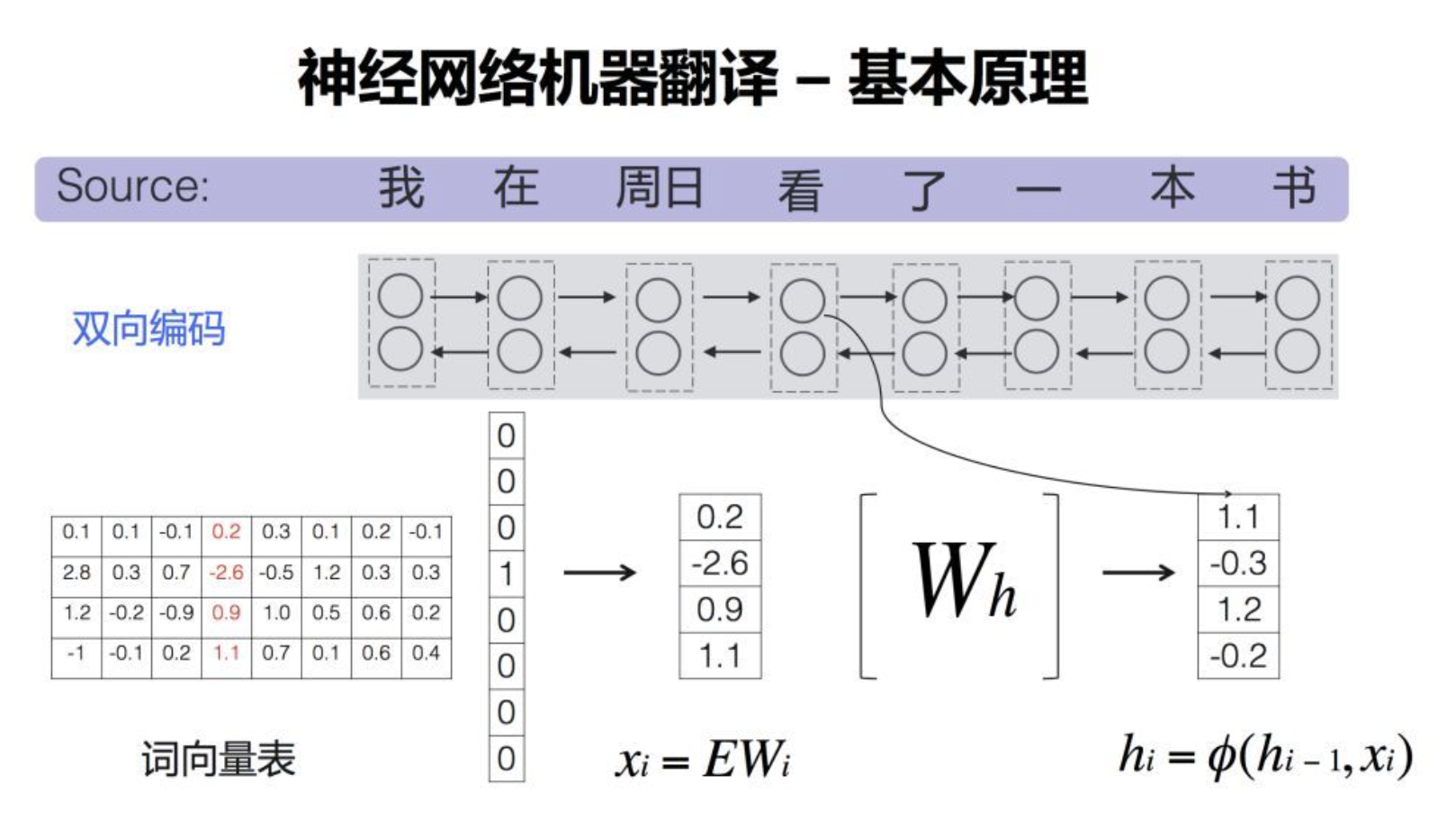

IV. Neural Machine Translation (NMT)

Neural machine translation (NMT) uses deep learning, in particular recurrent neural networks (RNNs), long short-term memory networks (LSTMs), or the Transformer architecture, to translate end to end. It maps sentences directly from the source language to the target language, without hand-crafted feature engineering or intermediate steps.

1. Encoder-Decoder structure

At the heart of NMT is the Encoder-Decoder architecture. In its basic form, the Encoder encodes the source sentence as a fixed-size vector, and the Decoder generates the target sentence from this vector.

Example: To translate the English sentence “I am learning” into the French “Je suis en train d’apprendre”, the Encoder first converts the English sentence into a vector, and the Decoder then uses this vector to generate the French sentence.

2. Attention mechanism

The Attention mechanism allows the model to “focus” on different parts of the source sentence when decoding. This results in more accurate translations, especially for longer sentences.

Example: When translating “I am learning to translate with neural networks”, the model may pay particular attention to the source word “networks” when it generates the French word “réseaux”.
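The core of attention is small enough to write out directly. This minimal sketch uses dot-product scoring (one common choice; other models use an additive, concatenation-based score): it scores the decoder state against each encoder state, turns the scores into weights with a softmax, and averages the encoder states with those weights. The vectors are made-up three-dimensional stand-ins for real hidden states.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(query, keys, values):
    # One score per source position: how well the decoder state matches each key
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the value vectors
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

# Made-up encoder states for three source words (used as both keys and values)
source_words = ["learning", "neural", "networks"]
encoder_states = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Made-up decoder state while generating "réseaux"
query = [0.1, 0.5, 2.0]

weights, context = dot_product_attention(query, encoder_states, encoder_states)
for word, w in zip(source_words, weights):
    print(f"{word}: {w:.2f}")
```

With these numbers, “networks” receives roughly 0.73 of the attention, so the context vector passed to the decoder is dominated by that word.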

3. Word embedding

Word embeddings represent words as dense vectors that capture their semantic information. NMT models can use pre-trained word embeddings such as Word2Vec or GloVe, or learn the embeddings jointly with the rest of the model during training.

Example: The vectors for “king” and “queen” may lie close together in vector space because both words relate to royalty.
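“Close together” is usually measured with cosine similarity, the cosine of the angle between two vectors. The three-dimensional embeddings below are invented for illustration; learned embeddings typically have hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented embeddings: "king" and "queen" point in similar directions
embeddings = {
    "king": [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.0, 0.9],
}

print(round(cosine_similarity(embeddings["king"], embeddings["queen"]), 3))
print(round(cosine_similarity(embeddings["king"], embeddings["apple"]), 3))
```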

4. PyTorch implementation

The following is a simple example of an NMT model implementation based on LSTM and Attention:

```python
import torch
import torch.nn as nn
import torch.optim as optim

# For simplicity, define a tiny vocabulary and a single training pair
source_vocab = {"<PAD>": 0, "<SOS>": 1, "<EOS>": 2, "I": 3, "am": 4, "learning": 5}
target_vocab = {"<PAD>": 0, "<SOS>": 1, "<EOS>": 2, "J'": 3, "apprends": 4}
target_itos = {i: w for w, i in target_vocab.items()}

source_sentences = [["<SOS>", "I", "am", "learning", "<EOS>"]]
target_sentences = [["<SOS>", "J'", "apprends", "<EOS>"]]

# Parameters
embedding_dim = 256
hidden_dim = 512
source_vocab_size = len(source_vocab)
target_vocab_size = len(target_vocab)

# Encoder: embeds the source tokens and runs them through an LSTM
class Encoder(nn.Module):
    def __init__(self):
        super().__init__()
        self.embedding = nn.Embedding(source_vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim, hidden_dim, batch_first=True)

    def forward(self, x):
        x = self.embedding(x)                   # (B, S, E)
        outputs, (hidden, cell) = self.lstm(x)  # outputs: (B, S, H)
        return outputs, (hidden, cell)

# Decoder with attention; processes ONE target token per call
class DecoderWithAttention(nn.Module):
    def __init__(self):
        super().__init__()
        self.embedding = nn.Embedding(target_vocab_size, embedding_dim)
        self.lstm = nn.LSTM(embedding_dim + hidden_dim, hidden_dim, batch_first=True)
        self.attention = nn.Linear(hidden_dim + hidden_dim, 1)
        self.fc = nn.Linear(hidden_dim, target_vocab_size)

    def forward(self, x, encoder_outputs, hidden, cell):
        x = self.embedding(x)  # (B, 1) -> (B, 1, E)
        seq_length = encoder_outputs.shape[1]
        # Repeat the current decoder state for every source position: (B, S, H)
        hidden_repeat = hidden[-1].unsqueeze(1).repeat(1, seq_length, 1)
        energy = torch.tanh(self.attention(torch.cat((encoder_outputs, hidden_repeat), dim=2)))
        attention_weights = torch.softmax(energy, dim=1)  # (B, S, 1), sums to 1 over S
        context = torch.sum(attention_weights * encoder_outputs, dim=1, keepdim=True)  # (B, 1, H)
        x = torch.cat((x, context), dim=2)
        outputs, (hidden, cell) = self.lstm(x, (hidden, cell))
        return self.fc(outputs.squeeze(1)), hidden, cell  # logits: (B, V)

encoder = Encoder()
decoder = DecoderWithAttention()
optimizer = optim.Adam(list(encoder.parameters()) + list(decoder.parameters()), lr=0.001)
criterion = nn.CrossEntropyLoss(ignore_index=target_vocab["<PAD>"])

# Training loop (teacher forcing: feed the correct previous token at each step)
for epoch in range(1000):
    for src, tgt in zip(source_sentences, target_sentences):
        src = torch.tensor([source_vocab[word] for word in src]).unsqueeze(0)
        tgt = torch.tensor([target_vocab[word] for word in tgt]).unsqueeze(0)
        optimizer.zero_grad()
        encoder_outputs, (hidden, cell) = encoder(src)
        loss = 0.0
        for t in range(tgt.shape[1] - 1):
            logits, hidden, cell = decoder(tgt[:, t:t + 1], encoder_outputs, hidden, cell)
            loss = loss + criterion(logits, tgt[:, t + 1])
        loss.backward()
        optimizer.step()
    if epoch % 100 == 0:
        print(f"Epoch {epoch}, Loss: {loss.item():.4f}")

# Greedy decoding: always pick the most likely next token
def translate(tokens, max_len=10):
    with torch.no_grad():
        src = torch.tensor([source_vocab[w] for w in tokens]).unsqueeze(0)
        encoder_outputs, (hidden, cell) = encoder(src)
        token = torch.tensor([[target_vocab["<SOS>"]]])
        result = ["<SOS>"]
        for _ in range(max_len):
            logits, hidden, cell = decoder(token, encoder_outputs, hidden, cell)
            next_id = logits.argmax(dim=1).item()
            result.append(target_itos[next_id])
            if next_id == target_vocab["<EOS>"]:
                break
            token = torch.tensor([[next_id]])
        return result

# Input: <SOS> I am learning <EOS>
print(" ".join(translate(["<SOS>", "I", "am", "learning", "<EOS>"])))
# Expected once the loss is near zero: <SOS> J' apprends <EOS>
```

This code demonstrates an attention-based NMT model that maps a source sentence to a target sentence. During training, the decoder is fed the correct previous word (teacher forcing) and recomputes attention over all encoder outputs at every step; at inference time, `translate` decodes greedily. This is only the foundation of NMT; more advanced models such as the Transformer add many more details and techniques.

V. Evaluation Methodology

Evaluation of machine translation is a key part of measuring model performance. Accurate, fluent and natural translation output is the goal, but how do we quantify these goals and determine the quality of the model?

1. BLEU Score

The BLEU (Bilingual Evaluation Understudy) score is the most commonly used automatic evaluation metric in machine translation. It compares the n-grams of the machine translation output with those of one or more reference translations.

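Example: Suppose the machine output is “the cat is on the mat” and the reference is “the cat is sitting on the mat”. The 1-gram precision is 6/6 (every output word appears in the reference, and “the” occurs twice in both), the 2-gram precision is 4/5, and so on. BLEU combines these precisions as a geometric mean and multiplies the result by a brevity penalty, because the output (6 words) is shorter than the reference (7 words).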
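The calculation can be sketched from scratch: BLEU-N = BP × exp((1/N) Σ log pₙ), where pₙ is the clipped (“modified”) n-gram precision and BP = 1 if the output length c exceeds the reference length r, else exp(1 - r/c). The function names below are our own; library implementations such as sacreBLEU add standardized tokenization and smoothing options that this unsmoothed sketch omits.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def modified_precision(candidate, references, n):
    """Return (clipped matches, total candidate n-grams) for one n-gram order."""
    cand_counts = Counter(ngrams(candidate, n))
    # Each n-gram is credited at most as often as it appears in any single reference
    max_ref_counts = Counter()
    for ref in references:
        for gram, count in Counter(ngrams(ref, n)).items():
            max_ref_counts[gram] = max(max_ref_counts[gram], count)
    clipped = sum(min(c, max_ref_counts[g]) for g, c in cand_counts.items())
    return clipped, sum(cand_counts.values())

def brevity_penalty(candidate, references):
    c = len(candidate)
    # Use the reference length closest to the candidate's (the shorter one on ties)
    r = min((abs(len(ref) - c), len(ref)) for ref in references)[1]
    return 1.0 if c > r else math.exp(1 - r / c)

def bleu_sketch(candidate, references, max_n=4):
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        matched, total = modified_precision(candidate, references, n)
        if matched == 0:
            return 0.0  # unsmoothed: one zero precision makes the geometric mean zero
        log_precision_sum += math.log(matched / total)
    return brevity_penalty(candidate, references) * math.exp(log_precision_sum / max_n)

candidate = "the cat is on the mat".split()
references = ["the cat is sitting on the mat".split()]
for n in range(1, 5):
    print(n, modified_precision(candidate, references, n))  # (6, 6), (4, 5), (2, 4), (0, 3)
print("BP:", round(brevity_penalty(candidate, references), 4))        # 0.8465
print("BLEU-4:", bleu_sketch(candidate, references))                   # 0.0
print("BLEU-2:", round(bleu_sketch(candidate, references, max_n=2), 4))  # 0.7571
```

Note that the unsmoothed sentence-level BLEU-4 is 0 here because no 4-gram matches. This is one reason sentence-level scores are usually smoothed and BLEU is normally reported over a whole test corpus.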

2. METEOR

METEOR (Metric for Evaluation of Translation with Explicit ORdering) is another machine translation metric. It matches words not only exactly but also by stem and by synonym, and it penalizes output whose matched words are fragmented or out of order.

Example: If the machine output is “the pet is on the rug” and the reference translation is “the cat is on the mat”, some words do not match exactly. Exact matching gives no credit for “pet” or “rug”, but METEOR can credit the pairs “pet”/“cat” and “rug”/“mat” when its synonym resource treats them as matches.
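The original METEOR formulation combines unigram precision P and recall R into a recall-weighted harmonic mean, F_mean = 10PR / (R + 9P), and multiplies it by (1 - Penalty), where Penalty = 0.5 × (chunks / matches)³ and a chunk is a run of matched words that are adjacent in both sentences. The sketch below simplifies heavily: it aligns words greedily left to right (real METEOR matches in stages and picks the alignment with the fewest crossings), and the `synonyms` dictionary is a hypothetical stand-in for a resource such as WordNet.

```python
def meteor_sketch(candidate, reference, synonyms=None):
    synonyms = synonyms or {}

    def match(a, b):
        return a == b or b in synonyms.get(a, set()) or a in synonyms.get(b, set())

    # Greedy alignment: each candidate word takes the first unused matching reference word
    used = set()
    alignment = []  # (candidate position, reference position)
    for i, word in enumerate(candidate):
        for j, ref_word in enumerate(reference):
            if j not in used and match(word, ref_word):
                used.add(j)
                alignment.append((i, j))
                break
    m = len(alignment)
    if m == 0:
        return 0.0
    precision = m / len(candidate)
    recall = m / len(reference)
    fmean = 10 * precision * recall / (recall + 9 * precision)
    # Count chunks: a new chunk starts whenever matches stop being adjacent in both sentences
    chunks = 1
    for (i1, j1), (i2, j2) in zip(alignment, alignment[1:]):
        if i2 != i1 + 1 or j2 != j1 + 1:
            chunks += 1
    penalty = 0.5 * (chunks / m) ** 3
    return fmean * (1 - penalty)

cand = "the pet is on the rug".split()
ref = "the cat is on the mat".split()
print(round(meteor_sketch(cand, ref), 4))  # 0.625 (exact matches only)
print(round(meteor_sketch(cand, ref, {"pet": {"cat"}, "rug": {"mat"}}), 4))  # 0.9977
```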

3. ROUGE

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is usually used to evaluate automatic summaries, but can also be applied to machine translation. It measures n-gram recall: the fraction of n-grams in the reference translation that also appear in the machine translation output.

Example: For the same sentences, “the cat is on the mat” (output) and “the cat is sitting on the mat” (reference), the ROUGE-1 recall is 6/7, since 6 of the 7 reference words appear in the output.
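ROUGE-N recall is short enough to sketch directly. The function name is our own; the `&` operator on `Counter` objects keeps the minimum count of each n-gram, which clips repeated words such as “the”.

```python
from collections import Counter

def rouge_n_recall(candidate, reference, n=1):
    def grams(tokens):
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    cand, ref = grams(candidate), grams(reference)
    overlap = sum((cand & ref).values())  # clipped overlap counts
    return overlap / max(sum(ref.values()), 1)

candidate = "the cat is on the mat".split()
reference = "the cat is sitting on the mat".split()
print(rouge_n_recall(candidate, reference, 1))  # 6/7 ≈ 0.857
print(rouge_n_recall(candidate, reference, 2))  # 4/6 ≈ 0.667
```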

4. TER

TER (Translation Edit Rate) measures the minimum number of edits (insertions, deletions, substitutions, and shifts of word sequences) needed to turn the machine translation output into the reference translation, divided by the number of words in the reference.

Example: For the output “the cat sat on the mat” and the reference “the cat is sitting on the mat”, two edits are needed: insert “is” and substitute “sitting” for “sat”. With 7 reference words, the TER is 2/7 ≈ 0.29.
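The core of TER is a word-level edit distance, computed with dynamic programming. This sketch leaves out TER's shift operation (moving a block of words in one edit), so it computes a word error rate that shifts could only lower; the function names are our own.

```python
def word_edit_distance(hyp, ref):
    # dp[i][j] = minimum edits to turn hyp[:i] into ref[:j]
    dp = [[0] * (len(ref) + 1) for _ in range(len(hyp) + 1)]
    for i in range(len(hyp) + 1):
        dp[i][0] = i  # delete all i hypothesis words
    for j in range(len(ref) + 1):
        dp[0][j] = j  # insert all j reference words
    for i in range(1, len(hyp) + 1):
        for j in range(1, len(ref) + 1):
            cost = 0 if hyp[i - 1] == ref[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j] + 1,         # deletion
                           dp[i][j - 1] + 1,         # insertion
                           dp[i - 1][j - 1] + cost)  # substitution or match
    return dp[-1][-1]

def ter_without_shifts(hyp, ref):
    return word_edit_distance(hyp, ref) / len(ref)

hyp = "the cat sat on the mat".split()
ref = "the cat is sitting on the mat".split()
print(word_edit_distance(hyp, ref))            # 2
print(round(ter_without_shifts(hyp, ref), 3))  # 0.286
```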

5. Manual assessment

Although automated evaluation methods provide quick feedback, human evaluation remains the gold standard for ensuring translation quality. Evaluators typically rate translations based on accuracy, fluency and fidelity to the source text.

Example: A translation may receive a high BLEU score, but if it does not convey the intent of the source text or reads unnaturally, a human evaluator may still rate it poorly.

Overall, evaluating the performance of machine translation is a multifaceted task involving a variety of tools and methods. Ideally, researchers and developers will combine multiple evaluation methods to gain a comprehensive understanding of the model’s performance.
