Many students think speech recognition is very difficult. I used to think so too, but later found it is much more approachable than it looks, largely because Python has a powerful audio-processing library called Librosa. Librosa can load and process audio and handles spectrogram representation, amplitude conversion (for example, to decibels), time-frequency conversion, and feature extraction such as timbre and pitch features. For a fuller introduction to Librosa and its applications, see the official website or other blog posts. Here I will simply install it and then walk through a simple recognition example: telling which person is speaking from the timbre of their voice.

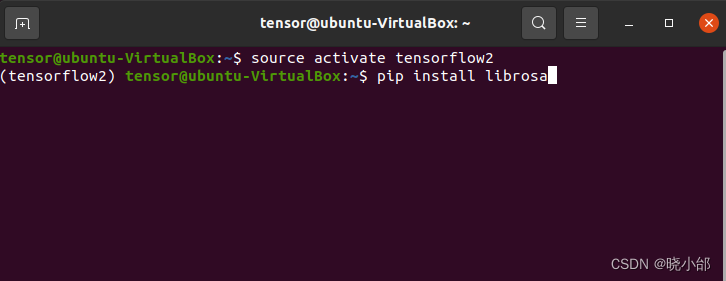

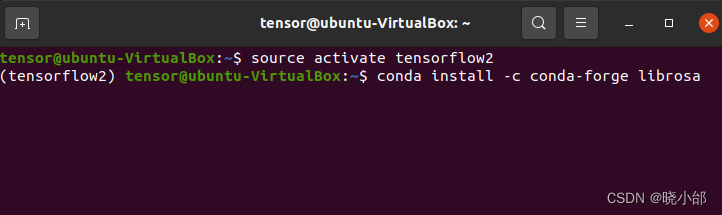

Step 1: Install the Librosa library in the terminal

Method 1: Use the pip command

```bash
pip install librosa
```

Method 2: Use the conda command

```bash
conda install -c conda-forge librosa
```

Step 2: Open Jupyter and import the libraries used in this guide

```python
import librosa
import numpy as np
from sklearn import svm
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score
from sklearn.linear_model import LogisticRegression
import IPython.display as ipd
```

Step 3: Prepare the voice data. This means recording audio of different people's voices. There is no strict length requirement, but I think 20-30 seconds per recording is enough. You need at least 3 recordings (one per speaker), because the method below is a multi-class classifier and the code is written for three speakers. Librosa generally works with WAV and MP3 files. One thing to watch out for: if your recording is not already in MP3 or WAV format, do not just rename the file to change its extension. Convert it with a proper audio conversion tool, otherwise loading will fail with an error (I tried). My three training files are tbb-01.mp3 (my own voice), aichen-01.mp3 and xsc-01.mp3; replace them with recordings of your own. If anything is still unclear, let's discuss in the comments section.

```python
# Load data sets
def load_data():
    # Load the audio recordings of the three speakers: tbb, aichen, xsc
    tbb, sr1 = librosa.load('tbb-01.mp3')
    aichen, sr2 = librosa.load('aichen-01.mp3')
    xsc, sr3 = librosa.load('xsc-01.mp3')

    # Extract MFCC features, which capture the timbre of each person's voice
    tbb_mfcc = librosa.feature.mfcc(y=tbb, sr=sr1)
    aichen_mfcc = librosa.feature.mfcc(y=aichen, sr=sr2)
    xsc_mfcc = librosa.feature.mfcc(y=xsc, sr=sr3)

    # Combine all speakers into one dataset; each MFCC matrix has shape
    # (n_mfcc, n_frames), so transpose it to get one row per frame
    X = np.concatenate((tbb_mfcc.T, aichen_mfcc.T, xsc_mfcc.T), axis=0)

    # Generate one integer label per frame: 0 = tbb, 1 = aichen, 2 = xsc
    y = np.concatenate((np.zeros(len(tbb_mfcc.T)),
                        np.ones(len(aichen_mfcc.T)),
                        2 * np.ones(len(xsc_mfcc.T)))).astype(int)
    return X, y
```

Execute the function and output the labels:

```python
# Load data sets
X, y = load_data()
y
```

Why does the result run from 0 to 2? There are 3 recordings here, and each one gets its own label when the dataset is built: the first recording is labeled 0, the second 1, the third 2, and so on for as many recordings as you have. Why, then, are there many 0s, 1s and 2s? Because `librosa.feature.mfcc` splits each recording into short overlapping frames (about 23 ms apart with the default settings) and returns one MFCC vector per frame. Every frame becomes one sample carrying its speaker's label, so the more audio you record, the more training samples you get, which generally helps training.
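The per-frame labeling can be checked with a little arithmetic. With Librosa's defaults (`sr=22050`, `hop_length=512`, `center=True`), `librosa.feature.mfcc` returns one column per frame, and a clip of `n` samples yields `1 + n // 512` frames. The sketch below uses made-up clip lengths of 25, 30 and 20 seconds (assumptions for illustration) to show how many rows each speaker contributes, and builds the same kind of label vector as `load_data` using `np.repeat` instead of concatenating `zeros` and `ones`.

```python
import numpy as np

SR = 22050        # librosa.load's default sampling rate
HOP_LENGTH = 512  # default hop between MFCC frames (about 23 ms at 22050 Hz)

def n_frames(n_samples, hop_length=HOP_LENGTH):
    """Number of MFCC frames librosa produces for a clip when center=True."""
    return 1 + n_samples // hop_length

# Hypothetical clip lengths in seconds for speakers 0, 1 and 2
durations = [25, 30, 20]
frames_per_speaker = [n_frames(d * SR) for d in durations]

# One label per frame: every frame of speaker k gets label k
y = np.repeat(np.arange(len(durations)), frames_per_speaker)

print(frames_per_speaker, y.shape)
```

So even three short recordings give a few thousand labeled samples, which is why the label vector is so long.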

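Step 4: Train a model on the dataset prepared above

```python
from sklearn.multiclass import OneVsRestClassifier

# Training models
def train(X, y):
    # Split the dataset into training (80%) and test (20%) sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

    # One-vs-rest multi-class classification with logistic regression.
    # LogisticRegression(multi_class='ovr') is deprecated since scikit-learn 1.5,
    # so the one-vs-rest wrapper is used instead; max_iter is raised to help convergence.
    model = OneVsRestClassifier(LogisticRegression(max_iter=1000))

    # Fit the model on the training frames
    model.fit(X_train, y_train)
    return model
```

Execute the function: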

```python
# Training models
model = train(X, y)
```

Step 5: Test the model
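Before trying a brand-new recording, it can help to measure accuracy on held-out frames with the `accuracy_score` function imported in Step 2. The sketch below is self-contained, so it uses synthetic, well-separated stand-in features (an assumption) instead of real MFCCs, and it adds a `StandardScaler` in front of the classifier, a common choice because MFCC coefficients have very different scales; that scaling step is my own addition, not part of the original pipeline.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for the MFCC matrix: 3 "speakers", 300 frames each, 20 coefficients
rng = np.random.default_rng(0)
centers = rng.normal(scale=5.0, size=(3, 20))
X = np.vstack([c + rng.normal(size=(300, 20)) for c in centers])
y = np.repeat([0, 1, 2], 300)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y)

# Standardize each coefficient, then apply one-vs-rest logistic regression
model = make_pipeline(StandardScaler(),
                      OneVsRestClassifier(LogisticRegression(max_iter=1000)))
model.fit(X_train, y_train)

acc = accuracy_score(y_test, model.predict(X_test))
print(f"Held-out frame accuracy: {acc:.3f}")
```

Keep in mind that neighboring frames from the same recording are very similar, so a random frame-level split tends to overestimate accuracy. Testing on a separately recorded clip, as done below with tbb-02.mp3, is a fairer check.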

```python
# Test models
def predict(model, audio_file):
    # Load the audio file and extract MFCC features (one row per frame after .T)
    audio, sr = librosa.load(audio_file)
    mfcc = librosa.feature.mfcc(y=audio, sr=sr)

    # Class probabilities for every frame: shape (n_frames, n_classes)
    proba = model.predict_proba(mfcc.T)

    # Average the probabilities over all frames and return the most likely class
    mean_proba = proba.mean(axis=0)
    return model.classes_[np.argmax(mean_proba)]
```

Execute the function. For the test, I recorded a new clip of my own voice:

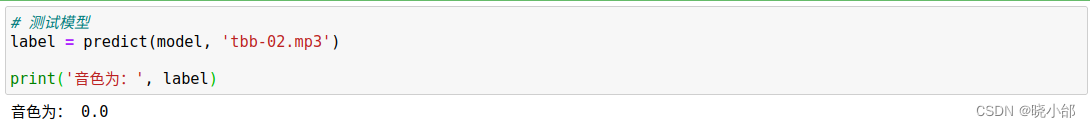

```python
# Test models
label = predict(model, 'tbb-02.mp3')
print('Tone is:', label)
```

The result is as follows:

The predicted label is 0, which is correct: label 0 belongs to tbb, the speaker in tbb-02.mp3.
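Because the model classifies every MFCC frame separately, the answer for a whole clip has to be aggregated from many frame-level outputs. The sketch below compares two common ways of doing that, averaging the class probabilities over frames and taking a majority vote over per-frame labels, on a small made-up probability matrix that stands in for `model.predict_proba(mfcc.T)`.

```python
import numpy as np

# Made-up per-frame probabilities (rows: frames, columns: classes 0, 1, 2)
proba = np.array([
    [0.7, 0.2, 0.1],
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.8, 0.1, 0.1],
])
classes = np.array([0, 1, 2])  # stands in for model.classes_

# Option 1: average the probabilities over all frames, then pick the best class
mean_label = classes[np.argmax(proba.mean(axis=0))]

# Option 2: label each frame, then take the most frequent label
frame_labels = np.argmax(proba, axis=1)
vote_label = classes[np.bincount(frame_labels, minlength=len(classes)).argmax()]

print(mean_label, vote_label)
```

Both usually agree on clean recordings; averaging probabilities also takes into account how confident each frame is, while voting ignores it.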

That is the end of the recognition part. Note that what I have done here is only timbre recognition, that is, telling apart the voices of different people. The features Librosa extracts can be used for other kinds of recognition as well, which I leave for you to explore.

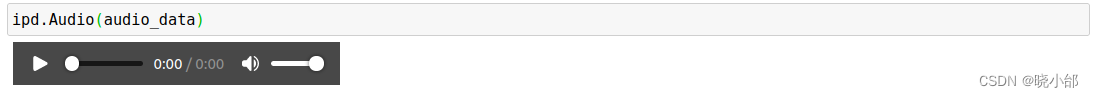

One more library used here is IPython.display, which lets you play audio directly in Jupyter:

```python
import IPython.display as ipd

audio_data = 'nideyangzi.mp3'
ipd.Audio(audio_data)
```

This renders an audio player in the notebook output.
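`ipd.Audio` also accepts a NumPy array together with its sampling rate, which is handy for listening to exactly what `librosa.load` returned. The minimal sketch below plays a synthetic 440 Hz tone (an assumption, so no audio file is needed); when given an array, IPython encodes it as an in-memory WAV.

```python
import numpy as np
import IPython.display as ipd

sr = 22050
t = np.arange(sr) / sr                      # 1 second of sample times
tone = 0.3 * np.sin(2 * np.pi * 440.0 * t)  # synthetic 440 Hz tone as a stand-in

# The rate argument is required when passing raw samples
player = ipd.Audio(tone, rate=sr)
player  # as the last expression in a Jupyter cell, this displays the player
```

With a real recording, the equivalent would be `y, sr = librosa.load('tbb-01.mp3')` followed by `ipd.Audio(y, rate=sr)`.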

Well, that's it for this speech recognition tutorial. Thanks again for your support!
