1. Introduction to the project

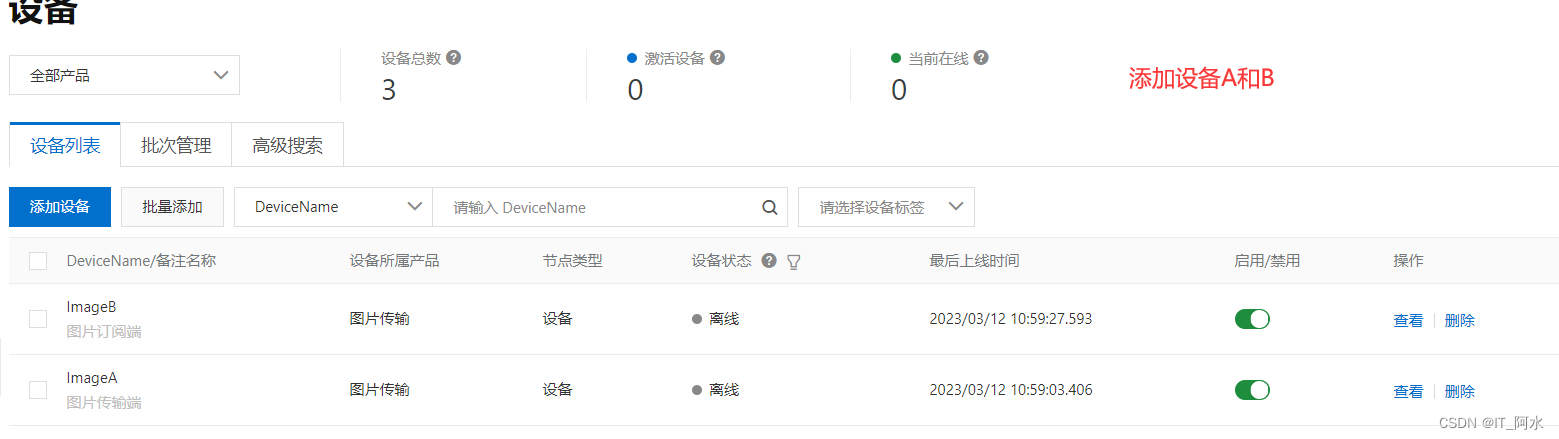

This project implements remote video surveillance on top of an IoT platform: two devices report and subscribe to data over the MQTT protocol, demonstrating how messages can flow between two MQTT devices that subscribe to each other. Two devices, device A and device B, are created on the AliCloud server. Device A captures images from a local camera and uploads them; device B receives the data uploaded by device A, then parses and displays it. On the AliCloud server, a cloud product flow must be configured so that data uploaded by device A is automatically forwarded to device B, which completes the flow of video frame data. Because AliCloud limits message size, each message can carry at most 10240 bytes.

1.1 Hardware Platform

Operating System: Ubuntu18.04

Hardware: built-in PC camera or driver-free USB webcam (V4L2 framework)

Server: AliCloud IoT Platform (based on MQTT protocol)

Image Rendering: GTK2.0

1.2 Development process

Device A: Get camera data -> scale to 240*320 -> encode to JPEG format -> Base64-encode -> assemble into an MQTT message -> publish to the server.

Device B: Subscribe to the data uploaded by device A -> base64 decoding -> decode JPEG data format -> GTK image rendering.

2. Introduction to the MQTT protocol

MQTT (Message Queuing Telemetry Transport) is a messaging protocol based on the publish/subscribe paradigm, standardized as ISO/IEC 20922. It runs on top of the TCP/IP protocol family and is designed for remote devices with limited hardware performance and poor network conditions; it therefore requires a message middleware (the broker).

MQTT is a client-server publish/subscribe message transport protocol, released by IBM in 1999. It is lightweight, simple, open, and easy to implement, which makes it suitable for a wide range of applications. As a low-overhead, low-bandwidth messaging protocol, it is well suited to constrained environments such as machine-to-machine (M2M) communication and the Internet of Things (IoT), as well as small devices and mobile applications. It has been widely used for sensors communicating over satellite links, medical devices that dial up occasionally, smart homes, and various miniaturized devices.

The biggest advantage of MQTT is that it provides real-time, reliable messaging services to connected remote devices with very little code and limited bandwidth.

2.1 MQTT Features

This protocol runs over TCP/IP, or over other network connections that provide an ordered, reliable, bi-directional connection. MQTT is an application-layer protocol with the following characteristics:

- Using the publish/subscribe messaging pattern provides one-to-many message distribution and decoupling between applications.

- Messaging does not require knowledge of the load content.

- Three levels of quality of service (QoS) are offered:

- QoS 0: “at most once”. The message is delivered on a best-effort basis according to what the operating environment can provide, and may be lost. This level can be used, for example, for environmental sensor data, where losing a single reading is acceptable because another will be sent shortly.

- QoS 1: “at least once”. The message is guaranteed to arrive, but may be delivered more than once.

- QoS 2: “exactly once”. The message is guaranteed to arrive exactly once. This level can be used, for example, in a billing system where duplicate or lost messages would result in incorrect charges.

- Very small transport overhead and minimized protocol exchanges reduce network traffic.
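To make the QoS levels concrete, the following minimal sketch shows where the level is carried on the wire, assuming MQTT 3.1.1: the first byte of a PUBLISH packet holds the packet type in its high nibble and the DUP, QoS, and RETAIN flags in its low nibble. The function name `mqtt_publish_header` is hypothetical and is not part of this project's code.

```c
#include <stdint.h>

/* Hypothetical helper: build the first byte of an MQTT 3.1.1 PUBLISH
 * fixed header. Packet type 3 occupies the high nibble; the low nibble
 * holds DUP (bit 3), QoS (bits 2-1) and RETAIN (bit 0).
 * Returns 0 for an invalid QoS value (3 is reserved). */
uint8_t mqtt_publish_header(int dup, int qos, int retain)
{
    if (qos < 0 || qos > 2)
        return 0;
    return (uint8_t)(0x30 | ((dup ? 1 : 0) << 3) | (qos << 1) | (retain ? 1 : 0));
}
```

For example, a QoS 1 publish with neither DUP nor RETAIN set starts with the byte 0x32.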

When an MQTT connection is established, the client needs to connect to the MQTT server via TCP and negotiate a handshake that includes information such as protocol version, client identifier, will message, QoS level, and so on, to ensure that both parties can exchange data correctly. Once the handshake is successful, a persistent TCP connection is established between the client and the server, and messages can be transmitted at any time.
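The handshake described above starts with a CONNECT packet. The sketch below assembles one, assuming MQTT 3.1.1 (protocol level 4), a clean session, username and password present, and no will message. The names `put_str` and `mqtt_build_connect` are hypothetical; the project's own `MQTT_Connect` function may be organized differently.

```c
#include <stdint.h>
#include <stddef.h>
#include <string.h>

/* Append a string with its 2-byte big-endian length prefix. */
static size_t put_str(uint8_t *out, const char *s)
{
    size_t n = strlen(s);
    out[0] = (uint8_t)(n >> 8);
    out[1] = (uint8_t)(n & 0xFF);
    memcpy(out + 2, s, n);
    return n + 2;
}

/* Hypothetical helper: write an MQTT 3.1.1 CONNECT packet into buf.
 * Returns the packet length, or 0 if an argument is too long or buf
 * is too small. */
size_t mqtt_build_connect(uint8_t *buf, size_t cap, const char *client_id,
                          const char *user, const char *pass, uint16_t keepalive)
{
    size_t id_len = strlen(client_id), u_len = strlen(user), p_len = strlen(pass);
    if (id_len > 65535 || u_len > 65535 || p_len > 65535)
        return 0;

    /* Variable header is 10 bytes: protocol name (6), level (1),
     * connect flags (1), keep-alive (2). */
    size_t remaining = 10 + (2 + id_len) + (2 + u_len) + (2 + p_len);
    if (cap < 1 + 4 + remaining) /* conservative: up to 4 length bytes */
        return 0;

    size_t pos = 0, rl = remaining;
    buf[pos++] = 0x10; /* packet type 1 = CONNECT */
    do { /* Remaining Length: 7 bits per byte, high bit = more bytes follow */
        uint8_t b = (uint8_t)(rl % 128);
        rl /= 128;
        if (rl > 0)
            b |= 0x80;
        buf[pos++] = b;
    } while (rl > 0);

    pos += put_str(buf + pos, "MQTT");
    buf[pos++] = 0x04; /* protocol level 4 = MQTT 3.1.1 */
    buf[pos++] = 0xC2; /* flags: username | password | clean session */
    buf[pos++] = (uint8_t)(keepalive >> 8);
    buf[pos++] = (uint8_t)(keepalive & 0xFF);
    pos += put_str(buf + pos, client_id);
    pos += put_str(buf + pos, user);
    pos += put_str(buf + pos, pass);
    return pos;
}
```

The resulting bytes would be written to the TCP socket, after which the client waits for the server's CONNACK before publishing or subscribing.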

Since the TCP protocol itself already provides a certain degree of reliability, the MQTT protocol only needs to implement the publish/subscribe mechanism, QoS level control, retained messages, and similar features on top of TCP, which makes it a lightweight and efficient IoT communication protocol.

2.2 MQTT Protocol Data Volume Limitations

The MQTT protocol itself imposes only a loose limit on packet size, and it must also respect the constraints of the underlying transport (TCP/IP). In practice, the amount of useful data that MQTT can transmit is influenced by many factors, such as network bandwidth, QoS level, and MQTT header overhead. By protocol design, a single message cannot exceed 256 MB. This limit comes from the Remaining Length field of the fixed header, which is a variable-length encoding of at most 4 bytes with 7 data bits per byte, so the largest value it can represent is 2^28-1 = 268,435,455 bytes, just under 256 MB. In practical applications, however, messages anywhere near this size are hard to transmit because of limited network bandwidth and device performance, and servers usually enforce much smaller limits (such as the 10240-byte limit mentioned in the introduction).
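The size limit follows from how the Remaining Length field is encoded. As a minimal sketch, assuming MQTT 3.1.1, the encoder below emits 7 bits of the length per byte and sets the high bit when more bytes follow. The names `mqtt_encode_remaining_length` and `MQTT_MAX_REMAINING_LENGTH` are hypothetical.

```c
#include <stdint.h>
#include <stddef.h>

#define MQTT_MAX_REMAINING_LENGTH 268435455u /* 128^4 - 1, just under 256 MB */

/* Hypothetical helper: encode len as an MQTT Remaining Length field.
 * Writes 1 to 4 bytes into out and returns how many were written,
 * or 0 if len exceeds the protocol maximum. */
size_t mqtt_encode_remaining_length(uint32_t len, uint8_t out[4])
{
    size_t n = 0;
    if (len > MQTT_MAX_REMAINING_LENGTH)
        return 0;
    do {
        uint8_t byte = (uint8_t)(len % 128); /* low 7 bits */
        len /= 128;
        if (len > 0)
            byte |= 0x80; /* continuation bit: more bytes follow */
        out[n++] = byte;
    } while (len > 0);
    return n;
}
```

A 10240-byte remaining length, for instance, encodes as the two bytes 0x80 0x50.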

In addition, it should be noted that if a higher level of QoS is used, such as “at least once” or “exactly once”, the MQTT protocol will acknowledge and retransmit each message, which may result in more network traffic and delay. Therefore, when choosing the QoS level, it is necessary to optimize and adjust it according to the actual situation of the application scenario and the network environment, in order to make full use of the features and advantages of the MQTT protocol.

3. AliCloud Internet of Things platform construction

3.1 Build the AliCloud IoT platform

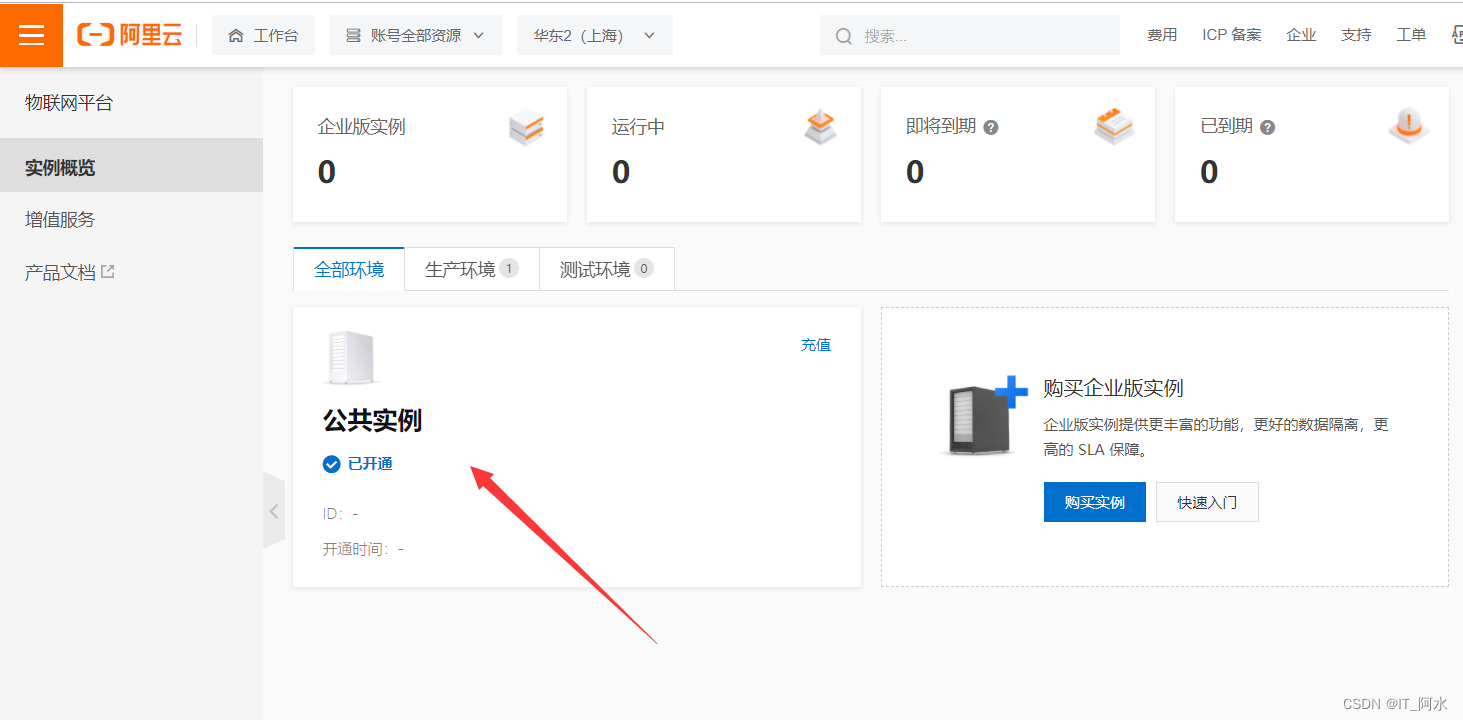

1. Log in to the AliCloud IoT platform

2. Create products

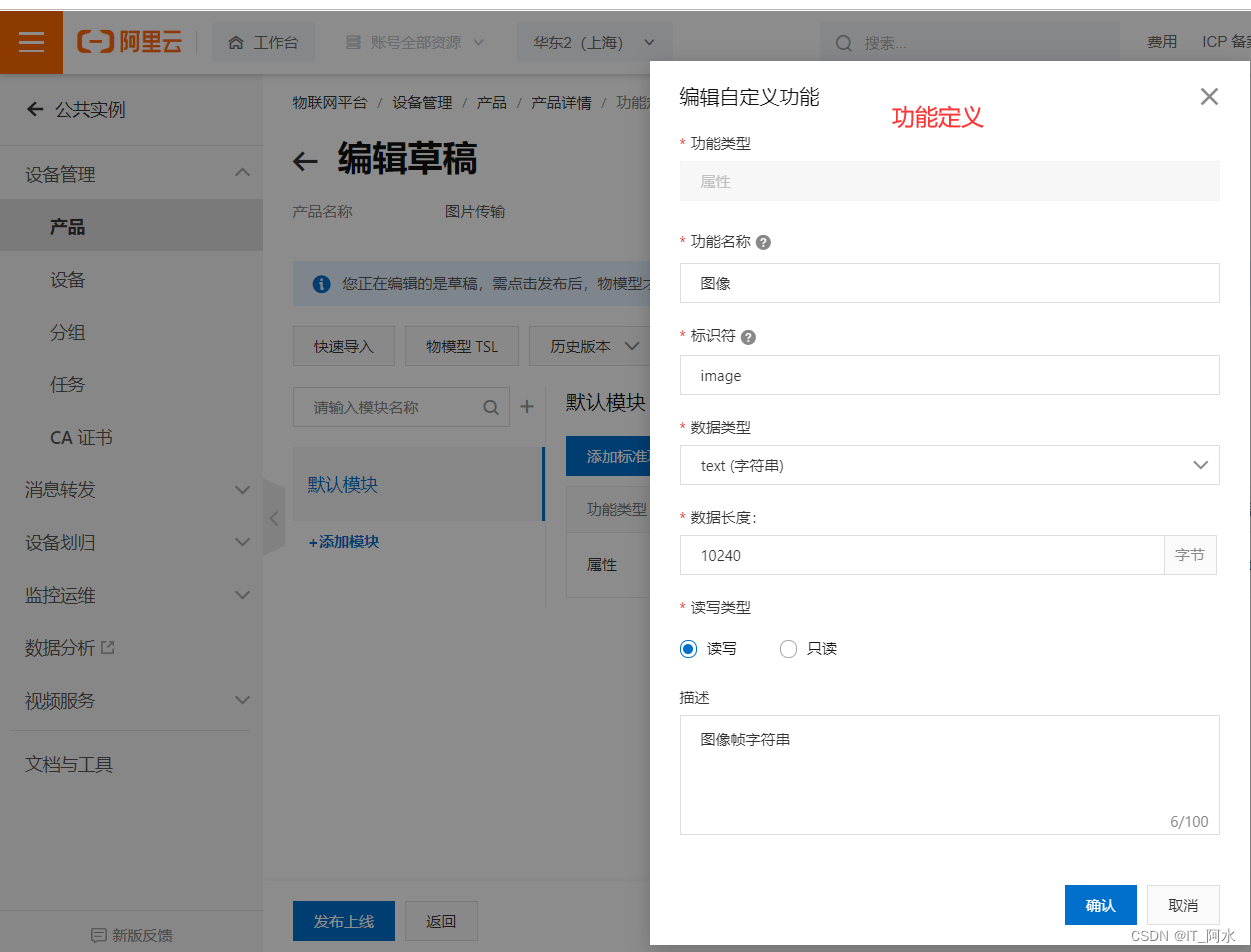

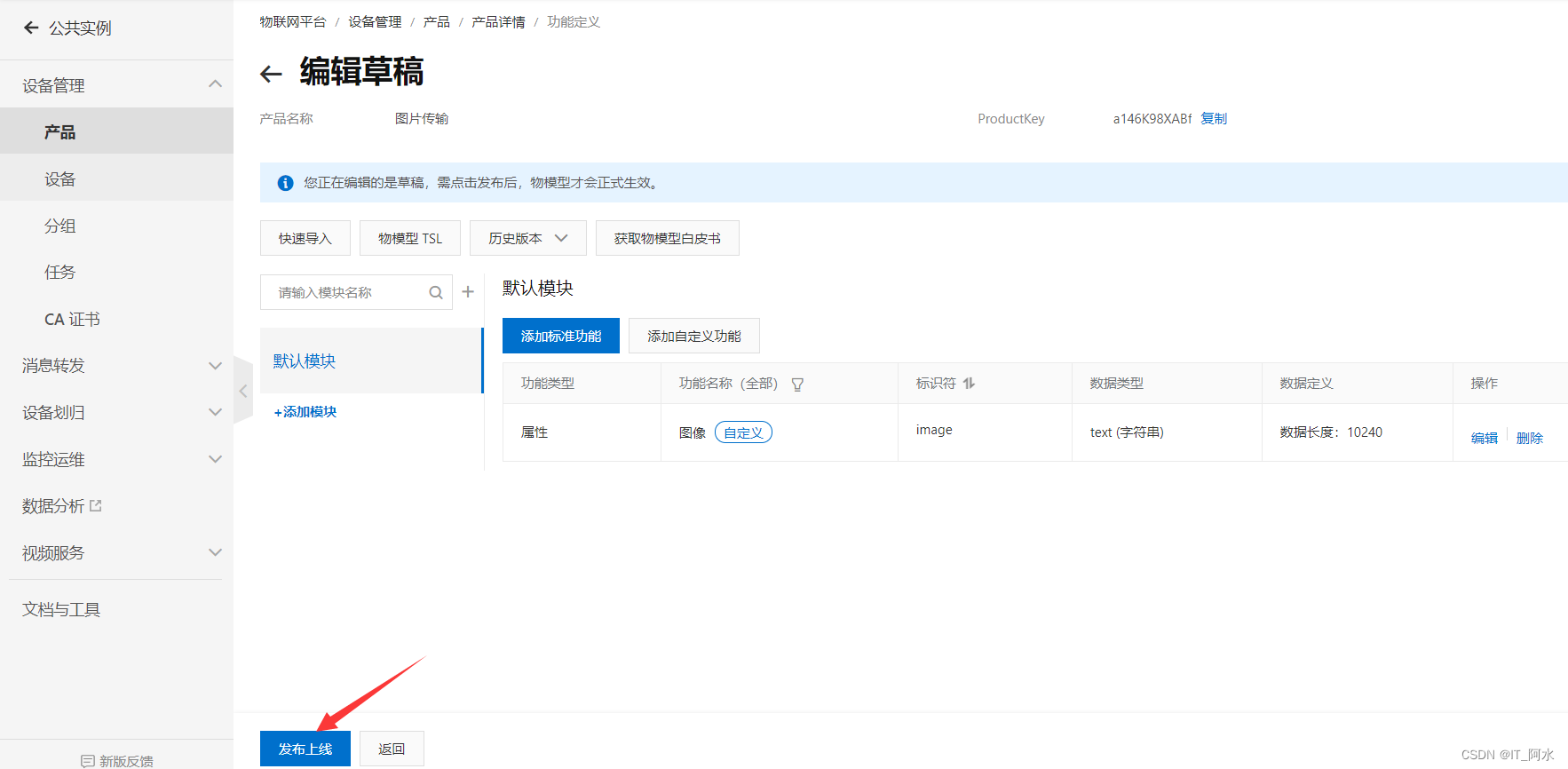

3. Function definition, add customized functions, publish online.

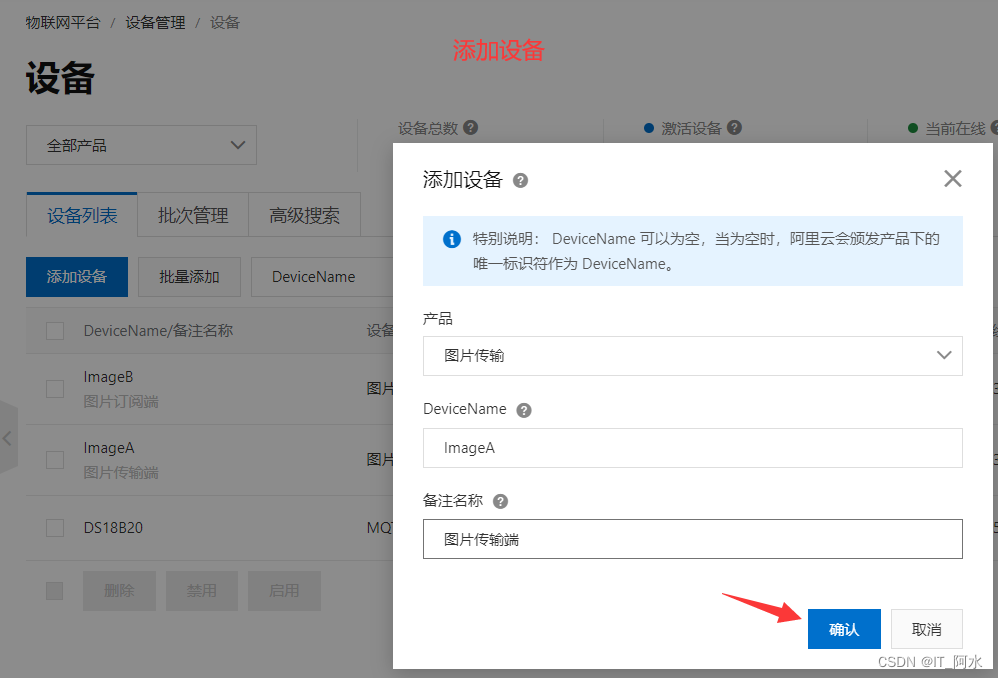

4. Add equipment

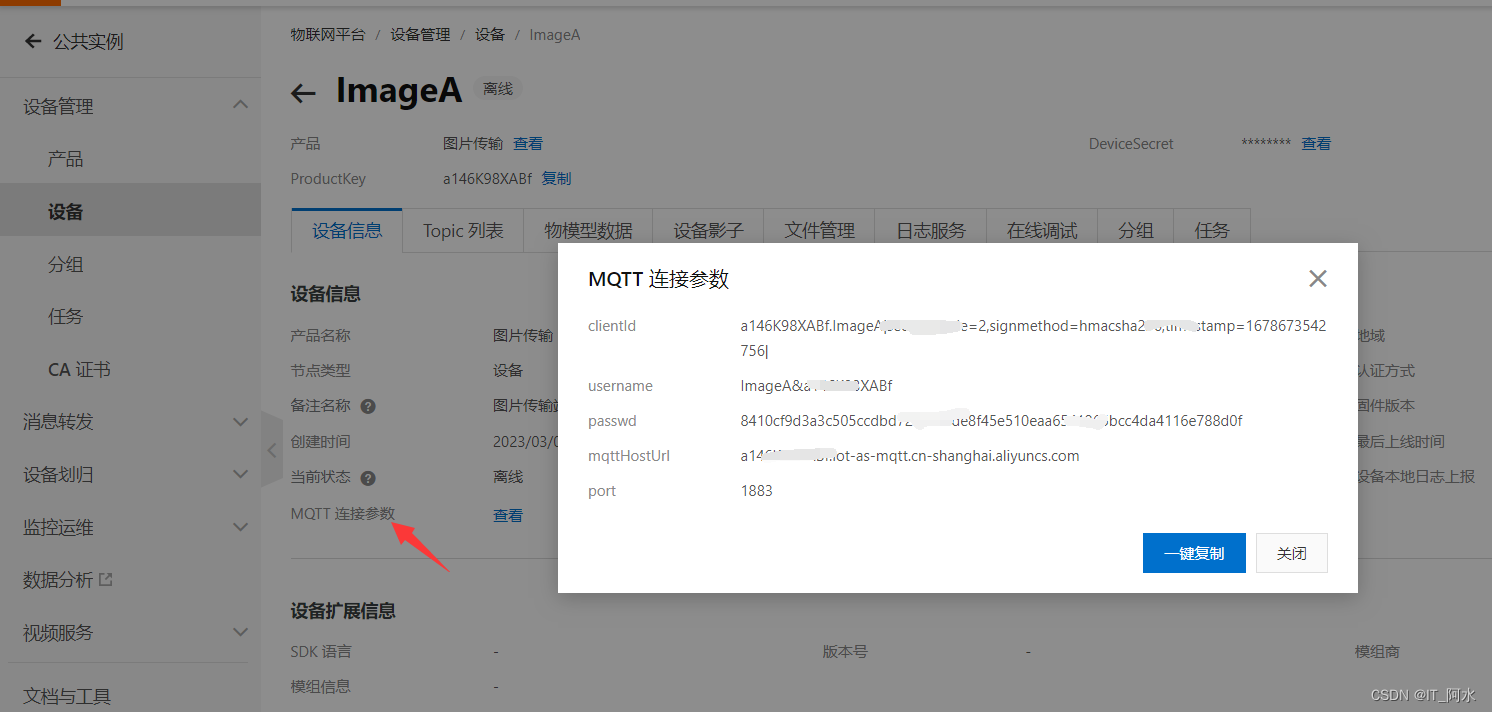

3.2 Device Login

MQTT requires three parameters to connect to AliCloud: client ID, username, and password. These can be obtained directly from the device information on the platform.
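As a rough illustration of how two of these parameters are structured, the sketch below rebuilds the client ID and username in the same shape as the `ClientID` and `Username` values used in the code in section 4. The layout ("productKey.deviceName|securemode=...|" and "deviceName&productKey") is inferred from those sample values and should be treated as an assumption; the function names are hypothetical. The password is a signature generated by the platform and is not derived here.

```c
#include <stdio.h>

/* Hypothetical helper: format a client ID shaped like the sample value
 * "<productKey>.<deviceName>|securemode=2,signmethod=hmacsha256,timestamp=<ms>|".
 * Returns the string length, or -1 if out is too small. */
int aliyun_make_client_id(char *out, size_t cap, const char *product_key,
                          const char *device_name, const char *timestamp_ms)
{
    int n = snprintf(out, cap,
                     "%s.%s|securemode=2,signmethod=hmacsha256,timestamp=%s|",
                     product_key, device_name, timestamp_ms);
    return (n > 0 && (size_t)n < cap) ? n : -1;
}

/* Hypothetical helper: format a username shaped like "<deviceName>&<productKey>". */
int aliyun_make_username(char *out, size_t cap, const char *device_name,
                         const char *product_key)
{
    int n = snprintf(out, cap, "%s&%s", device_name, product_key);
    return (n > 0 && (size_t)n < cap) ? n : -1;
}
```

In practice it is simpler to copy all three values from the platform, as the text describes; the sketch only shows what the strings contain.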

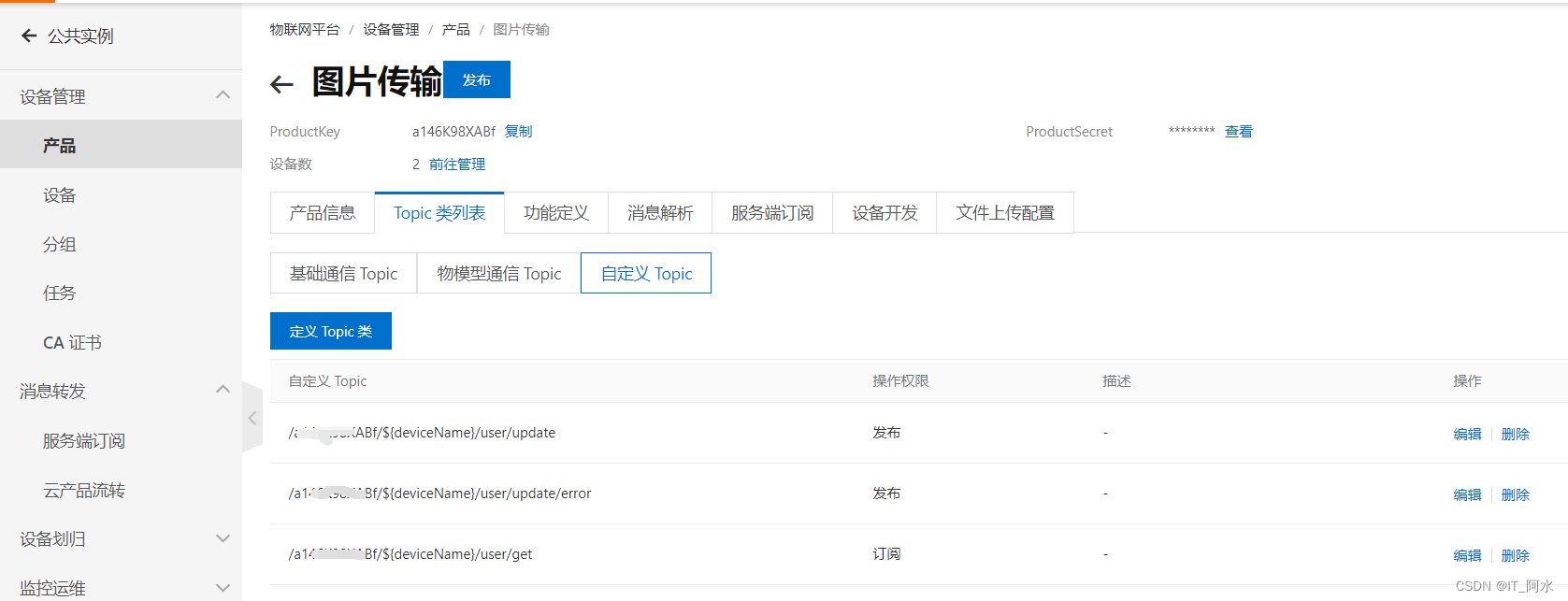

3.3 Message Subscription and Message Publishing

Topic is the transmission intermediary between message publisher (Pub) and subscriber (Sub). Devices can send and receive messages through Topics, thus realizing the communication between the service side and the device side. In order to facilitate the communication of massive devices based on Topics and simplify the authorization operation, the IoT platform defines the Product Topic class and Device Topic.

3.4 Data reporting format

MQTT data is reported in JSON format, as follows:

Publish topic: "/sys/{product id}/{device name}/thing/event/property/post"

Data content format: {"method":"thing.event.property.post","params":{"image":"hello,world"}}

The device side subscribes to incoming messages on:

Subscribe topic: "/sys/{product id}/{device name}/thing/service/property/set"
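The report format can be assembled with `snprintf`. The sketch below assumes that the device publishes to the property-post topic (the `POST_TOPIC` value in the code in section 4) and that the payload carries the `thing.event.property.post` method with the image as a string property. The function names are hypothetical.

```c
#include <stdio.h>

/* Hypothetical helper: build the property-post topic for a device.
 * Returns the string length, or -1 if out is too small. */
int make_post_topic(char *out, size_t cap, const char *product_key,
                    const char *device_name)
{
    int n = snprintf(out, cap, "/sys/%s/%s/thing/event/property/post",
                     product_key, device_name);
    return (n > 0 && (size_t)n < cap) ? n : -1;
}

/* Hypothetical helper: wrap Base64 text in the JSON report format.
 * Base64 text contains only A-Z, a-z, 0-9, '+', '/' and '=', so it
 * needs no JSON escaping. Returns the length, or -1 if out is too small. */
int make_image_payload(char *out, size_t cap, const char *image_b64)
{
    int n = snprintf(out, cap,
                     "{\"method\":\"thing.event.property.post\","
                     "\"params\":{\"image\":\"%s\"}}",
                     image_b64);
    return (n > 0 && (size_t)n < cap) ? n : -1;
}
```

Note that the JSON wrapper adds a few dozen bytes of its own, which count against the per-message size limit.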

4. Linux socket programming to connect to the AliCloud Internet of Things platform

#define SERVER_IP "asfdda.iot-as-mqtt.cn-shanghai.aliyuncs.com" // Server domain name

#define SERVER_PORT 1883 // Port number

#define ClientID "aasfsaXABf.Imasfas|securemode=2,signmethod=hmacsha256,timestamp=1678323607797|"

#define Username "ImsfeA&a1sadf8XABf"

#define Password "15566ab496e81da728a3792ebe532fd4a3f4026a2b831df5af24da06" // ciphertext

#define SET_TOPIC "/sys/a14dXABf/ImagfA/thing/service/property/set" // subscribe

#define POST_TOPIC "/sys/a14sdf8XABf/ImdfeA/thing/event/property/post" // publish

int main()

{

pthread_t id;

signal(SIGPIPE,SIG_IGN);/* ignore SIGPIPE signal */

signal(SIGALRM,signal_func); /* Alarm clock signal */

sockfd=socket(AF_INET,SOCK_STREAM,0);

if(sockfd==-1)

{

printf("Failed to open network socket\n");

return 0;

}

/*Set send buffer size */

int nSendBuf=40*1024;//set to 40 KB

if(setsockopt(sockfd,SOL_SOCKET,SO_SNDBUF,(const char*)&nSendBuf,sizeof(int)))

{

printf("setsockopt(SO_SNDBUF) setup error! \n");

return 0;

}

/*Domain name resolution*/

struct hostent *hostent;

while(1)

{

hostent=gethostbyname(SERVER_IP);

if(hostent==NULL)

{

printf("Domain name resolution failure\n");

sleep(1);

}

else break;

}

printf(" Host name :%s\n",hostent->h_name);

printf("Protocol type: %s\n",(hostent->h_addrtype == AF_INET)?" AF_INET": "AF_INET6");

printf("IP address length:%d\n",hostent->h_length);

char *ip;

for(int i=0;hostent->h_addr_list[i];i++)

{

ip=inet_ntoa(*(struct in_addr *)hostent->h_addr_list[i]);

printf("ip=%s\n",ip);

}

/* Connecting to the server */

struct sockaddr_in addr;

addr.sin_family=AF_INET;//IPV4

addr.sin_port=htons(SERVER_PORT);/*port number**/

addr.sin_addr.s_addr=inet_addr(ip);// server IP

if(connect(sockfd, (struct sockaddr *)&addr,sizeof(struct sockaddr_in))==0)

{

printf("Server connection succeeded\n");

while(1)

{

MQTT_Init();

/*Login to server*/

if(MQTT_Connect(ClientID,Username,Password)==0)

{

break;

}

sleep(1);

printf("Server connection in progress .... \n");

}

printf("Connection successful \r\n");

//Subscribe to IoT platform data

stat=MQTT_SubscribeTopic(SET_TOPIC,1,1);

if(stat)

{

close(sockfd);

printf("Subscription failed \r\n");

exit(0);

}

printf("Subscription successful\r\n");

/* Create thread */

pthread_create(&id, NULL,pth_work_func,NULL);

pthread_detach(id);//set the detach attribute

alarm(3);//alarm function, time arrival will generate SIGALRM signal

int a=0;

while(1)

{

sprintf(mqtt_message,"{\"method\":\"thing.event.property.post\",\"params\":{\"image\":\"AliCloud IoT platform test\"}}");

MQTT_PublishData(POST_TOPIC,mqtt_message,0);//publish data

}

}

}

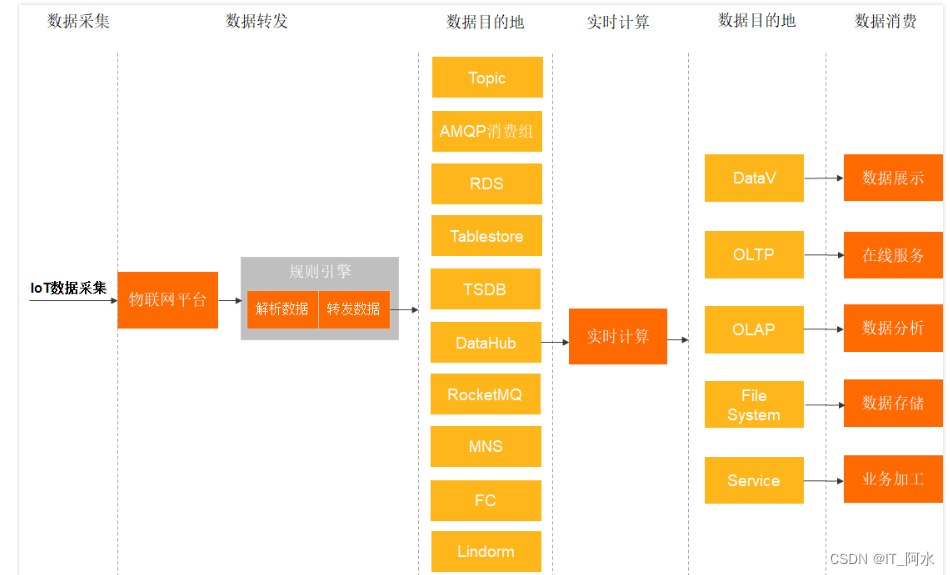

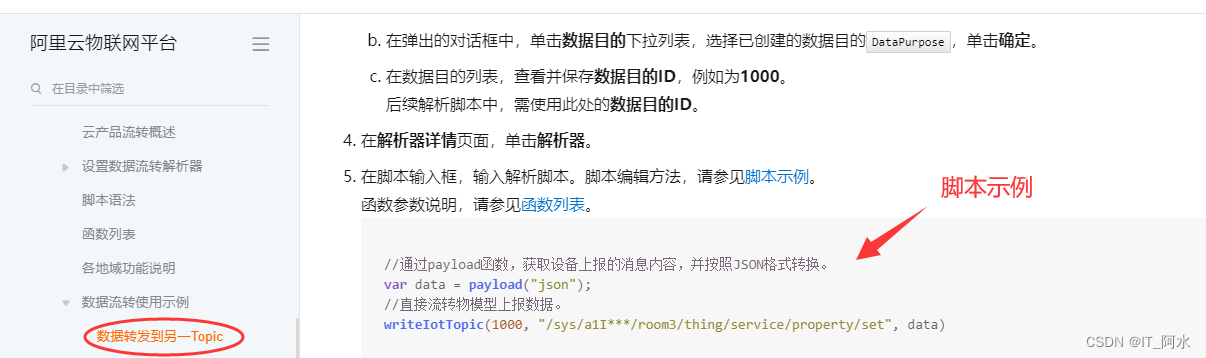

5. Cloud product flow

Cloud product flow documentation: AliCloud Product Flow

5.1 What is Cloud Product Flow

When the device communicates with the IoT platform based on the Topic, you can write SQL to process the data in the Topic in the data flow and configure forwarding rules to forward the processed data to other device Topics or other services of AliCloud.

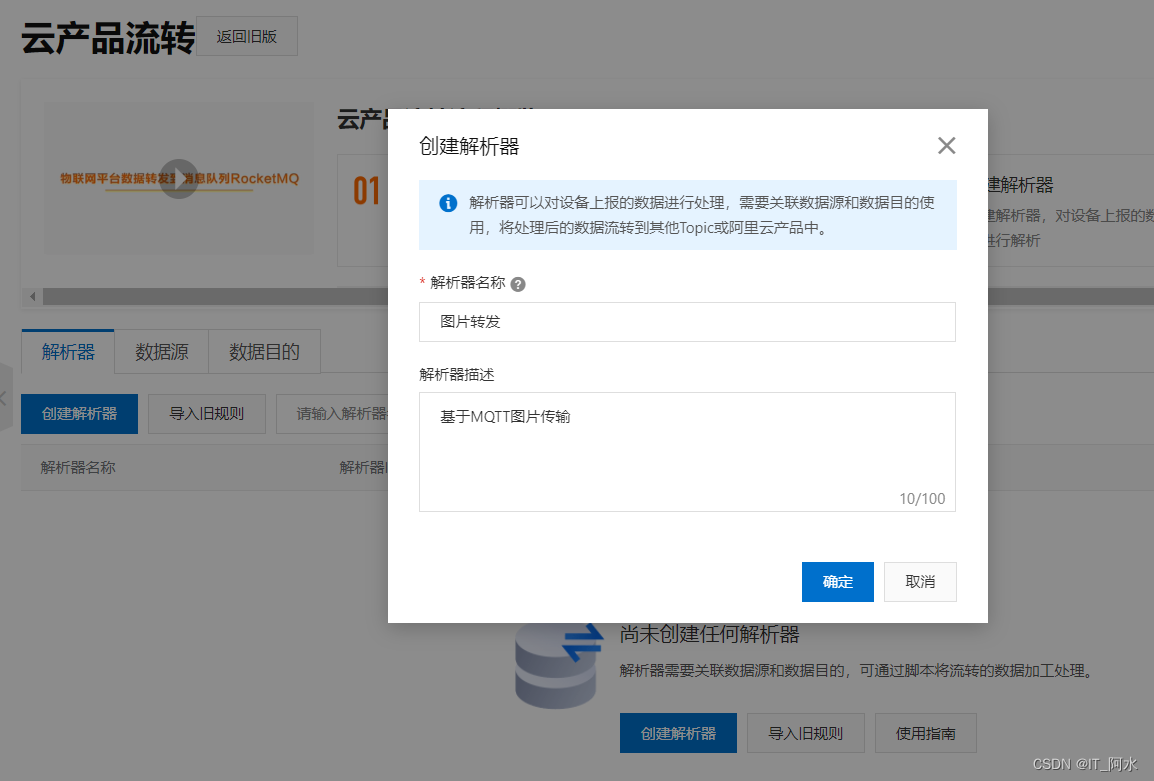

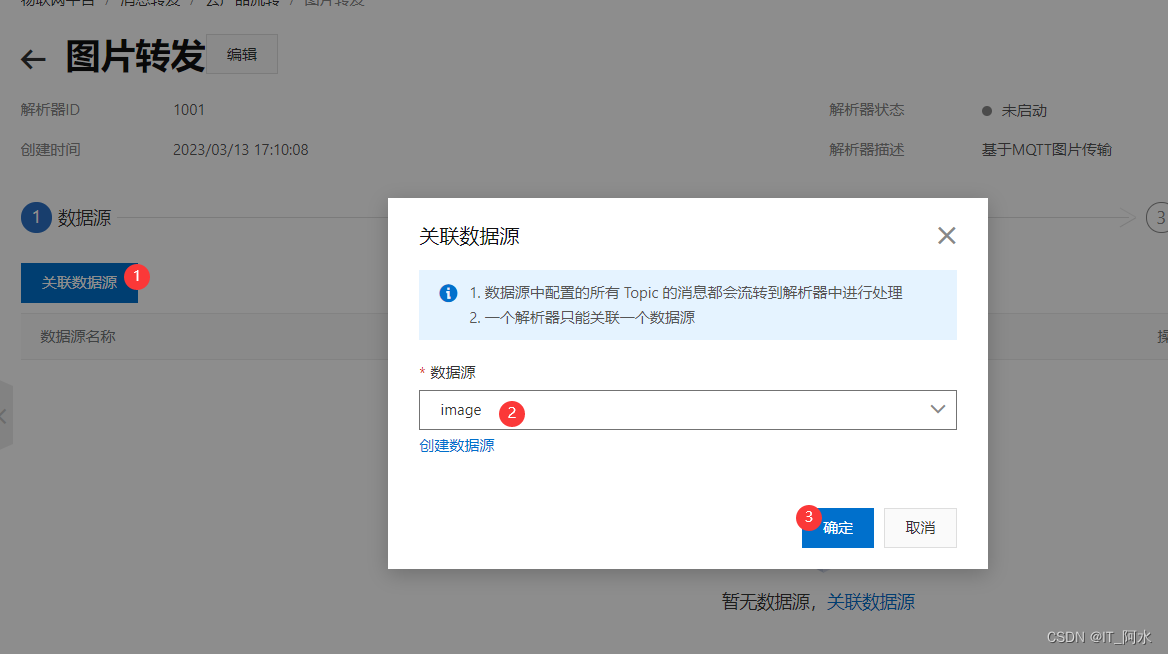

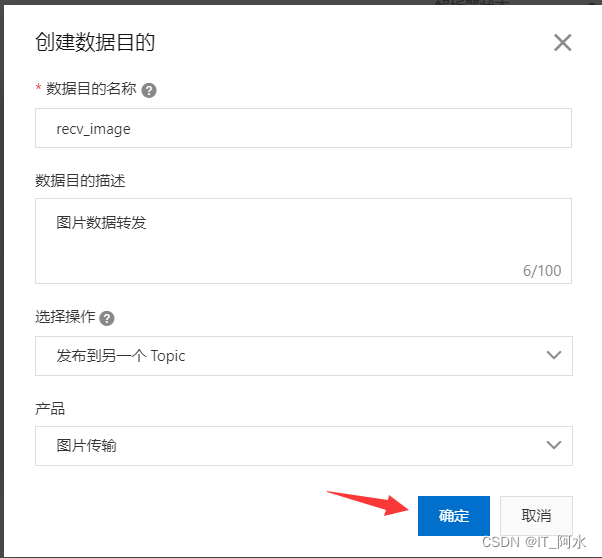

5.2 Cloud Product Flow Configuration

1. Create a parser

2. Linked data sources

4. Writing parser scripts

Parser description documentation: Parser Documentation

Formatting Example:

// Use the payload function to get the content of the message reported by the device and convert it to JSON.

var data = payload("json");

// Directly forward the data reported by the thing model.

writeIotTopic(1000, topic, data);

The topic is as follows:

After editing, publish the parser. At this point, the configuration of the AliCloud IoT platform is complete.

6. Code realization

6.1 Device A sender

1. USB camera application programming

The V4L2 framework under Linux is used to initialize the USB camera and collect image data.

/*

Camera initialization

Return value: success returns the camera descriptor, failure returns a negative number

*/

int Video_Init(struct CAMERA *camera)

{

int video_fd;

int i=0;

/*1. Open the device node */

video_fd=open(VIDEO_DEV,O_RDWR);

if(video_fd==-1)return -1;

/*2. Setting the camera format */

struct v4l2_format format;

memset(&format,0,sizeof(format));

format.type=V4L2_BUF_TYPE_VIDEO_CAPTURE;//video capture format

format.fmt.pix.width=320;

format.fmt.pix.height=240;

format.fmt.pix.pixelformat=V4L2_PIX_FMT_YUYV;//image data format yuyv

if(ioctl(video_fd,VIDIOC_S_FMT,&format))return -2;

printf("Image size: %d*%d\n",format.fmt.pix.width,format.fmt.pix.height);

camera->image_w=format.fmt.pix.width;

camera->image_h=format.fmt.pix.height;

/*3. Request buffer from kernel */

struct v4l2_requestbuffers reqbuf;

memset(&reqbuf,0,sizeof(reqbuf));

reqbuf.count=4;/*number of buffers**/

reqbuf.type=V4L2_BUF_TYPE_VIDEO_CAPTURE;//video capture format

reqbuf.memory=V4L2_MEMORY_MMAP;/* memory mapping */

if(ioctl(video_fd,VIDIOC_REQBUFS,&reqbuf))return -3;

printf("Number of buffers: %d\n",reqbuf.count);

/*4. Map buffers to process space */

struct v4l2_buffer quebuff;

for(i=0;i<reqbuf.count;i++)

{

memset(&quebuff,0,sizeof(quebuff));

quebuff.index=i;//buffer array subscripts

quebuff.type=V4L2_BUF_TYPE_VIDEO_CAPTURE;//video capture format

quebuff.memory=V4L2_MEMORY_MMAP;/* memory mapping */

if(ioctl(video_fd,VIDIOC_QUERYBUF,&quebuff))return -4;

camera->mamp_buff[i]=mmap(NULL,quebuff.length,PROT_READ|PROT_WRITE,MAP_SHARED,video_fd,quebuff.m.offset);

printf("buff[%d]=%p\n",i,camera->mamp_buff[i]);

camera->mmap_size=quebuff.length;

}

/*5. Add buffer to capture queue */

for(i=0;i<reqbuf.count;i++)

{

memset(&quebuff,0,sizeof(quebuff));

quebuff.index=i;//buffer array subscripts

quebuff.type=V4L2_BUF_TYPE_VIDEO_CAPTURE;//video capture format

quebuff.memory=V4L2_MEMORY_MMAP;/* memory mapping */

if(ioctl(video_fd,VIDIOC_QBUF,&quebuff))return -5;

}

/*6. Turn on the camera*/

int type=V4L2_BUF_TYPE_VIDEO_CAPTURE;//video capture format

if(ioctl(video_fd,VIDIOC_STREAMON,&type))return -6;

return video_fd;

}

2. Image encoding processing

Image data is captured in real time, encoded as a JPEG image, and then Base64-encoded.
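The camera is configured for the YUYV pixel format, so a color conversion is usually needed before JPEG encoding. The sketch below assumes that the encoder is fed RGB24 data and uses an integer approximation of the full-range BT.601 equations; the function names are hypothetical and the project's own conversion may differ.

```c
#include <stdint.h>
#include <stddef.h>

static uint8_t clamp_u8(int v)
{
    return (uint8_t)(v < 0 ? 0 : (v > 255 ? 255 : v));
}

/* Hypothetical helper: convert packed YUYV (4 bytes = Y0 U Y1 V for two
 * neighbouring pixels) to RGB24. width * height must be even.
 * Coefficients are the BT.601 full-range factors scaled by 256:
 * 1.402 -> 359, 0.344 -> 88, 0.714 -> 183, 1.772 -> 454. */
void yuyv_to_rgb24(const uint8_t *yuyv, uint8_t *rgb, int width, int height)
{
    size_t pairs = (size_t)width * (size_t)height / 2;
    for (size_t p = 0; p < pairs; p++) {
        int y0 = yuyv[4 * p];
        int u  = yuyv[4 * p + 1] - 128;
        int y1 = yuyv[4 * p + 2];
        int v  = yuyv[4 * p + 3] - 128;

        /* The chroma terms are shared by both pixels of the pair. */
        int rd = (359 * v) / 256;
        int gd = (88 * u + 183 * v) / 256;
        int bd = (454 * u) / 256;

        rgb[6 * p + 0] = clamp_u8(y0 + rd);
        rgb[6 * p + 1] = clamp_u8(y0 - gd);
        rgb[6 * p + 2] = clamp_u8(y0 + bd);
        rgb[6 * p + 3] = clamp_u8(y1 + rd);
        rgb[6 * p + 4] = clamp_u8(y1 - gd);
        rgb[6 * p + 5] = clamp_u8(y1 + bd);
    }
}
```

With neutral chroma (U = V = 128) the output is a gray level equal to Y, which makes a quick sanity check for the conversion.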

Base64 encoding converts binary data to ASCII characters, using 64 characters to represent an arbitrary binary sequence. Base64-encoded data is about one third larger than the original binary data (every 3 input bytes become 4 output characters), but it can be embedded in text formats and transmitted over a network easily.
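Because of this size expansion, it is worth checking how large a JPEG frame can be before its Base64 text exceeds the per-message limit. The sketch below computes the encoded length and the largest input that fits a given budget; the macro and function names are hypothetical, and the 10240-byte figure is the limit quoted in the introduction.

```c
#include <stddef.h>

#define MAX_MESSAGE_BYTES 10240 /* per-message limit quoted in the introduction */

/* Length of the Base64 text (with '=' padding, without the trailing
 * '\0') produced from n bytes of binary data. */
size_t base64_encoded_len(size_t n)
{
    return 4 * ((n + 2) / 3);
}

/* Largest binary size whose Base64 text fits in budget characters. */
size_t base64_max_input(size_t budget)
{
    return (budget / 4) * 3;
}
```

Under this arithmetic, a 10240-character budget holds at most 7680 bytes of JPEG data, and less once the JSON wrapper around the image field is counted.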

3. Base64 encoding

#include <string.h>

static const char * base64char = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/*

Function: encode image data into Base64 format

Parameters: bindata -- source image data

base64 -- buffer that receives the encoded data

binlength -- source data size in bytes

Return value: pointer to the encoded Base64 data

*/

char * base64_encode( const unsigned char * bindata, char * base64, int binlength )

{

int i, j;

unsigned char current;

for ( i = 0, j = 0 ; i < binlength ; i += 3 )

{

current = (bindata[i] >> 2) ;

current &= (unsigned char)0x3F;

base64[j++] = base64char[(int)current];

current = ( (unsigned char)(bindata[i] << 4 ) ) & ( (unsigned char)0x30 ) ;

if ( i + 1 >= binlength )

{

base64[j++] = base64char[(int)current];

base64[j++] = '=';

base64[j++] = '=';

break;

}

current |= ( (unsigned char)(bindata[i+1] >> 4) ) & ( (unsigned char) 0x0F );

base64[j++] = base64char[(int)current];

current = ( (unsigned char)(bindata[i+1] << 2) ) & ( (unsigned char)0x3C ) ;

if ( i + 2 >= binlength )

{

base64[j++] = base64char[(int)current];

base64[j++] = '=';

break;

}

current |= ( (unsigned char)(bindata[i+2] >> 6) ) & ( (unsigned char) 0x03 );

base64[j++] = base64char[(int)current];

current = ( (unsigned char)bindata[i+2] ) & ( (unsigned char)0x3F ) ;

base64[j++] = base64char[(int)current];

}

base64[j] = '\0';

return base64;

}

4. Base64 decoding

/*

Function: Decode base64 format data.

Formal parameter: base64 base64 format data

bindata Saves the successfully decoded image data

Return Value: Successfully return the decoded image size

*/

static const char * base64char = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

int base64_decode( const char * base64, unsigned char * bindata )

{

int i, j;

unsigned char k;

unsigned char temp[4];

for ( i = 0, j = 0; base64[i] != '\0' ; i += 4 )

{

memset( temp, 0xFF, sizeof(temp) );

for ( k = 0 ; k < 64 ; k ++ )

{

if ( base64char[k] == base64[i] )

temp[0]= k;

}

for ( k = 0 ; k < 64 ; k ++ )

{

if ( base64char[k] == base64[i+1] )

temp[1]= k;

}

for ( k = 0 ; k < 64 ; k ++ )

{

if ( base64char[k] == base64[i+2] )

temp[2]= k;

}

for ( k = 0 ; k < 64 ; k ++ )

{

if ( base64char[k] == base64[i+3] )

temp[3]= k;

}

bindata[j++] = ((unsigned char)(((unsigned char)(temp[0] << 2))&0xFC)) |

((unsigned char)((unsigned char)(temp[1]>>4)&0x03));

if ( base64[i+2] == '=' )

break;

bindata[j++] = ((unsigned char)(((unsigned char)(temp[1] << 4))&0xF0)) |

((unsigned char)((unsigned char)(temp[2]>>2)&0x0F));

if ( base64[i+3] == '=' )

break;

bindata[j++] = ((unsigned char)(((unsigned char)(temp[2] << 6))&0xC0)) |

((unsigned char)(temp[3]&0x3F));

}

return j;

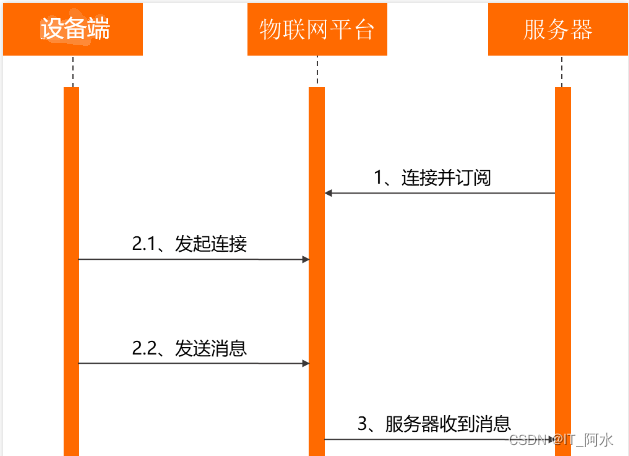

}5. Data reporting

Device A uses socket programming under Linux to connect to the AliCloud server, access the AliCloud IoT platform, and report data in real time over the MQTT protocol.

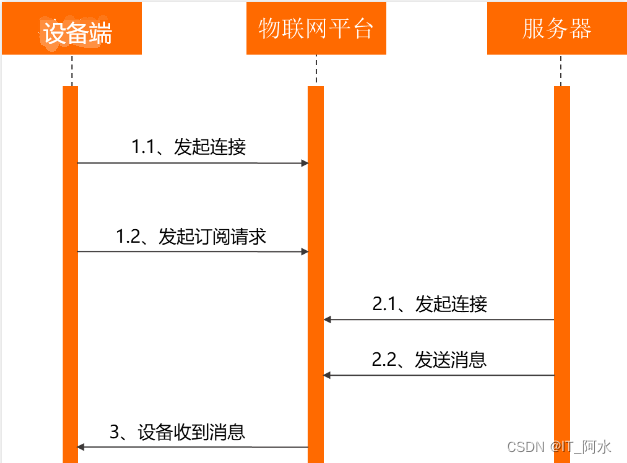

6.2 Device B Subscriber

1. Data acquisition

Device B uses socket programming under Linux to connect to the AliCloud server, access the AliCloud IoT platform, and subscribe to the messages sent by device A.

2. Data parsing

The IoT platform delivers messages in JSON format. Device B parses the message, extracts the image field, and Base64-decodes it to obtain the JPG image data.
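A full JSON parser is not strictly required for this step. The sketch below pulls out the `image` value with plain string functions, assuming the message contains an `"image"` key whose value is a JSON string; because Base64 text never contains quotes or backslashes, the next double quote ends the value. The function name is hypothetical.

```c
#include <string.h>

/* Hypothetical helper: copy the string value of the "image" key from a
 * JSON message into out. Returns the value length, or -1 if the key is
 * missing, the value is not a string, or out is too small. */
int extract_image_field(const char *json, char *out, size_t cap)
{
    const char *key = "\"image\"";
    const char *p = strstr(json, key);
    if (p == NULL)
        return -1;
    p += strlen(key);

    /* Expect optional whitespace, a colon, optional whitespace, a quote. */
    p += strspn(p, " \t\r\n");
    if (*p != ':')
        return -1;
    p++;
    p += strspn(p, " \t\r\n");
    if (*p != '"')
        return -1;
    p++;

    const char *end = strchr(p, '"');
    if (end == NULL)
        return -1;

    size_t n = (size_t)(end - p);
    if (n + 1 > cap) /* need room for the terminating '\0' */
        return -1;
    memcpy(out, p, n);
    out[n] = '\0';
    return (int)n;
}
```

The extracted text can then be passed to `base64_decode` to recover the JPG bytes.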

3. JPG image parsing

The JPG image is decoded to obtain RGB color data.

// Show JPEG Compile with -ljpeg

int LCD_ShowJPEG(unsigned char *jpg_buffer,int size,struct ImageDecodingInfo *image_rgb)

{

struct jpeg_decompress_struct cinfo; //store the data of the image

struct jpeg_error_mgr jerr; //store error messages

unsigned char *buffer;

unsigned int i,j;

unsigned int color;

static int written;

/* Initialize the error handler of the JPEG decompression object */

cinfo.err = jpeg_std_error(&jerr); //initialize standard error to hold error messages

jpeg_create_decompress(&cinfo); //create decompression structure information

jpeg_mem_src(&cinfo, (unsigned char *)jpg_buffer, size);

//jpeg_stdio_src(&cinfo, infile);

/* Read jpeg header */

jpeg_read_header(&cinfo, TRUE);

/*Start decompression*/

jpeg_start_decompress(&cinfo);

#if 0

printf("JPEG image height: %d\n",cinfo.output_height);

printf("JPEG image width: %d\n",cinfo.output_width);

printf("JPEG image color bits (in byte units): %d\n",cinfo.output_components);

#endif

image_rgb->Height=cinfo.output_height;

image_rgb->Width=cinfo.output_width;

unsigned char *rgb_data=image_rgb->rgb;

/* Allocate storage space for pixel points on a scan line, decode one line at a time */

int row_stride = cinfo.output_width * cinfo.output_components;

buffer = (unsigned char *)malloc(row_stride);

// Copy the decoded image into the RGB buffer; cinfo.output_scanline is the current row and advances automatically as rows are read

i=0;

while(cinfo.output_scanline < cinfo.output_height)

{

// Read a line of data

jpeg_read_scanlines(&cinfo,&buffer,1);

memcpy(rgb_data + i * cinfo.output_width * 3, buffer, row_stride);

i++;

}

/* Completing decompression, destroying decompressed objects */

jpeg_finish_decompress(&cinfo); // End Decompression

jpeg_destroy_decompress(&cinfo); //free the space occupied by the structure

/* Release memory buffer */

free(buffer);

return 0;

}

4. GTK window rendering

Create a GTK window to scale the original image and render the image data in real time.

/*********************************************************************

**Function: scale a BMP picture (zoom in or out)

**image_rgb -- image structure information

**int lcd_width,int lcd_hight -- screen size

**Return value: 0 -- success; other values -- failure

*********************************************************************/

int ZoomInandOut(struct ImageDecodingInfo *image_rgb,int lcd_width,int lcd_hight)

{

//printf("Source image width:%d\n",image_rgb->Width);

//printf("Source image height:%d\n",image_rgb->Height);

u32 w=image_rgb->Width;

u32 h=image_rgb->Height;

u8 *src_rgb=image_rgb->rgb;//source image RGB value

unsigned long oneline_byte=w*3; // Number of bytes in a row

float zoom_count=0;

/* scaling */

zoom_count=(lcd_width/(w*1.0)) > (lcd_hight/(h*1.0)) ? (lcd_hight/(h*1.0)):(lcd_width/(w*1.0));

int new_w,new_h;

new_w=zoom_count*w;//new image width

new_h=zoom_count*h;//new image height

//printf("New image width: %d\n",new_w);

//printf("New picture height:%d\n", new_h);

//printf("Scaling:%.0f%%\n",(new_w*1.0/w)*100);

unsigned long new_oneline_byte=new_w*3;

unsigned char *newbmp_buff=(unsigned char *)malloc(new_h*new_oneline_byte);//dynamically allocate new picture RGB color data buffer

if(newbmp_buff==NULL)

{

printf("[%s line %d] dynamic space allocation failed\n",__FUNCTION__,__LINE__);

return -1;

}

memset(newbmp_buff, 0, new_h*new_oneline_byte);

/************************ image processing algorithm (bilinear interpolation) ************************/

int i,j;

for(i=0;i<new_h;i++)//new image height

{

for(j=0;j<new_w;j++)//new picture width

{

double d_original_img_h = i*h / (double)new_h;

double d_original_img_w = j*w / (double)new_w;

int i_original_img_h = d_original_img_h;

int i_original_img_w = d_original_img_w;

double distance_to_a_x = d_original_img_w - i_original_img_w;//horizontal distance from point a in the original image

double distance_to_a_y = d_original_img_h - i_original_img_h;//perpendicular distance from point a in the original image

int original_point_a = i_original_img_h*oneline_byte + i_original_img_w * 3;//array position offset, corresponding to the starting point of each pixel RGB of the image, equivalent to the point A

int original_point_b = i_original_img_h*oneline_byte + (i_original_img_w + 1) * 3;//array positional offset, corresponding to the starting point of each pixel of the image RGB, equivalent to point B

int original_point_c = (i_original_img_h + 1)*oneline_byte + i_original_img_w * 3;//array position offset, corresponds to the starting point of each pixel RGB of the image, equivalent to the point C

int original_point_d = (i_original_img_h + 1)*oneline_byte + (i_original_img_w + 1) * 3;//array position offset, corresponding to the starting point of each pixel RGB of the image, equivalent to the point D

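if (i_original_img_h + 1 >= (int)h) // last source row: there is no row below, so reuse the current row

{

original_point_c = original_point_a;

original_point_d = original_point_b;

}

if (i_original_img_w + 1 >= (int)w) // last source column: there is no column to the right, so reuse the current column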

{

original_point_b = original_point_a;

original_point_d = original_point_c;

}

int pixel_point = i*new_oneline_byte + j*3;//map scale transform image array position offset

newbmp_buff[pixel_point] =

src_rgb[original_point_a] * (1 - distance_to_a_x)*(1 - distance_to_a_y) +

src_rgb[original_point_b] * distance_to_a_x*(1 - distance_to_a_y) +

src_rgb[original_point_c] * distance_to_a_y*(1 - distance_to_a_x) +

src_rgb[original_point_d] * distance_to_a_y*distance_to_a_x;

newbmp_buff[pixel_point + 1] =

src_rgb[original_point_a + 1] * (1 - distance_to_a_x)*(1 - distance_to_a_y) +

src_rgb[original_point_b + 1] * distance_to_a_x*(1 - distance_to_a_y) +

src_rgb[original_point_c + 1] * distance_to_a_y*(1 - distance_to_a_x) +

src_rgb[original_point_d + 1] * distance_to_a_y*distance_to_a_x;

newbmp_buff[pixel_point + 2] =

src_rgb[original_point_a + 2] * (1 - distance_to_a_x)*(1 - distance_to_a_y) +

src_rgb[original_point_b + 2] * distance_to_a_x*(1 - distance_to_a_y) +

src_rgb[original_point_c + 2] * distance_to_a_y*(1 - distance_to_a_x) +

src_rgb[original_point_d + 2] * distance_to_a_y*distance_to_a_x;

}

}

memcpy(image_rgb->rgb,newbmp_buff,new_h*new_oneline_byte);//new image RGB data

image_rgb->Width=new_w;//new image width

image_rgb->Height=new_h;//new image height

free(newbmp_buff);

return 0;

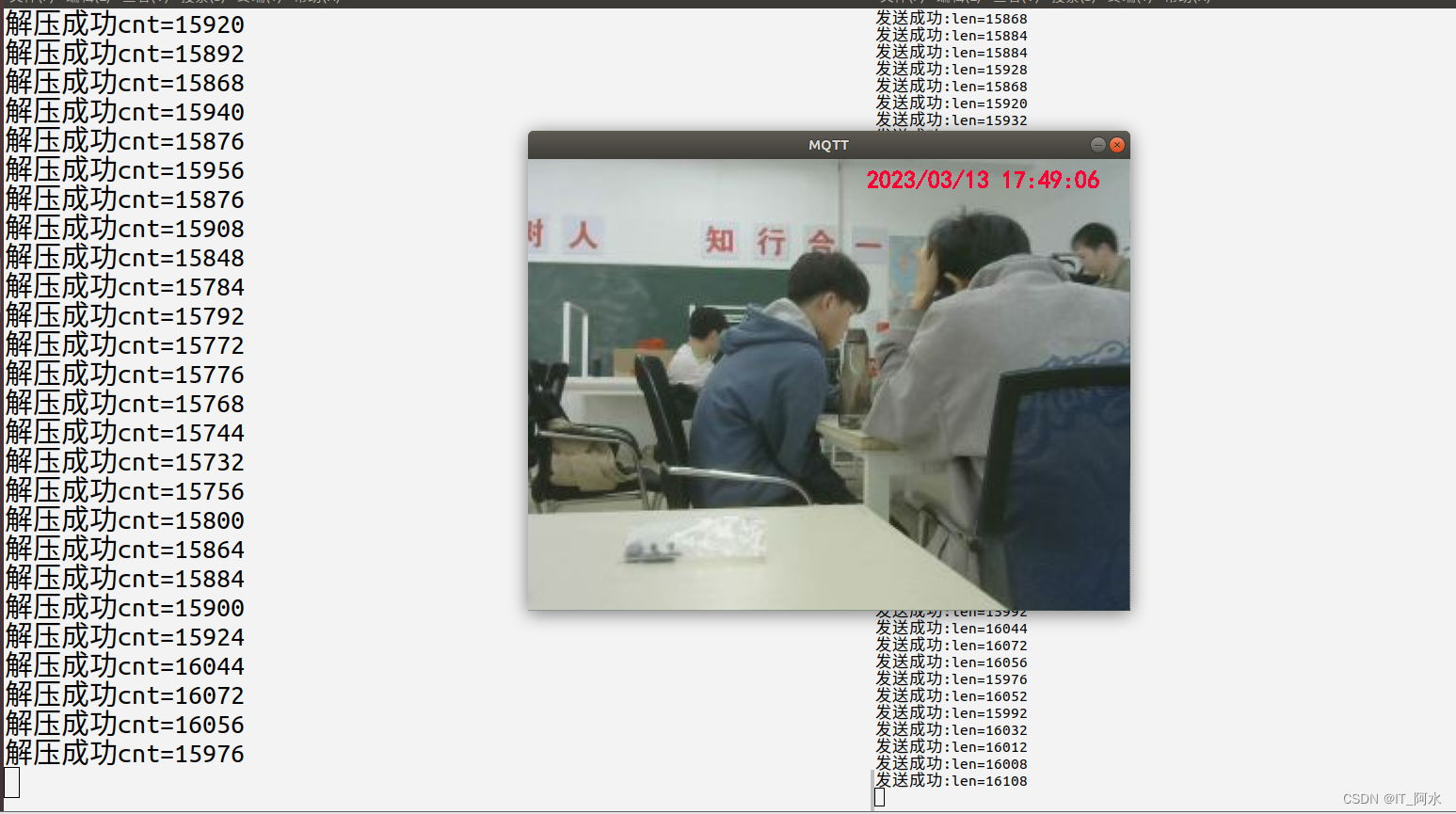

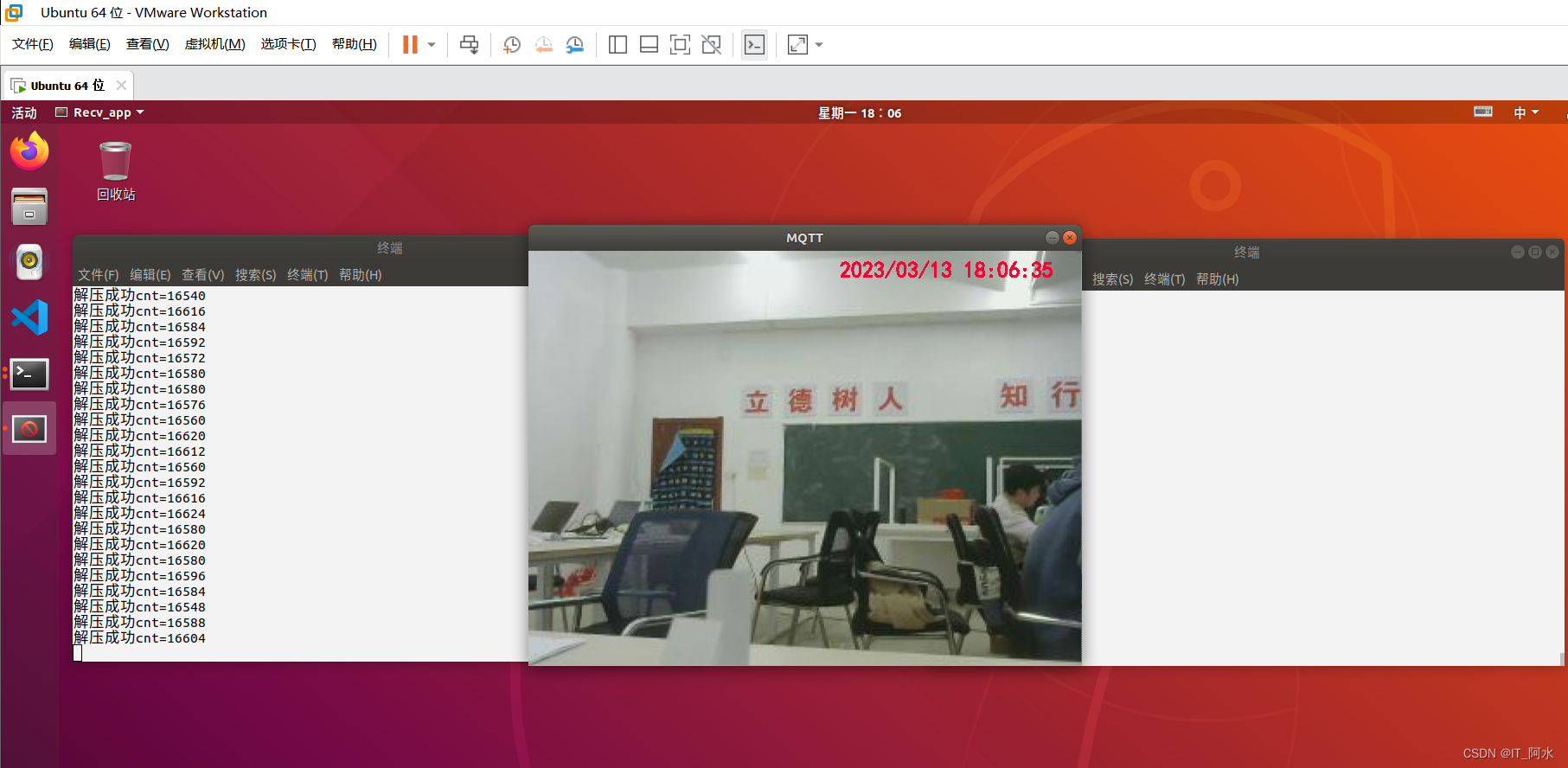

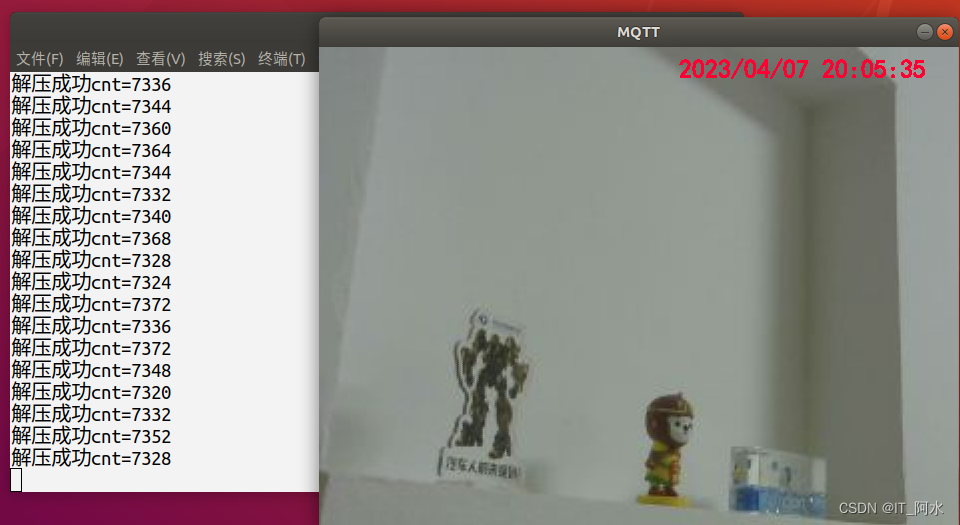

}6.3 Project Effectiveness

Images are captured on the Raspberry Pi and displayed on the computer:

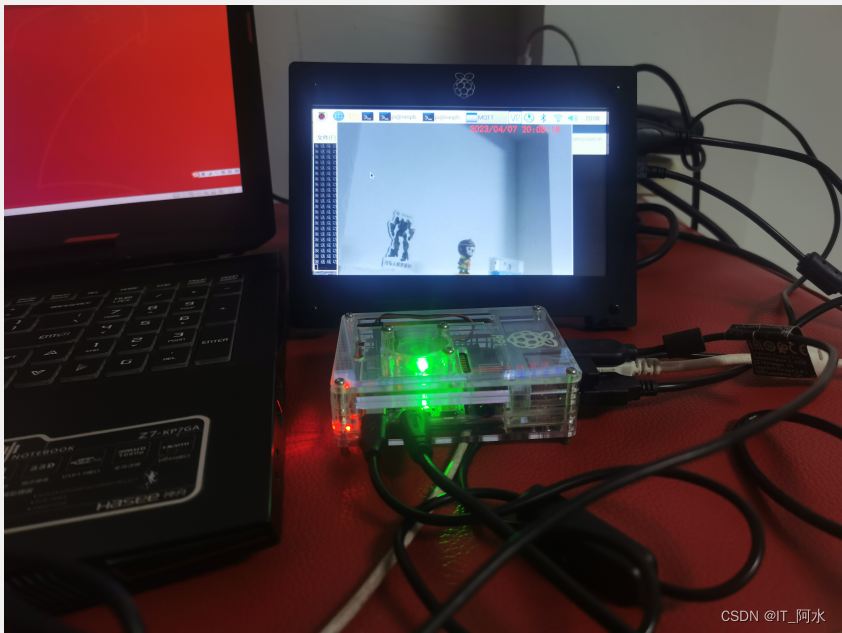

Running results on a Raspberry Pi:

Sample project: https://download.csdn.net/download/weixin_44453694/87575545