1 Whisper Introduction

OpenAI, the company behind the ChatGPT language model, has open-sourced Whisper, an automatic speech recognition system. According to OpenAI, Whisper approaches human-level robustness and accuracy on English speech recognition.

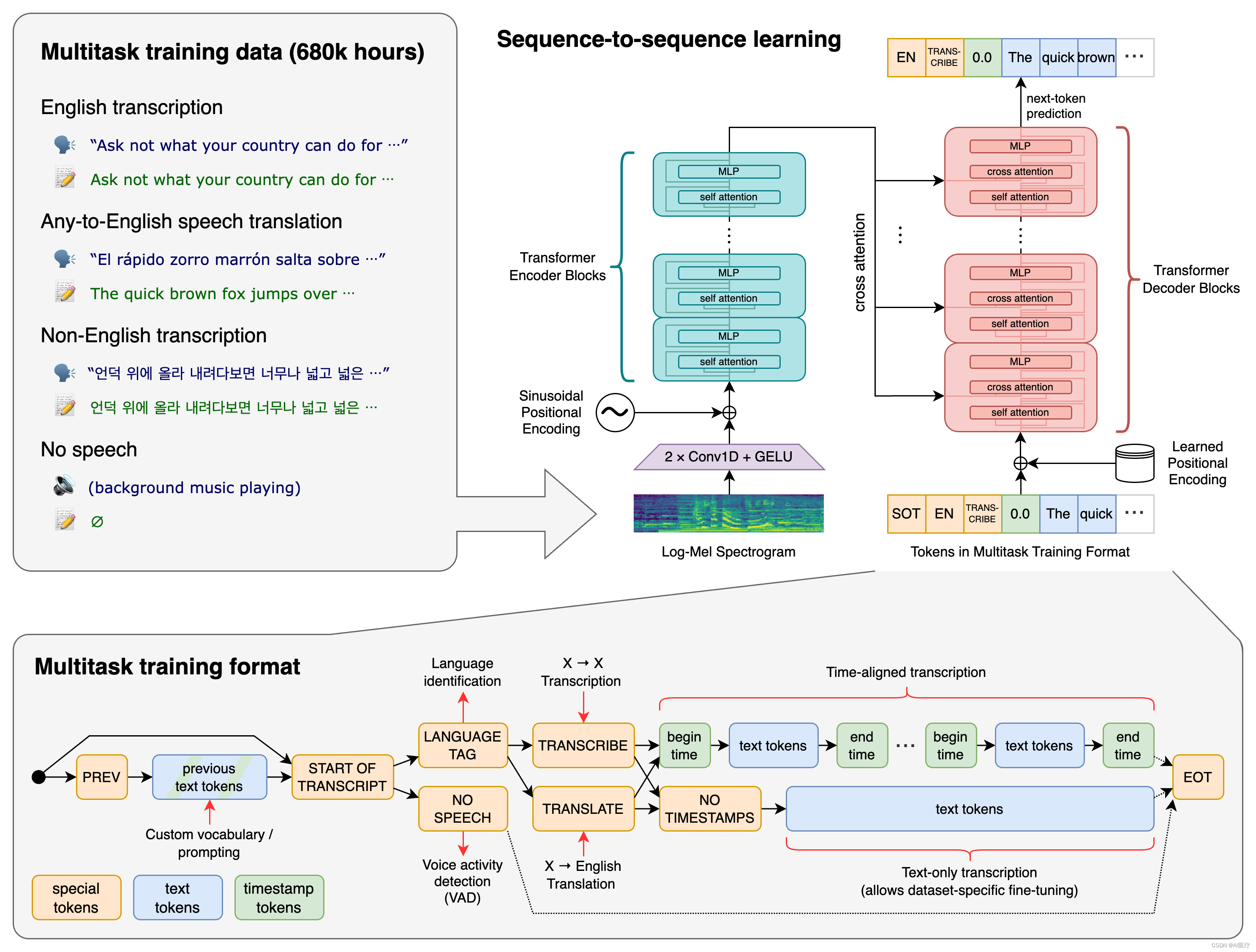

Whisper is a general-purpose speech recognition model trained on a large amount of multilingual, multitask supervised data, and it achieves near-human robustness and accuracy in English speech recognition. Whisper also handles multilingual speech recognition, speech translation, and language identification. Its architecture is a simple end-to-end approach: an encoder-decoder Transformer converts the input audio into the corresponding text sequence, and special tokens specify which task to perform.
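To make the role of the special tokens more concrete, here is a minimal sketch of how a task might be expressed as a token prefix for the decoder. The token strings follow the pattern used by the reference Whisper tokenizer, but the helper `build_decoder_prompt` is hypothetical and exists only for illustration; it is not part of the Whisper API.

```python
# Hypothetical helper: builds the sequence of special tokens that tells
# the decoder which language and task to use. Illustration only.
def build_decoder_prompt(language="en", task="transcribe", timestamps=True):
    if task not in ("transcribe", "translate"):
        raise ValueError(f"unknown task: {task!r}")
    tokens = [
        "<|startoftranscript|>",  # marks the beginning of the output
        f"<|{language}|>",        # language of the audio
        f"<|{task}|>",            # transcribe, or translate into English
    ]
    if not timestamps:
        # Without this token the decoder also predicts timestamp tokens.
        tokens.append("<|notimestamps|>")
    return tokens


if __name__ == "__main__":
    print(build_decoder_prompt("zh", "translate", timestamps=False))
```

The same model weights serve every task; only this prefix changes.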

OpenAI trained Whisper, an ASR (Automatic Speech Recognition) system, on 680,000 hours of multilingual (98 languages) and multitask supervised data collected from the web. OpenAI believes that using such a large and varied dataset improves the ability to recognize accents, background noise, and technical terms. In addition to speech recognition, Whisper can transcribe multiple languages and translate them into English. OpenAI is releasing the model and inference code in the hope that developers can use Whisper as a basis for building useful applications and for further research into speech processing technology.

Code Address:code address

2 Whisper Model

Whisper processes audio as follows:

The input audio is split into 30-second segments, converted to log-Mel spectrograms, and passed to an encoder. The decoder is trained to predict the corresponding text, intermixed with special tokens that direct the single model to perform tasks such as language identification, phrase-level timestamping, multilingual speech transcription, and speech translation.
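The segmentation step can be sketched in plain Python. The 30-second length comes from the description above; the 16 kHz sample rate and the hop of 160 samples (10 ms) are taken from the reference implementation and are assumptions as far as this article is concerned. The real pipeline works on arrays and slides its window according to predicted timestamps, so treat this as a simplified illustration rather than Whisper's actual code.

```python
SAMPLE_RATE = 16_000      # samples per second (assumption, see lead-in)
CHUNK_SECONDS = 30        # segment length stated in the article
HOP_LENGTH = 160          # samples between spectrogram frames (assumption)
N_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS   # 480,000 samples per segment
N_FRAMES = N_SAMPLES // HOP_LENGTH        # 3,000 spectrogram frames


def split_into_chunks(samples, chunk_size=N_SAMPLES):
    """Split a sequence of samples into fixed-size chunks.

    The last chunk is zero-padded so every chunk has the same length.
    """
    chunks = []
    for start in range(0, len(samples), chunk_size):
        chunk = list(samples[start:start + chunk_size])
        chunk.extend([0.0] * (chunk_size - len(chunk)))  # pad with silence
        chunks.append(chunk)
    return chunks


if __name__ == "__main__":
    # A 70-second signal of silence yields three 30-second chunks.
    audio = [0.0] * (70 * SAMPLE_RATE)
    print(len(split_into_chunks(audio)), "chunks of", N_FRAMES, "frames each")
```

Each chunk would then be converted to a log-Mel spectrogram before reaching the encoder; that step is omitted here.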

Other existing approaches typically use smaller, more closely paired audio-text training datasets, or broad but unsupervised audio pretraining. Because Whisper was trained on a large and diverse dataset and was not fine-tuned to any particular one, it does not beat models that specialize in LibriSpeech, a well-known speech recognition benchmark. However, when the researchers measured Whisper's zero-shot performance (that is, without retraining on the new dataset) across many different datasets, they found it much more robust than those models, making 50 percent fewer errors.
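Speech recognition errors are commonly counted with the word error rate (WER): the word-level edit distance between the reference and the hypothesis, divided by the number of reference words. The sketch below assumes that "fewer errors" refers to this kind of metric; the example sentences and the numbers passed to `relative_error_reduction` are made up for illustration and are not results from the Whisper evaluation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the processed prefix of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def relative_error_reduction(baseline_wer: float, new_wer: float) -> float:
    """Fraction by which the error rate dropped relative to the baseline."""
    return (baseline_wer - new_wer) / baseline_wer


if __name__ == "__main__":
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
    # Hypothetical numbers: going from 20% to 10% WER halves the errors.
    print(relative_error_reduction(0.20, 0.10))
```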

3 Whisper Model Sizes

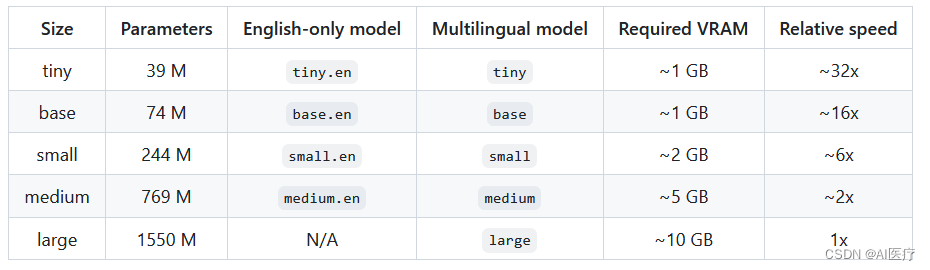

Whisper is available in five model sizes that trade off speed against accuracy; four of them also come in an English-only version. Below are the names of the available models, their approximate memory requirements, and relative speeds.

The official download addresses for the models:

"tiny.en": "https://openaipublic.azureedge.net/main/whisper/models/d3dd57d32accea0b295c96e26691aa14d8822fac7d9d27d5dc00b4ca2826dd03/tiny.en.pt",

"tiny": "https://openaipublic.azureedge.net/main/whisper/models/65147644a518d12f04e32d6f3b26facc3f8dd46e5390956a9424a650c0ce22b9/tiny.pt",

"base.en": "https://openaipublic.azureedge.net/main/whisper/models/25a8566e1d0c1e2231d1c762132cd20e0f96a85d16145c3a00adf5d1ac670ead/base.en.pt",

"base": "https://openaipublic.azureedge.net/main/whisper/models/ed3a0b6b1c0edf879ad9b11b1af5a0e6ab5db9205f891f668f8b0e6c6326e34e/base.pt",

"small.en": "https://openaipublic.azureedge.net/main/whisper/models/f953ad0fd29cacd07d5a9eda5624af0f6bcf2258be67c92b79389873d91e0872/small.en.pt",

"small": "https://openaipublic.azureedge.net/main/whisper/models/9ecf779972d90ba49c06d968637d720dd632c55bbf19d441fb42bf17a411e794/small.pt",

"medium.en": "https://openaipublic.azureedge.net/main/whisper/models/d7440d1dc186f76616474e0ff0b3b6b879abc9d1a4926b7adfa41db2d497ab4f/medium.en.pt",

"medium": "https://openaipublic.azureedge.net/main/whisper/models/345ae4da62f9b3d59415adc60127b97c714f32e89e936602e85993674d08dcb1/medium.pt",

"large-v1": "https://openaipublic.azureedge.net/main/whisper/models/e4b87e7e0bf463eb8e6956e646f1e277e901512310def2c24bf0e11bd3c28e9a/large-v1.pt",

"large-v2": "https://openaipublic.azureedge.net/main/whisper/models/81f7c96c852ee8fc832187b0132e569d6c3065a3252ed18e56effd0b6a73e524/large-v2.pt",

"large": "https://openaipublic.azureedge.net/main/whisper/models/81f7c96c852ee8fc832187b0132e569d6c3065a3252ed18e56effd0b6a73e524/large-v2.pt",4 Installation of the runtime environment and use of whisper

(1) Conda environment installation

See also: Anaconda installation

(2) Whisper environment construction

conda create -n whisper python==3.9

conda activate whisper

pip install openai-whisper

conda install ffmpeg

pip install setuptools-rust

(3) Whisper use
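Before the first run, it can help to confirm that the tools installed in the previous step are visible from the active environment. The following sketch uses only the Python standard library; the function name `check_environment` is hypothetical, and the check only verifies that the pieces can be found, not that they work correctly.

```python
import importlib.util
import shutil


def check_environment():
    """Report whether ffmpeg and Whisper can be found in this environment."""
    return {
        # ffmpeg is used to decode the input audio files
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # the `whisper` command-line entry point
        "whisper_cli": shutil.which("whisper") is not None,
        # the importable `whisper` Python package
        "whisper_module": importlib.util.find_spec("whisper") is not None,
    }


if __name__ == "__main__":
    for name, found in check_environment().items():
        print(f"{name}: {'found' if found else 'missing'}")
```

If any entry is reported as missing, re-check that the `whisper` conda environment is activated.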

whisper /opt/000001.wav --model base

The output is as follows:

[00:00.000 --> 00:02.560] Artificial Intelligence Recognition System.

When the command is executed, the model is downloaded automatically. The downloaded model is stored in the following path:

~/.cache/whisper

It is also possible to specify a local model directory on the command line:

whisper /opt/000001.wav --model base --model_dir /opt/models --language Chinese

Supported file formats: m4a, mp3, mp4, mpeg, mpga, wav, webm
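When a model file is placed in a local directory by hand, it is worth checking that the download is intact. Each official download address in section 3 contains a long hexadecimal path segment, which the reference implementation treats as the SHA-256 digest of the file. The sketch below relies on that assumption; the helper names are hypothetical and the local path in the usage example is a placeholder.

```python
import hashlib
from pathlib import Path

# One of the official download addresses listed in section 3.
TINY_EN_URL = (
    "https://openaipublic.azureedge.net/main/whisper/models/"
    "d3dd57d32accea0b295c96e26691aa14d8822fac7d9d27d5dc00b4ca2826dd03/tiny.en.pt"
)


def expected_sha256(url: str) -> str:
    """Return the path segment just before the file name (assumed digest)."""
    return url.split("/")[-2]


def file_sha256(path, block_size: int = 1 << 20) -> str:
    """Compute the SHA-256 digest of a file, reading it in blocks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for block in iter(lambda: handle.read(block_size), b""):
            digest.update(block)
    return digest.hexdigest()


def verify_model(path, url: str) -> bool:
    """True if the local file's digest matches the one embedded in the URL."""
    return file_sha256(path) == expected_sha256(url)


if __name__ == "__main__":
    local_file = Path("/opt/models/tiny.en.pt")  # placeholder location
    if local_file.exists():
        print("checksum ok:", verify_model(local_file, TINY_EN_URL))
    else:
        print("no local model file at", local_file)
```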

(4) Use in code

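import whisper

# Load the "base" model (downloaded to ~/.cache/whisper on first use)
model = whisper.load_model("base")

# Transcribe the audio file
result = model.transcribe("/opt/000001.wav")

# Print the full transcript
print(result["text"])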
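Besides `result["text"]`, the dictionary returned by `transcribe` also contains a `"segments"` list whose entries carry `start`, `end`, and `text` fields. The sketch below formats such segments in the same style as the command-line output shown earlier. The helper functions are hypothetical, and the sample dictionary is hand-written to mirror that output so the example runs without loading a model.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as MM:SS.mmm (minutes keep counting past 59)."""
    millis = round(seconds * 1000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{minutes:02d}:{secs:02d}.{millis:03d}"


def format_segments(result: dict) -> list:
    """Turn the segments of a transcription result into printable lines."""
    lines = []
    for segment in result["segments"]:
        start = format_timestamp(segment["start"])
        end = format_timestamp(segment["end"])
        lines.append(f"[{start} --> {end}] {segment['text'].strip()}")
    return lines


if __name__ == "__main__":
    # Hand-written stand-in for the value returned by model.transcribe(...)
    sample_result = {
        "text": " Artificial Intelligence Recognition System.",
        "segments": [
            {"start": 0.0, "end": 2.56,
             "text": " Artificial Intelligence Recognition System."},
        ],
    }
    for line in format_segments(sample_result):
        print(line)
```

With a real model, passing the `result` from the snippet above to `format_segments` would produce one line per recognized segment.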