PyTorch Deep Learning in Action (3) – Building Neural Networks with PyTorch

0. Preface

We have learned how to build neural networks from scratch. A neural network usually includes basic components such as an input layer, hidden layers, an output layer, activation functions, a loss function, and a learning rate. In this section, we will use PyTorch to build a neural network on a simple dataset, and update the network weights using tensor operations and gradient computation.

1. A first look at building neural networks with PyTorch

1.1 Building Neural Networks with PyTorch

To introduce how to build a neural network with PyTorch, we will try to solve the problem of adding two numbers.

(1) Initialize the dataset and define the input (x) and output (y) values:

import torch

x = [[1,2],[3,4],[5,6],[7,8]]

y = [[3],[7],[11],[15]]

In the input and output variables initialized above, the sum of the values in each input list is the corresponding value in the output list.

(2) Convert the input lists into tensor objects:

X = torch.tensor(x).float()
Y = torch.tensor(y).float()

The code above converts the tensor objects to floating-point tensors. Next, register the input (X) and output (Y) data points to the device:

device = 'cuda' if torch.cuda.is_available() else 'cpu'

X = X.to(device)

Y = Y.to(device)

(3) Define the neural network architecture.

The torch.nn module is used to build neural network models:

from torch import nn

Create the neural network architecture class MyNeuralNet, which inherits from nn.Module, the base class for all neural network modules:

class MyNeuralNet(nn.Module):

Inside the class, the __init__ method initializes all the components of the neural network. Calling super().__init__() ensures that the class inherits from nn.Module:

    def __init__(self):
        super().__init__()

Calling super().__init__() makes all of the pre-built functionality of nn.Module available, so the components initialized here can be used by the other methods of the MyNeuralNet class.

Define the layers of the neural network:

        self.input_to_hidden_layer = nn.Linear(2,8)
        self.hidden_layer_activation = nn.ReLU()
        self.hidden_to_output_layer = nn.Linear(8,1)

The code above specifies all the layers of the neural network: a fully connected layer (self.input_to_hidden_layer), followed by a ReLU activation (self.hidden_layer_activation), and finally another fully connected layer (self.hidden_to_output_layer).

Connect the initialized neural network components together and define the forward propagation method of the network, forward:

    def forward(self, x):
        x = self.input_to_hidden_layer(x)
        x = self.hidden_layer_activation(x)
        x = self.hidden_to_output_layer(x)
        return x

The forward-propagation function must be named forward, because PyTorch reserves this name for the method that performs forward propagation; if the method is given any other name, calling the model raises an error.
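The naming requirement can be checked with a small experiment. The sketch below is illustrative only: the class names WithForward and WithoutForward and the misnamed method predict are hypothetical and do not appear in the original code. It assumes that calling a module whose forward method is missing raises NotImplementedError, which is the behavior of nn.Module in current PyTorch releases.

```python
import torch
from torch import nn

class WithForward(nn.Module):
    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(2, 1)

    def forward(self, x):
        return self.layer(x)

class WithoutForward(nn.Module):
    def __init__(self):
        super().__init__()
        self.layer = nn.Linear(2, 1)

    # Deliberately misnamed: nn.Module does not look for "predict"
    # when the model object is called.
    def predict(self, x):
        return self.layer(x)

x = torch.tensor([[1.0, 2.0]])

# Calling the module object dispatches to forward()
ok_output = WithForward()(x)
print(ok_output.shape)

try:
    WithoutForward()(x)
    raised = False
except NotImplementedError:
    raised = True
print(raised)
```

Calling the model object (rather than calling forward directly) is the usual pattern, which is why the method name matters.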

Print an nn.Linear layer to see what it contains:

print(nn.Linear(2, 7))
# Linear(in_features=2, out_features=7, bias=True)

In the code above, the fully connected layer takes 2 values as input, outputs 7 values, and also contains the associated bias parameter.
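To make the role of the weights and bias concrete, the following sketch reproduces the output of a fully connected layer by hand. It assumes the documented behavior of nn.Linear, which stores its weight with shape (out_features, in_features) and computes x @ weight.T + bias; the variable names are illustrative.

```python
import torch
from torch import nn

torch.manual_seed(0)
layer = nn.Linear(2, 7)

x = torch.tensor([[1.0, 2.0], [3.0, 4.0]])
out = layer(x)

# The weight has shape (out_features, in_features), the bias (out_features,)
print(layer.weight.shape)
print(layer.bias.shape)

# Reproduce the layer output with an explicit matrix product
manual = x @ layer.weight.T + layer.bias
print(torch.allclose(out, manual))
```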

(4) Access the initial weights of each neural network component by executing the following code.

Create an instance of the MyNeuralNet class and register it to the device:

mynet = MyNeuralNet().to(device)

The weights and biases of each layer can be accessed with code like the following:

print(mynet.input_to_hidden_layer.weight)

The output of the code is as follows:

Parameter containing:

tensor([[ 0.0984, 0.3058],

[ 0.2913, -0.3629],

[ 0.0630, 0.6347],

[-0.5134, -0.2525],

[ 0.2315, 0.3591],

[ 0.1506, 0.1106],

[ 0.2941, -0.0094],

[-0.0770, -0.4165]], device='cuda:0', requires_grad=True)

The output differs on each run because the neural network is initialized with random values every time. To get the same output each time the code is executed, set a random seed with Torch's manual_seed method, for example torch.manual_seed(0), before creating the instance of the class.
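The effect of the seed can be verified directly. In this minimal sketch, the helper make_layer is a hypothetical name introduced for illustration; it sets the seed and then creates a layer, so two calls with the same seed should produce identical initial weights (on the CPU, which is what the sketch uses).

```python
import torch
from torch import nn

def make_layer(seed):
    # Seed the random number generator right before the layer is created
    torch.manual_seed(seed)
    return nn.Linear(2, 8)

a = make_layer(0)
b = make_layer(0)
c = make_layer(1)

same_seed_equal = torch.equal(a.weight, b.weight)
diff_seed_equal = torch.equal(a.weight, c.weight)
print(same_seed_equal, diff_seed_equal)
```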

(5) All the parameters of the neural network can be obtained with the following code:

mynet.parameters()

The code above returns a generator object; loop over the generator to get the parameters:

for param in mynet.parameters():
    print(param)

The output of the code is as follows:

Parameter containing:

tensor([[ 0.2955, 0.3231],

[ 0.5153, 0.1734],

[-0.6359, -0.1406],

[ 0.3820, -0.1085],

[ 0.2816, -0.2283],

[ 0.4633, 0.6564],

[-0.1605, -0.4450],

[ 0.0788, -0.0147]], device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-0.4761, 0.6370, 0.6744, -0.4103, -0.3714, 0.1491, -0.2304, 0.5571],

device='cuda:0', requires_grad=True)

Parameter containing:

tensor([[-0.0440, 0.0028, 0.3024, 0.1915, 0.1426, -0.2859, -0.2398, -0.2134]],

device='cuda:0', requires_grad=True)

Parameter containing:

tensor([-0.3238], device='cuda:0', requires_grad=True)

The model registers these tensors as special objects needed to track forward and backward propagation. When an nn layer is defined in the __init__ method, the corresponding tensors are created and registered automatically. These parameters can also be registered manually with nn.Parameter(<tensor>). The MyNeuralNet class defined in this section is therefore roughly equivalent to the following code (which omits the bias terms):

class MyNeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        # nn.Parameter registers a tensor as a trainable parameter of the module
        self.input_to_hidden_layer = nn.Parameter(torch.rand(2,8))
        self.hidden_layer_activation = nn.ReLU()
        self.hidden_to_output_layer = nn.Parameter(torch.rand(8,1))
    def forward(self, x):
        x = x @ self.input_to_hidden_layer
        x = self.hidden_layer_activation(x)
        x = x @ self.hidden_to_output_layer
        return x

(6) Define the loss function. Since we are predicting a continuous output, use the mean squared error as the loss function:

loss_func = nn.MSELoss()

Pass the input values to the neural network object, then calculate the value of the loss function for the given inputs:

_Y = mynet(X)
loss_value = loss_func(_Y,Y)
print(loss_value)
# tensor(127.4498, device='cuda:0', grad_fn=<MseLossBackward>)

In the code above, mynet(X) computes the output values for the given inputs by passing them through the neural network, and loss_func computes the MSELoss value between the network's predictions (_Y) and the actual values (Y). Note that, by PyTorch convention, the predictions are passed first and the actual target values second when calculating the loss.
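As a quick check of what nn.MSELoss computes, the sketch below compares it with the mean of the squared differences written out by hand. The prediction values are made up for illustration and are not the output of the model in this section.

```python
import torch
from torch import nn

# Hypothetical predictions, chosen only to make the arithmetic easy to follow
pred = torch.tensor([[2.5], [6.0], [12.0], [14.0]])
target = torch.tensor([[3.0], [7.0], [11.0], [15.0]])

builtin = nn.MSELoss()(pred, target)

# Squared errors are 0.25, 1, 1, 1, so the mean is 3.25 / 4 = 0.8125
manual = ((pred - target) ** 2).mean()

print(builtin.item(), manual.item())
```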

(7) Define the optimizer used to reduce the loss value. The optimizer takes the parameters of the neural network (weights and biases) and the learning rate used when updating the weights. In this section, we use stochastic gradient descent (SGD). Import the SGD class from the torch.optim module, then pass the parameters of the neural network object (mynet.parameters()) and the learning rate (lr) to it:

from torch.optim import SGD
opt = SGD(mynet.parameters(), lr = 0.001)

(8) The training process for one epoch consists of the following steps:

- Calculate the loss value for the given inputs and outputs

- Calculate the gradient of the loss with respect to each parameter

- Update the weights according to the learning rate and the gradient of each parameter

- After updating the weights, clear the gradients calculated in the previous step before calculating the gradients in the next epoch

opt.zero_grad()
loss_value = loss_func(mynet(X),Y)
loss_value.backward()
opt.step()

Use a for loop to repeat the steps above. The following code runs 50 epochs and stores the loss value of each epoch in the loss_history list:

loss_history = []

for _ in range(50):

opt.zero_grad()

loss_value = loss_func(mynet(X),Y)

loss_value.backward()

opt.step()

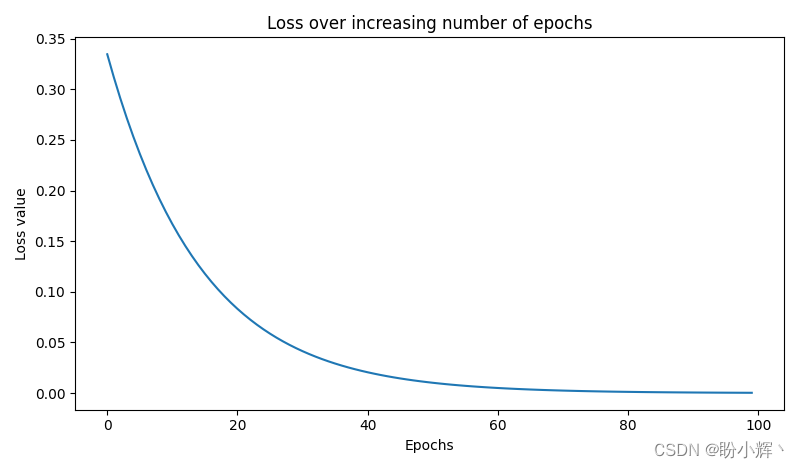

loss_history.append(loss_value)Plotting losses as a function ofepoch The changes in the situation:

import matplotlib.pyplot as plt

plt.plot(loss_history)

plt.title('Loss variation over increasing epochs')

plt.xlabel('epochs')

plt.ylabel('loss value')

plt.show()
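The weight update performed by opt.step() in the training loop above can also be written by hand. The sketch below is a simplified illustration, not the training code of this section: it uses a single nn.Linear(2, 1) layer as a stand-in model and assumes plain SGD without momentum or weight decay, for which the update is param = param - lr * grad.

```python
import copy
import torch
from torch import nn
from torch.optim import SGD

torch.manual_seed(0)
X = torch.tensor([[1., 2.], [3., 4.], [5., 6.], [7., 8.]])
Y = torch.tensor([[3.], [7.], [11.], [15.]])
lr = 0.001
loss_func = nn.MSELoss()

net_opt = nn.Linear(2, 1)             # updated by the optimizer
net_manual = copy.deepcopy(net_opt)   # updated by hand, same initial weights

# One step with the optimizer
opt = SGD(net_opt.parameters(), lr=lr)
opt.zero_grad()
loss_func(net_opt(X), Y).backward()
opt.step()

# The same step written out: param <- param - lr * grad
loss_func(net_manual(X), Y).backward()
with torch.no_grad():
    for p in net_manual.parameters():
        p -= lr * p.grad

match = all(
    torch.allclose(p, q)
    for p, q in zip(net_opt.parameters(), net_manual.parameters())
)
print(match)
```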

1.2 Neural network data loading

Batch size is an important hyperparameter in neural networks: it is the number of data samples considered when calculating the loss value or updating the weights. If a dataset contains millions of data samples, using all of them at once for a single weight update is not optimal, because memory may not be able to hold that much data. Since a sample can adequately represent the data, we can use a batch of data points that is sufficiently representative instead. In this section, we specify the batch size used when computing the weight gradients, update the weights, and then compute the updated loss values.
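Conceptually, batching just means visiting the dataset in small, optionally shuffled groups. The following pure-Python sketch illustrates the idea; the function iterate_batches and its seed argument are hypothetical names used only here, and the sketch is not how PyTorch implements batching internally.

```python
import random

def iterate_batches(samples, batch_size, shuffle=True, seed=0):
    """Yield lists of at most batch_size samples, optionally in shuffled order."""
    indices = list(range(len(samples)))
    if shuffle:
        random.Random(seed).shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [samples[i] for i in indices[start:start + batch_size]]

data = [[1, 2], [3, 4], [5, 6], [7, 8]]
batches = list(iterate_batches(data, batch_size=2))

for batch in batches:
    print(batch)
```

Every sample appears in exactly one batch per pass over the data, so one epoch with a batch size of 2 on 4 samples consists of two batches.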

(1) Import the methods used to load the data and process the dataset:

from torch.utils.data import Dataset, DataLoader

import torch

import torch.nn as nn

(2) Import the data, convert it to floating-point tensors, and register it to the appropriate device:

x = [[1,2],[3,4],[5,6],[7,8]]

y = [[3],[7],[11],[15]]

X = torch.tensor(x).float()

Y = torch.tensor(y).float()

device = 'cuda' if torch.cuda.is_available() else 'cpu'

X = X.to(device)

Y = Y.to(device)

(3) Create the dataset class MyDataset:

class MyDataset(Dataset):

The MyDataset class stores the data so that a batch of data points can be bundled together (using DataLoader) and passed through one forward and one backward propagation to update the weights.

Define the __init__ method, which takes the input and output pairs and stores them as Torch float tensors:

    def __init__(self,x,y):
        self.x = x.clone().detach() # torch.tensor(x).float()
        self.y = y.clone().detach() # torch.tensor(y).float()

Specify the length of the input dataset (__len__):

    def __len__(self):
        return len(self.x)

The __getitem__ method returns the data sample at a given index:

    def __getitem__(self, ix):
        return self.x[ix], self.y[ix]

In the code above, ix is the index of the data sample to fetch from the dataset.

(4) Create an instance of the custom class:

ds = MyDataset(X, Y)

(5) Pass the dataset instance to DataLoader to fetch batch_size data points at a time from the original input and output tensor objects:

dl = DataLoader(ds, batch_size=2, shuffle=True)

The code above specifies that two (batch_size=2) randomly sampled (shuffle=True) data points are fetched from the original dataset (ds) at a time.

Loop over dl to get the batches of data:

for x, y in dl:
    print(x, y)

The output is shown below:

tensor([[3., 4.],

[5., 6.]], device='cuda:0') tensor([[ 7.],

[11.]], device='cuda:0')

tensor([[1., 2.],

[7., 8.]], device='cuda:0') tensor([[ 3.],

[15.]], device='cuda:0')

You can see that the code above produces two batches of input-output pairs, because the original dataset has 4 data points in total and the specified batch size is 2.
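The relationship between dataset size, batch size, and the number of batches can be inspected with len() on the data loader. This sketch swaps in the built-in TensorDataset in place of the custom MyDataset class purely to keep the example short, and it runs on the CPU.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

X = torch.tensor([[1., 2.], [3., 4.], [5., 6.], [7., 8.]])
Y = torch.tensor([[3.], [7.], [11.], [15.]])
ds = TensorDataset(X, Y)

# Number of batches per epoch for several batch sizes
batches_per_epoch = {
    bs: len(DataLoader(ds, batch_size=bs, shuffle=True)) for bs in (1, 2, 3, 4)
}
print(batches_per_epoch)

# When the batch size does not divide the dataset size, the last batch is smaller
last_batch_sizes = [xb.shape[0] for xb, yb in DataLoader(ds, batch_size=3)]
print(last_batch_sizes)
```

With the default drop_last=False, the final partial batch is kept, which is why a batch size of 3 still yields two batches here.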

(6) Define the neural network class:

class MyNeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.input_to_hidden_layer = nn.Linear(2,8)
        self.hidden_layer_activation = nn.ReLU()
        self.hidden_to_output_layer = nn.Linear(8,1)
    def forward(self, x):
        x = self.input_to_hidden_layer(x)
        x = self.hidden_layer_activation(x)
        x = self.hidden_to_output_layer(x)
        return x

(7) Define the model object (mynet), the loss function (loss_func), and the optimizer (opt):

mynet = MyNeuralNet().to(device)

loss_func = nn.MSELoss()

from torch.optim import SGD

opt = SGD(mynet.parameters(), lr = 0.001)

(8) Finally, loop over the batches of data points to minimize the loss value:

import time
loss_history = []
start = time.time()
for _ in range(50):
    for data in dl:
        x, y = data
        opt.zero_grad()
        loss_value = loss_func(mynet(x),y)
        loss_value.backward()
        opt.step()
        # store a plain Python float rather than a tensor attached to the graph
        loss_history.append(loss_value.item())
end = time.time()
print(end - start)
# 0.08548569679260254

Although the code above is very similar to the code in the previous subsection, the number of weight updates per epoch is now twice as large: the batch size used in this section is 2, while the batch size in the previous subsection was 4 (that is, all data points were used at once).

1.3 Model testing

In the previous subsection, we learned how to fit a model to known data points. In this section, we will use the forward method of the mynet model trained in the previous subsection to make predictions on data points the model has not seen (test data).

(1) Create data points for testing the model:

val_x = [[10,11]]

Like the input dataset, the new dataset (val_x) is a list of lists.

(2) Convert the new data points to a float tensor object and register it to the device:

val_x = torch.tensor(val_x).float().to(device)

(3) Pass the tensor object through the trained neural network (mynet), exactly as when performing forward propagation through the model:

print(mynet(val_x))
# tensor([[20.0105]], device='cuda:0', grad_fn=<AddmmBackward>)

The code above returns the model's predicted output for the input data point.
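The grad_fn in the output above shows that PyTorch still tracks the computation graph during prediction. When only predictions are needed, a common pattern is to wrap the call in torch.no_grad(). The sketch below uses an untrained nn.Sequential model as a stand-in for mynet, so the predicted numbers themselves are meaningless; it only shows the difference in gradient tracking.

```python
import torch
from torch import nn

torch.manual_seed(0)
# Untrained stand-in for the trained model of this section
model = nn.Sequential(nn.Linear(2, 8), nn.ReLU(), nn.Linear(8, 1))
val_x = torch.tensor([[10.0, 11.0]])

# Regular call: the result is attached to the computation graph
with_graph = model(val_x)

# Inference call: switch to eval mode and disable gradient tracking
model.eval()
with torch.no_grad():
    without_graph = model(val_x)

print(with_graph.requires_grad, without_graph.requires_grad)
```

The predicted values are the same in both cases; only the bookkeeping for backpropagation is skipped.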

1.4 Getting the values of intermediate layers

In practical applications such as style transfer and transfer learning, we may need the values of the intermediate layers of a neural network. PyTorch provides two ways to obtain them.

One approach is to call the neural network layers directly, using them as functions:

print(mynet.hidden_layer_activation(mynet.input_to_hidden_layer(X)))

Note that the layers must be called in the same order as the data flows through the model. In the code above, for example, the output of input_to_hidden_layer is the input to the hidden_layer_activation layer.
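The following self-contained sketch illustrates the first approach on an untrained copy of the network (so the values are random). It checks that the hidden values have one 8-dimensional row per data point, that they are non-negative after ReLU, and that feeding them to the last layer reproduces the full forward pass.

```python
import torch
from torch import nn

torch.manual_seed(0)

class MyNeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.input_to_hidden_layer = nn.Linear(2, 8)
        self.hidden_layer_activation = nn.ReLU()
        self.hidden_to_output_layer = nn.Linear(8, 1)

    def forward(self, x):
        x = self.input_to_hidden_layer(x)
        x = self.hidden_layer_activation(x)
        return self.hidden_to_output_layer(x)

mynet = MyNeuralNet()
X = torch.tensor([[1., 2.], [3., 4.], [5., 6.], [7., 8.]])

# Call the layers one after another, in the order the data flows
hidden = mynet.hidden_layer_activation(mynet.input_to_hidden_layer(X))
output_from_hidden = mynet.hidden_to_output_layer(hidden)

print(hidden.shape)
print(torch.allclose(output_from_hidden, mynet(X)))
```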

The other way is to have the forward method return the layer values you want to inspect. The following class is essentially the same as MyNeuralNet in the previous subsection, but its forward method returns not only the output but also the activated hidden layer values (hidden2):

class MyNeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.input_to_hidden_layer = nn.Linear(2,8)
        self.hidden_layer_activation = nn.ReLU()
        self.hidden_to_output_layer = nn.Linear(8,1)
    def forward(self, x):
        hidden1 = self.input_to_hidden_layer(x)
        hidden2 = self.hidden_layer_activation(hidden1)
        x = self.hidden_to_output_layer(hidden2)
        return x, hidden2

Access the hidden layer values with the following code, where mynet is an instance of the class above. The element at index 0 of the returned tuple is the final output of forward propagation, and the element at index 1 is the hidden layer value after activation:

print(mynet(X)[1])

2. Using Sequential Classes to Build Neural Networks

We have learned how to build a neural network by defining a class that specifies the layers and how they are connected to each other. However, unless a complex network is needed, it is easier to use the Sequential class and simply specify the layers in the order in which they are stacked. This section again trains the neural network on a simple dataset.

(1) Import the relevant libraries and define the devices used:

import torch

import torch.nn as nn

import numpy as np

from torch.utils.data import Dataset, DataLoader

device = 'cuda' if torch.cuda.is_available() else 'cpu'

(2) Define the dataset and the dataset class (MyDataset):

x = [[1,2],[3,4],[5,6],[7,8]]

y = [[3],[7],[11],[15]]

class MyDataset(Dataset):
    def __init__(self, x, y):
        self.x = torch.tensor(x).float().to(device)
        self.y = torch.tensor(y).float().to(device)
    def __getitem__(self, ix):
        return self.x[ix], self.y[ix]
    def __len__(self):
        return len(self.x)

(3) Define the dataset (ds) and data loader (dl) objects:

ds = MyDataset(x, y)
dl = DataLoader(ds, batch_size=2, shuffle=True)

(4) Use the Sequential class of the nn module to define the model architecture:

model = nn.Sequential(
    nn.Linear(2, 8),
    nn.ReLU(),
    nn.Linear(8, 1)
).to(device)

In the code above, we define the same network architecture as in the previous section: the first nn.Linear accepts a two-dimensional input and produces an eight-dimensional output for each data point, nn.ReLU applies the ReLU activation to that eight-dimensional output, and the final nn.Linear accepts the eight-dimensional input and produces a one-dimensional output.
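The dimensions described above can be traced by passing a batch through the layers one at a time. This is a small sketch on the CPU; it relies on the fact that an nn.Sequential container can be iterated over to access its layers in order.

```python
import torch
from torch import nn

model = nn.Sequential(
    nn.Linear(2, 8),
    nn.ReLU(),
    nn.Linear(8, 1),
)
x = torch.tensor([[1., 2.], [3., 4.], [5., 6.], [7., 8.]])

# Apply each layer in turn and record the shape of its output
shapes = []
out = x
for layer in model:
    out = layer(out)
    shapes.append(tuple(out.shape))

print(shapes)
```

For a batch of 4 data points, the recorded shapes are (4, 8) after the first linear layer, (4, 8) after the activation, and (4, 1) after the final layer.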

(5) Print a summary of the model to view its architecture.

To view the model summary, first install the torchsummary package with pip:

pip install torchsummary

After installation, import summary from torchsummary:

from torchsummary import summary

The summary function takes the model and the model's input size (which must be a tuple of integers) as arguments:

print(summary(model, (2,)))

The output is shown below:

----------------------------------------------------------------
        Layer (type)               Output Shape         Param #
================================================================
            Linear-1                    [-1, 8]              24
              ReLU-2                    [-1, 8]               0
            Linear-3                    [-1, 1]               9
================================================================
Total params: 33
Trainable params: 33
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 0.00
Forward/backward pass size (MB): 0.00
Params size (MB): 0.00
Estimated Total Size (MB): 0.00
----------------------------------------------------------------

Taking the first layer as an example, its output shape is (-1, 8), where -1 stands for the batch size and 8 means that each data point produces an 8-dimensional output, giving an output of shape batch size x 8.
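The Param # column of the summary can be reproduced with simple arithmetic: a fully connected layer has one weight per input-output pair plus one bias per output. The helper linear_param_count below is a hypothetical name used only for this illustration.

```python
def linear_param_count(in_features, out_features, bias=True):
    """Number of trainable values in a fully connected layer."""
    weights = in_features * out_features
    biases = out_features if bias else 0
    return weights + biases

# (in_features, out_features) of the two linear layers in the model
layers = [(2, 8), (8, 1)]
counts = [linear_param_count(i, o) for i, o in layers]
total = sum(counts)

print(counts, total)  # [24, 9] 33
```

The ReLU layer contributes no parameters, which is why its row in the summary shows 0.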

(6) Next, define the loss function (loss_func) and the optimizer (opt) and train the model:

loss_func = nn.MSELoss()

from torch.optim import SGD

opt = SGD(model.parameters(), lr = 0.001)

import time
loss_history = []
start = time.time()
for _ in range(50):
    for ix, iy in dl:
        opt.zero_grad()
        loss_value = loss_func(model(ix),iy)
        loss_value.backward()
        opt.step()
        # store a plain Python float rather than a tensor attached to the graph
        loss_history.append(loss_value.item())
end = time.time()
print(end - start)

(7) After training the model, predict values on a validation dataset.

Define the validation dataset:

val = [[8,9],[10,11],[1.5,2.5]]

Convert the validation data to a float tensor object, register it to the device, and pass it through the model to get the predictions:

val = torch.tensor(val).float()

print(model(val.to(device)))

"""

tensor([[16.7774],

[20.6186],

[ 4.2415]], device='cuda:0', grad_fn=<AddmmBackward>)

"""

3. Saving and loading PyTorch models

An important aspect of working with neural network models is saving and loading the model after training. Once the model has been saved, we can run inference by simply loading the trained model, without having to train it again.

3.1 Components required for model saving

First, consider the complete set of components required to save a neural network model:

- A unique name (key) for each tensor (parameter)

- How the tensors in the network are connected

- The values of each tensor (weight and bias values)

The first component is handled in the __init__ stage, while the second is handled when the forward computation method is defined. By default, the tensor values are randomly initialized in the __init__ stage, but when loading a pre-trained model we need to load the fixed set of weight values learned during training and associate each value with a specific name.
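The unique names mentioned above come from the attribute names used in __init__. As a minimal sketch, the named_parameters() method of nn.Module can be used to list each parameter name with its shape for the MyNeuralNet class from section 1 (re-declared here so the example is self-contained).

```python
import torch
from torch import nn

class MyNeuralNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.input_to_hidden_layer = nn.Linear(2, 8)
        self.hidden_layer_activation = nn.ReLU()
        self.hidden_to_output_layer = nn.Linear(8, 1)

    def forward(self, x):
        x = self.input_to_hidden_layer(x)
        x = self.hidden_layer_activation(x)
        return self.hidden_to_output_layer(x)

mynet = MyNeuralNet()

# Map each parameter name to the shape of its tensor
named_shapes = {name: tuple(p.shape) for name, p in mynet.named_parameters()}
for name, shape in named_shapes.items():
    print(name, shape)
```

The ReLU layer has no parameters, so only the two linear layers contribute names.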

3.2 Model state

model.state_dict() helps in understanding how saving and loading PyTorch models works. It returns a dictionary (OrderedDict) that maps the names (keys) of the model's parameters to their values (weights and biases). The state is a snapshot of the model at the current moment: the keys of the returned output are the names of the model's layers, and the values are the weights of those layers:

print(model.state_dict())

"""

OrderedDict([('0.weight', tensor([[-0.4732, 0.1934],

[ 0.1475, -0.2335],

[-0.2586, 0.0823],

[-0.2979, -0.5979],

[ 0.2605, 0.2293],

[ 0.0566, 0.6848],

[-0.1116, -0.3301],

[ 0.0324, 0.2609]], device='cuda:0')), ('0.bias', tensor([ 0.6835, 0.2860, 0.1953, -0.2162, 0.5106, 0.3625, 0.1360, 0.2495],

device='cuda:0')), ('2.weight', tensor([[ 0.0475, 0.0664, -0.0167, -0.1608, -0.2412, -0.3332, -0.1607, -0.1857]],

device='cuda:0')), ('2.bias', tensor([0.2595], device='cuda:0'))])

"""

3.3 Model saving

torch.save(model.state_dict(), 'mymodel.pth') saves the model's state to disk in Python's serialized (pickle) format, where mymodel.pth is the filename. Before calling torch.save, it is good practice to move the model to the CPU: saving the tensors as CPU tensors makes it possible to load the model on any machine:

save_path = 'mymodel.pth'
# move the model to the CPU first so the saved tensors are CPU tensors
torch.save(model.to('cpu').state_dict(), save_path)

3.4 Model loading

Loading a model requires first initializing the model and then loading the weights from the state_dict:

(1) Create an empty model using the same code as for training:

model = nn.Sequential(
    nn.Linear(2, 8),
    nn.ReLU(),
    nn.Linear(8, 1)
).to(device)

(2) Load the file from disk and deserialize it to obtain the OrderedDict:

state_dict = torch.load('mymodel.pth')

(3) Load state_dict into the model, register the model to the device, and run the prediction task:

model.load_state_dict(state_dict)

model.to(device)

val = [[8,9],[10,11],[1.5,2.5]]

val = torch.tensor(val).float()

model(val.to(device))
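The whole save and load cycle can be checked end to end. The sketch below makes a few assumptions for the sake of a self-contained example: it uses an in-memory io.BytesIO buffer in place of the mymodel.pth file, an untrained model as a stand-in for the trained one, and the map_location argument of torch.load to place the loaded tensors on the CPU. The helper make_model is a hypothetical name.

```python
import io
import torch
from torch import nn

def make_model():
    # Same architecture as the Sequential model used in this section
    return nn.Sequential(nn.Linear(2, 8), nn.ReLU(), nn.Linear(8, 1))

torch.manual_seed(0)
trained = make_model()      # stand-in for a trained model

buffer = io.BytesIO()       # in-memory stand-in for 'mymodel.pth'
torch.save(trained.state_dict(), buffer)

# Load the weights into a freshly initialized model
buffer.seek(0)
state_dict = torch.load(buffer, map_location='cpu')
restored = make_model()
restored.load_state_dict(state_dict)

val = torch.tensor([[8., 9.], [10., 11.], [1.5, 2.5]])
with torch.no_grad():
    same = torch.allclose(trained(val), restored(val))
print(same)
```

Because the restored model receives exactly the saved weights, it produces the same predictions as the model that was saved, even though it started from different random values.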

Summary

In this section, we used PyTorch to build a neural network on a simple dataset, trained it to map inputs to outputs by performing backpropagation to update the weights and minimize the loss, and used the Sequential class to simplify building the network. We also covered common ways to obtain intermediate values of the network and how to use the save and load methods to save and load a model so that it does not have to be trained again.

Series Links

PyTorch Deep Learning in Action (1) – Neural Network and Model Training Process in Detail

PyTorch Deep Learning in Action (2) – PyTorch Basics

PyTorch Deep Learning in Action (4) – Common Activation Functions and Loss Functions in Detail

PyTorch Deep Learning in Action (5) – Computer Vision Basics

PyTorch Deep Learning in Action (6) – Neural Network Performance Optimization Techniques

PyTorch Deep Learning in Action (7) – Impact of Batch Size on Neural Network Training

PyTorch Deep Learning in Action (8) – Batch Normalization