Contents

What is the Stable Diffusion model?

Application Scenarios for Stable Diffusion Modeling

Stable Diffusion free use site

Local deployment of Stable Diffusion methods:

Blogger introduction: I am a content creator specializing in front-end and back-end development, machine learning, and artificial intelligence applications. In the open-source spirit of the Internet, I answer questions, solve problems, and aim to share quality work. I am a featured author on Juejin, Tencent Cloud, Alibaba Cloud and other platforms, focusing on front-end and back-end project development and hands-on graduation projects. If you need anything, feel free to contact me.


Preface

What is the Stable Diffusion model?

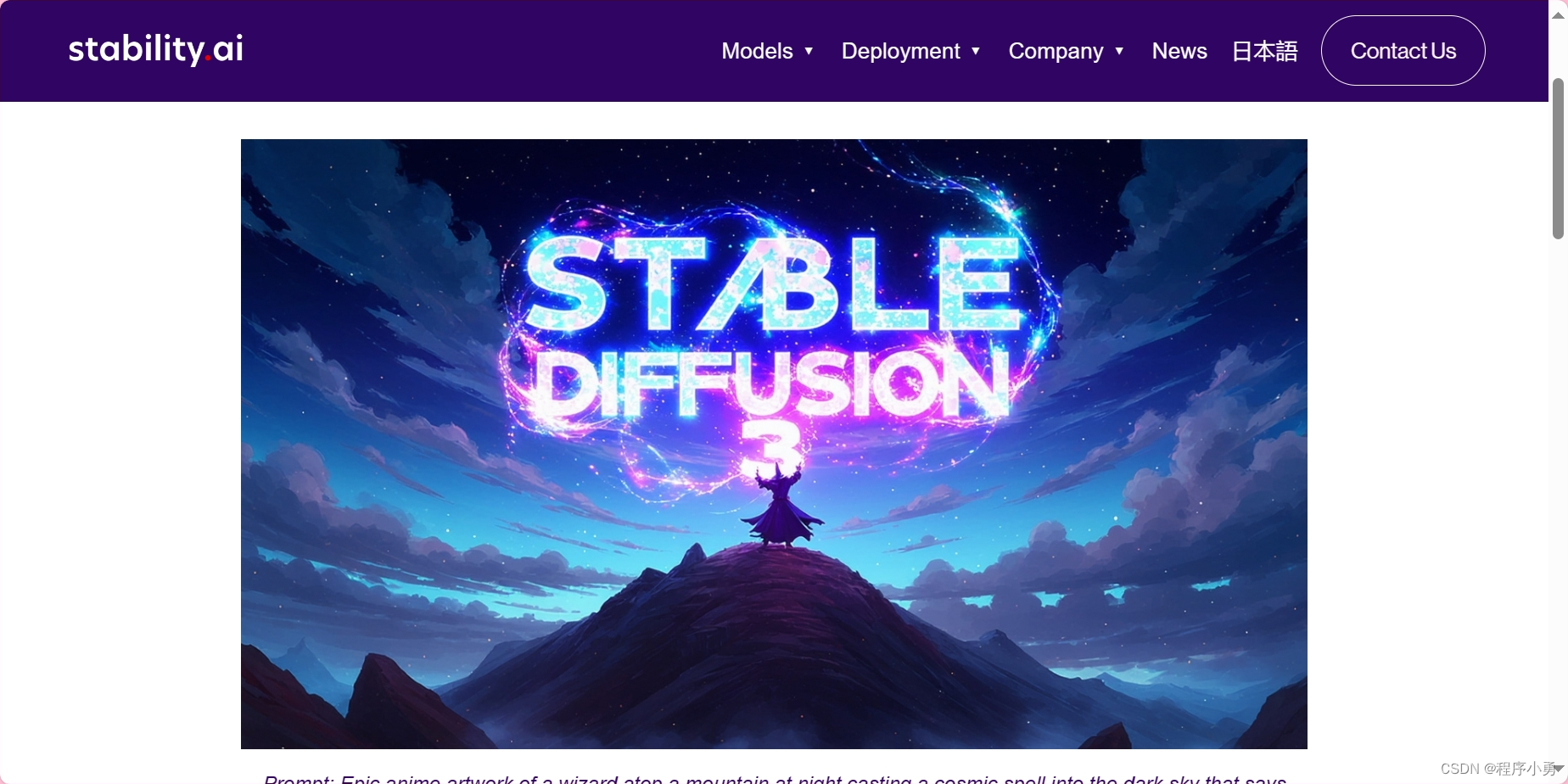

Stable Diffusion is a recent approach to image generation. It is a machine learning model based on latent diffusion and is used primarily to generate detailed images from text descriptions. The model was developed by the CompVis research group at the University of Munich together with Runway, in collaboration with the startup Stability AI and with support from EleutherAI and LAION.

The Stable Diffusion model consists of three main components: a variational autoencoder (VAE), a U-Net, and a text encoder. The VAE encoder compresses an image from pixel space into a smaller latent representation. In the forward diffusion process, Gaussian noise is iteratively applied to this compressed latent representation. Each denoising step is performed by a U-Net built on a residual neural network (ResNet) backbone, which recovers a clean latent representation by working in the opposite direction from forward diffusion; the text encoder turns the prompt into an embedding that conditions this denoising. Finally, the VAE decoder generates the output image by transforming the latent representation back into pixel space.
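To make the data flow between the three components concrete, here is a minimal shape-level sketch. Everything in it is a toy stand-in rather than the real networks: `toy_text_encoder`, `toy_unet` and `toy_vae_decode` are hypothetical names, and the tensor shapes (a 77×768 text embedding and a 4×64×64 latent for a 3×512×512 image) are the ones commonly quoted for Stable Diffusion v1, assumed here only for illustration.

```python
import numpy as np

# Assumed shapes (commonly quoted for Stable Diffusion v1), for illustration only
LATENT_SHAPE = (4, 64, 64)    # compressed latent for a 512x512 image
IMAGE_SHAPE = (3, 512, 512)   # RGB image in pixel space
TEXT_SHAPE = (77, 768)        # text embedding: tokens x channels

def toy_text_encoder(prompt, rng):
    """Stand-in for the text encoder: returns a random embedding of the right shape."""
    return rng.standard_normal(TEXT_SHAPE)

def toy_unet(latent, step, text_emb):
    """Stand-in for the U-Net: 'predicts' the noise as a fraction of the latent."""
    return 0.1 * latent

def toy_vae_decode(latent):
    """Stand-in for the VAE decoder: upsamples 8x and keeps 3 channels."""
    upsampled = latent.repeat(8, axis=1).repeat(8, axis=2)
    return upsampled[:3]

def generate(prompt, n_steps=20, seed=0):
    rng = np.random.default_rng(seed)
    text_emb = toy_text_encoder(prompt, rng)
    latent = rng.standard_normal(LATENT_SHAPE)   # start from pure noise
    for step in reversed(range(n_steps)):
        # one denoising step in latent space, conditioned on the text embedding
        latent = latent - toy_unet(latent, step, text_emb)
    return toy_vae_decode(latent)                # back to pixel space

image = generate("a photo of a cat")
compression = np.prod(IMAGE_SHAPE) / np.prod(LATENT_SHAPE)
print(image.shape, compression)
```

Under these assumed shapes the latent holds 48 times fewer values than the image, which is why the denoising loop is run in latent space and only the final result is decoded to pixels.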

The main advantages of Stable Diffusion are efficiency and accessibility. Stable Diffusion is itself a latent diffusion model: because the diffusion process runs on the compressed latent representation rather than on full-resolution pixels, each step handles far less data, so training and sampling need much less memory and compute than a pixel-space diffusion model. This is what makes it practical to run on consumer GPUs.

However, Stable Diffusion has some drawbacks. Generation is still iterative: an image is produced over many denoising steps, so sampling is slower than with single-pass generators. In addition, the compression performed by the VAE can lose fine detail, which tends to show up in small structures such as text or distant faces.

How Stable Diffusion Works:

The Stable Diffusion model is based on a diffusion process that gradually recovers image information from noise. In the training phase, noise is added to real images and the model learns to predict and remove it. In the generation phase, the model starts from random noise and reverses the diffusion process step by step, producing images whose distribution is similar to that of the training data.

This diffusion process can be divided into the following steps:

- Initialization: Start from a training data set, such as images paired with text descriptions.

- Forward diffusion process: During forward diffusion, a small amount of Gaussian noise is added to the data at every step, so the data gradually drifts away from the original sample and toward pure random noise. The step is repeated many times until the result is practically indistinguishable from noise.

- Backward diffusion process: To generate data, Stable Diffusion runs the process in reverse. It mirrors the forward process, but instead of adding noise, a trained network predicts the noise present at each step so that it can be removed. Step by step, this brings the sample closer to the distribution of the original data set.

- Generate new data: At the end of the backward process, the model outputs a new data sample that has similar characteristics to the original data set without being a copy of any training example.

- Repetition and tuning: To increase the diversity of the generated data, the backward process can be repeated several times with different random seeds, step counts, and noise parameters.
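The noising and denoising steps in the list above can be sketched numerically. The following is a minimal illustration, assuming a linear noise schedule (1000 steps, from 1e-4 to 0.02, values borrowed from the DDPM literature rather than from this article); `make_schedule`, `add_noise` and `estimate_x0` are hypothetical helper names. It applies noise in closed form and then shows that a perfect noise prediction is enough to recover the original sample.

```python
import numpy as np

def make_schedule(n_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule (assumed values); returns the cumulative signal fraction."""
    betas = np.linspace(beta_start, beta_end, n_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bar, rng):
    """Forward diffusion in closed form: jump straight from x0 to the noisy x_t."""
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return x_t, eps

def estimate_x0(x_t, t, alpha_bar, eps_pred):
    """Invert the forward formula, given a prediction of the noise in x_t."""
    return (x_t - np.sqrt(1.0 - alpha_bar[t]) * eps_pred) / np.sqrt(alpha_bar[t])

rng = np.random.default_rng(0)
alpha_bar = make_schedule()
x0 = rng.uniform(-1.0, 1.0, size=(4, 8, 8))       # toy "latent"

x_t, eps = add_noise(x0, t=500, alpha_bar=alpha_bar, rng=rng)
# Here the true noise plays the role of a perfect network prediction
x0_rec = estimate_x0(x_t, 500, alpha_bar, eps)

print(alpha_bar[0], alpha_bar[-1])    # signal fraction at the first and last step
print(np.allclose(x0_rec, x0))
```

In a real model the noise is not known at generation time; a trained network supplies `eps_pred`, and the estimate is refined over many small steps instead of one.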

By introducing techniques such as conditional control and feature embedding, the Stable Diffusion model is able to generate diverse and creative images. The model is flexible enough to generate many types of images, such as faces and objects, and its outputs are of high quality, with good realism and detail.
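One widely used form of the conditional control mentioned above is classifier-free guidance. The article does not say which technique it has in mind, so treat the following as an assumed example: the network is evaluated twice per step, once with the prompt and once with an empty prompt, and the two noise predictions are combined. The function name `guided_noise` is hypothetical, and the default scale of 7.5 is simply a commonly used value.

```python
import numpy as np

def guided_noise(eps_uncond, eps_cond, guidance_scale=7.5):
    """Classifier-free guidance: push the prediction toward the text-conditioned one."""
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

rng = np.random.default_rng(0)
eps_uncond = rng.standard_normal((4, 8, 8))   # toy prediction for an empty prompt
eps_cond = rng.standard_normal((4, 8, 8))     # toy prediction for the user's prompt

only_cond = guided_noise(eps_uncond, eps_cond, guidance_scale=1.0)
only_uncond = guided_noise(eps_uncond, eps_cond, guidance_scale=0.0)
strong = guided_noise(eps_uncond, eps_cond)   # default scale amplifies the difference
```

A scale of 1 reproduces the conditioned prediction and a scale of 0 ignores the prompt entirely; larger values follow the prompt more closely at the cost of diversity.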

Stable Diffusion core code implementation

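The code below is implemented in Python with NumPy, scikit-learn and Matplotlib. Note that it is a simplified illustration of diffusion over a similarity graph (information is spread between neighboring data points and the dominant directions are then extracted); it is not the latent diffusion network described above.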

Algorithmic Core Process

Compute the similarity matrix

The similarity between data points is calculated using Euclidean distance.

Convert Euclidean distance to similarity using Gaussian kernel function.

    import numpy as np
    from sklearn.metrics import pairwise_distances

    pairwise_dist = pairwise_distances(X, metric='euclidean')
    similarity_matrix = np.exp(-pairwise_dist ** 2)

Center the similarity matrix

The similarity matrix is centered so that the average of its rows and columns is zero.

    from sklearn.preprocessing import KernelCenterer

    kernel_centerer = KernelCenterer()
    similarity_matrix_centered = kernel_centerer.fit_transform(similarity_matrix)

Diffusion process

Initialize an identity matrix as the diffusion matrix.

Multiple iterations of the diffusion process spread the information from each data point to its neighboring data points.

    diffusion_matrix = np.eye(X.shape[0])
    for _ in range(n_steps):
        diffusion_matrix = (1 - alpha) * diffusion_matrix + alpha * np.dot(similarity_matrix_centered, diffusion_matrix)

Extract the principal directions

Compute the eigenvalues and eigenvectors of the diffusion matrix.

The eigenvectors are sorted by descending eigenvalue, and the first few are kept as the principal directions.

    eigenvalues, eigenvectors = np.linalg.eigh(diffusion_matrix)
    idx = np.argsort(eigenvalues)[::-1]
    principal_directions = eigenvectors[:, idx]
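As a quick check, the four steps above can be combined into one function and tried on random data. This is a sketch rather than the author's code: `diffusion_directions` is a hypothetical name, and to keep it dependency-light the distance and centering steps are written in plain NumPy (squared Euclidean distances by broadcasting, and the same double centering that `KernelCenterer` performs).

```python
import numpy as np

def diffusion_directions(X, alpha=0.5, n_steps=10):
    """Run the four steps on X and return (eigenvalues, directions), sorted descending."""
    # Step 1: Gaussian similarity from squared Euclidean distances
    diff = X[:, None, :] - X[None, :, :]
    similarity = np.exp(-(diff ** 2).sum(axis=-1))

    # Step 2: double centering, so row and column means are zero
    row_mean = similarity.mean(axis=1, keepdims=True)
    col_mean = similarity.mean(axis=0, keepdims=True)
    centered = similarity - row_mean - col_mean + similarity.mean()

    # Step 3: iterate the diffusion update, starting from the identity
    diffusion = np.eye(X.shape[0])
    for _ in range(n_steps):
        diffusion = (1 - alpha) * diffusion + alpha * (centered @ diffusion)

    # Step 4: eigendecomposition, sorted by descending eigenvalue
    eigenvalues, eigenvectors = np.linalg.eigh(diffusion)
    idx = np.argsort(eigenvalues)[::-1]
    return eigenvalues[idx], eigenvectors[:, idx]

rng = np.random.default_rng(0)
X = rng.standard_normal((20, 3))            # 20 toy samples with 3 features
eigenvalues, directions = diffusion_directions(X)
embedding = directions[:, :2]               # 2-D coordinates for the 20 samples
print(embedding.shape)
```

Note that the directions have one entry per sample, not per feature, so the leading columns act directly as low-dimensional coordinates for the fitted data.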

Core Complete Code:

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.metrics import pairwise_distances
    from sklearn.preprocessing import KernelCenterer


    class StableDiffusion:
        def __init__(self, alpha=0.5, n_steps=10):
            self.alpha = alpha        # mixing weight of each diffusion step
            self.n_steps = n_steps    # number of diffusion iterations
            self.eigenvalues = None
            self.principal_directions = None

        def fit(self, X):
            # Calculate the similarity matrix (Gaussian kernel on Euclidean distances)
            self.X_fit = np.asarray(X)
            pairwise_dist = pairwise_distances(self.X_fit, metric='euclidean')
            similarity_matrix = np.exp(-pairwise_dist ** 2)

            # Center the similarity matrix; keep the centerer for transform()
            self.kernel_centerer = KernelCenterer()
            similarity_matrix_centered = self.kernel_centerer.fit_transform(similarity_matrix)

            # Diffusion process
            diffusion_matrix = np.eye(self.X_fit.shape[0])
            for _ in range(self.n_steps):
                diffusion_matrix = ((1 - self.alpha) * diffusion_matrix
                                    + self.alpha * np.dot(similarity_matrix_centered, diffusion_matrix))

            # Extract the principal directions (eigenvectors), sorted by descending eigenvalue
            eigenvalues, eigenvectors = np.linalg.eigh(diffusion_matrix)
            idx = np.argsort(eigenvalues)[::-1]
            self.eigenvalues = eigenvalues[idx]
            self.principal_directions = eigenvectors[:, idx]
            return self

        def transform(self, X, n_components):
            if self.principal_directions is None:
                raise ValueError("Model has not been fitted yet.")
            # The directions have one entry per training sample, so project the
            # centered kernel between X and the training data rather than X itself.
            kernel = np.exp(-pairwise_distances(X, self.X_fit, metric='euclidean') ** 2)
            kernel_centered = self.kernel_centerer.transform(kernel)
            return np.dot(kernel_centered, self.principal_directions[:, :n_components])

        def plot_eigenvalues(self):
            if self.eigenvalues is None:
                raise ValueError("Model has not been fitted yet.")
            # Plot the eigenvalues stored by fit(), already in descending order
            plt.figure(figsize=(8, 6))
            plt.plot(np.arange(1, len(self.eigenvalues) + 1), self.eigenvalues, marker='o', linestyle='-')
            plt.xlabel('Principal Components')
            plt.ylabel('Eigenvalues')
            plt.title('Eigenvalues of Principal Components')
            plt.grid(True)
            plt.show()

Application Scenarios for Stable Diffusion Modeling

Image Generation and Artistic Creation: The Stable Diffusion model performs very well in image generation and art creation. It learns the statistical regularities of large amounts of image data and uses them to generate diverse and realistic images. This gives artists and designers a new creative tool for producing images with a unique style.

Music Video Generation: By combining audio input with image generation, Stable Diffusion can be used to produce visual effects and animations that match the rhythm and mood of the music. This can be applied in music composition, art performance or advertising production to give the audience a more dynamic and artistic visual experience.

Expression animation generation: Using an input image as the condition, combined with facial expression modeling and image processing techniques, Stable Diffusion can be used to generate character animation with realistic and rich expressions. This is valuable for game development, film production and virtual reality, where it enables more realistic and personalized character animation.

Social networking and information dissemination: Diffusion modeling in the broader sense (models of how something spreads through a network, as distinct from the Stable Diffusion image generator) can support advertising and promotion by using the social networks of existing users to spread information to more potential customers. It can also be used to study how information propagates in social networks and to predict the reach and effect of a given message.

Disease transmission simulation: Diffusion models of this kind can be used to model and predict the spread of a virus or disease through a population. By analyzing the connections and interactions between groups of people, it is possible to predict the rate and extent of transmission and take appropriate preventive and control measures.

Financial market analysis: In financial markets, diffusion-type models can help investors make better-informed decisions by analyzing price and trading data in the stock, forex, or cryptocurrency markets to estimate market volatility and possible future price movements.

Urban Planning and Transportation Management: Diffusion-type models can also be used to optimize urban traffic and crowd flow management. By analyzing population flows and transportation networks in cities, it is possible to predict traffic congestion, optimize traffic routes and plan additional infrastructure to improve urban operational efficiency and traffic safety.

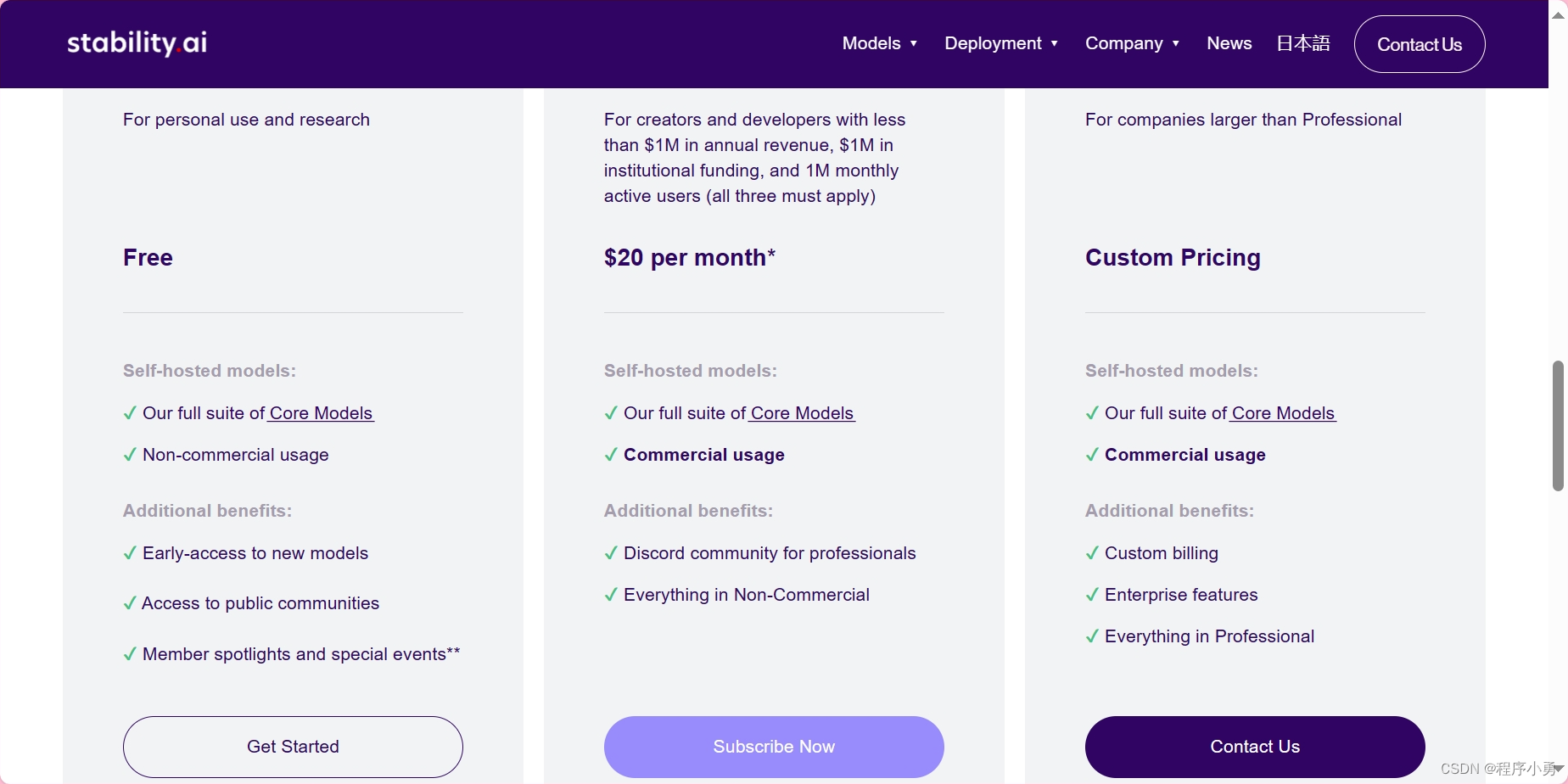

Stable Diffusion free use site

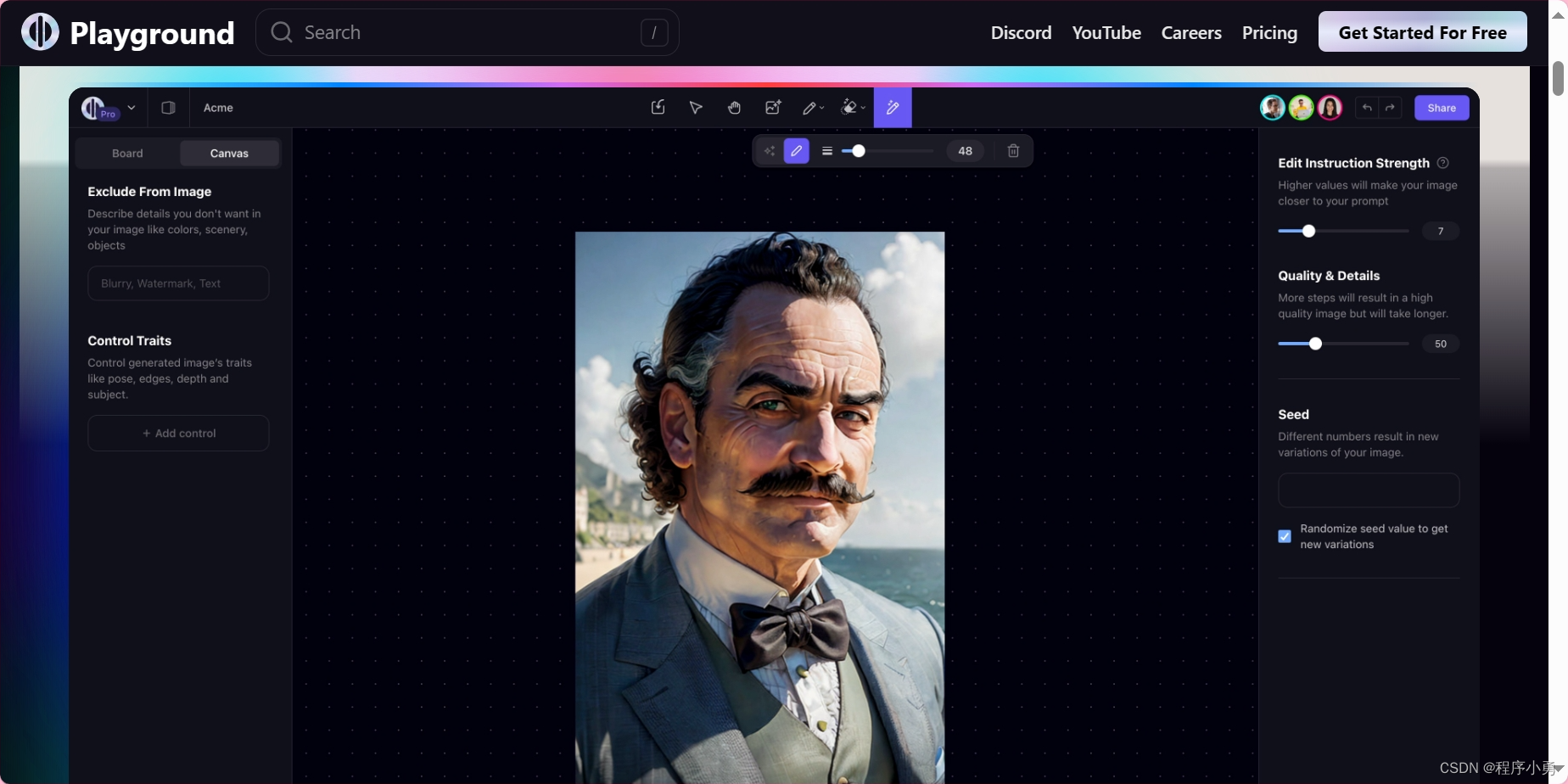

This is an online tool that is especially suited to creative design. It lets users generate AI-driven images and provides a straightforward way to create social media posts, artwork, presentations, videos, logos and posters.

Users can personalize their creations thanks to its powerful image editor and library of pre-designed templates, backgrounds and effects.

This is a user-friendly AI image generator for producing visually appealing designs and artwork.

It offers a range of tools and templates to help users quickly create high-quality image content.

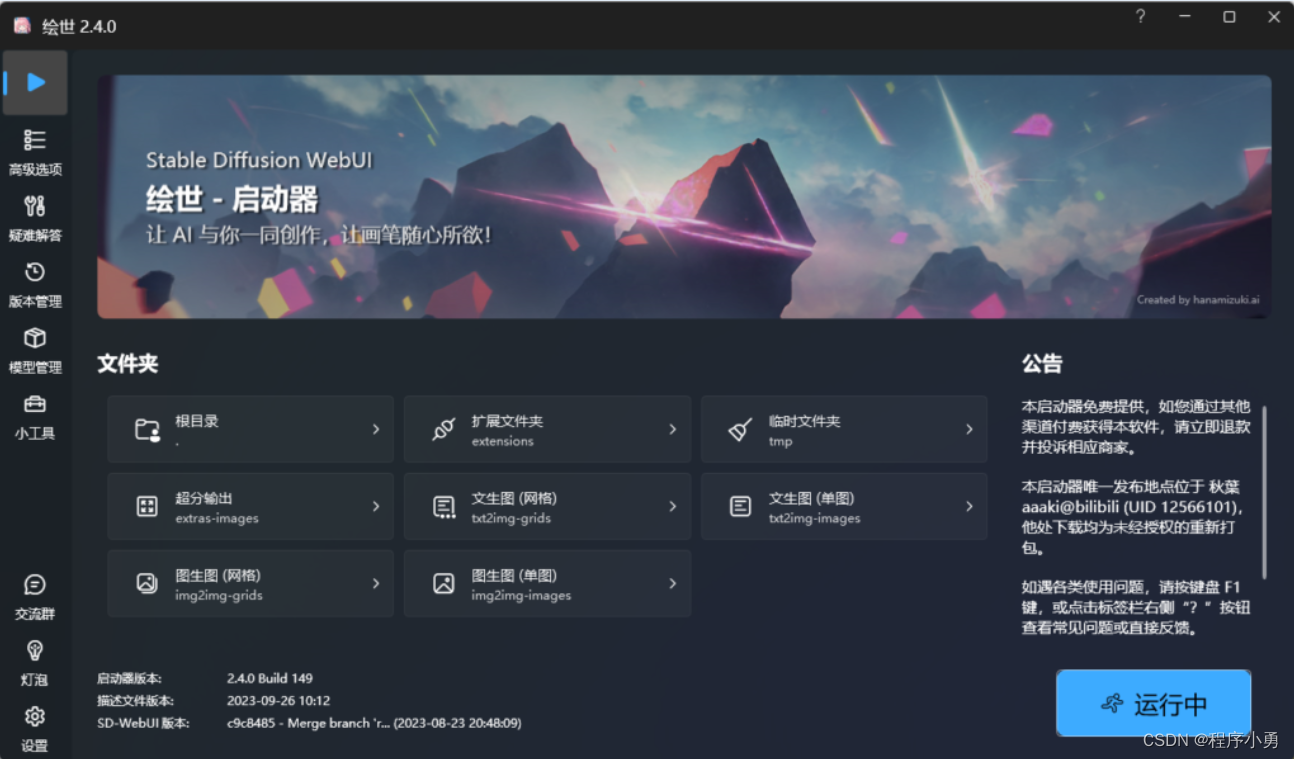

This is an easy-to-use integration package that bundles the core requirements of AI painting, such as the ControlNet plugin and up-to-date deep learning components. It gives beginners and people with no programming knowledge the opportunity to learn Stable Diffusion from scratch.

We recommend the [Eisei Integration Pack] released by Akiba on Bilibili. This package is based on the open source project Stable Diffusion WebUI.

ComfyUI: This is a node-based graphical interface for Stable Diffusion that also suits users with lower-end graphics cards. It optimizes the internal generation process, improves image generation speed, and gives finer control over generation.

Local deployment of Stable Diffusion methods:

Featured Site:

StableDiffusion Chinese

Open source AI drawing tool Stable Diffusion Chinese resource station

Summary

Today we introduced the Stable Diffusion model, a generative model in the field of deep learning that gradually transforms random noise into highly detailed and realistic images by reversing a diffusion process. The power of this model is that it learns the underlying distribution of images from a large amount of image data and can then generate images that resemble the training data but are entirely new.

That is all for today's content!