The SE (Squeeze-and-Excitation) module is often used for channel attention in convolutional networks: it lets the model learn how much weight to give each channel, which usually improves accuracy. The principle and details of SENet were described in the previous article: Ultra-detailed Explanation of Classical Neural Network Papers (VII) – SENet (Attention Mechanism) Study Notes (Translation + Intensive Reading + Code Replication).

Let’s reproduce the code next.

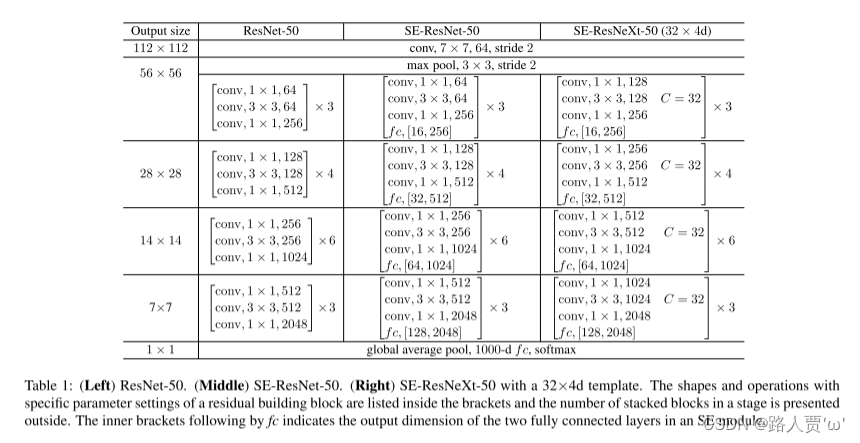

Because SENet is not a brand-new network architecture but a plug-and-play module that can be added to existing networks, the code implementation is relatively simple. In this article, we add the SE block on top of ResNet to implement SE-ResNet50 (`SE_ResNet50` in the code).

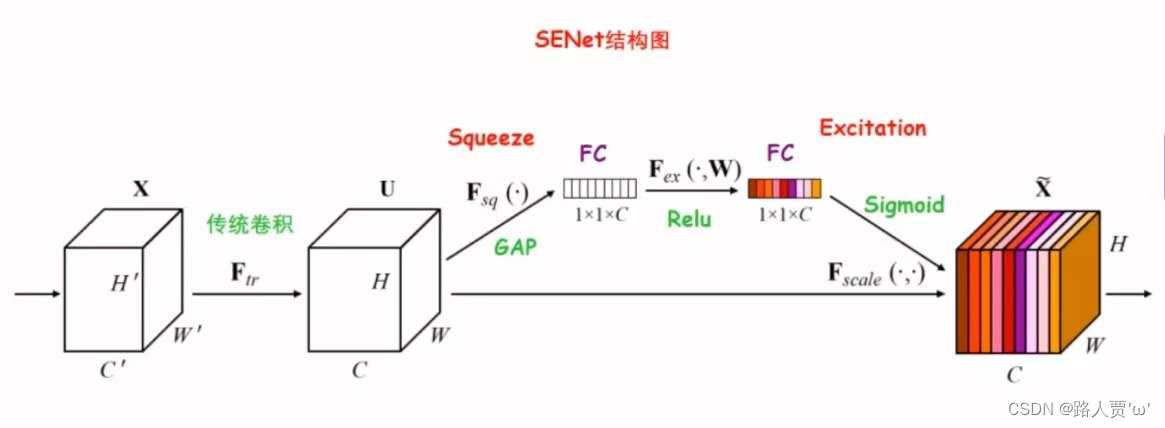

I. Introduction of SENet Structure Composition

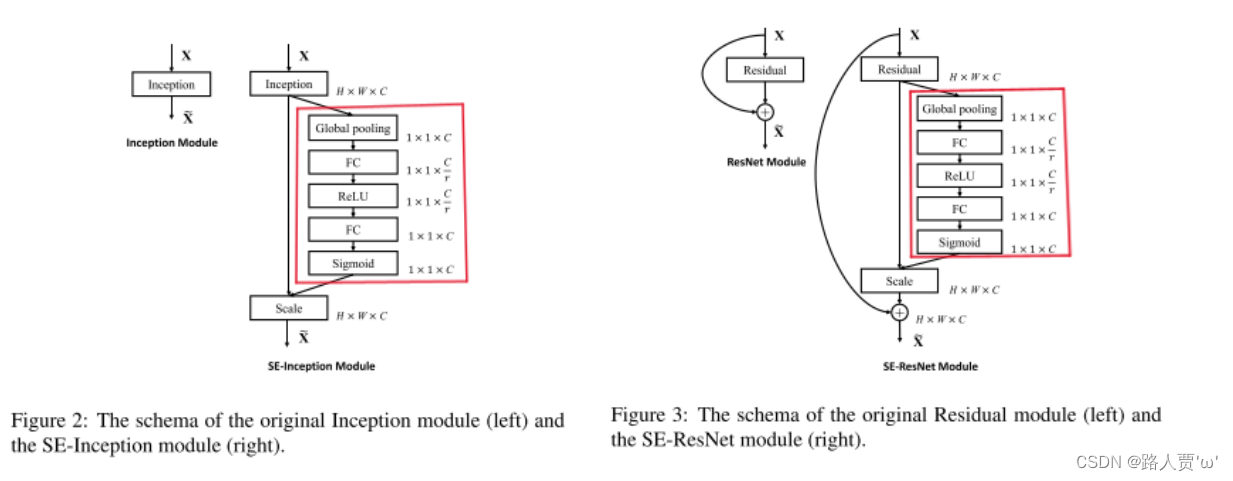

The figure above shows an SE block. A network built from SE blocks is called SENet. Adding SE blocks to an existing network gives an SE-prefixed variant of it, for example SE-AlexNet based on AlexNet or SE-ResNet based on ResNet.

The effect of the combination of SE blocks with the advanced architectures Inception and ResNet

Principle: a global average pooling layer followed by two fully connected layers with their activations (ReLU and sigmoid) outputs as many weight values as there are input feature channels, i.e., one weight coefficient per channel. In this way the block learns a channel attention that decides which channels to emphasize when extracting features and which to suppress.
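In the notation of the SENet paper, where $u_c$ is channel $c$ of the input feature map $U$, $\delta$ is ReLU and $\sigma$ is the sigmoid, the three operations can be written compactly. The weight shapes below assume bias-free fully connected layers and a reduction ratio $r$, matching the code in this article:

$$
z_c = F_{sq}(u_c) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} u_c(i, j)
$$

$$
s = F_{ex}(z, W) = \sigma\big(W_2\, \delta(W_1 z)\big), \qquad W_1 \in \mathbb{R}^{\frac{C}{r} \times C}, \quad W_2 \in \mathbb{R}^{C \times \frac{C}{r}}
$$

$$
\tilde{x}_c = F_{scale}(u_c, s_c) = s_c \cdot u_c
$$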

SE block detailing process

1. Starting from the C×W×H feature map produced by an Inception or ResNet structure, the Squeeze operation applies Global Average Pooling (GAP) to obtain a 1×1×C feature vector.

2. Two FC layers follow, forming a bottleneck structure that models the correlation between the channels:

(1) The first FC layer reduces the C channels to C/r, which reduces the number of parameters; its output passes through a ReLU nonlinearity before reaching the second FC layer.

(2) The second FC layer restores the number of channels to C, producing the per-channel attention weights.

3. Finally, a Sigmoid activation normalizes the weights to the range (0, 1), and a Scale operation multiplies each channel of the feature map by its weight.
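The parameter saving from the bottleneck in step 2 is easy to check with a little arithmetic. The sketch below is not part of the original code: the helper names `se_fc_params` and `single_fc_params` are made up for illustration, and it assumes bias-free FC layers and the integer division `C // r` used in the implementation later in this article.

```python
def se_fc_params(channels: int, ratio: int = 16) -> int:
    """Number of weights in the two bias-free FC layers of one SE block."""
    hidden = channels // ratio                       # C -> C/r
    return channels * hidden + hidden * channels     # W1 plus W2


def single_fc_params(channels: int) -> int:
    """Number of weights in one bias-free C -> C layer, for comparison."""
    return channels * channels


for c in (256, 512, 1024, 2048):
    print(c, se_fc_params(c), single_fc_params(c))
```

With r = 16 the two layers together need 2C²/r weights, one eighth of what a single full C → C layer would need.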

II. Specifics of SEblock

Squeeze: The Fsq operation applies global average pooling to each channel. The W×H×C feature map is compressed directly into a 1×1×C feature vector, i.e., each 2D channel becomes a single value with a global receptive field. One value now represents one channel, which discards the spatial distribution information and makes it easier to exploit the correlation between channels.

Specific operations: global average pooling is applied to the original 50×512×7×7 feature map, giving a 50×512×1×1 feature map with a global receptive field.

Excitation: Based on the correlation between the feature channels, a weight is generated for each channel to represent its importance. In the figure, the features of the C channels that were originally all white become a feature vector with different shades of color, i.e., different degrees of importance.

Specific operations: the 50×512×1×1 output of the Squeeze step passes through two fully connected layers and then a gating mechanism similar to the gates in recurrent neural networks (the paper uses a sigmoid). The learned parameters generate a weight for each feature channel and explicitly model the correlation between channels. The shape goes from 50×512×1×1 to 50×(512/16)×1×1 and is finally restored to 50×512×1×1.

Reweight: The weights output by Excitation are treated as the importance of each feature channel, and the original features are recalibrated by multiplying every value on the H×W plane of each channel of U by the weight of that channel.

Specific operations: the 50×512×1×1 weights are expanded to 50×512×7×7 with expand_as and multiplied with the original feature map. This completes the recalibration of the original features along the channel dimension, and the result serves as the input to the next layer.
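The shape bookkeeping above can be traced with plain tensor operations. This is a minimal sketch rather than the article's module: the weights `w1` and `w2` are random stand-ins for the learned FC layers, and the variable names are chosen here for illustration only.

```python
import torch

torch.manual_seed(0)
b, c, h, w, r = 50, 512, 7, 7, 16

u = torch.randn(b, c, h, w)                # original feature map: 50 x 512 x 7 x 7

# Squeeze: global average pooling over the spatial dimensions
z = u.mean(dim=(2, 3), keepdim=True)       # 50 x 512 x 1 x 1

# Excitation: C -> C/r -> C with ReLU and sigmoid (random stand-in weights)
w1 = torch.randn(c // r, c) * 0.01         # 32 x 512
w2 = torch.randn(c, c // r) * 0.01         # 512 x 32
hidden = torch.relu(z.view(b, c) @ w1.t())             # 50 x 32
s = torch.sigmoid(hidden @ w2.t()).view(b, c, 1, 1)    # 50 x 512 x 1 x 1

# Reweight: expand the weights to the input shape and multiply
out = u * s.expand_as(u)                   # 50 x 512 x 7 x 7

print(z.shape, hidden.shape, s.shape, out.shape)
# Broadcasting gives the same result without the explicit expand_as
print(torch.equal(out, u * s))
```

The explicit `expand_as` mirrors the description in the text; PyTorch broadcasting would multiply a (b, c, 1, 1) tensor with a (b, c, h, w) tensor in the same way.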

III. PyTorch code implementation

(1) SEblock construction

Global average pooling + FC layer + ReLU + FC layer + Sigmoid (on a 1×1 feature map, a fully connected layer is equivalent to a 1×1 convolution kernel)

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

'''------------- I. SE Module -----------------------------'''
# Global average pooling + FC layer + ReLU + FC layer + Sigmoid
class SE_Block(nn.Module):
    def __init__(self, inchannel, ratio=16):
        super(SE_Block, self).__init__()
        # Global average pooling (Fsq operation)
        self.gap = nn.AdaptiveAvgPool2d((1, 1))
        # Two fully connected layers (Fex operation)
        self.fc = nn.Sequential(
            nn.Linear(inchannel, inchannel // ratio, bias=False),  # from c -> c/r
            nn.ReLU(),
            nn.Linear(inchannel // ratio, inchannel, bias=False),  # from c/r -> c
            nn.Sigmoid()
        )

    def forward(self, x):
        # Read the batch size and the number of channels
        b, c, h, w = x.size()
        # Fsq operation: pooling gives a (b, c) matrix
        y = self.gap(x).view(b, c)
        # Fex operation: the fully connected layers give (b, c, 1, 1) weights
        y = self.fc(y).view(b, c, 1, 1)
        # Fscale operation: multiply the weights with the original feature map x
        return x * y.expand_as(x)
```

(2) Embedding SEblock in the residual module

SE blocks can be added flexibly to complete models such as ResNet, usually on the residual branch, before it is added to the shortcut. [Because the activation is a sigmoid, there is a risk of vanishing gradients, so try not to place the block on the main signal path. That way, even if one residual branch suffers from vanishing gradients, the network as a whole is not affected.]

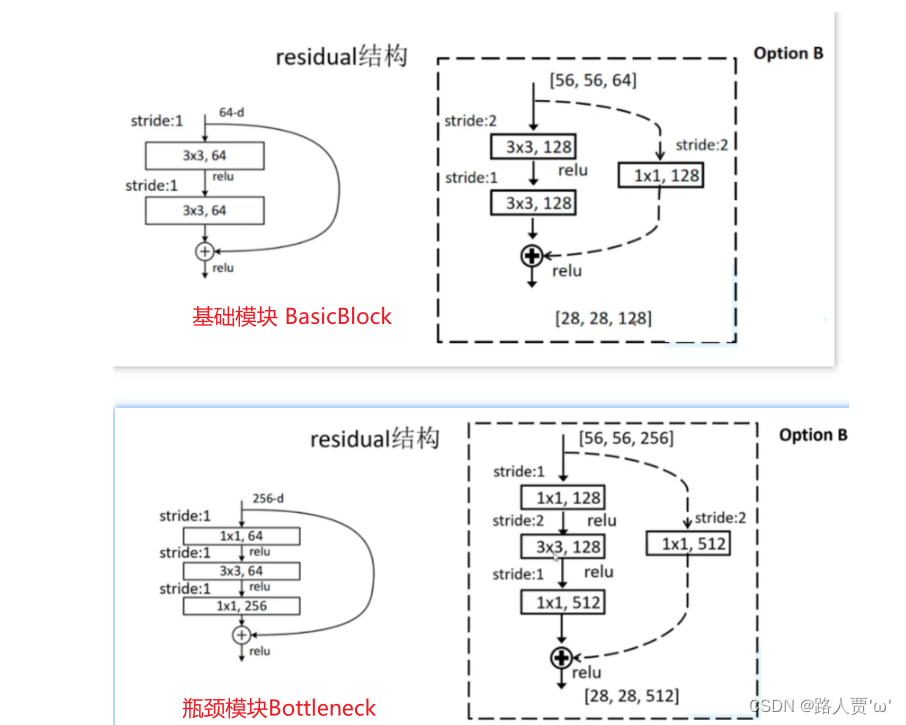

Here we embed the SE module into ResNet's BasicBlock and Bottleneck to get SEBasicBlock and SEBottleneck respectively (the classes below keep the names BasicBlock and Bottleneck). For an explanation of the original blocks, see my previous post: ResNet code reproduction + super-detailed annotations (PyTorch).

BasicBlock Module

```python
'''------------- II. BasicBlock Module -----------------------------'''
# Residual block structure on the left (18-layer, 34-layer)
class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, inchannel, outchannel, stride=1):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(inchannel, outchannel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(outchannel)
        self.conv2 = nn.Conv2d(outchannel, outchannel, kernel_size=3,
                               stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(outchannel)
        # SE_Block after BN, before shortcut
        self.SE = SE_Block(outchannel)

        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != self.expansion*outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, self.expansion*outchannel,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(self.expansion*outchannel)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # SE_Block.forward already returns the reweighted feature map, so its
        # output replaces `out`; multiplying by `out` again would scale twice
        out = self.SE(out)
        out += self.shortcut(x)
        out = F.relu(out)
        return out
```

Bottleneck module

```python
'''------------- III. Bottleneck Module -----------------------------'''
# Residual block structure on the right (50-layer, 101-layer, 152-layer)
class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, inchannel, outchannel, stride=1):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inchannel, outchannel, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(outchannel)
        self.conv2 = nn.Conv2d(outchannel, outchannel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(outchannel)
        self.conv3 = nn.Conv2d(outchannel, self.expansion*outchannel,
                               kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(self.expansion*outchannel)
        # SE_Block after BN, before shortcut
        self.SE = SE_Block(self.expansion*outchannel)

        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != self.expansion*outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, self.expansion*outchannel,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(self.expansion*outchannel)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.relu(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))
        # SE_Block.forward already returns the reweighted feature map, so its
        # output replaces `out`; multiplying by `out` again would scale twice
        out = self.SE(out)
        out += self.shortcut(x)
        out = F.relu(out)
        return out
```

(3) Build SE_ResNet structure

```python
'''------------- IV. Building the SE_ResNet structure -----------------------------'''
class SE_ResNet(nn.Module):
    def __init__(self, block, num_blocks, num_classes=10):
        super(SE_ResNet, self).__init__()
        self.in_planes = 64

        self.conv1 = nn.Conv2d(3, 64, kernel_size=3,
                               stride=1, padding=1, bias=False)  # conv1
        self.bn1 = nn.BatchNorm2d(64)
        self.layer1 = self._make_layer(block, 64, num_blocks[0], stride=1)   # conv2_x
        self.layer2 = self._make_layer(block, 128, num_blocks[1], stride=2)  # conv3_x
        self.layer3 = self._make_layer(block, 256, num_blocks[2], stride=2)  # conv4_x
        self.layer4 = self._make_layer(block, 512, num_blocks[3], stride=2)  # conv5_x
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.linear = nn.Linear(512 * block.expansion, num_classes)

    def _make_layer(self, block, planes, num_blocks, stride):
        strides = [stride] + [1]*(num_blocks-1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_planes, planes, stride))
            self.in_planes = planes * block.expansion
        return nn.Sequential(*layers)

    def forward(self, x):
        x = F.relu(self.bn1(self.conv1(x)))
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        out = self.linear(x)
        return out
```

(4) Creation and testing of network models

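Create the network model and print SE_ResNet50

```python
# SE_ResNet50: Bottleneck blocks with [3, 4, 6, 3] blocks per stage,
# which matches the printed model structure
def SE_ResNet50():
    return SE_ResNet(Bottleneck, [3, 4, 6, 3])

# test()
if __name__ == '__main__':
    model = SE_ResNet50()
    print(model)
    x = torch.randn(1, 3, 224, 224)
    out = model(x)
    print(out.shape)
```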

Print the model as follows

SE_ResNet(

(conv1): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(layer1): Sequential(

(0): Bottleneck(

(conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=256, out_features=16, bias=False)

(1): ReLU()

(2): Linear(in_features=16, out_features=256, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential(

(0): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=256, out_features=16, bias=False)

(1): ReLU()

(2): Linear(in_features=16, out_features=256, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=256, out_features=16, bias=False)

(1): ReLU()

(2): Linear(in_features=16, out_features=256, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

)

(layer2): Sequential(

(0): Bottleneck(

(conv1): Conv2d(256, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=512, out_features=32, bias=False)

(1): ReLU()

(2): Linear(in_features=32, out_features=512, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential(

(0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=512, out_features=32, bias=False)

(1): ReLU()

(2): Linear(in_features=32, out_features=512, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=512, out_features=32, bias=False)

(1): ReLU()

(2): Linear(in_features=32, out_features=512, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(3): Bottleneck(

(conv1): Conv2d(512, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(128, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=512, out_features=32, bias=False)

(1): ReLU()

(2): Linear(in_features=32, out_features=512, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

)

(layer3): Sequential(

(0): Bottleneck(

(conv1): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=1024, out_features=64, bias=False)

(1): ReLU()

(2): Linear(in_features=64, out_features=1024, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential(

(0): Conv2d(512, 1024, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=1024, out_features=64, bias=False)

(1): ReLU()

(2): Linear(in_features=64, out_features=1024, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=1024, out_features=64, bias=False)

(1): ReLU()

(2): Linear(in_features=64, out_features=1024, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(3): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=1024, out_features=64, bias=False)

(1): ReLU()

(2): Linear(in_features=64, out_features=1024, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(4): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=1024, out_features=64, bias=False)

(1): ReLU()

(2): Linear(in_features=64, out_features=1024, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(5): Bottleneck(

(conv1): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(256, 1024, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=1024, out_features=64, bias=False)

(1): ReLU()

(2): Linear(in_features=64, out_features=1024, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

)

(layer4): Sequential(

(0): Bottleneck(

(conv1): Conv2d(1024, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=2048, out_features=128, bias=False)

(1): ReLU()

(2): Linear(in_features=128, out_features=2048, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential(

(0): Conv2d(1024, 2048, kernel_size=(1, 1), stride=(2, 2), bias=False)

(1): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(1): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=2048, out_features=128, bias=False)

(1): ReLU()

(2): Linear(in_features=128, out_features=2048, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

(2): Bottleneck(

(conv1): Conv2d(2048, 512, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)

(bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(conv3): Conv2d(512, 2048, kernel_size=(1, 1), stride=(1, 1), bias=False)

(bn3): BatchNorm2d(2048, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

(SE): SE_Block(

(gap): AdaptiveAvgPool2d(output_size=(1, 1))

(fc): Sequential(

(0): Linear(in_features=2048, out_features=128, bias=False)

(1): ReLU()

(2): Linear(in_features=128, out_features=2048, bias=False)

(3): Sigmoid()

)

)

(shortcut): Sequential()

)

)

(avgpool): AdaptiveAvgPool2d(output_size=(1, 1))

(linear): Linear(in_features=2048, out_features=10, bias=True)

)

torch.Size([1, 10])

Use torchsummary to print detailed information about each layer of the network model

```python
from torchsummary import summary

if __name__ == '__main__':
    net = SE_ResNet50().cuda()  # requires a CUDA-capable GPU
    summary(net, (3, 224, 224))
```

The summary is printed as follows

----------------------------------------------------------------

Layer (type) Output Shape Param #

================================================================

Conv2d-1 [-1, 64, 224, 224] 1,728

BatchNorm2d-2 [-1, 64, 224, 224] 128

Conv2d-3 [-1, 64, 224, 224] 4,096

BatchNorm2d-4 [-1, 64, 224, 224] 128

Conv2d-5 [-1, 64, 224, 224] 36,864

BatchNorm2d-6 [-1, 64, 224, 224] 128

Conv2d-7 [-1, 256, 224, 224] 16,384

BatchNorm2d-8 [-1, 256, 224, 224] 512

AdaptiveAvgPool2d-9 [-1, 256, 1, 1] 0

Linear-10 [-1, 16] 4,096

ReLU-11 [-1, 16] 0

Linear-12 [-1, 256] 4,096

Sigmoid-13 [-1, 256] 0

SE_Block-14 [-1, 256, 224, 224] 0

Conv2d-15 [-1, 256, 224, 224] 16,384

BatchNorm2d-16 [-1, 256, 224, 224] 512

Bottleneck-17 [-1, 256, 224, 224] 0

Conv2d-18 [-1, 64, 224, 224] 16,384

BatchNorm2d-19 [-1, 64, 224, 224] 128

Conv2d-20 [-1, 64, 224, 224] 36,864

BatchNorm2d-21 [-1, 64, 224, 224] 128

Conv2d-22 [-1, 256, 224, 224] 16,384

BatchNorm2d-23 [-1, 256, 224, 224] 512

AdaptiveAvgPool2d-24 [-1, 256, 1, 1] 0

Linear-25 [-1, 16] 4,096

ReLU-26 [-1, 16] 0

Linear-27 [-1, 256] 4,096

Sigmoid-28 [-1, 256] 0

SE_Block-29 [-1, 256, 224, 224] 0

Bottleneck-30 [-1, 256, 224, 224] 0

Conv2d-31 [-1, 64, 224, 224] 16,384

BatchNorm2d-32 [-1, 64, 224, 224] 128

Conv2d-33 [-1, 64, 224, 224] 36,864

BatchNorm2d-34 [-1, 64, 224, 224] 128

Conv2d-35 [-1, 256, 224, 224] 16,384

BatchNorm2d-36 [-1, 256, 224, 224] 512

AdaptiveAvgPool2d-37 [-1, 256, 1, 1] 0

Linear-38 [-1, 16] 4,096

ReLU-39 [-1, 16] 0

Linear-40 [-1, 256] 4,096

Sigmoid-41 [-1, 256] 0

SE_Block-42 [-1, 256, 224, 224] 0

Bottleneck-43 [-1, 256, 224, 224] 0

Conv2d-44 [-1, 128, 224, 224] 32,768

BatchNorm2d-45 [-1, 128, 224, 224] 256

Conv2d-46 [-1, 128, 112, 112] 147,456

BatchNorm2d-47 [-1, 128, 112, 112] 256

Conv2d-48 [-1, 512, 112, 112] 65,536

BatchNorm2d-49 [-1, 512, 112, 112] 1,024

AdaptiveAvgPool2d-50 [-1, 512, 1, 1] 0

Linear-51 [-1, 32] 16,384

ReLU-52 [-1, 32] 0

Linear-53 [-1, 512] 16,384

Sigmoid-54 [-1, 512] 0

SE_Block-55 [-1, 512, 112, 112] 0

Conv2d-56 [-1, 512, 112, 112] 131,072

BatchNorm2d-57 [-1, 512, 112, 112] 1,024

Bottleneck-58 [-1, 512, 112, 112] 0

Conv2d-59 [-1, 128, 112, 112] 65,536

BatchNorm2d-60 [-1, 128, 112, 112] 256

Conv2d-61 [-1, 128, 112, 112] 147,456

BatchNorm2d-62 [-1, 128, 112, 112] 256

Conv2d-63 [-1, 512, 112, 112] 65,536

BatchNorm2d-64 [-1, 512, 112, 112] 1,024

AdaptiveAvgPool2d-65 [-1, 512, 1, 1] 0

Linear-66 [-1, 32] 16,384

ReLU-67 [-1, 32] 0

Linear-68 [-1, 512] 16,384

Sigmoid-69 [-1, 512] 0

SE_Block-70 [-1, 512, 112, 112] 0

Bottleneck-71 [-1, 512, 112, 112] 0

Conv2d-72 [-1, 128, 112, 112] 65,536

BatchNorm2d-73 [-1, 128, 112, 112] 256

Conv2d-74 [-1, 128, 112, 112] 147,456

BatchNorm2d-75 [-1, 128, 112, 112] 256

Conv2d-76 [-1, 512, 112, 112] 65,536

BatchNorm2d-77 [-1, 512, 112, 112] 1,024

AdaptiveAvgPool2d-78 [-1, 512, 1, 1] 0

Linear-79 [-1, 32] 16,384

ReLU-80 [-1, 32] 0

Linear-81 [-1, 512] 16,384

Sigmoid-82 [-1, 512] 0

SE_Block-83 [-1, 512, 112, 112] 0

Bottleneck-84 [-1, 512, 112, 112] 0

Conv2d-85 [-1, 128, 112, 112] 65,536

BatchNorm2d-86 [-1, 128, 112, 112] 256

Conv2d-87 [-1, 128, 112, 112] 147,456

BatchNorm2d-88 [-1, 128, 112, 112] 256

Conv2d-89 [-1, 512, 112, 112] 65,536

BatchNorm2d-90 [-1, 512, 112, 112] 1,024

AdaptiveAvgPool2d-91 [-1, 512, 1, 1] 0

Linear-92 [-1, 32] 16,384

ReLU-93 [-1, 32] 0

Linear-94 [-1, 512] 16,384

Sigmoid-95 [-1, 512] 0

SE_Block-96 [-1, 512, 112, 112] 0

Bottleneck-97 [-1, 512, 112, 112] 0

Conv2d-98 [-1, 256, 112, 112] 131,072

BatchNorm2d-99 [-1, 256, 112, 112] 512

Conv2d-100 [-1, 256, 56, 56] 589,824

BatchNorm2d-101 [-1, 256, 56, 56] 512

Conv2d-102 [-1, 1024, 56, 56] 262,144

BatchNorm2d-103 [-1, 1024, 56, 56] 2,048

AdaptiveAvgPool2d-104 [-1, 1024, 1, 1] 0

Linear-105 [-1, 64] 65,536

ReLU-106 [-1, 64] 0

Linear-107 [-1, 1024] 65,536

Sigmoid-108 [-1, 1024] 0

SE_Block-109 [-1, 1024, 56, 56] 0

Conv2d-110 [-1, 1024, 56, 56] 524,288

BatchNorm2d-111 [-1, 1024, 56, 56] 2,048

Bottleneck-112 [-1, 1024, 56, 56] 0

Conv2d-113 [-1, 256, 56, 56] 262,144

BatchNorm2d-114 [-1, 256, 56, 56] 512

Conv2d-115 [-1, 256, 56, 56] 589,824

BatchNorm2d-116 [-1, 256, 56, 56] 512

Conv2d-117 [-1, 1024, 56, 56] 262,144

BatchNorm2d-118 [-1, 1024, 56, 56] 2,048

AdaptiveAvgPool2d-119 [-1, 1024, 1, 1] 0

Linear-120 [-1, 64] 65,536

ReLU-121 [-1, 64] 0

Linear-122 [-1, 1024] 65,536

Sigmoid-123 [-1, 1024] 0

SE_Block-124 [-1, 1024, 56, 56] 0

Bottleneck-125 [-1, 1024, 56, 56] 0

Conv2d-126 [-1, 256, 56, 56] 262,144

BatchNorm2d-127 [-1, 256, 56, 56] 512

Conv2d-128 [-1, 256, 56, 56] 589,824

BatchNorm2d-129 [-1, 256, 56, 56] 512

Conv2d-130 [-1, 1024, 56, 56] 262,144

BatchNorm2d-131 [-1, 1024, 56, 56] 2,048

AdaptiveAvgPool2d-132 [-1, 1024, 1, 1] 0

Linear-133 [-1, 64] 65,536

ReLU-134 [-1, 64] 0

Linear-135 [-1, 1024] 65,536

Sigmoid-136 [-1, 1024] 0

SE_Block-137 [-1, 1024, 56, 56] 0

Bottleneck-138 [-1, 1024, 56, 56] 0

Conv2d-139 [-1, 256, 56, 56] 262,144

BatchNorm2d-140 [-1, 256, 56, 56] 512

Conv2d-141 [-1, 256, 56, 56] 589,824

BatchNorm2d-142 [-1, 256, 56, 56] 512

Conv2d-143 [-1, 1024, 56, 56] 262,144

BatchNorm2d-144 [-1, 1024, 56, 56] 2,048

AdaptiveAvgPool2d-145 [-1, 1024, 1, 1] 0

Linear-146 [-1, 64] 65,536

ReLU-147 [-1, 64] 0

Linear-148 [-1, 1024] 65,536

Sigmoid-149 [-1, 1024] 0

SE_Block-150 [-1, 1024, 56, 56] 0

Bottleneck-151 [-1, 1024, 56, 56] 0

Conv2d-152 [-1, 256, 56, 56] 262,144

BatchNorm2d-153 [-1, 256, 56, 56] 512

Conv2d-154 [-1, 256, 56, 56] 589,824

BatchNorm2d-155 [-1, 256, 56, 56] 512

Conv2d-156 [-1, 1024, 56, 56] 262,144

BatchNorm2d-157 [-1, 1024, 56, 56] 2,048

AdaptiveAvgPool2d-158 [-1, 1024, 1, 1] 0

Linear-159 [-1, 64] 65,536

ReLU-160 [-1, 64] 0

Linear-161 [-1, 1024] 65,536

Sigmoid-162 [-1, 1024] 0

SE_Block-163 [-1, 1024, 56, 56] 0

Bottleneck-164 [-1, 1024, 56, 56] 0

Conv2d-165 [-1, 256, 56, 56] 262,144

BatchNorm2d-166 [-1, 256, 56, 56] 512

Conv2d-167 [-1, 256, 56, 56] 589,824

BatchNorm2d-168 [-1, 256, 56, 56] 512

Conv2d-169 [-1, 1024, 56, 56] 262,144

BatchNorm2d-170 [-1, 1024, 56, 56] 2,048

AdaptiveAvgPool2d-171 [-1, 1024, 1, 1] 0

Linear-172 [-1, 64] 65,536

ReLU-173 [-1, 64] 0

Linear-174 [-1, 1024] 65,536

Sigmoid-175 [-1, 1024] 0

SE_Block-176 [-1, 1024, 56, 56] 0

Bottleneck-177 [-1, 1024, 56, 56] 0

Conv2d-178 [-1, 512, 56, 56] 524,288

BatchNorm2d-179 [-1, 512, 56, 56] 1,024

Conv2d-180 [-1, 512, 28, 28] 2,359,296

BatchNorm2d-181 [-1, 512, 28, 28] 1,024

Conv2d-182 [-1, 2048, 28, 28] 1,048,576

BatchNorm2d-183 [-1, 2048, 28, 28] 4,096

AdaptiveAvgPool2d-184 [-1, 2048, 1, 1] 0

Linear-185 [-1, 128] 262,144

ReLU-186 [-1, 128] 0

Linear-187 [-1, 2048] 262,144

Sigmoid-188 [-1, 2048] 0

SE_Block-189 [-1, 2048, 28, 28] 0

Conv2d-190 [-1, 2048, 28, 28] 2,097,152

BatchNorm2d-191 [-1, 2048, 28, 28] 4,096

Bottleneck-192 [-1, 2048, 28, 28] 0

Conv2d-193 [-1, 512, 28, 28] 1,048,576

BatchNorm2d-194 [-1, 512, 28, 28] 1,024

Conv2d-195 [-1, 512, 28, 28] 2,359,296

BatchNorm2d-196 [-1, 512, 28, 28] 1,024

Conv2d-197 [-1, 2048, 28, 28] 1,048,576

BatchNorm2d-198 [-1, 2048, 28, 28] 4,096

AdaptiveAvgPool2d-199 [-1, 2048, 1, 1] 0

Linear-200 [-1, 128] 262,144

ReLU-201 [-1, 128] 0

Linear-202 [-1, 2048] 262,144

Sigmoid-203 [-1, 2048] 0

SE_Block-204 [-1, 2048, 28, 28] 0

Bottleneck-205 [-1, 2048, 28, 28] 0

Conv2d-206 [-1, 512, 28, 28] 1,048,576

BatchNorm2d-207 [-1, 512, 28, 28] 1,024

Conv2d-208 [-1, 512, 28, 28] 2,359,296

BatchNorm2d-209 [-1, 512, 28, 28] 1,024

Conv2d-210 [-1, 2048, 28, 28] 1,048,576

BatchNorm2d-211 [-1, 2048, 28, 28] 4,096

AdaptiveAvgPool2d-212 [-1, 2048, 1, 1] 0

Linear-213 [-1, 128] 262,144

ReLU-214 [-1, 128] 0

Linear-215 [-1, 2048] 262,144

Sigmoid-216 [-1, 2048] 0

SE_Block-217 [-1, 2048, 28, 28] 0

Bottleneck-218 [-1, 2048, 28, 28] 0

AdaptiveAvgPool2d-219 [-1, 2048, 1, 1] 0

Linear-220 [-1, 10] 20,490

================================================================

Total params: 26,035,786

Trainable params: 26,035,786

Non-trainable params: 0

----------------------------------------------------------------

Input size (MB): 0.57

Forward/backward pass size (MB): 3914.25

Params size (MB): 99.32

Estimated Total Size (MB): 4014.14

----------------------------------------------------------------

Process finished with exit code 0
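Several figures in the summary above can be recomputed by hand, which is a handy way to confirm the SE blocks are wired as intended. The sketch below is not part of the original code. It assumes float32 values (4 bytes each) and that the MB figures are plain `count * 4 / 1024**2`; the helper names `se_fc_params` and `to_mb` are made up for illustration.

```python
# Recompute a few numbers from the torchsummary printout.
# Assumption: every value is float32 (4 bytes) and 1 MB = 1024**2 bytes.

def se_fc_params(channels, ratio=16):
    """Weights of the two bias-free FC layers in one SE block: C -> C/r -> C."""
    hidden = channels // ratio
    return channels * hidden, hidden * channels

def to_mb(num_values, bytes_per_value=4):
    """Size in MB of num_values values."""
    return num_values * bytes_per_value / 1024 ** 2

# SE block on 1024 channels: matches Linear-172 and Linear-174
print(se_fc_params(1024))              # (65536, 65536)
# SE block on 2048 channels: matches Linear-185 and Linear-187
print(se_fc_params(2048))              # (262144, 262144)
# Final classifier: 2048 inputs, 10 classes, plus 10 biases (Linear-220)
print(2048 * 10 + 10)                  # 20490
# "Params size (MB)" from the total parameter count
print(round(to_mb(26_035_786), 2))     # 99.32
# "Input size (MB)" for one 3x224x224 image
print(round(to_mb(3 * 224 * 224), 2))  # 0.57
```

With ratio 16, each SE block adds only 2·C²/16 weights, which is why the attention branch is small next to the 3×3 convolutions in the same stage.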

(5) Complete code

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchsummary import summary

'''------------- I. SE Module -----------------------------'''

# Global average pooling + fully connected layer + ReLU + fully connected layer + Sigmoid
class SE_Block(nn.Module):
    def __init__(self, inchannel, ratio=16):
        super(SE_Block, self).__init__()
        # Global average pooling (Fsq operation)
        self.gap = nn.AdaptiveAvgPool2d((1, 1))
        # Two fully connected layers (Fex operation)
        self.fc = nn.Sequential(
            nn.Linear(inchannel, inchannel // ratio, bias=False),  # from c -> c/r
            nn.ReLU(),
            nn.Linear(inchannel // ratio, inchannel, bias=False),  # from c/r -> c
            nn.Sigmoid()
        )

    def forward(self, x):
        # Read the batch size and the number of channels
        b, c, h, w = x.size()
        # Fsq operation: output a (b, c) matrix after pooling
        y = self.gap(x).view(b, c)
        # Fex operation: output a (b, c, 1, 1) tensor via the fully connected layers
        y = self.fc(y).view(b, c, 1, 1)
        # Fscale operation: multiply the resulting weights by the original feature map x
        return x * y.expand_as(x)

'''------------- II. BasicBlock Module -----------------------------'''

# Residual block structures on the left (18-layer, 34-layer)
class BasicBlock(nn.Module):
    expansion = 1

    def __init__(self, inchannel, outchannel, stride=1):
        super(BasicBlock, self).__init__()
        self.conv1 = nn.Conv2d(inchannel, outchannel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(outchannel)
        self.conv2 = nn.Conv2d(outchannel, outchannel, kernel_size=3,
                               stride=1, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(outchannel)
        # SE_Block after BN, before shortcut
        self.SE = SE_Block(outchannel)

        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != self.expansion*outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, self.expansion*outchannel,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(self.expansion*outchannel)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = self.bn2(self.conv2(out))
        # SE_Block already returns the rescaled feature map (x * weights),
        # so its output is used directly instead of multiplying a second time
        out = self.SE(out)
        out += self.shortcut(x)
        out = F.relu(out)
        return out

'''------------- III. Bottleneck Module -----------------------------'''

# Residual block structures on the right (50-layer, 101-layer, 152-layer)
class Bottleneck(nn.Module):
    expansion = 4

    def __init__(self, inchannel, outchannel, stride=1):
        super(Bottleneck, self).__init__()
        self.conv1 = nn.Conv2d(inchannel, outchannel, kernel_size=1, bias=False)
        self.bn1 = nn.BatchNorm2d(outchannel)
        self.conv2 = nn.Conv2d(outchannel, outchannel, kernel_size=3,
                               stride=stride, padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(outchannel)
        self.conv3 = nn.Conv2d(outchannel, self.expansion*outchannel,
                               kernel_size=1, bias=False)
        self.bn3 = nn.BatchNorm2d(self.expansion*outchannel)
        # SE_Block after BN, before shortcut
        self.SE = SE_Block(self.expansion*outchannel)

        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != self.expansion*outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, self.expansion*outchannel,
                          kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(self.expansion*outchannel)
            )

    def forward(self, x):
        out = F.relu(self.bn1(self.conv1(x)))
        out = F.relu(self.bn2(self.conv2(out)))
        out = self.bn3(self.conv3(out))
        # SE_Block already returns the rescaled feature map (x * weights),
        # so its output is used directly instead of multiplying a second time
        out = self.SE(out)
        out += self.shortcut(x)
        out = F.relu(out)
        return out

'''------------- IV. Building the SE_ResNet structure -----------------------------'''

class SE_ResNet(nn.Module):
    def __init__(self, block, num_blocks, num_classes=10):
        super(SE_ResNet, self).__init__()
        self.in_planes = 64

        self.conv1 = nn.Conv2d(3, 64, kernel_size=3,
                               stride=1, padding=1, bias=False)  # conv1
        self.bn1 = nn.BatchNorm2d(64)
        self.layer1 = self._make_layer(block, 64, num_blocks[0], stride=1)   # conv2_x
        self.layer2 = self._make_layer(block, 128, num_blocks[1], stride=2)  # conv3_x
        self.layer3 = self._make_layer(block, 256, num_blocks[2], stride=2)  # conv4_x
        self.layer4 = self._make_layer(block, 512, num_blocks[3], stride=2)  # conv5_x
        self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
        self.linear = nn.Linear(512 * block.expansion, num_classes)

    def _make_layer(self, block, planes, num_blocks, stride):
        strides = [stride] + [1]*(num_blocks-1)
        layers = []
        for stride in strides:
            layers.append(block(self.in_planes, planes, stride))
            self.in_planes = planes * block.expansion
        return nn.Sequential(*layers)

    def forward(self, x):
        x = F.relu(self.bn1(self.conv1(x)))
        x = self.layer1(x)
        x = self.layer2(x)
        x = self.layer3(x)
        x = self.layer4(x)
        x = self.avgpool(x)
        x = torch.flatten(x, 1)
        out = self.linear(x)
        return out

def SE_ResNet18():
    return SE_ResNet(BasicBlock, [2, 2, 2, 2])

def SE_ResNet34():
    return SE_ResNet(BasicBlock, [3, 4, 6, 3])

def SE_ResNet50():
    return SE_ResNet(Bottleneck, [3, 4, 6, 3])

def SE_ResNet101():
    return SE_ResNet(Bottleneck, [3, 4, 23, 3])

def SE_ResNet152():
    return SE_ResNet(Bottleneck, [3, 8, 36, 3])

'''
if __name__ == '__main__':
    model = SE_ResNet50()
    print(model)
    input = torch.randn(1, 3, 224, 224)
    out = model(input)
    print(out.shape)
# test()
'''

if __name__ == '__main__':
    net = SE_ResNet50().cuda()
    summary(net, (3, 224, 224))

That's the end of this post. Feel free to leave a comment to discuss!