
I. Introduction

1) Official website: http://spark.apache.org/

2) Documentation: https://spark.apache.org/docs/3.1.3/

3) Downloads: https://spark.apache.org/downloads.html (release archive: https://archive.apache.org/dist/spark/)

II. Installation

1. Introduction

Spark deployments fall broadly into two categories: single-machine mode and cluster mode.

Most distributed frameworks support a single-machine mode, which makes it easy for developers to debug the framework's runtime environment. It is not used in production, however, so after a short look at Local mode the rest of this article concentrates on the cluster modes.

The following is a detailed list of the deployment modes that Spark currently supports.

- Local mode: runs a single Spark instance on the local machine.

- Standalone mode: uses the resource and task scheduler that ships with Spark. (Commonly used in China)

- YARN mode: Spark uses Hadoop's YARN component for resource and task scheduling. (Most commonly used in China)

- Mesos mode: Spark uses the Mesos platform for resource and task scheduling. (Rarely used in China)

2. Local deployment (Local mode)

2.1 Installation

Local mode runs Spark on a single machine and is typically used for practice and testing.

wget https://gitcode.net/weixin_44624117/software/-/raw/master/software/Linux/Spark/spark-3.4.1-bin-hadoop3.tgzCreating Folders

mkdir /opt/moduleUnzip the file

tar -zxvf spark-3.4.1-bin-hadoop3.tgz -C /opt/module/Changing the file name

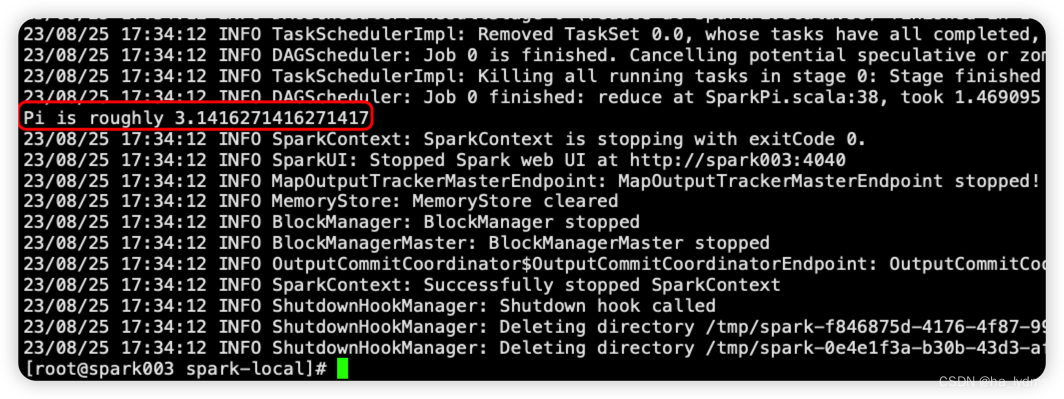

mv /opt/module/spark-3.4.1-bin-hadoop3/ /opt/module/spark-localOfficial request for PI cases

cd /opt/module/spark-local

bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master local[2] \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10

Parameter description:

--class: the main class of the program to execute.

--master local[2]: the master to run on. In Local mode it takes one of three forms:

(1) local: no thread count is specified, so all computation runs in a single thread with no parallelism.

(2) local[K]: runs the computation with K worker threads; for example, local[2] uses 2 threads.

(3) local[*]: uses as many worker threads as the machine has logical CPU cores; for example, on an 8-core CPU Spark runs 8 threads. This is also the value spark-submit and spark-shell fall back to when no master is configured.

spark-examples_2.12-3.4.1.jar: the jar that contains the program to run.

10: the input argument of the program. For SparkPi it is the number of slices the sampling is split into; more slices mean more random samples and usually a more accurate estimate of π.
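The three Local-mode master forms can be illustrated with a small helper. This is only a sketch: the function name local_master is made up for illustration, and the core count reported by getconf is an approximation of what local[*] resolves to (the JVM may see a different number, for example inside a container).

```shell
# Build a --master value for Local mode (hypothetical helper, not part of Spark).
#   no argument -> local      (single thread)
#   a number K  -> local[K]   (K worker threads)
#   "*"         -> local[*]   (one thread per logical core)
local_master() {
  if [ -z "${1:-}" ]; then
    echo "local"
  else
    echo "local[$1]"
  fi
}

# Logical cores reported by the operating system.
cores=$(getconf _NPROCESSORS_ONLN)
echo "local[*] on this machine is roughly equivalent to $(local_master "$cores")"
```

The resulting string is what would be passed to spark-submit after --master.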

The example estimates π with the Monte Carlo method.
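To make the idea concrete, here is a minimal single-process sketch of a Monte Carlo estimate of π, written with awk. It is not the SparkPi source: the function name estimate_pi, the fixed seed, and the sample count are assumptions chosen for illustration. Random points are drawn in the square from -1 to 1 on both axes, and the fraction that lands inside the unit circle approximates π/4.

```shell
# Monte Carlo estimate of pi (illustrative sketch, runs locally without Spark).
estimate_pi() {
  samples="$1"
  awk -v n="$samples" 'BEGIN {
    srand(42)                          # fixed seed so repeated runs agree
    inside = 0
    for (i = 0; i < n; i++) {
      x = rand() * 2 - 1               # uniform point in [-1, 1)
      y = rand() * 2 - 1
      if (x * x + y * y <= 1) inside++ # point falls inside the unit circle
    }
    # circle area / square area = pi / 4
    printf "%.4f\n", 4 * inside / n
  }'
}

estimate_pi 100000
```

Raising the sample count narrows the error, which is the same effect as passing a larger argument to SparkPi; Spark simply spreads the sampling across threads or executors.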

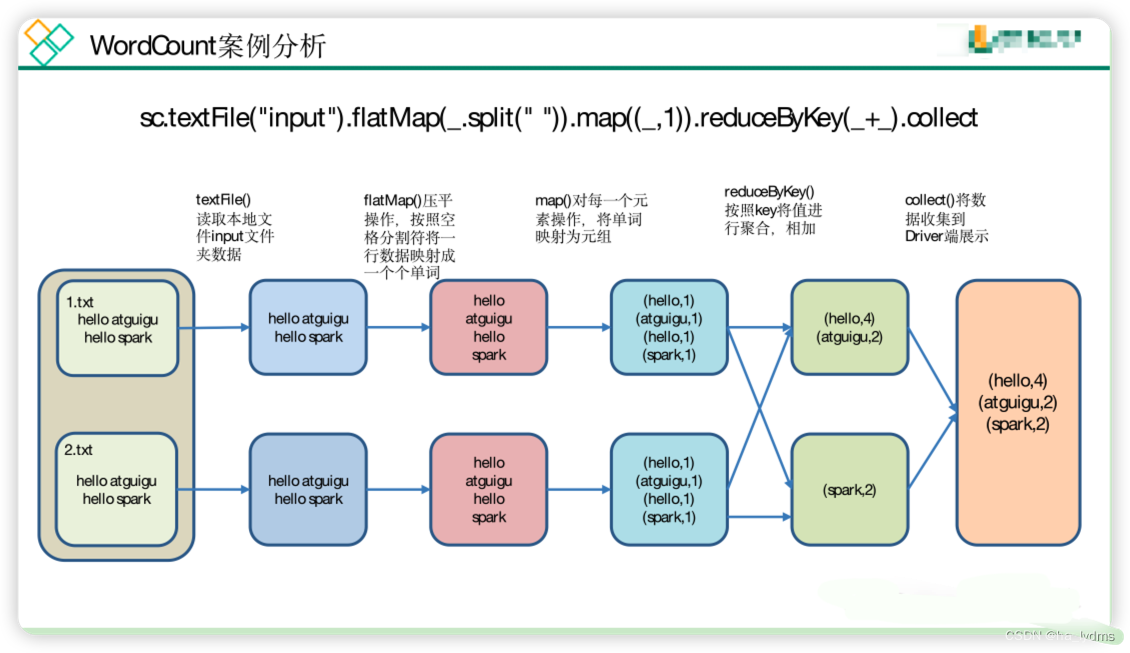

2.2 Official WordCount example

Prepare the data. Create an input folder:

mkdir input

Create a text file in the input folder with the following content:

hello atguigu

hello spark

Start spark-shell:

bin/spark-shell

Run the word count:

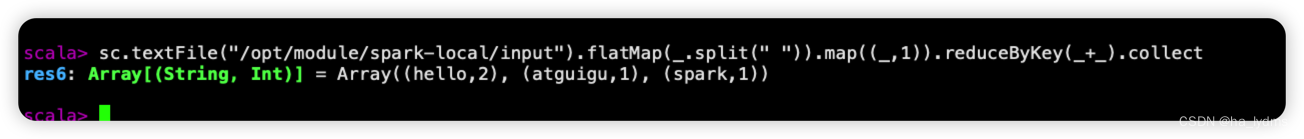

scala> sc.textFile("/opt/module/spark-local/input").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).collect

res6: Array[(String, Int)] = Array((hello,2), (atguigu,1), (spark,1))
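As a rough cross-check outside Spark, the same steps (split each line into words, group identical words, count each group) can be sketched as a shell pipeline. The function name word_count, the temporary folder, and the file name word.txt are made up for illustration; Spark itself is not involved.

```shell
# Word count over every file in a folder (illustrative sketch, not Spark).
word_count() {
  # tr:      one word per line, like flatMap(_.split(" "))
  # sort:    brings identical words together
  # uniq -c: counts each group, like map((_,1)).reduceByKey(_+_)
  # awk:     prints "word count" instead of "count word"
  cat "$1"/* | tr -s ' ' '\n' | sort | uniq -c | awk '{ print $2, $1 }'
}

# Demo data matching the two input lines used above.
demo_dir=$(mktemp -d)
printf 'hello atguigu\nhello spark\n' > "$demo_dir/word.txt"
word_count "$demo_dir"
```

The counts should agree with the Array returned by spark-shell, although the order of the entries may differ.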

View execution results:

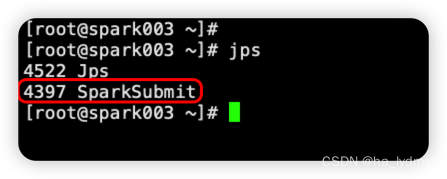

In another terminal window connected to the host, a SparkSubmit process is now running.

The web UI at hadoop102:4040 is available only while spark-shell is running; it shuts down when the spark-shell window is closed.

3. Standalone mode

3.1 Introduction

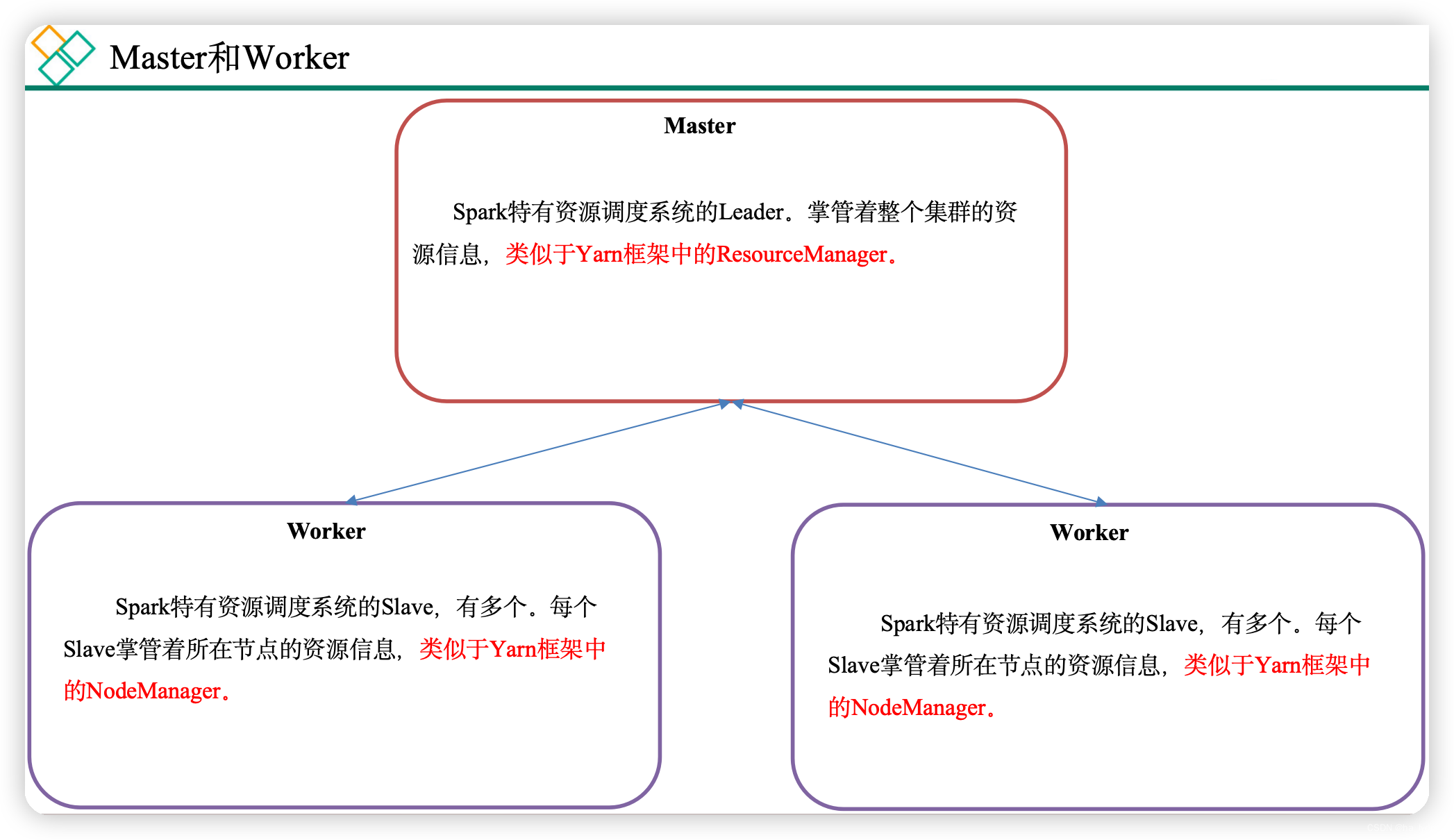

Standalone mode uses the resource scheduling engine that ships with Spark to build a cluster of one Master plus several Workers, and Spark jobs run inside that cluster.

This is different from Standalone in Hadoop. Here, Standalone means the cluster is built with Spark alone, without relying on other frameworks such as Hadoop's Yarn or Mesos.

Master and Worker are Spark daemons that act as the cluster resource manager: they are the resident background processes Spark needs in order to run in this particular mode (Standalone).

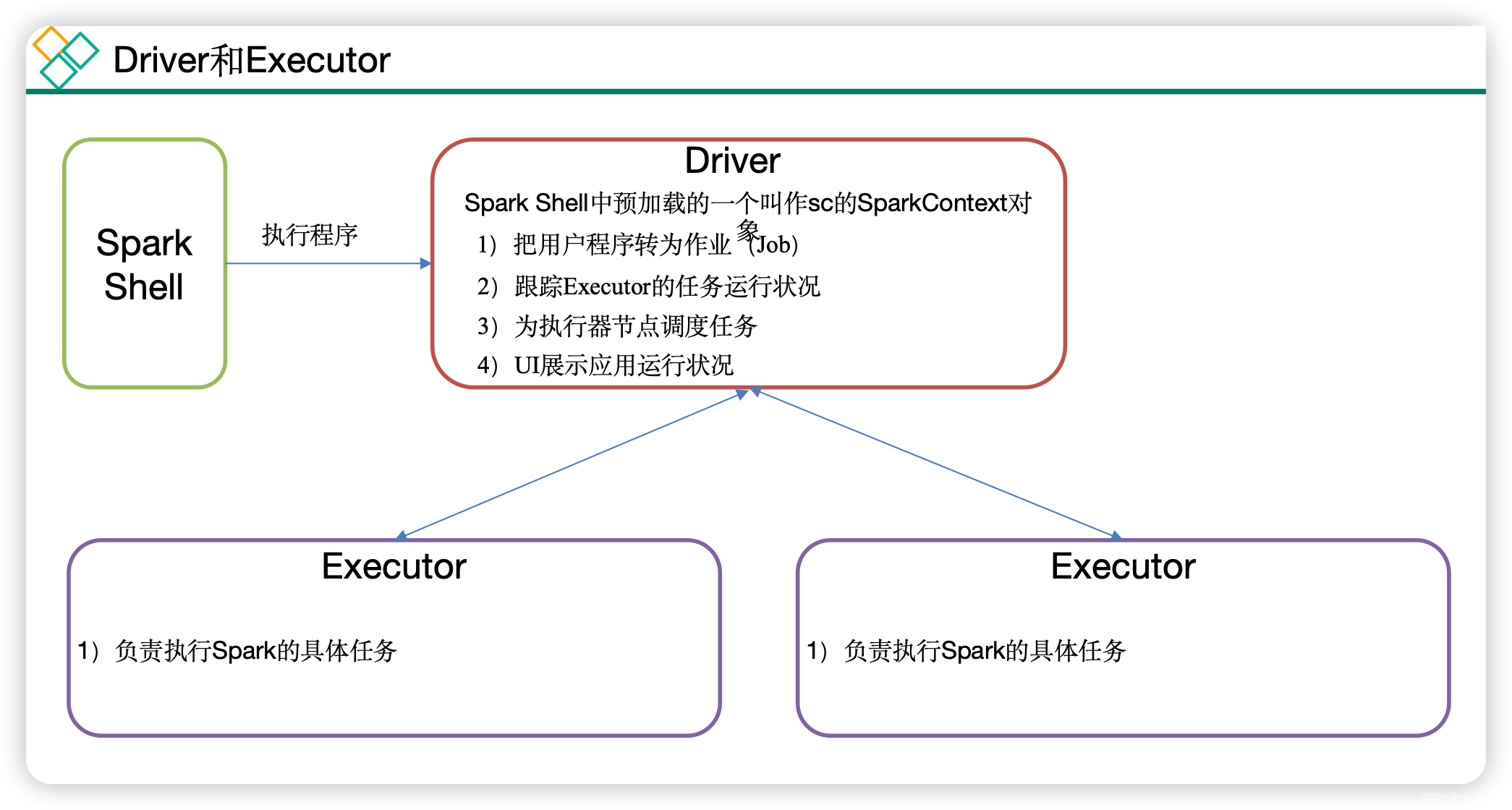

- Driver and Executor are temporary processes that are started only when a specific job is submitted to the Spark cluster.

3.2 Installing the cluster

| | hadoop101 | hadoop102 | hadoop103 |
|---|---|---|---|
| Spark | Master, Worker | Worker | Worker |

Unzip the file

tar -zxvf spark-3.4.1-bin-hadoop3.tgz -C /opt/module/Rename a folder

mv /opt/module/spark-3.4.1-bin-hadoop3 /opt/module/spark-standaloneincreaseWorkernodal

cd /opt/module/spark-standalone/conf/

vim slaveshadoop101

hadoop102

hadoop103

increaseMasternodal

mv spark-env.sh.template spark-env.sh

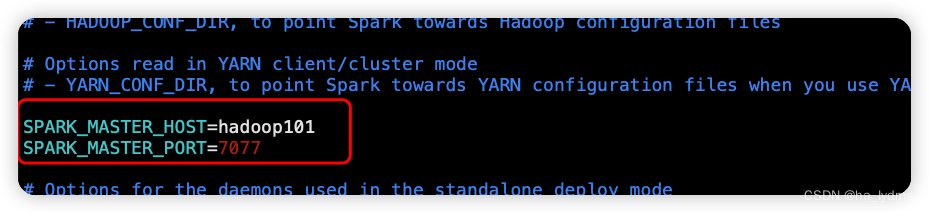

vim spark-env.shSPARK_MASTER_HOST=hadoop101

SPARK_MASTER_PORT=7077

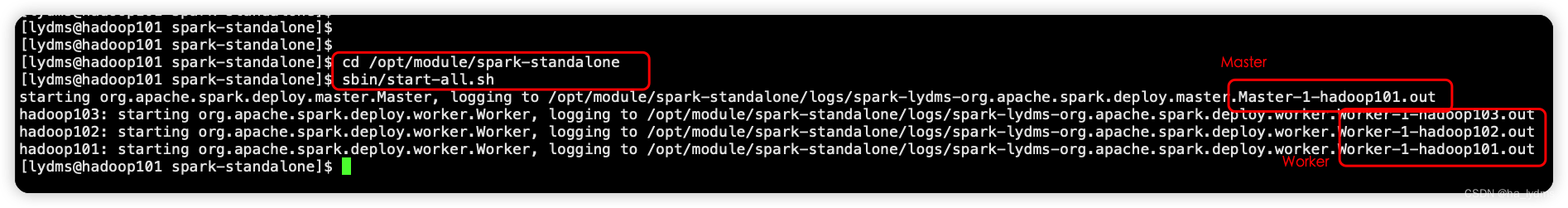

cd /opt/module/spark-standalone

sbin/start-all.sh

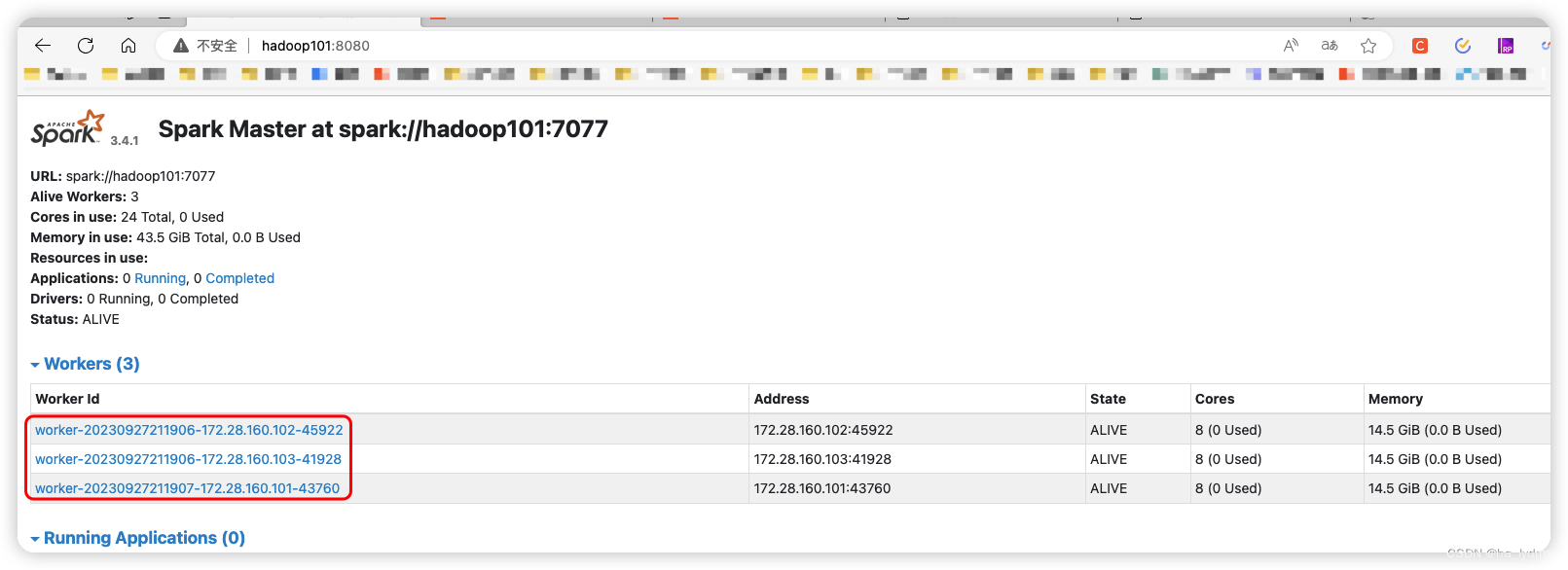

View page:http://hadoop101:8080

-

8080: master’s webUI

-

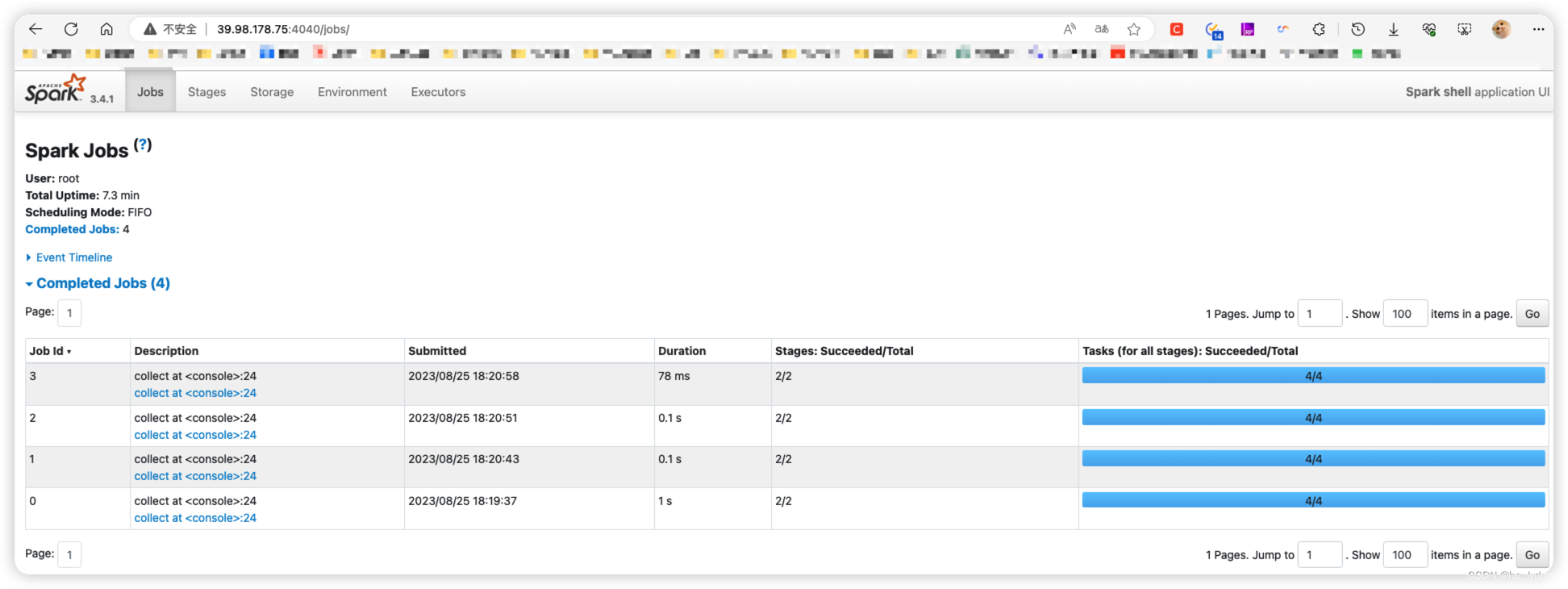

4040: Port number of the application’s webUI

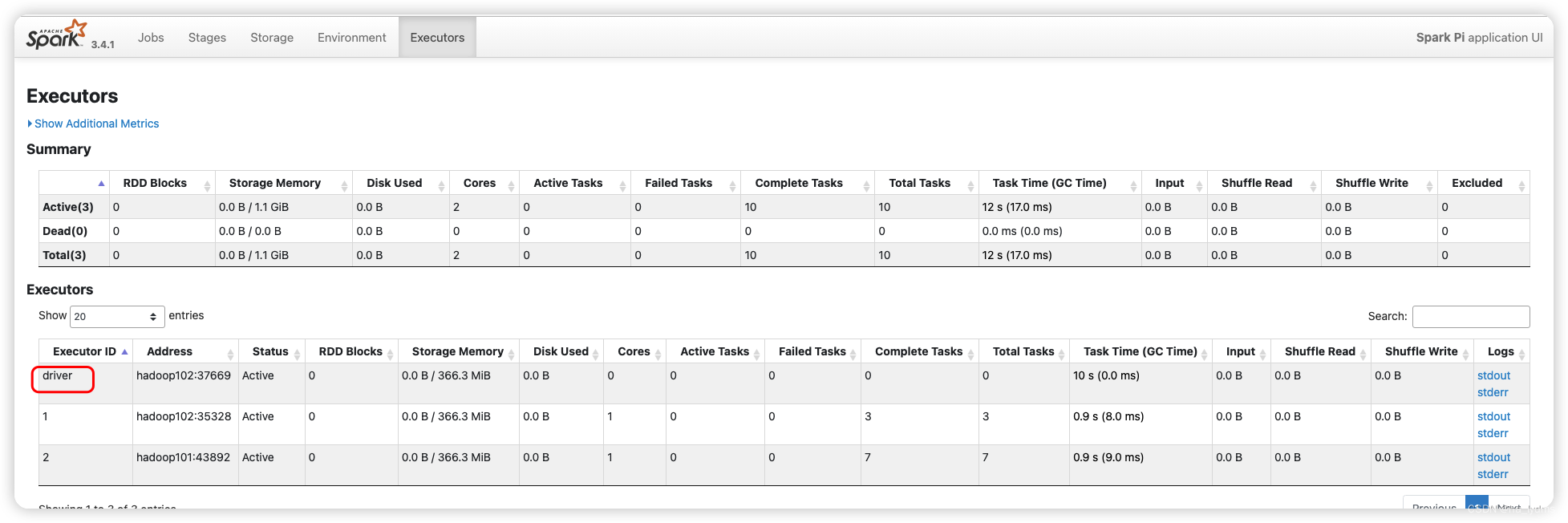

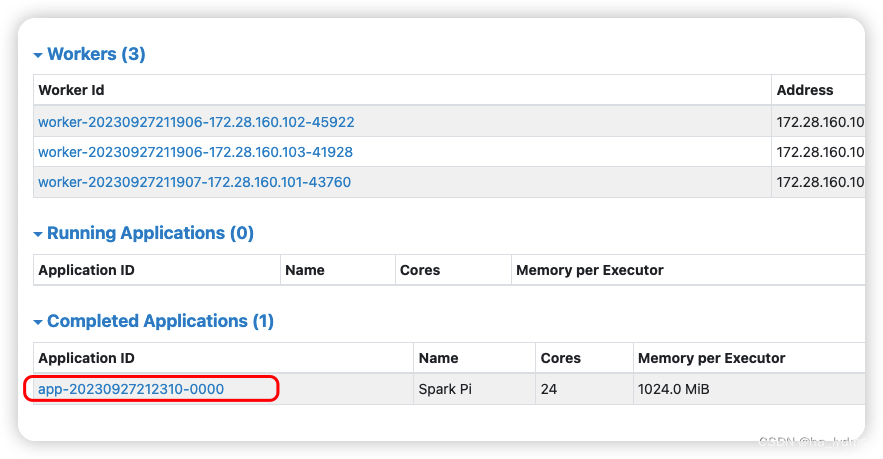

3.3 Official test case

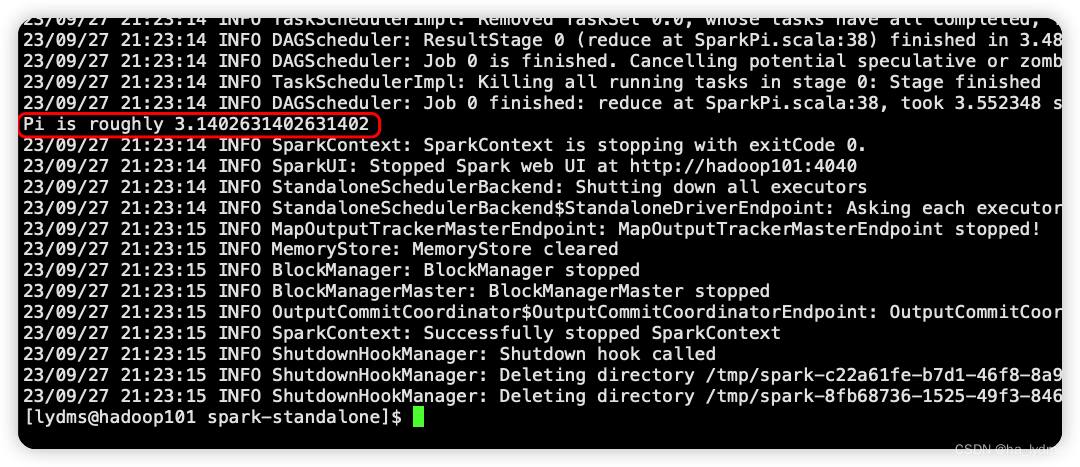

bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://hadoop101:7077 \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10

Execution results:

- 8080: the Master's web UI

- 4040: the application's web UI

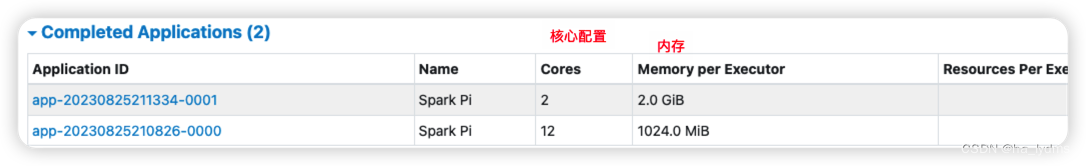

Submitting with resource parameters:

./bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master spark://hadoop101:7077 \

--executor-memory 2G \

--total-executor-cores 2 \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10

Parameter description:

--executor-memory: each executor gets 2G of available memory.

--total-executor-cores: all executors together use 2 CPU cores.

| Parameter | Description | Example values |
|---|---|---|
| --class | The class in the Spark program that contains the main function | |
| --master | The mode the Spark program runs in | local[*], spark://hadoop101:7077, yarn |
| --executor-memory 1G | Gives each executor 1G of available memory | Any value that fits the cluster's memory configuration; decide case by case |
| --total-executor-cores 2 | Sets the total number of CPU cores used by all executors to 2 | |
| application-jar | The packaged application jar, including its dependencies. The URL must be visible from everywhere in the cluster: for example an hdfs:// path on shared storage, or a file:// path that holds the same jar on every node | |
| application-arguments | Arguments passed to the main() method | |
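The order of the pieces matters: options come first, then the application jar, and everything after the jar is handed to main() as application arguments. The following sketch only prints the command instead of running it; the helper name build_submit_cmd is made up for illustration, and the values are the ones used in this article.

```shell
# Assemble (but do not run) a spark-submit command line.
# Usage: build_submit_cmd MASTER EXECUTOR_MEMORY TOTAL_CORES JAR [APP_ARGS...]
build_submit_cmd() {
  master="$1"; memory="$2"; cores="$3"; jar="$4"
  shift 4
  # Options first, then the jar, then the application arguments.
  printf 'bin/spark-submit --class %s --master %s --executor-memory %s --total-executor-cores %s %s %s\n' \
    "org.apache.spark.examples.SparkPi" "$master" "$memory" "$cores" "$jar" "$*"
}

build_submit_cmd spark://hadoop101:7077 2G 2 ./examples/jars/spark-examples_2.12-3.4.1.jar 10
```

Printing the command first is a cheap way to review the flags before submitting to a shared cluster.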

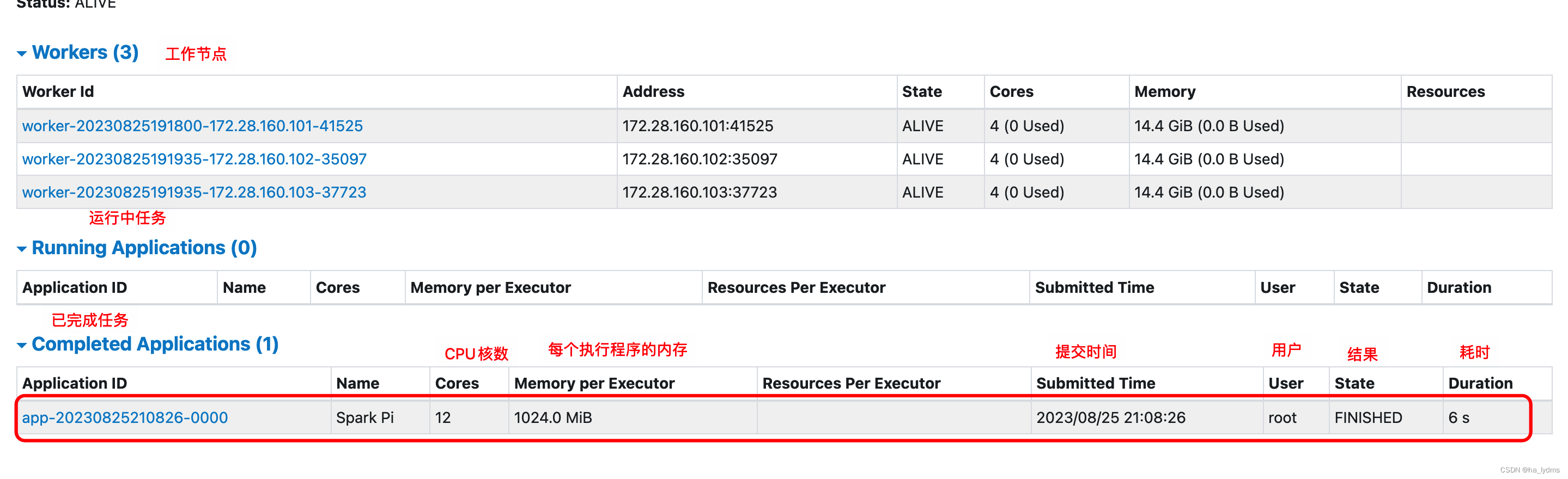

4. Yarn mode

- The Spark client connects directly to Yarn, so no separate Spark cluster needs to be built.

- A single server that can submit jobs to Yarn is sufficient.

4.1 Installation

Download the installation package:

wget https://gitcode.net/weixin_44624117/software/-/raw/master/software/Linux/Spark/spark-3.4.1-bin-hadoop3.tgz

Unzip the installation package:

tar -zxvf spark-3.4.1-bin-hadoop3.tgz -C /opt/module/

Rename the directory:

mv /opt/module/spark-3.4.1-bin-hadoop3 /opt/module/spark-yarn

Rename the environment template:

mv /opt/module/spark-yarn/conf/spark-env.sh.template /opt/module/spark-yarn/conf/spark-env.sh

Add the configuration:

vim /opt/module/spark-yarn/conf/spark-env.sh

# Add configuration content (Yarn configuration file address)

YARN_CONF_DIR=/opt/module/hadoop-3.1.3/etc/hadoop

Submit the job.

Parameters: --master yarn runs the job on Yarn. No --deploy-mode is given here, so the program runs in client mode, which is the default.

bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master yarn \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10

3.2 Configuring the History Server

Moving Profiles

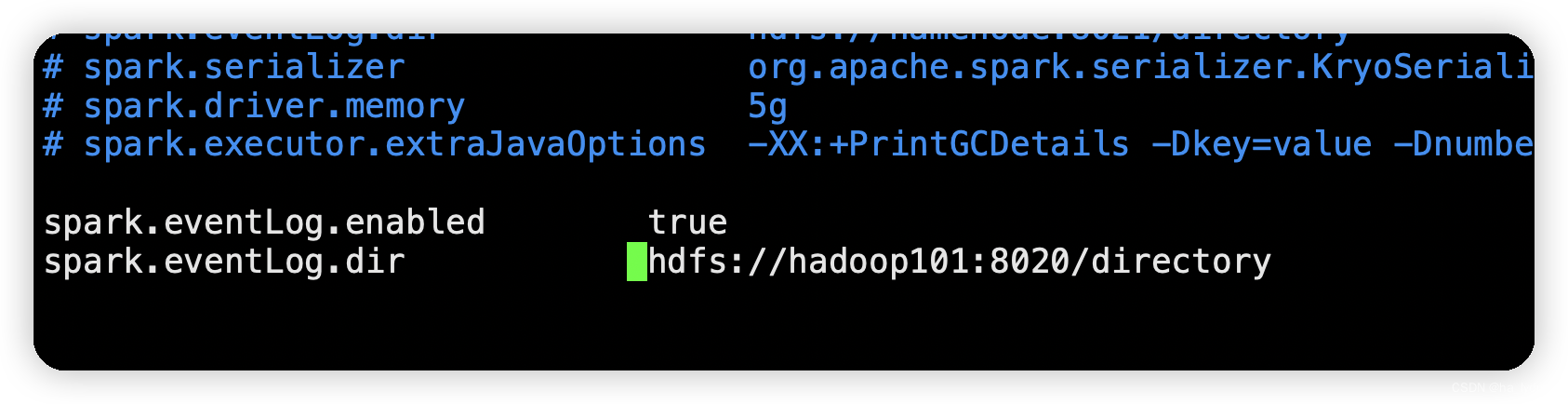

mv /opt/module/spark-yarn/conf/spark-defaults.conf.template /opt/module/spark-yarn/conf/spark-defaults.confModify the configuration file

cd /opt/module/spark-yarn/conf

vim spark-defaults.confspark.eventLog.enabled true

spark.eventLog.dir hdfs://hadoop101:8020/directory

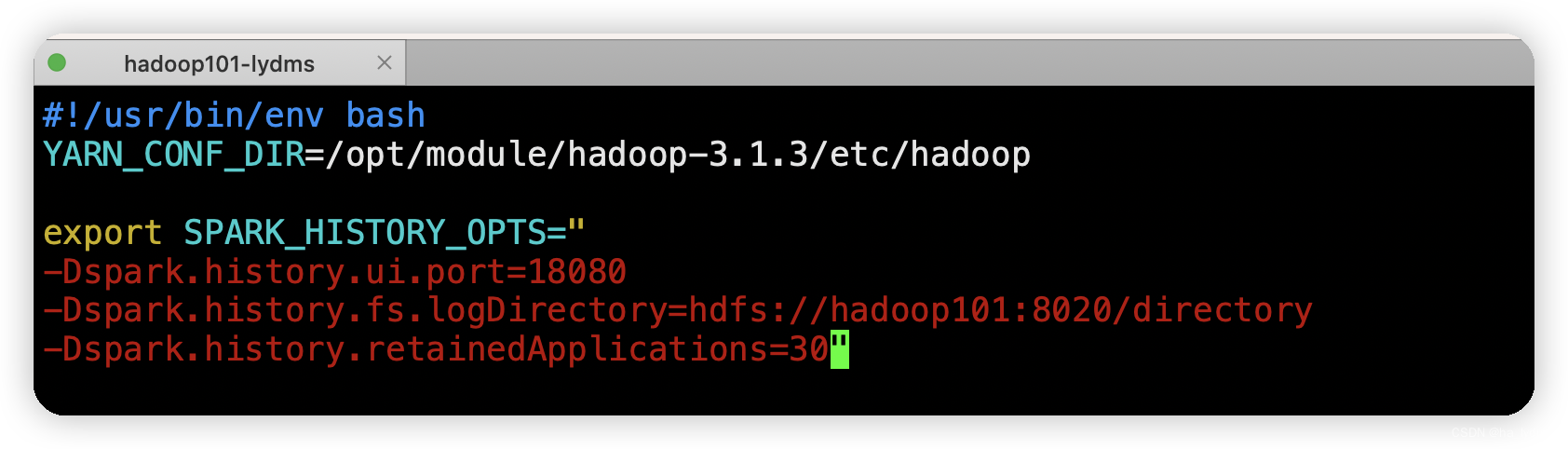

Modify the configuration file spark-env.sh:

vim spark-env.sh

export SPARK_HISTORY_OPTS="

-Dspark.history.ui.port=18080

-Dspark.history.fs.logDirectory=hdfs://hadoop101:8020/directory

-Dspark.history.retainedApplications=30"

Configuration description:

- -Dspark.history.ui.port=18080: the history server web UI is served on port 18080.

- -Dspark.history.fs.logDirectory: the path from which the history server reads the event logs.

- -Dspark.history.retainedApplications: the number of applications whose history is kept. When this value is exceeded, the oldest application information is dropped. This is the number of applications held in memory, not the number of applications displayed on the page.
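Applications write event logs to spark.eventLog.dir and the history server reads them from spark.history.fs.logDirectory, so the two paths need to point at the same location. A small consistency check can be sketched as follows. The function name check_history_dirs and the temporary demo files are made up for illustration, and the parsing assumes the whitespace-separated spark-defaults.conf layout shown in this section.

```shell
# Compare the event log write path with the history server read path.
# Usage: check_history_dirs SPARK_DEFAULTS_CONF SPARK_ENV_SH
check_history_dirs() {
  # spark-defaults.conf: "key value" separated by whitespace
  write_dir=$(awk '$1 == "spark.eventLog.dir" { print $2 }' "$1")
  # spark-env.sh: -Dspark.history.fs.logDirectory=<path>
  read_dir=$(sed -n 's/.*-Dspark\.history\.fs\.logDirectory=\([^ "]*\).*/\1/p' "$2")
  if [ -n "$write_dir" ] && [ "$write_dir" = "$read_dir" ]; then
    echo "match: $write_dir"
  else
    echo "mismatch: eventLog.dir=$write_dir logDirectory=$read_dir"
    return 1
  fi
}

# Demo with throwaway copies of the two settings used in this section.
demo=$(mktemp -d)
printf 'spark.eventLog.enabled true\nspark.eventLog.dir hdfs://hadoop101:8020/directory\n' > "$demo/spark-defaults.conf"
printf 'export SPARK_HISTORY_OPTS="\n-Dspark.history.ui.port=18080\n-Dspark.history.fs.logDirectory=hdfs://hadoop101:8020/directory\n-Dspark.history.retainedApplications=30"\n' > "$demo/spark-env.sh"
check_history_dirs "$demo/spark-defaults.conf" "$demo/spark-env.sh"
```

Against a real installation the two arguments would be the files under /opt/module/spark-yarn/conf.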

4.3 Viewing historical logs from Yarn

To link from the Yarn page to the Spark History Server, configure the address of the Spark History Server in Spark.

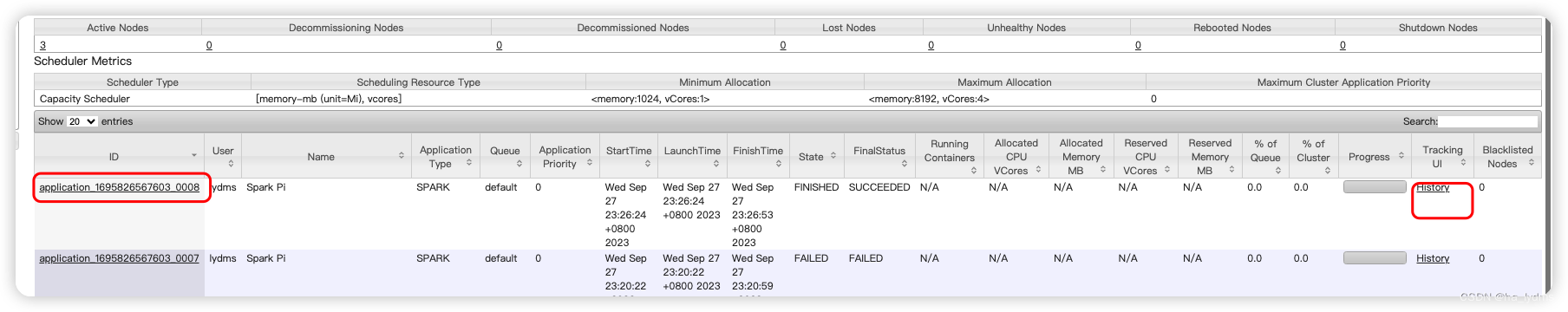

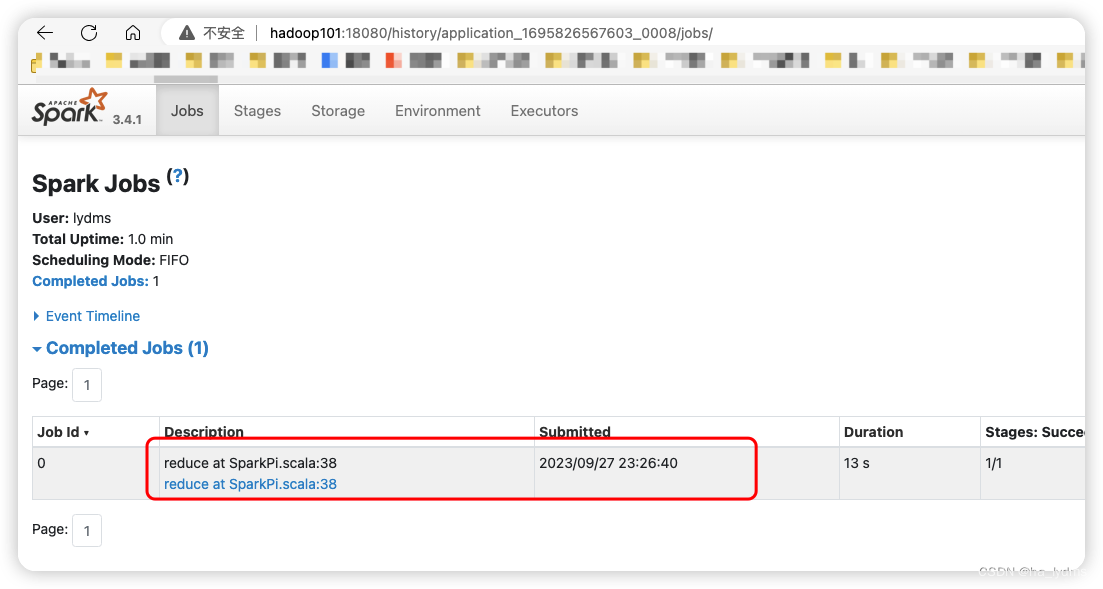

Purpose: clicking the HISTORY button of a Spark job on the Yarn page (8088) now opens the Spark History Server (18080) instead of the Yarn history server (19888).

Modify the configuration file /opt/module/spark-yarn/conf/spark-defaults.conf:

vim /opt/module/spark-yarn/conf/spark-defaults.conf

spark.yarn.historyServer.address=hadoop101:18080

spark.history.ui.port=18080

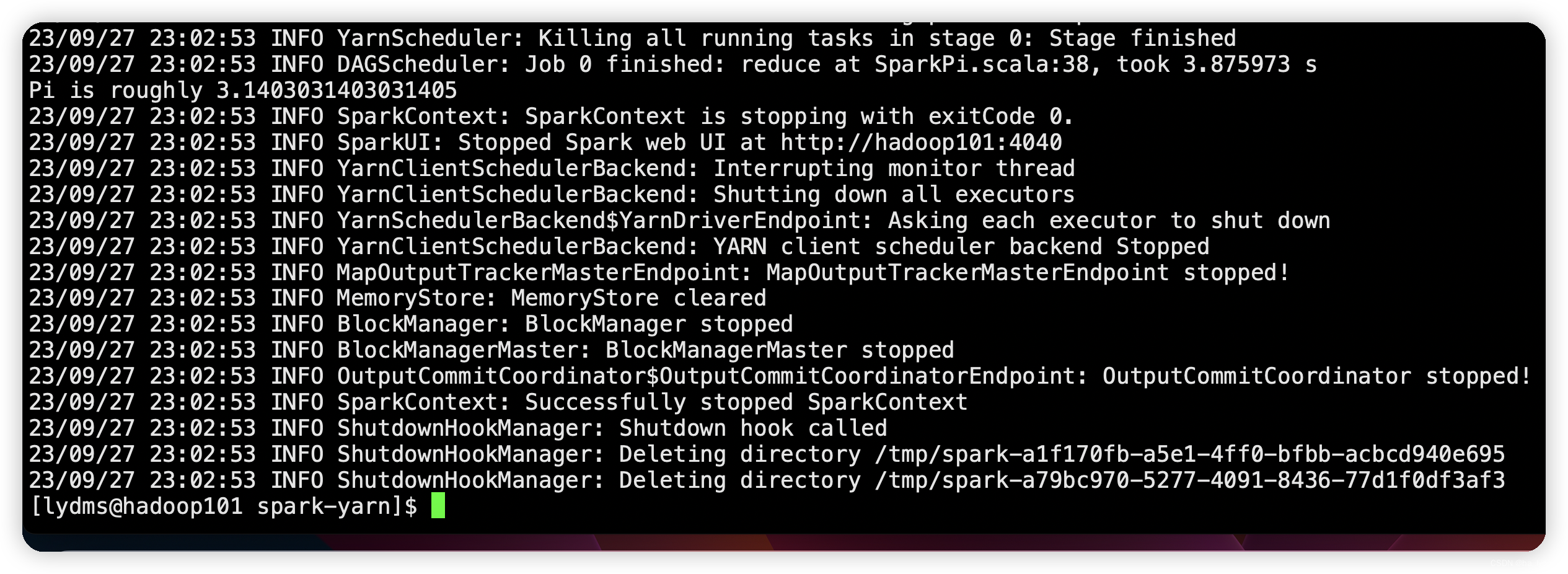

Start the History Server:

cd /opt/module/spark-yarn

sbin/start-history-server.sh

Resubmit the job:

bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master yarn \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10

View the historical jobs at: http://hadoop102:8088/cluster

5. Mesos mode

The Spark client connects directly to Mesos, so no separate Spark cluster needs to be built. Mesos is used relatively little in China, where Yarn scheduling is more common.

6. Comparison of the modes

| Mode | Machines with Spark installed | Processes to start | Belongs to |
|---|---|---|---|
| Local | 1 | None | Spark |
| Standalone | 3 | Master and Worker | Spark |
| Yarn | 1 | Yarn and HDFS | Hadoop |

7. Common ports

- 4040: web UI for the jobs of the currently running application, such as a spark-shell session.

- 7077: Spark Master internal communication service port (analogous to Yarn's port 8032, internal communication between RM and NM).

- 8080: Master web UI port in Spark Standalone mode (analogous to port 8088, where Hadoop Yarn shows running jobs); (Yarn mode) 8989.

- 18080: Spark history server port (analogous to the Hadoop history server port 19888).

III. Yarn mode details

1. Introduction

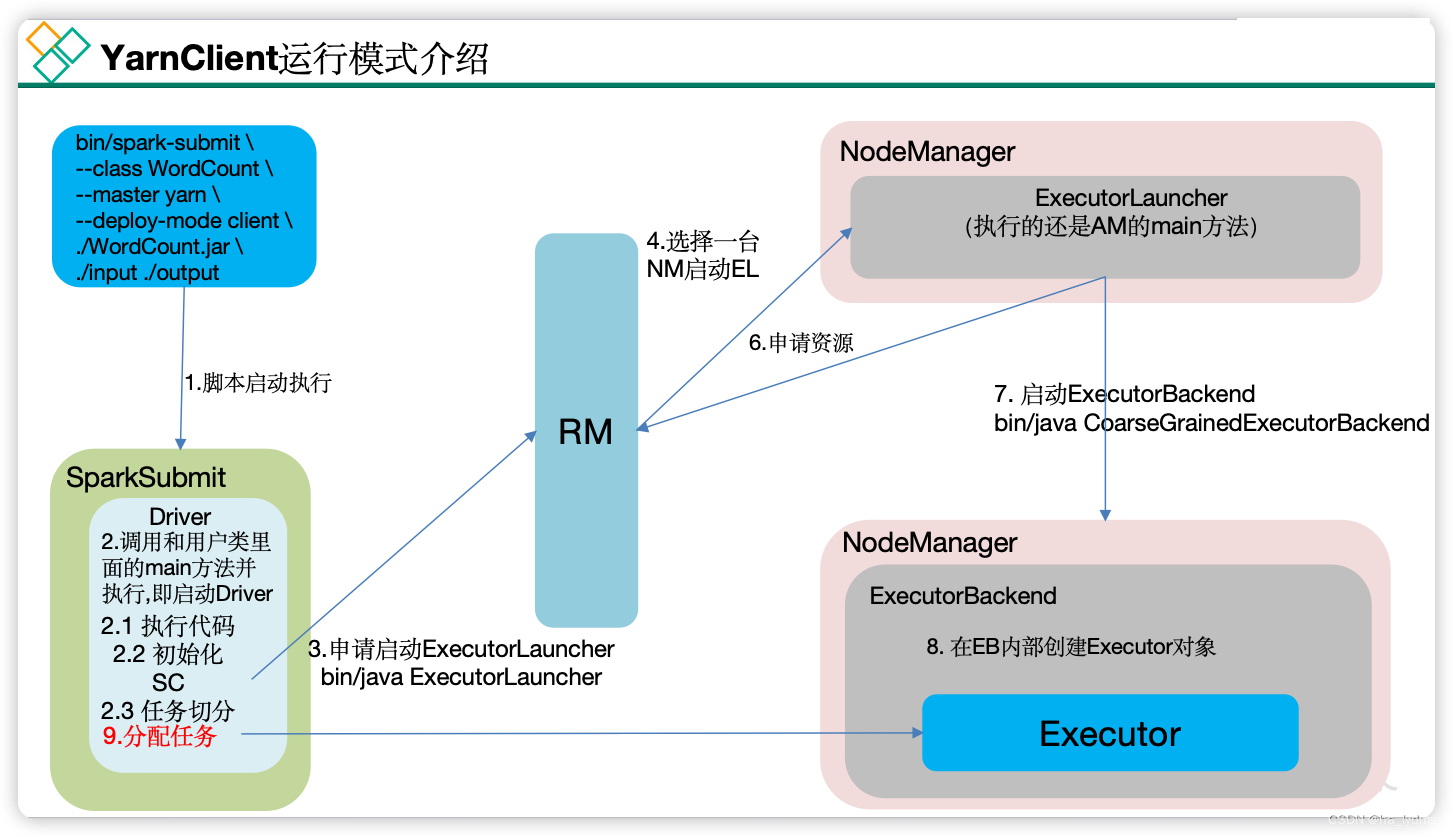

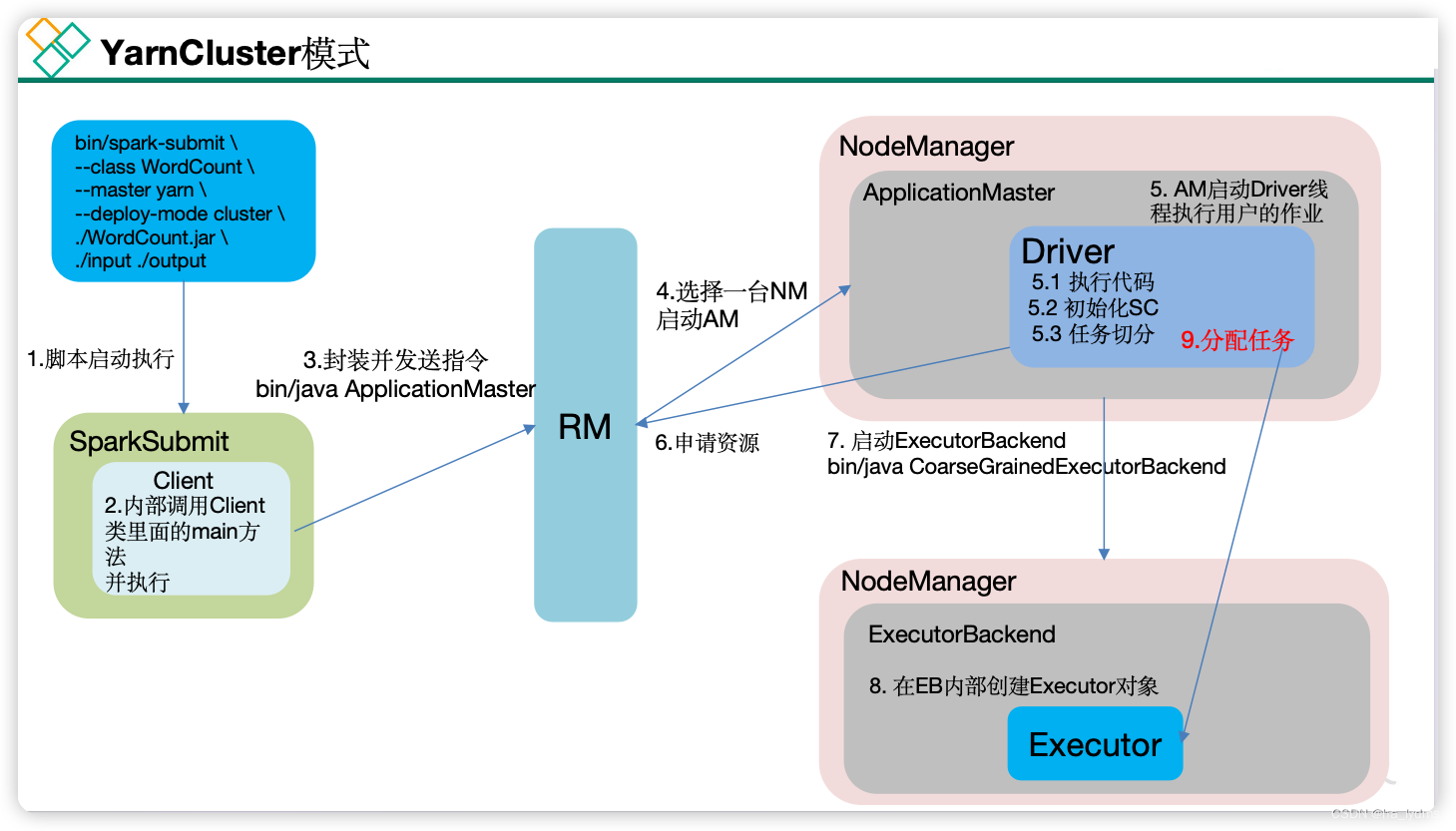

Spark has two Yarn modes, yarn-client and yarn-cluster. The main difference is the node on which the Driver program runs.

yarn-client: the Driver program runs on the client. This suits interactive work and debugging, when you want to see the application's output immediately.

yarn-cluster: the Driver program runs in the ApplicationMaster started by the ResourceManager. This suits production environments.

2. Client mode

Start in client mode:

bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master yarn \

--deploy-mode client \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10

3. Cluster mode

Start in cluster mode:

bin/spark-submit \

--class org.apache.spark.examples.SparkPi \

--master yarn \

--deploy-mode cluster \

./examples/jars/spark-examples_2.12-3.4.1.jar \

10