Some scripts for file processing in computer vision.

First, be clear about the JSON format of your own dataset, then convert the dataset to the format YOLO requires.

#Json files with different attributes extract different information

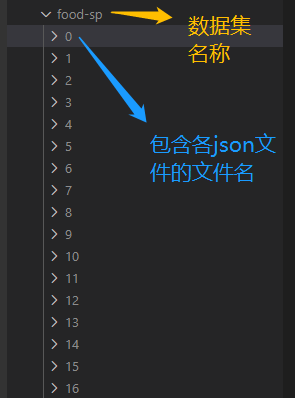

Own dataset

Folder format

JSON file format

[
  {
    "Code Name": "A270332XX_00871.jpg",
    "Name": "galibi",
    "W": "0.564815",
    "H": "0.587961",
    "File Format": "jpg",
    "Cat 1": "27",
    "Cat 2": "03",
    "Cat 3": "32",
    "Cat 4": "xx",
    "Annotation Type": "binding",
    "Point(x,y)": "0.587963,0.522375",
    "Label": "0",
    "Serving Size": "xx",
    "Camera Angle": "xx",
    "Cardinal Angle": "xx",
    "Color of Container": "xx",
    "Material of Container": "xx",
    "Illuminance": "xx"
  }
]

What needs to be extracted is:

Width "W": "0.564815",

Height "H": "0.587961",

Normalized x, y values "Point(x,y)": "0.587963,0.522375" (note: both numbers sit inside a single double-quoted string, separated by a comma),

Label "Label": "0".

Here W and H are the width and height of an object after normalization, so you don't need to normalize the values yourself; just iterate through all the files and save them. (In most other annotation files, the width and height fields hold the original width and height of the photo.)
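As a minimal sketch of the extraction described above, the function below turns one record into a space-separated `label x y w h` line. The function name `record_to_yolo_line` is hypothetical, and treating "Point(x,y)" as the box center is an assumption that the source does not confirm. Unlike the script in this article, which writes the "Point(x,y)" string as-is (comma included), this sketch splits it into two numbers.

```python
def record_to_yolo_line(record: dict) -> str:
    """Build one 'label x y w h' line from a record in the JSON format above."""
    # "Point(x,y)" holds both numbers in one string, e.g. "0.587963,0.522375".
    x_str, y_str = (part.strip() for part in record["Point(x,y)"].split(","))
    # Converting to float rejects malformed values early.
    x, y = float(x_str), float(y_str)
    w, h = float(record["W"]), float(record["H"])
    return f"{int(record['Label'])} {x:.6f} {y:.6f} {w:.6f} {h:.6f}"


# Sample values taken from the JSON record shown above.
sample = {
    "W": "0.564815",
    "H": "0.587961",
    "Point(x,y)": "0.587963,0.522375",
    "Label": "0",
}
print(record_to_yolo_line(sample))  # 0 0.587963 0.522375 0.564815 0.587961
```

Whether the comma needs to be split depends on what your training code expects; check one generated txt file before converting the whole dataset.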

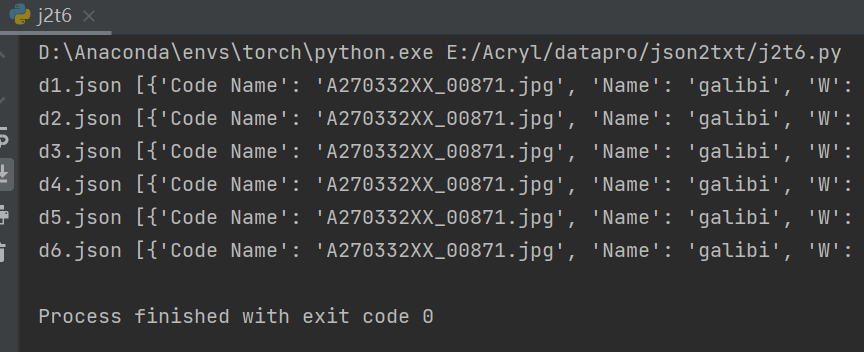

The figure above shows the attributes of the JSON file that are used in the extraction below.

The code is explained directly below.

For my work I only needed the point coordinates and labels from each image's JSON file, so only those attributes (plus W and H) are extracted; add other fields as needed.

jpath and dir_txt are the path where your JSON files are located and the path where the txt files are generated.

The final for loop iterates over the JSON files and generates one txt file, with its contents, for each of them.

Implementation code

import json
import os
from pathlib import Path  # used for the recursive search


def json2txt(path_json, path_txt):
    # Read one JSON annotation file (a list of records)
    with open(path_json, 'r', encoding='gb18030') as fjson:
        jsonx = json.load(fjson)
    # Write one line per record: label, point, width, height
    with open(path_txt, 'w+') as ftxt:
        for shape in jsonx:
            xy = shape["Point(x,y)"]  # the string "x,y", written as-is (comma included)
            label = shape["Label"]
            w = shape["W"]
            h = shape["H"]
            strxy = ' '
            ftxt.writelines(str(label) + strxy + str(xy) + strxy + str(w) + strxy + str(h) + "\n")


# dir_json = r'E:\Acryl\datapro\jsonfileall\**\**.json'
jpath = Path('/workspace/yolo/data/dataset/f001json/')
dir_txt = '/workspace/yolo/data/dataset/f5/txtfile/'
if not os.path.exists(dir_txt):
    os.makedirs(dir_txt)

# rglob matches a wildcard pattern against every file under the Path, recursively,
# and returns a generator that can be traversed with for or next.
for p in jpath.rglob("*.json"):
    # print(p)
    # Same file name as the JSON file, with a .txt extension, inside dir_txt
    path_txt = os.path.join(dir_txt, p.name.replace('.json', '.txt'))
    # print(p, path_txt)
    json2txt(p, path_txt)

Data Preprocessing Extension

1. Use the pandas library to read the file single json file code (optional)

# Read JSON files as Pandas types

import pandas as pd

# Single json file test

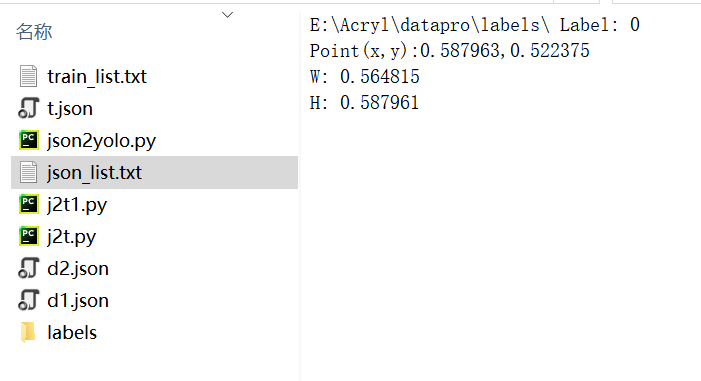

df = pd.read_json('E:\datapro\d1.json')2. Test a single json file to extract the required information and write it to a txt file (optional)

import json

person_dict = {}
# Output text; as in the original, it starts with this path string
final_dict = 'E:\\Acryl\\datapro\\labels\\'

# Open the JSON file and load its content into person_dict
with open(r'E:\Acryl\datapro\d1.json') as f:
    person_dict = json.load(f)

# The top level of the JSON is a list; go through each record in it
for data in person_dict:
    try:
        final_dict = final_dict + ("Label: " + data['Label'] + "\n")
        final_dict = final_dict + ("Point(x,y):" + data['Point(x,y)'] + "\n")
        final_dict = final_dict + ("W: " + data['W'] + "\n")
        final_dict = final_dict + ("H: " + data['H'] + "\n")
    except KeyError:
        # Skip records that lack one of the fields
        pass

# Write the output as a txt file
with open("json_list.txt", "w") as text_file:
    text_file.write(final_dict)

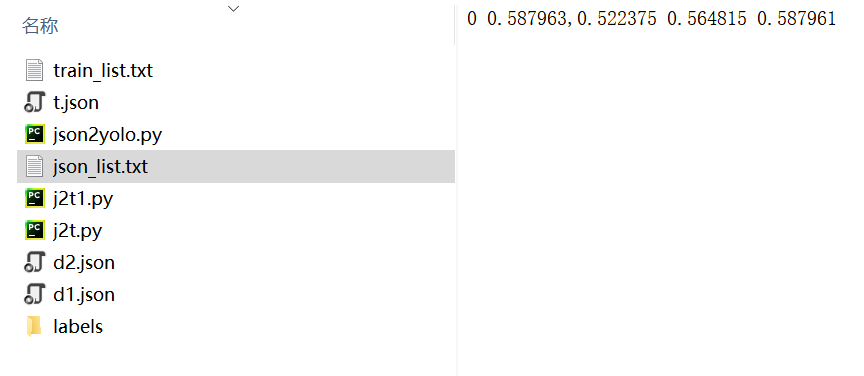

3. YOLO format: test a single JSON file, extract the required information, and write it to a txt file (optional)

import json

person_dict = {}
final_dict = ""  # output text, one line per record

# Open the JSON file and load its content into person_dict
with open(r'E:\Acryl\datapro\d1.json') as f:
    person_dict = json.load(f)

# The top level of the JSON is a list; go through each record in it
for data in person_dict:
    try:
        # Append instead of overwriting, so every record is kept
        final_dict += data['Label'] + ' ' + data['Point(x,y)'] + ' ' + data['W'] + ' ' + data['H'] + '\n'
    except KeyError:
        # Skip records that lack one of the fields
        pass

# Write the output as a txt file
with open("json_list.txt", "w") as text_file:
    text_file.write(final_dict)

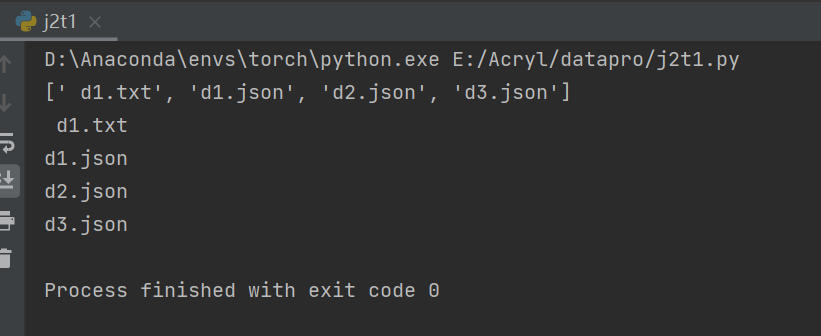

4. Iterate through all JSON files in a folder (optional)

import os

# Read the file names in the folder
fileList = os.listdir('E:/Acryl/datapro/jsonfile/')
print(fileList)

# Loop over the files and read each one
for json_id in fileList:
    print(json_id)
    with open('E:/Acryl/datapro/jsonfile/' + json_id, "r", encoding="utf-8") as f:  # open the file
        data = f.read()  # read the file
    # print(data)  # test: prints all data

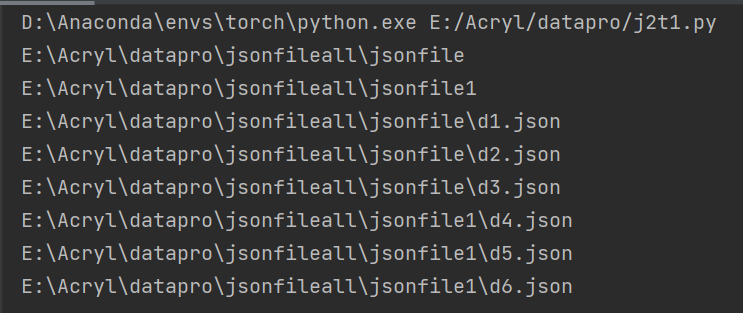

5. View all files in a folder and its subdirectories (optional)

from pathlib import Path

# This only lists the paths; it does not read the files
if __name__ == '__main__':
    p = Path('E:/Acryl/datapro/jsonfileall/')
    # print(p)
    for path in p.rglob("*"):
        print(path)

6. Python: traverse all directories and files in a directory, parse JSON files, and crop sub-images with python-opencv (optional)

import cv2
import os
import json
import numpy as np


def get_dirs(main_dir):
    """Return the paths of all sub-folders under main_dir.

    Each sub-folder holds a number of jpg images and one json file.
    """
    list_dirs = []
    for root, dirs, files in os.walk(main_dir):
        for dir_name in dirs:
            list_dirs.append(os.path.join(root, dir_name))
    return list_dirs


def get_file(dir_path):
    """Return all jpg paths and the json path under dir_path (full paths)."""
    list_jpgs = []
    json_path = None
    for root, dirs, files in os.walk(dir_path):
        for file in files:
            if file.endswith(".jpg"):  # keep the jpg files
                list_jpgs.append(os.path.join(root, file))
            if file.endswith(".json"):  # keep the json file
                json_path = os.path.join(root, file)  # there is only one json file, so no list is needed
    return list_jpgs, json_path


def get_coordinate(json_path):
    """Read the coordinate information from the json file.

    An object in json corresponds to a dict in Python and an array to a list;
    the rest is just their nesting, parsed one level at a time.
    """
    coordinates = []
    with open(json_path, 'rb') as file_json:
        datas = json.load(file_json)
    # datas['shapes'] is a list in which each element is a dict
    for shape in datas['shapes']:
        # shape['points'] holds the coordinates as two [x, y] lists
        coordinates.append(shape['points'])
    return coordinates


# Crop sub-images from the jpg images in each folder, using the coordinates in that folder's json file
if __name__ == '__main__':
    main_dir = r"F:\tubiao"
    i = 0
    dirs = get_dirs(main_dir)  # all sub-folder paths; each holds several photos and one json file
    for dir_path in dirs:  # process the images and json of each sub-folder separately
        print(dir_path)
        j = 0  # crop numbering restarts from 0 in each folder
        list_jpgs, json_path = get_file(dir_path)  # jpg paths and json path inside this sub-folder
        if json_path is None:  # skip folders without a json file
            continue
        coordinates = get_coordinate(json_path)  # coordinates stored in the json file
        for list_jpg in list_jpgs:  # crop every image
            # cv2.imread can't read paths containing Chinese characters, so use imdecode instead
            image = cv2.imdecode(np.fromfile(list_jpg, dtype=np.uint8), cv2.IMREAD_COLOR)
            # Do not add cv2.cvtColor(image, cv2.COLOR_RGB2BGR) here: the saved crops would all change color
            for coordinate in coordinates:  # one crop per coordinate entry
                x1 = int(coordinate[0][0])  # x of the top-left vertex
                y1 = int(coordinate[0][1])  # y of the top-left vertex
                x2 = int(coordinate[1][0])  # x of the bottom-right vertex
                y2 = int(coordinate[1][1])  # y of the bottom-right vertex
                cropImg = image[y1:y2, x1:x2]  # slicing order is y1:y2, x1:x2 (rows first, then columns)
                save_name = str(i) + "_cut" + str(j) + ".jpg"  # "cut" marks a cropped image
                save_path = os.path.join(dir_path, save_name)
                j = j + 1
                # cv2.imwrite can't save to paths containing Chinese characters, so use imencode;
                # [1] is the second return value of imencode, the encoded image data
                cv2.imencode('.jpg', cropImg)[1].tofile(save_path)
            i = i + 1  # keeps crop names unique within each folder

7. Python: traverse all directories and files in a directory, read the JSON file information, and generate a list.txt (optional)

#Y

import json

import os

def readjson():

path = 'E:/Acryl/datapro/jsonfileall/jsonfile/' # contains all json folder locations

files = os.listdir(path)

label_txt = open('E:/Acryl/datapro/jsonfileall/label_txt.txt', mode='w')

for file in files:

f = open(path + '\\' + file, mode='r', encoding='utf-8')

temp = json.loads(f.read())

for temp in temp:

try:

json_str = temp[str('Label')] + ' ' + temp[str('Point(x,y)')] + ' ' + temp[str('W')] + ' ' + temp[str('H')]

except:

pass

Json_str = temp/" Label "+" + \ [STR (" Point (x, y) ")] + ' '+ temp [STR (' W')] + ' '+ temp [STR (' H')] # write TXT file

label_txt.writelines(json_str + '\n')

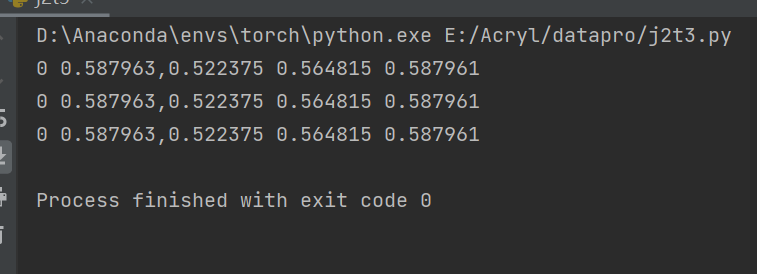

print(json_str) # print the extracted data

label_txt.close()

if __name__ == '__main__':

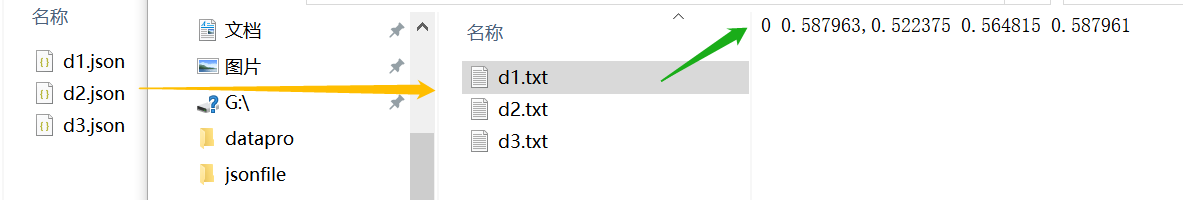

readjson()The effect is as follows

The test data are three json files, json files as above

Run it and output a txt file as follows:

8. Your own dataset's JSON file stores each bbox as four corner values, which need to be normalized into YOLO format
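The normalization that the script below performs can be sketched for a single box. This assumes, as the script's formulas do, that `bbox` is `[x_min, y_min, x_max, y_max]` in pixels; the function name `bbox_to_yolo` and the example numbers are hypothetical.

```python
def bbox_to_yolo(bbox, image_width, image_height):
    """Convert [x_min, y_min, x_max, y_max] in pixels to normalized (cx, cy, w, h)."""
    x_min, y_min, x_max, y_max = bbox
    cx = (x_min + x_max) / 2 / image_width   # box center x, relative to image width
    cy = (y_min + y_max) / 2 / image_height  # box center y, relative to image height
    w = (x_max - x_min) / image_width        # box width, relative to image width
    h = (y_max - y_min) / image_height       # box height, relative to image height
    return cx, cy, w, h


# Hypothetical 200x100 image with a box from (50, 20) to (150, 60)
print(bbox_to_yolo([50, 20, 150, 60], 200, 100))  # (0.5, 0.4, 0.5, 0.4)
```

All four results fall between 0 and 1 when the box lies inside the image, which is a quick sanity check to run on your own annotations.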

import os
import json

# There's only one json file
json_dir = 'train_annos.json'  # json file path
out_dir = 'output/'  # path for the output txt files


def main():
    os.makedirs(out_dir, exist_ok=True)
    # Read the json file data
    with open(json_dir, 'r') as load_f:
        content = json.load(load_f)
    # Process each annotation in turn
    for t in content:
        tmp = t['name'].split('.')
        filename = out_dir + tmp[0] + '.txt'
        # Compute the relative center x, y and the w, h that the YOLO data format needs
        x = (t['bbox'][0] + t['bbox'][2]) / 2 / t['image_width']
        y = (t['bbox'][1] + t['bbox'][3]) / 2 / t['image_height']
        w = (t['bbox'][2] - t['bbox'][0]) / t['image_width']
        h = (t['bbox'][3] - t['bbox'][1]) / t['image_height']
        file_str = str(t['category']) + ' ' + str(round(x, 6)) + ' ' + str(round(y, 6)) + ' ' + str(round(w, 6)) + \
            ' ' + str(round(h, 6))
        # Append mode creates the file if it doesn't exist and adds one line per box;
        # delete old output before re-running, or lines will be duplicated
        with open(filename, mode="a", encoding="utf-8") as fp:
            fp.write(file_str + '\n')


if __name__ == '__main__':
    main()

9. Batch-read the JSON files of your own dataset and write the required information into the corresponding txt files (the format YOLO needs)

import os
import json


def json2txt(path_json, path_txt):
    # Read one JSON annotation file (a list of records)
    with open(path_json, 'r', encoding='gb18030') as fjson:
        jsonx = json.load(fjson)
    # Write one line per record: label, point, width, height
    with open(path_txt, 'w+') as ftxt:
        for shape in jsonx:
            xy = shape["Point(x,y)"]
            label = shape["Label"]
            w = shape["W"]
            h = shape["H"]
            strxy = ' '
            ftxt.writelines(str(label) + strxy + str(xy) + strxy + str(w) + strxy + str(h) + "\n")


dir_json = 'E:/Acryl/datapro/jsonfileall/jsonfile/'
dir_txt = 'E:/Acryl/datapro/labels/'
if not os.path.exists(dir_txt):
    os.makedirs(dir_txt)

list_json = os.listdir(dir_json)
for cnt, json_name in enumerate(list_json):
    print('cnt=%d,name=%s' % (cnt, json_name))
    path_json = dir_json + json_name
    path_txt = dir_txt + json_name.replace('.json', '.txt')
    print(path_json, path_txt)
    json2txt(path_json, path_txt)

Effect

10. Iterate through multiple folders to find and print JSON file information

import json
import os


def walkrec(top):
    for root, dirs, files in os.walk(top):
        for file in files:
            path = os.path.join(root, file)
            if file.endswith(".json"):
                print(file, end=' ')
                with open(path) as f:
                    data = json.load(f)
                print(data)


if __name__ == '__main__':
    path = r'E:\datapro\jsonfileall'  # your own path
    walkrec(path)
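The scripts in this article open JSON files with different encodings (gb18030 in json2txt, utf-8 in others, the platform default here). As a minimal sketch, assuming your files may be in either UTF-8 or GB18030, the hypothetical helper `load_json_any` below tries UTF-8 first and falls back to GB18030. The demo file and its contents are made up for illustration.

```python
import json
import os
import tempfile


def load_json_any(path, encodings=("utf-8-sig", "gb18030")):
    """Try each encoding in order; return the parsed JSON from the first that decodes."""
    with open(path, "rb") as f:
        raw = f.read()
    for enc in encodings:
        try:
            return json.loads(raw.decode(enc))
        except UnicodeDecodeError:
            continue  # wrong encoding, try the next one
    raise ValueError(f"could not decode {path} with any of {encodings}")


# Demo: a GB18030-encoded file that is not valid UTF-8
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "demo.json")
    with open(demo_path, "w", encoding="gb18030") as f:
        # "\u6392\u9aa8" is a two-character Chinese dish name, used only as sample text
        json.dump([{"Name": "\u6392\u9aa8", "Label": "0"}], f, ensure_ascii=False)
    demo = load_json_any(demo_path)
print(demo[0]["Label"])  # 0
```

The order matters: UTF-8 is tried first because GB18030 can decode many byte sequences that are really UTF-8, producing wrong characters without raising an error.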

11. python traverses the json file in the directory, generating a list.txt file containing the path (optional)

# -*- coding: utf-8 -*-

import os

path1 = '/workspace/yolo/data/dataset/f001json'

def file_name(file_dir):

for root, dirs, files in os.walk(file_dir):

file = open('labellist.txt', 'w+')

for f in files:

# print(os.path.join(path1,f))

i = (os.path.join(path1, f))

file.write( i + '\n')

file.close()

if __name__ == '__main__':

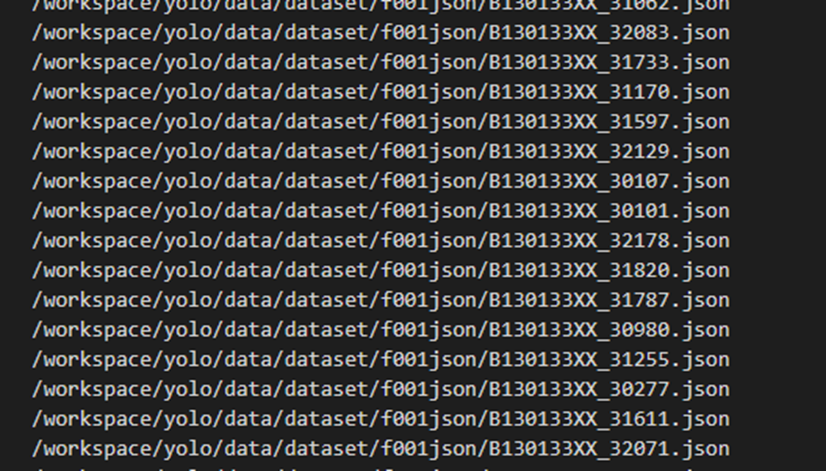

file_name('/workspace/yolo/data/dataset/f001json')effect

labellist.txt
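The script above lists every file it finds. As a sketch, assuming you only want the .json files in the list, the same result can be produced with pathlib's rglob; the function name `write_json_list` and the demo folder layout are hypothetical.

```python
import tempfile
from pathlib import Path


def write_json_list(root_dir, list_path):
    """Write the path of every .json file under root_dir to list_path, one per line."""
    paths = sorted(str(p) for p in Path(root_dir).rglob("*.json"))
    Path(list_path).write_text("".join(p + "\n" for p in paths), encoding="utf-8")
    return paths


# Demo on a temporary folder with two JSON files and one unrelated file
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp, "f001json")
    (root / "sub").mkdir(parents=True)
    (root / "a.json").write_text("[]", encoding="utf-8")
    (root / "sub" / "b.json").write_text("[]", encoding="utf-8")
    (root / "notes.txt").write_text("skip me", encoding="utf-8")
    list_file = Path(tmp, "labellist.txt")
    found = write_json_list(root, list_file)
    listed = list_file.read_text(encoding="utf-8").splitlines()
print(len(found))  # 2
```

Sorting the paths keeps the list stable between runs, which makes it easier to compare two versions of labellist.txt.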

12. Batch-modify the contents of txt files

In a YOLO project you sometimes need to replace part of the content of many label text files at once, so here is a Python program that batch-replaces a specific part of the content in all text files.
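One pitfall when replacing class ids in YOLO label files: a plain string replacement of "0" with "1" on a whole line also changes the zeros inside the coordinates (0.58 becomes 1.58). A minimal sketch that touches only the first column is shown here; the function name `remap_class_ids` and the sample lines are hypothetical, and it assumes space-separated label lines.

```python
def remap_class_ids(text, mapping):
    """Replace the class id (first column) of each label line according to mapping."""
    out_lines = []
    for line in text.splitlines():
        parts = line.split()
        # Only the first token is the class id; coordinates are left untouched.
        if parts and parts[0] in mapping:
            parts[0] = mapping[parts[0]]
        out_lines.append(" ".join(parts))
    return "\n".join(out_lines) + "\n"


labels = (
    "0 0.587963 0.522375 0.564815 0.587961\n"
    "12 0.100000 0.200000 0.300000 0.400000\n"
)
print(remap_class_ids(labels, {"0": "1"}), end="")
# 1 0.587963 0.522375 0.564815 0.587961
# 12 0.100000 0.200000 0.300000 0.400000
```

Because the whole first token is compared, id 0 is remapped while id 12 and every coordinate stay as they were.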

Method 1:

import os

# 38 labels


def alter(file, old_str, new_str):
    # Write the modified lines to a .bak file, then swap it in for the original
    with open(file, "r", encoding="utf-8") as f1, open("%s.bak" % file, "w", encoding="utf-8") as f2:
        for line in f1:
            if old_str in line:
                line = line.replace(old_str, new_str)
            f2.write(line)
    os.remove(file)
    os.rename("%s.bak" % file, file)


def reset():
    path = '/workspace/yolo/data/dataset/test/'
    # path = "/workspace/yolo/data/dataset/labels0208/"
    filelist = os.listdir(path)  # everything in the folder (including sub-folders)
    for files in filelist:  # go through all entries
        Olddir = os.path.join(path, files)  # original file path
        if os.path.isdir(Olddir):
            continue
        # Note: this replaces every "0" in the file with "1", including zeros inside the coordinates
        alter(Olddir, "0", "1")


reset()

Method 2:

"""

Created on 2023.02

@author: Elena

"""

# coding=utf-8
import os

path = '/workspace/yolo/data/dataset/test'


def listfiles(dirpath):
    filelist = []
    for root, dirs, files in os.walk(dirpath):
        for fileObj in files:
            filelist.append(os.path.join(root, fileObj))
    return filelist


def txt_modify(files):
    for file in files:
        label_path = os.path.join(path, file)
        with open(label_path, 'r+') as f:
            lines = f.readlines()
            # Clear the file once, then rewrite every line
            f.seek(0)
            f.truncate()
            for line in lines:
                # e.g. 'Bus' is the new one, 'Truck' is the old one
                f.write(line.replace('Truck', 'Bus'))
                # f.write(line.replace('dog', 'cat').replace('man', 'boy'))  # multiple replacements


def main():
    filelist = listfiles(path)
    for fileobj in filelist:
        with open(fileobj, 'r+') as f:
            lines = f.readlines()
            f.seek(0)
            f.truncate()
            for line in lines:
                # Note: this matches any "2 " in the line, so "12 " and values ending in 2 are changed too
                f.write(line.replace('2 ', '3 '))


if __name__ == '__main__':
    main()

Method 3: Modify the label numbers in files already converted to YOLO format

import os
import re

# path = '/workspace/yolo/data/dataset/testjson2txt/1/'
path = '/workspace/yolo/data/dataset/labels0208/'
files = []
for file in os.listdir(path):
    if file.endswith(".txt"):
        files.append(path + file)

for file in files:
    with open(file, 'r') as f:
        # Replace label 12 at the start of each line with 1;
        # the trailing space keeps longer numbers that begin with 12 untouched
        new_data = re.sub('^12 ', '1 ', f.read(), flags=re.MULTILINE)
    print("Done")
    with open(file, 'w') as f:
        f.write(new_data)

Other types

Article 4 – json Tags (labelme) to txt Tags (YOLOv5 feature) – Bilibili (bilibili.com)